The Service Mesh (ASM) traffic scheduling suite provides sophisticated throttling policies to implement advanced throttling features such as global throttling, user-specific throttling, setting burst traffic windows, and configuring a custom consumption rate of request tokens for the traffic destined to a specified service. This topic describes how to use RateLimitingPolicy provided by the ASM traffic scheduling suite to implement user-specific throttling.

Background information

The throttling policies of the ASM traffic scheduling suite use the token bucket algorithm. The system generates tokens at a fixed rate and adds them to a token bucket until the bucket reaches its capacity. Requests sent between services must consume tokens. If the bucket holds enough tokens, a request consumes them and is sent. If the bucket does not hold enough tokens, the request may be queued or discarded. The token bucket algorithm ensures that the average rate of data transmission does not exceed the rate of token generation, while still tolerating a certain degree of burst traffic.
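The algorithm described above can be sketched in a few lines of Python. This is a minimal illustration, not the ASM implementation: the class name TokenBucket, its parameter names, and the choice to refill in whole intervals and to discard (rather than queue) requests are assumptions made for this example. Time is passed in explicitly so that the behavior is deterministic.

```python
class TokenBucket:
    """Simplified token bucket (illustrative model, not the ASM code)."""

    def __init__(self, capacity, fill_amount, interval_seconds, start=0.0):
        self.capacity = capacity          # maximum number of tokens held
        self.fill_amount = fill_amount    # tokens added per interval
        self.interval = interval_seconds  # seconds between refills
        self.tokens = capacity            # assumption: the bucket starts full
        self.last_refill = start

    def _refill(self, now):
        # Add fill_amount tokens for every whole interval that has elapsed,
        # never exceeding the bucket capacity.
        elapsed = int((now - self.last_refill) // self.interval)
        if elapsed > 0:
            self.tokens = min(self.capacity,
                              self.tokens + elapsed * self.fill_amount)
            self.last_refill += elapsed * self.interval

    def allow(self, now, cost=1):
        """Return True and consume tokens if enough are available."""
        self._refill(now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # assumption: insufficient tokens means "discard"


bucket = TokenBucket(capacity=2, fill_amount=2, interval_seconds=30)
decisions = [bucket.allow(t) for t in (0, 1, 2, 30, 31, 32)]
print(decisions)  # [True, True, False, True, True, False]
```

In this model the third request is rejected because the bucket is empty, and requests are accepted again only after the next refill at the 30-second mark.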

Prerequisites

A Container Service for Kubernetes (ACK) managed cluster is added to your ASM instance whose version is 1.21.6.83 or later. For more information, see Add a cluster to an ASM instance.

You have connected to the ACK cluster by using kubectl. For more information, see Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

The ASM traffic scheduling suite is enabled. For more information, see Enable the ASM traffic scheduling suite.

Automatic sidecar proxy injection is enabled for the default namespace in the ACK cluster. For more information, see Manage global namespaces.

An ingress gateway named ingressgateway is created and port 80 is enabled. For more information, see Create an ingress gateway.

Preparations

Deploy the sample HTTPBin and sleep services and check whether the sleep service can access the HTTPBin service.

Create an httpbin.yaml file that contains the following content:

Run the following command to deploy the HTTPBin service:

kubectl apply -f httpbin.yaml -n default

Create a sleep.yaml file that contains the following content:

Run the following command to deploy the sleep service:

kubectl apply -f sleep.yaml -n default

Run the following command to access the sleep pod:

kubectl exec -it deploy/sleep -- sh

Run the following command to send a request to the HTTPBin service:

curl -I http://httpbin:8000/headers

Expected output:

HTTP/1.1 200 OK
server: envoy
date: Tue, 26 Dec 2023 07:23:49 GMT
content-type: application/json
content-length: 353
access-control-allow-origin: *
access-control-allow-credentials: true
x-envoy-upstream-service-time: 1

200 OK is returned, which indicates that the access is successful.

Step 1: Create a throttling policy by using RateLimitingPolicy

Use kubectl to connect to the ASM instance. For more information, see Use kubectl on the control plane to access Istio resources.

Create a ratelimitingpolicy.yaml file that contains the following content:

apiVersion: istio.alibabacloud.com/v1
kind: RateLimitingPolicy
metadata:
  name: ratelimit
  namespace: istio-system
spec:
  rate_limiter:
    bucket_capacity: 2
    fill_amount: 2
    parameters:
      interval: 30s
      limit_by_label_key: http.request.header.user_id
    selectors:
    - agent_group: default
      control_point: ingress
      service: httpbin.default.svc.cluster.local

The following table describes some of the fields. For more information, see Description of RateLimitingPolicy fields.

| Field | Description |
| --- | --- |
| fill_amount | The number of tokens to be added within the time interval specified by the interval field. In this example, the value is 2, which indicates that the token bucket is filled with two tokens after each interval specified by the interval field. |
| interval | The interval at which tokens are added to the token bucket. In this example, the value is 30s, which indicates that the token bucket is filled with two tokens every 30 seconds. |
| bucket_capacity | The maximum number of tokens in the token bucket. When the request rate is lower than the token bucket filling rate, the number of tokens in the token bucket continues to increase until it reaches bucket_capacity. bucket_capacity is used to allow a certain degree of burst traffic. In this example, the value is 2, which is the same as the value of the fill_amount field. In this case, no burst traffic is allowed. |
| limit_by_label_key | The labels that are used to group requests in the throttling policy. After you specify these labels, requests with different labels are throttled separately and separate token buckets are used for them. In this example, http.request.header.user_id is used, which indicates that requests are grouped by using the user_id request header. This simulates the scenario of user-specific throttling. This example assumes that requests initiated by different users have different user_id request headers. |
| selectors | The services to which the throttling policy is applied. In this example, service: httpbin.default.svc.cluster.local is used, which indicates that throttling is performed on the httpbin.default.svc.cluster.local service. |
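To make the effect of limit_by_label_key more concrete, the following Python sketch keeps one token bucket per label value, using the same numbers as the example policy (bucket_capacity 2, fill_amount 2, interval 30s). It is a simplified model under stated assumptions, not the ASM implementation: the class name KeyedRateLimiter, the whole-interval refill, and the full initial bucket are hypothetical choices, and the sketch does not try to reproduce the exact request at which ASM starts returning 429.

```python
class KeyedRateLimiter:
    """One token bucket per label value (illustrative model only)."""

    def __init__(self, bucket_capacity, fill_amount, interval_seconds):
        self.bucket_capacity = bucket_capacity
        self.fill_amount = fill_amount
        self.interval = interval_seconds
        # Maps a label value (for example, a user_id header value)
        # to a (tokens, last_refill_time) pair.
        self._buckets = {}

    def allow(self, label_value, now):
        # Assumption: a bucket is created full on first use of a label value.
        tokens, last = self._buckets.get(label_value,
                                         (self.bucket_capacity, now))
        # Refill in whole intervals, capped at bucket_capacity.
        intervals = int((now - last) // self.interval)
        if intervals > 0:
            tokens = min(self.bucket_capacity,
                         tokens + intervals * self.fill_amount)
            last += intervals * self.interval
        allowed = tokens >= 1
        if allowed:
            tokens -= 1
        self._buckets[label_value] = (tokens, last)
        return allowed


limiter = KeyedRateLimiter(bucket_capacity=2, fill_amount=2,
                           interval_seconds=30)
user1 = [limiter.allow("user1", t) for t in (0, 1, 2)]
user2 = limiter.allow("user2", 3)
print(user1, user2)  # [True, True, False] True
```

Because each label value has its own bucket, exhausting the tokens for user1 has no effect on user2. With these parameters, the sustained rate in the model is 2 requests per 30 seconds for each user.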

Run the following command to create a throttling policy by using RateLimitingPolicy:

kubectl apply -f ratelimitingpolicy.yaml

Step 2: Verify the result of user-specific throttling

Use kubectl to connect to the ACK cluster, and then run the following command to open a shell in the sleep pod:

kubectl exec -it deploy/sleep -- sh

Run the following commands to access the /headers path of the HTTPBin service twice in succession as user1:

curl -H "user_id: user1" httpbin:8000/headers -v
curl -H "user_id: user1" httpbin:8000/headers -v

Expected output:

< HTTP/1.1 429 Too Many Requests
< retry-after: 14
< date: Mon, 17 Jun 2024 11:48:53 GMT
< server: envoy
< content-length: 0
< x-envoy-upstream-service-time: 1
<
* Connection #0 to host httpbin left intact

Within 30 seconds after the previous step is executed, run the following command to access the /headers path of the HTTPBin service once as user2:

curl -H "user_id: user2" httpbin:8000/headers -v

Expected output:

< HTTP/1.1 200 OK
< server: envoy
< date: Mon, 17 Jun 2024 12:42:17 GMT
< content-type: application/json
< content-length: 378
< access-control-allow-origin: *
< access-control-allow-credentials: true
< x-envoy-upstream-service-time: 5
<
{
  "headers": {
    "Accept": "*/*",
    "Host": "httpbin:8000",
    "User-Agent": "curl/8.1.2",
    "User-Id": "user2",
    "X-Envoy-Attempt-Count": "1",
    "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/default/sa/httpbin;Hash=ddab183a1502e5ededa933f83e90d3d5266e2ddf87555fb3da1ad40dde3c722e;Subject=\"\";URI=spiffe://cluster.local/ns/default/sa/sleep"
  }
}
* Connection #0 to host httpbin left intact

The output shows that throttling is not triggered when user2 accesses the same path, even though user1 is being throttled. This indicates that user-specific throttling works as expected.
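The throttled response for user1 carries a retry-after header, which in HTTP gives the number of seconds a client should wait before retrying. The following Python sketch shows one way a client could honor it. The function send_with_retry and its parameters are hypothetical names for this example. The send argument stands in for whatever issues the request and is assumed to return a status code and a dictionary of lowercase header names.

```python
import time


def send_with_retry(send, max_attempts=3, sleep=time.sleep):
    """Call send() until it is not throttled or attempts run out.

    send() is assumed to return (status_code, headers_dict).
    Returns (final_status_code, attempts_used).
    """
    status = None
    for attempt in range(1, max_attempts + 1):
        status, headers = send()
        if status != 429:
            return status, attempt
        if attempt < max_attempts:
            # Wait for the number of seconds the server asked for;
            # fall back to 1 second if the header is missing.
            sleep(float(headers.get("retry-after", 1)))
    return status, max_attempts


# Usage with a fake transport: throttled once, then accepted.
responses = iter([(429, {"retry-after": "14"}), (200, {})])
waits = []
result = send_with_retry(lambda: next(responses), sleep=waits.append)
print(result, waits)  # (200, 2) [14.0]
```

Injecting sleep keeps the example testable without real delays. In a real client, the default time.sleep would be used.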

References

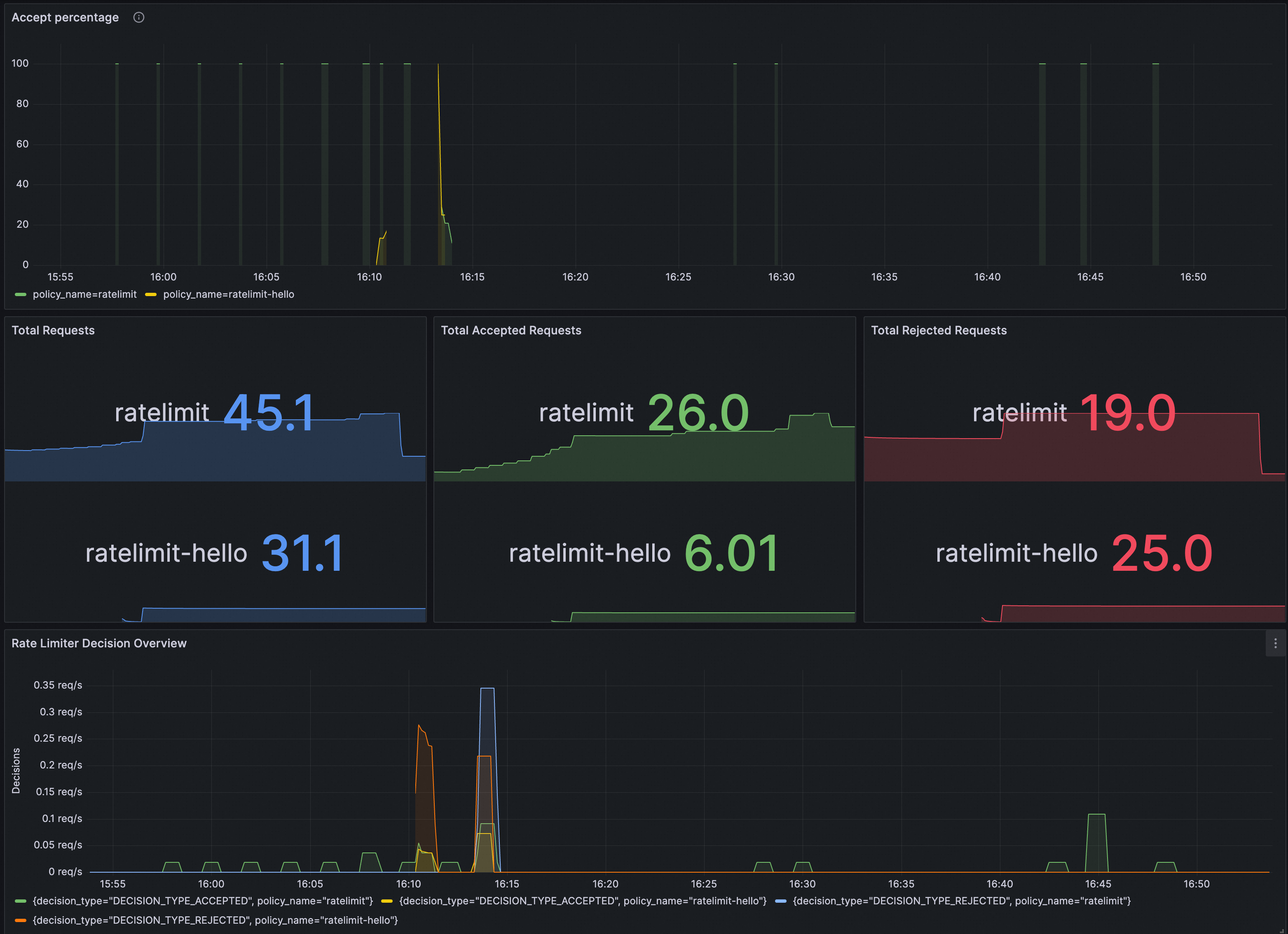

You can use Grafana to verify whether the RateLimitingPolicy takes effect. Make sure that the Prometheus instance used by Grafana is configured with the ASM traffic scheduling suite.

You can import the following content into Grafana to create a dashboard for RateLimitingPolicy.

The dashboard is as follows.