You can deploy the cloud-native AI suite in Container Service for Kubernetes (ACK) Pro clusters, ACK Serverless Pro clusters, and ACK Edge Pro clusters that run Kubernetes 1.18 or later. This topic describes how to deploy the cloud-native AI suite in a cluster and how to install and configure AI Dashboard and AI Developer Console.

Prerequisites

An ACK Pro cluster, ACK Serverless Pro cluster, or ACK Edge Pro cluster is created. The Kubernetes version of the cluster is 1.18 or later. For more information, see Create an ACK Pro cluster, Create an ACK Serverless Pro cluster, and Create an ACK Edge cluster.

Enable Managed Service for Prometheus and Enable Log Service are selected on the Component Configurations page when you create a cluster, or ack-arms-prometheus and logtail-ds are installed from the Operations page after you create the cluster. These services or components are required only if you install and configure AI Dashboard. For more information, see Managed Service for Prometheus and Collect text logs from Kubernetes containers in DaemonSet mode.

Deploy the cloud-native AI suite

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, go to the Cloud-native AI Suite page.

On the Cloud-native AI Suite page, click Deploy. On the page that appears, select the components that you want to install.

The following table describes how to configure the parameters. The following table also describes the components and the supported cluster types.

Configuration in the console

Component configuration

Supported cluster

Configuration item

Description

Component name and description

Namespace

ACK Pro cluster

ACK Serverless Pro cluster

ACK Edge Pro cluster

Elasticity

Specify whether to enable the elastic feature. For more information, see Kubernetes-based model training jobs and Containerized elastic inference services.

ack-alibaba-cloud-metrics-adapter, an auto scaling component.

kube-system

Acceleration

Specify whether to enable the Fluid Data Acceleration feature. For more information, see Overview of Fluid.

ack-fluid, a data caching and acceleration component.

fluid-system

Scheduling

Specify whether to enable the Scheduling Component (Batch Task Scheduling, GPU Sharing, Topology-aware GPU scheduling, and NPU scheduling) feature. You can click Advanced to configure custom parameters.

ack-ai-installer, a scheduling component.

kube-system

Specify whether to enable the Kube Queue feature. For more information, see Use ack-kube-queue to manage job queues.

ack-kube-queue, a scheduling component that manages workloads in Kubernetes.

kube-queue

Interaction Mode

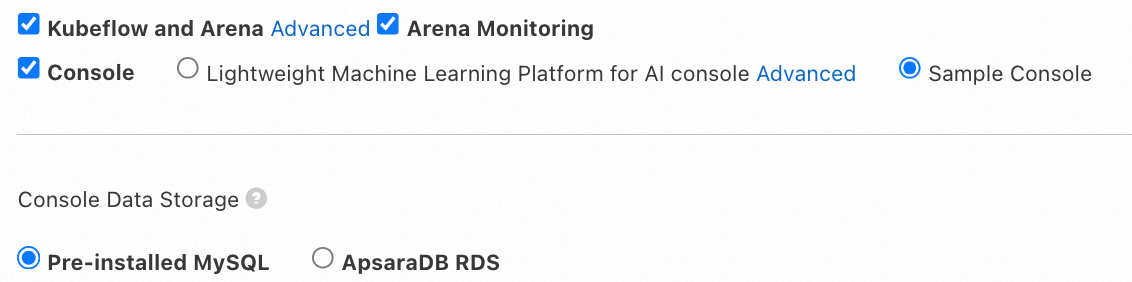

Arena: Select this option if you want to use the Arena CLI. To use the Arena CLI, you also need to install and configure the Arena client. After you install the Arena client, you can use the Arena CLI to integrate Kubeflow training operators. You can click Advanced to configure custom parameters.

If you select Kube Queue, Console, and Kubeflow Pipelines at the same time, the Arena option is required. For more information, see Configure the Arena client.

ack-arena (ecosystem tool), a machine learning CLI.

kube-system

Console: Deploy a lightweight Platform for AI. You can click Advanced to configure custom parameters.

ack-pai, a lightweight Platform for AI. Recommended.

You can integrate algorithms and engines that are deeply optimized by PAI based on years of experience into containerized applications. Services such as Data Science Workshop (DSW), Deep Learning Containers (DLC), and Elastic Algorithm Service (EAS) improve the elasticity and efficiency of AI model development, training, and inference, enhancing effectiveness of training and inference while reducing the barriers to AI development.

pai-system

Console: After you select this option to deploy AI Suite Console, configure parameters in the Note dialog box. For more information, see Install and configure AI Dashboard and AI Developer Console.

ack-ai-dashboard (ecosystem tool), a visualized O&M console.

kube-ai

ack-ai-dev-console (ecosystem tool), a deep learning development console.

kube-ai

Console Data Storage

After you set Interaction Mode to Console, set Console Data Storage to Pre-installed MySQL or ApsaraDB RDS. For more information, see Install and configure AI Dashboard and AI Developer Console.

ack-mysql, a MySQL database component.

kube-ai

Workflow

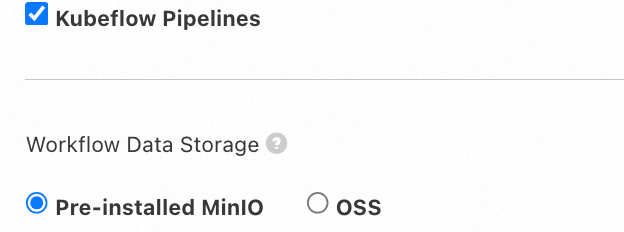

After you select Kubeflow Pipelines, you can set Workflow Data Storage to Pre-installed MinIO or OSS. For more information, see Install and configure Kubeflow Pipelines.

ack-ai-pipeline (ecosystem tool), a platform for building end-to-end machine learning workflows.

kube-ai

Monitoring

Specify whether to install the Monitoring Component. For more information, see Work with cloud-native AI dashboards.

ack-arena-exporter, a cluster monitoring component.

kube-ai

Click Deploy Cloud-native AI Suite in the lower part of the page. The system checks the environment and the dependencies of the selected components. After the environment and the dependencies pass the check, the system deploys the selected components.

After the components are installed, you can view the following information in the Components list:

You can view the names and versions of the components that are installed in the cluster. You can deploy or uninstall components.

If a component is updatable, you can update the component.
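As a complement to the Components list in the console, the component and namespace pairs from the table above can be turned into a quick command-line check. The following Python sketch is illustrative only: the mapping is copied from the table, while the helper names `namespaces_for` and `pod_check_commands` are hypothetical and not part of any ACK tool. It only prints `kubectl get pods` commands; it does not contact the cluster.

```python
# Component-to-namespace mapping, copied from the table in this topic.
COMPONENT_NAMESPACES = {
    "ack-alibaba-cloud-metrics-adapter": "kube-system",
    "ack-fluid": "fluid-system",
    "ack-ai-installer": "kube-system",
    "ack-kube-queue": "kube-queue",
    "ack-arena": "kube-system",
    "ack-pai": "pai-system",
    "ack-ai-dashboard": "kube-ai",
    "ack-ai-dev-console": "kube-ai",
    "ack-mysql": "kube-ai",
    "ack-ai-pipeline": "kube-ai",
    "ack-arena-exporter": "kube-ai",
}


def namespaces_for(selected):
    """Return the sorted, de-duplicated namespaces of the selected components."""
    return sorted({COMPONENT_NAMESPACES[name] for name in selected})


def pod_check_commands(selected):
    """Build one 'kubectl get pods' command per namespace to inspect."""
    return [f"kubectl get pods -n {ns}" for ns in namespaces_for(selected)]


if __name__ == "__main__":
    # Hypothetical selection: Arena plus the two consoles.
    for command in pod_check_commands(
        ["ack-arena", "ack-ai-dashboard", "ack-ai-dev-console"]
    ):
        print(command)
```

Run the printed commands against the cluster to see whether the pods in each namespace are running.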

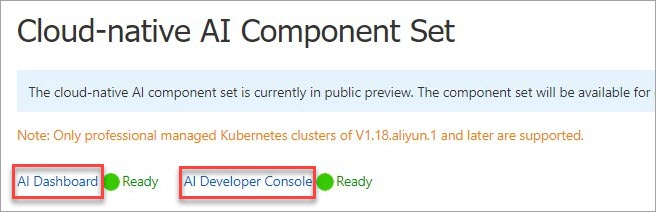

After you install ack-ai-dashboard and ack-ai-dev-console, you can find the hyperlinks to AI Dashboard and AI Developer Console in the upper-left corner of the Cloud-native AI Suite page. You can click a hyperlink to access the corresponding component.


Install and configure AI Dashboard and AI Developer Console

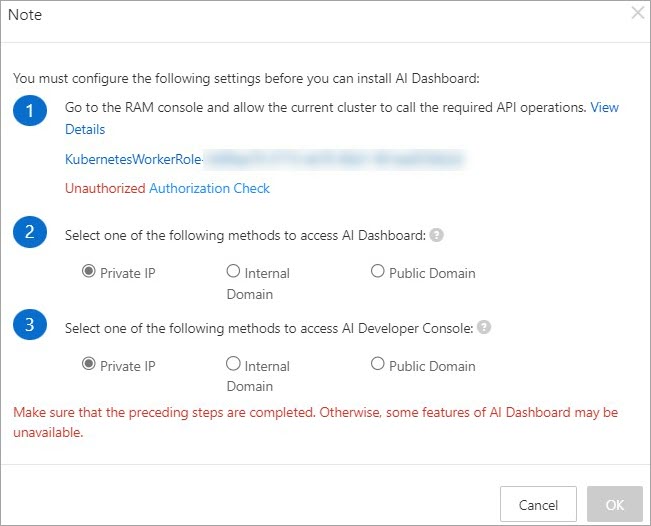

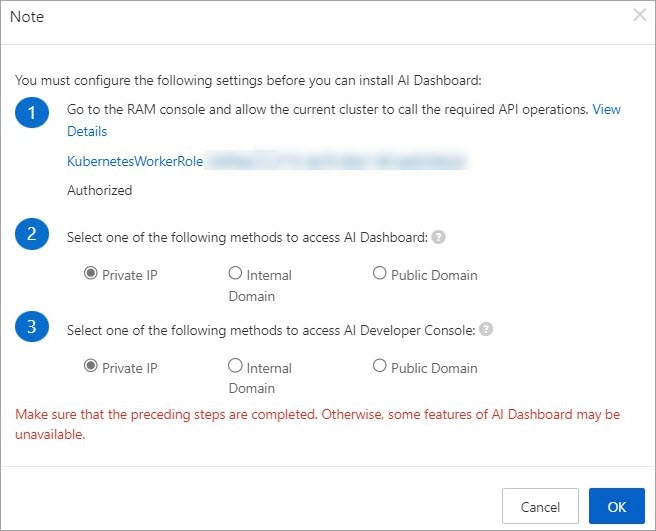

In the Interaction Mode section of the Cloud-native AI Suite page, select Console. The Note dialog box appears, as shown in the following figure.

Create a custom policy to grant permissions to the RAM worker role.

Create a custom policy.

Log on to the RAM console. In the left-side navigation pane, choose Permissions > Policies.

On the Policies page, click Create Policy.

Click the JSON tab. Add the following content to the `Action` field and click Next to edit policy information.

```json
"log:GetProject",
"log:GetLogStore",
"log:GetConfig",
"log:GetMachineGroup",
"log:GetAppliedMachineGroups",
"log:GetAppliedConfigs",
"log:GetIndex",
"log:GetSavedSearch",
"log:GetDashboard",
"log:GetJob",
"ecs:DescribeInstances",
"ecs:DescribeSpotPriceHistory",
"ecs:DescribePrice",
"eci:DescribeContainerGroups",
"eci:DescribeContainerGroupPrice",
"log:GetLogStoreLogs",
"ims:CreateApplication",
"ims:UpdateApplication",
"ims:GetApplication",
"ims:ListApplications",
"ims:DeleteApplication",
"ims:CreateAppSecret",
"ims:GetAppSecret",
"ims:ListAppSecretIds",
"ims:ListUsers"
```

Specify the Name parameter in the `k8sWorkerRolePolicy-{ClusterID}` format and click OK.
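To show where the action list sits in a complete policy document, the following Python sketch assembles one and prints it as JSON. The source only specifies the contents of the `Action` field, so the surrounding statement (a single `Allow` statement with `Resource` set to `*`) is an assumption; adjust it to your own security requirements. The function names and the sample cluster ID are hypothetical.

```python
import json

# Actions from the step above, in the same order.
ACTIONS = [
    "log:GetProject", "log:GetLogStore", "log:GetConfig",
    "log:GetMachineGroup", "log:GetAppliedMachineGroups",
    "log:GetAppliedConfigs", "log:GetIndex", "log:GetSavedSearch",
    "log:GetDashboard", "log:GetJob",
    "ecs:DescribeInstances", "ecs:DescribeSpotPriceHistory",
    "ecs:DescribePrice",
    "eci:DescribeContainerGroups", "eci:DescribeContainerGroupPrice",
    "log:GetLogStoreLogs",
    "ims:CreateApplication", "ims:UpdateApplication", "ims:GetApplication",
    "ims:ListApplications", "ims:DeleteApplication", "ims:CreateAppSecret",
    "ims:GetAppSecret", "ims:ListAppSecretIds", "ims:ListUsers",
]


def build_worker_role_policy(actions):
    """Wrap the actions in a RAM policy document.

    Assumption (not stated in this topic): one Allow statement that
    applies to all resources ("*").
    """
    return {
        "Version": "1",
        "Statement": [
            {"Effect": "Allow", "Action": list(actions), "Resource": "*"}
        ],
    }


def policy_name(cluster_id):
    """Return the policy name in the k8sWorkerRolePolicy-{ClusterID} format."""
    return f"k8sWorkerRolePolicy-{cluster_id}"


if __name__ == "__main__":
    print(policy_name("c0123456789abcdef"))  # hypothetical cluster ID
    print(json.dumps(build_worker_role_policy(ACTIONS), indent=2))
```

You can compare the printed JSON with the content on the JSON tab before you click Next.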

Grant permissions to the RAM worker role of the cluster.

Log on to the RAM console. In the left-side navigation pane, choose Identities > Roles.

Enter the name of the RAM worker role in the `KubernetesWorkerRole-{ClusterID}` format into the search box. Find the role that you want to manage and click Grant Permission in the Actions column.

In the Select Policy section, click Custom Policy.

Enter the name of the custom policy that you created in the `k8sWorkerRolePolicy-{ClusterID}` format into the search box and select the policy.

Click OK.

Return to the Note dialog box and click Authorization Check. If the authorization is successful, Authorized is displayed and the OK button becomes available. Then, perform Step 3.

Select a method to access AI Dashboard and a method to access AI Developer Console. Then, click OK.

You can use a private IP address, internal domain name, or public domain name to access AI Dashboard or AI Developer Console.

In the production environment, use private IP addresses or internal domain names to access the services.

The public domain name method is recommended only for testing. If you need to use a public domain name, map it to the public IP address of the SLB instance used by the cluster's NGINX Ingress in your local hosts file.

Note: If you want to use a private IP address to access AI Dashboard or AI Developer Console, select Private IP in the Note dialog box.

For more information about how to use a private IP address or internal domain name to access AI Dashboard or AI Developer Console, see Access AI Dashboard.

Set the Console Data Storage parameter.

After you select Console, the Console Data Storage parameter appears below the Interaction Mode parameter. You can select Pre-installed MySQL or ApsaraDB RDS.

Pre-installed MySQL

If you do not select ApsaraDB RDS, Pre-installed MySQL is used by default. Due to stability and SLA issues, we recommend that you use this method only for testing. If you select this method, a new disk is created and billed each time you deploy the cloud-native AI suite. You need to manually release the disks.

Important: If the cluster or storage fails, data may be lost.

When you deploy the cloud-native AI suite, ACK creates a persistent volume claim (PVC) based on the specified StorageClass, and creates and mounts a disk whose size is 120 GB to persist MySQL data. You are charged for the disk and must manage the disk. If you no longer use the disk, release the disk at the earliest opportunity. For more information about how to release a disk, see Release a disk.

ApsaraDB RDS

Note: If connection issues occur when you use ApsaraDB RDS, refer to Troubleshoot failures in connecting to an ApsaraDB RDS for MySQL instance.

If you want to change the method to store data, you must uninstall and redeploy the cloud-native AI suite. If a Secret named `kubeai-rds` already exists, delete the Secret by using kubectl.

Purchase an ApsaraDB RDS instance and create a database and account. For more information, see Getting Started. For more information about the billing of ApsaraDB RDS, see Billing overview.

Click Deploy Cloud-native AI Suite in the lower part of the page to install the cloud-native AI suite.

Click the name of the cluster and choose Configurations > Secrets in the left-side navigation pane.

Select `kube-ai` from the Namespace drop-down list on the top of the page.

Click Create from YAML in the upper-right corner of the page.

Copy the following YAML content to create a Secret named `kubeai-rds`:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: kubeai-rds
  namespace: kube-ai
type: Opaque
stringData:
  MYSQL_HOST: "Your RDS URL"
  MYSQL_DB_NAME: "Database name"
  MYSQL_USER: "Database username"
  MYSQL_PASSWORD: "Database password"
```

| Parameter | Description |
| --- | --- |
| name | The name of the Secret. |
| namespace | The name of the namespace. |
| MYSQL_HOST, MYSQL_DB_NAME, MYSQL_USER, MYSQL_PASSWORD | The parameters related to the ApsaraDB RDS for MySQL instance. For more information, see Create an ApsaraDB RDS for MySQL instance and Create accounts and databases. |
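If you prefer to generate the `kubeai-rds` manifest instead of editing it by hand, the following Python sketch builds the same Secret and prints it as JSON, which kubectl accepts as well as YAML. It is a minimal sketch, not an official tool: the function name `build_rds_secret` and the environment variable names (`RDS_HOST`, `RDS_DB_NAME`, `RDS_USER`, `RDS_PASSWORD`) are hypothetical choices for this example.

```python
import json
import os


def build_rds_secret(host, db_name, user, password):
    """Build the kubeai-rds Secret manifest described in this topic."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "kubeai-rds", "namespace": "kube-ai"},
        "type": "Opaque",
        # stringData takes plain-text values; Kubernetes encodes them on write.
        "stringData": {
            "MYSQL_HOST": host,
            "MYSQL_DB_NAME": db_name,
            "MYSQL_USER": user,
            "MYSQL_PASSWORD": password,
        },
    }


if __name__ == "__main__":
    # Hypothetical environment variable names; the fallbacks are the
    # placeholders from the YAML example and must be replaced.
    secret = build_rds_secret(
        host=os.environ.get("RDS_HOST", "Your RDS URL"),
        db_name=os.environ.get("RDS_DB_NAME", "Database name"),
        user=os.environ.get("RDS_USER", "Database username"),
        password=os.environ.get("RDS_PASSWORD", "Database password"),
    )
    print(json.dumps(secret, indent=2))
```

Assuming the script is saved as `make_rds_secret.py` (a hypothetical file name), you can pipe its output to `kubectl apply -f -` instead of pasting the YAML into the console.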

Install and configure Kubeflow Pipelines

After you select Kubeflow Pipelines, you must set the Workflow Data Storage parameter.

Pre-installed MinIO

If you do not select OSS, Pre-installed MinIO is used by default. Due to stability and SLA issues, we recommend that you use this method only for testing. If you select this method, a new disk is created and billed each time you deploy the cloud-native AI suite. You need to manually release the disks.

If the cluster or storage fails, data may be lost.

When you deploy the cloud-native AI suite, ACK creates a PVC based on the specified StorageClass, and creates and mounts a disk whose size is 20 GB to persist MinIO data. You are charged for the disk and must manage the disk. If you no longer use the disk, release the disk at the earliest opportunity. For more information about how to release a disk, see Release a disk.

OSS

Add a namespace named kube-ai if it does not exist in the cluster.

```shell
kubectl create ns kube-ai
```

Before you install the Kubeflow Pipelines provided by the cloud-native AI suite, click the name of the cluster on the Clusters page of the ACK console. Then, choose Configurations > Secrets in the left-side navigation pane.

Select the namespace kube-ai from the Namespace drop-down list on the top of the page.

Click Create from YAML in the upper-right corner of the page.

Copy the following YAML content and click Create. The system creates a Secret named `kubeai-oss` from the YAML content.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: kubeai-oss
  namespace: kube-ai
type: Opaque
stringData:
  ENDPOINT: "https://oss-cn-beijing.aliyuncs.com"
  ACCESS_KEY_ID: "****"
  ACCESS_KEY_SECRET: "****"
```

| Parameter | Description |
| --- | --- |
| name | The name of the Secret. |
| namespace | The name of the namespace. Note: The kube-ai namespace is automatically created when you deploy the cloud-native AI suite. |
| ENDPOINT | The endpoint of Object Storage Service (OSS). In this example, the endpoint of OSS in the China (Beijing) region is used. For more information, see Regions and endpoints. |
| ACCESS_KEY_ID, ACCESS_KEY_SECRET | Enter the AccessKey pair of your account. For information about how to obtain an AccessKey pair, see Obtain an AccessKey pair. Important: To ensure data security, we recommend that you enter the AccessKey pair of a RAM user. The `AliyunOSSFullAccess` policy must be attached to the RAM user. |

After the Secret is created, wait for a while. If a bucket named `mlpipeline-<clusterid>` appears in the OSS console, OSS is configured as the storage. For more information about the billing of OSS, see Billing.

Deploy the Kubeflow Pipelines under Cloud-native AI Suite.
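The OSS Secret described above can also be generated with a short script. The following Python sketch builds the `kubeai-oss` manifest and the bucket name to look for afterward. Several parts are assumptions: the endpoint helper generalizes the `https://oss-cn-beijing.aliyuncs.com` example to other regions, so confirm the endpoint of your region in Regions and endpoints. The function names, the environment variable names, and the sample cluster ID are hypothetical.

```python
import json
import os


def oss_public_endpoint(region_id):
    """Derive an endpoint that follows the pattern of the cn-beijing example.

    Assumption: other regions use the same URL pattern.
    """
    return f"https://oss-{region_id}.aliyuncs.com"


def build_oss_secret(endpoint, access_key_id, access_key_secret):
    """Build the kubeai-oss Secret manifest described in this topic."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "kubeai-oss", "namespace": "kube-ai"},
        "type": "Opaque",
        "stringData": {
            "ENDPOINT": endpoint,
            "ACCESS_KEY_ID": access_key_id,
            "ACCESS_KEY_SECRET": access_key_secret,
        },
    }


def expected_bucket_name(cluster_id):
    """Return the bucket name in the mlpipeline-<clusterid> format."""
    return f"mlpipeline-{cluster_id}"


if __name__ == "__main__":
    # Hypothetical variable names; prefer the AccessKey pair of a RAM user.
    secret = build_oss_secret(
        endpoint=oss_public_endpoint("cn-beijing"),
        access_key_id=os.environ.get("OSS_ACCESS_KEY_ID", "****"),
        access_key_secret=os.environ.get("OSS_ACCESS_KEY_SECRET", "****"),
    )
    print(json.dumps(secret, indent=2))
```

Reading the AccessKey pair from environment variables keeps it out of files that might be committed to version control. After you apply the manifest, `expected_bucket_name` gives the name to search for in the OSS console.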