ACK provides two Container Network Interface (CNI) plug-ins: Terway and Flannel. This topic compares the features and applicable scenarios of the two plug-ins.

Terway is a network plug-in developed by Alibaba Cloud. It builds the container network on elastic network interfaces (ENIs) and supports extended Berkeley Packet Filter (eBPF) to accelerate network traffic. Terway also supports network policies and pod-level vSwitches and security groups. Terway is suitable for scenarios such as high-performance computing, gaming, and microservices that require a large number of nodes, high network performance, and high security.

Flannel is an open source network plug-in provided by the community. In ACK, Flannel uses Alibaba Cloud Virtual Private Cloud (VPC), and packets are forwarded based on the VPC route table. Flannel is suitable for scenarios that involve a small number of nodes, require simple network configuration, and do not require custom control over the container network.

The container network plug-in must be installed when you create a cluster and cannot be changed after the cluster is created.

When you use Flannel, ALB Ingresses can forward requests only to NodePort and LoadBalancer Services. ClusterIP Services are not supported.

Item | Terway | Flannel |

Network performance |

| |

Node quota | When you use Terway, the maximum number of nodes depends on the capacity of a single cluster.

| When Flannel is used, the maximum number of nodes depends on the number of VPC route table entries and the capacity of the cluster. VPC route table entries: By default, 200 entries are supported. After you apply to increase the quota, up to 1000 entries are supported. One node corresponds to one entry in a cluster. Up to 1000 nodes are supported.

|

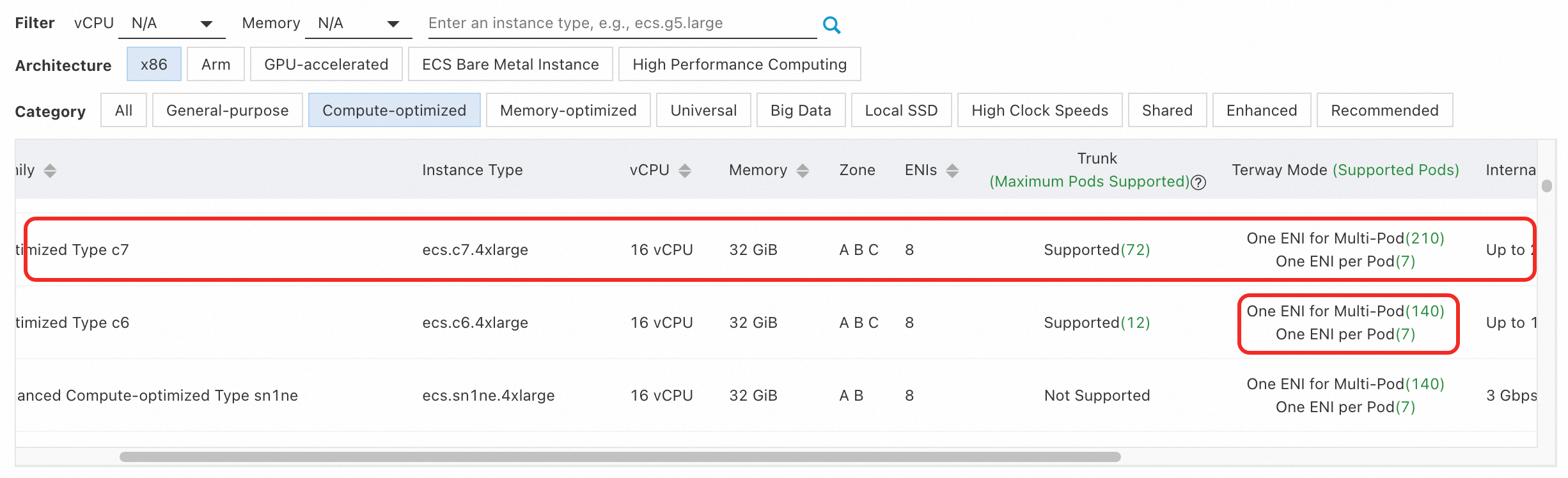

Number of pods on a node | Pods use the ENI of the node. The number of pods on a node depends on the specifications and metrics of the node, such as the number of ENIs and the number of private IPv4 addresses per ENI.

The preceding figure shows an ECS instance of the Compute-intensive Type c7, ecs.c7.4xlarge, and 16 vCPU 32 GiB specifications.

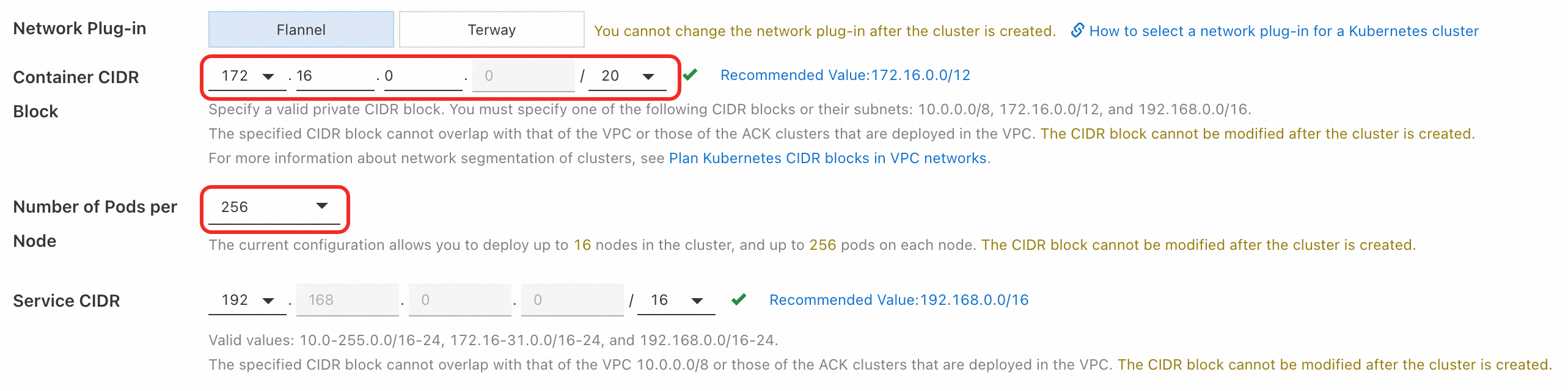

Compute-intensive Type c6 and Compute-intensive Type c7 instances have the same number of ENIs. However, the difference in the numbers of private IPv4 addresses per ENI allows Compute-intensive Type c6 to create up to 140 pods in inclusive ENI mode. For more information, see Method for calculating the maximum number of pods on a node. | The number of pods on a node depends on the number of pods and subnet mask that you set when you specify the Pod CIDR Block parameter.

The preceding figure shows that the CIDR block of pods in the ACK Pro cluster is 172.16.0.0/20. Each node can run up to 256 pods. Up to 16 nodes can be deployed in the ACK Pro cluster. Important After you configure Flannel, you cannot change the maximum number of nodes. |

Pod CIDR block |

|

|

Network security |

|

|

IPv4/IPv6 dual stack | IPv4/IPv6 dual stack is supported. | IPv4/IPv6 dual stack is not supported. Note The Flannel plug-in used in ACK is modified to adapt to the Alibaba Cloud environment and is not synchronized with the open source community. For more information about the release notes of Flannel, see Flannel. |

Fixed IP addresses of pods | You can specify a static IP address for each pod. | You cannot specify a static IP address for each pod. |

Session persistence | The backend server of the SLB instance directly connects to pods and relies on the session persistence feature to ensure uninterrupted service when the backend pods change. | The backend server of the SLB instance uses NodePort to connect to a pod. The traffic is interrupted when the pod changes, which may lead to service retries. |

Accesses between multiple clusters | Pod in different clusters can communicate when the relevant ports are opened in security group rules. | Not supported. |

Retention of pod source IP addresses | The source IP addresses used by pods to access other endpoints in the VPC are all pod IP addresses. This facilitates auditing. | If a pod accesses another endpoint in a VPC, the source IP address is the node IP address. The pod IP address cannot be retained. |
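For Terway, the per-node pod limit is driven by the ENI specifications of the instance type. The following sketch estimates that limit in inclusive ENI mode. It assumes the formula (number of ENIs - 1) x (private IPv4 addresses per ENI), where the primary ENI of the node is not used for pods. Treat this formula as an assumption and confirm it against Method for calculating the maximum number of pods on a node. The function name and the input values are hypothetical.

```python
def terway_shared_eni_max_pods(eni_quantity: int, ips_per_eni: int) -> int:
    """Estimate the maximum number of pods on a node in inclusive ENI mode.

    Assumption: the primary ENI of the node is reserved for the node itself,
    so only (eni_quantity - 1) ENIs provide private IPv4 addresses to pods.
    """
    if eni_quantity < 2:
        # Only the primary ENI exists, so no ENI is left for pods.
        return 0
    return (eni_quantity - 1) * ips_per_eni


# Hypothetical instance type: 8 ENIs, 20 private IPv4 addresses per ENI.
print(terway_shared_eni_max_pods(8, 20))  # 140
```

Because the result scales with the number of private IPv4 addresses per ENI, two instance types with the same number of ENIs can still support different numbers of pods.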
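For Flannel, the node limit follows from the pod CIDR block, the number of pods per node, and the VPC route table quota. The following sketch reproduces the 172.16.0.0/20 example by using the Python standard library `ipaddress` module. It assumes that each node receives an equally sized, power-of-two slice of the pod CIDR block and that each node consumes one route table entry. It ignores cluster-level capacity limits. The function name `flannel_capacity` is hypothetical.

```python
import ipaddress


def flannel_capacity(pod_cidr: str, pods_per_node: int, route_entries: int = 200) -> dict:
    """Estimate how many nodes a Flannel cluster can hold.

    Assumptions: every node gets a pod subnet with exactly pods_per_node
    addresses, and every node uses one VPC route table entry
    (200 by default, up to 1,000 after a quota increase).
    """
    network = ipaddress.ip_network(pod_cidr)
    host_bits = pods_per_node.bit_length() - 1
    if host_bits < 0 or 2 ** host_bits != pods_per_node:
        raise ValueError("pods_per_node must be a power of two")
    # Prefix length of the per-node pod subnet, for example /24 for 256 pods.
    node_prefix = network.max_prefixlen - host_bits
    if node_prefix < network.prefixlen:
        raise ValueError("pods_per_node does not fit in the pod CIDR block")
    nodes_by_cidr = 2 ** (node_prefix - network.prefixlen)
    first_subnet = next(network.subnets(new_prefix=node_prefix))
    return {
        "nodes_by_cidr": nodes_by_cidr,
        # The route table quota caps the node count even if the CIDR block allows more.
        "max_nodes": min(nodes_by_cidr, route_entries),
        "first_node_subnet": str(first_subnet),
    }


print(flannel_capacity("172.16.0.0/20", 256))
# {'nodes_by_cidr': 16, 'max_nodes': 16, 'first_node_subnet': '172.16.0.0/24'}
```

With a larger pod CIDR block such as 172.16.0.0/12, the CIDR block would allow 4,096 nodes, so the route table quota (200 or 1,000 entries) becomes the binding limit instead.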

What to do next

After you create a cluster, you cannot modify the pod CIDR block, the Service CIDR block, or the node CIDR block. These CIDR blocks determine the maximum number of pods, Services, and nodes that can be deployed in the cluster. If you want to logically isolate the networks of different resources, make sure that the CIDR blocks of the resources do not overlap with each other. Non-overlapping CIDR blocks also allow you to configure access control and custom routes. We recommend that you plan the CIDR blocks before you create a Kubernetes cluster. For more information, see Network planning of an ACK managed cluster.
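Because these CIDR blocks cannot be changed later, it can help to check a plan for overlaps before you create the cluster. The following is a minimal sketch that uses the Python standard library `ipaddress` module to report every pair of overlapping CIDR blocks. The function name `find_overlaps` and the CIDR values in the plan are hypothetical examples, not recommended values.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDR blocks that overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


# Hypothetical network plan for a cluster.
plan = {
    "node": "192.168.0.0/16",
    "pod": "172.16.0.0/20",
    "service": "172.21.0.0/20",
}
print(find_overlaps(plan))  # []
```

An empty list means that no two blocks overlap. For example, a plan with a pod CIDR block of 172.16.0.0/16 and a Service CIDR block of 172.16.8.0/24 would be reported as the pair `('pod', 'service')`.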

After you plan the CIDR blocks:

If you want to use Terway, install Terway when you create a cluster. For more information, see Work with Terway.

If you want to use Flannel, install Flannel when you create a cluster. For more information, see Work with Flannel.

References

For more information about the limits on the number of nodes in a cluster and how to increase the related quotas, see Quotas and limits.

For answers to frequently asked questions about container networks, see FAQs about container networks.