Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统, all rights reserved to the original author.

When learning something new, beyond understanding what it is and how to use it, I pay special attention to why it exists and why I should know about it. Only when necessary do I go on to learn how it is implemented and what is worth borrowing from it.

This habit of mine has greatly improved my work efficiency and also helped me to think more diligently.

So, as this is the first article in this series, I want to focus on why distributed systems exist and when a distributed system is needed.

Those who want to get the good stuff right away can skip to the TL;DR part at the end. Of course, I would not recommend it.

If you are taking Coding 101 at school, or have just started working on real-world problems in your first job, the programs you write are fairly simple, because the problems are simple.

Take data processing as an example: you may just be parsing a file of a little over 10 KB and generating a word frequency report. It's not complicated; a dozen lines of code will do the trick.
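As a rough illustration of how small such a program is, here is a minimal sketch of a word frequency report. The function names `word_frequencies` and `report` are made up for this example, and the text is passed in as a string so the snippet runs without any file on disk.

```python
import re
from collections import Counter


def word_frequencies(text: str) -> Counter:
    """Count how often each word occurs, ignoring case."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words)


def report(counts: Counter, top_n: int = 3) -> str:
    """Format the most common words, one "word count" pair per line."""
    return "\n".join(f"{word} {count}" for word, count in counts.most_common(top_n))


sample = "the cat and the hat and the bat"
counts = word_frequencies(sample)
print(report(counts))
```

For a real file, the string could come from something like `Path(name).read_text()`; everything else stays the same.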

Then one day you have to deal with 1000 files, some of them several hundred MB in size. You apply the usual procedure and find that the running time is a bit long, so you want to optimize it.

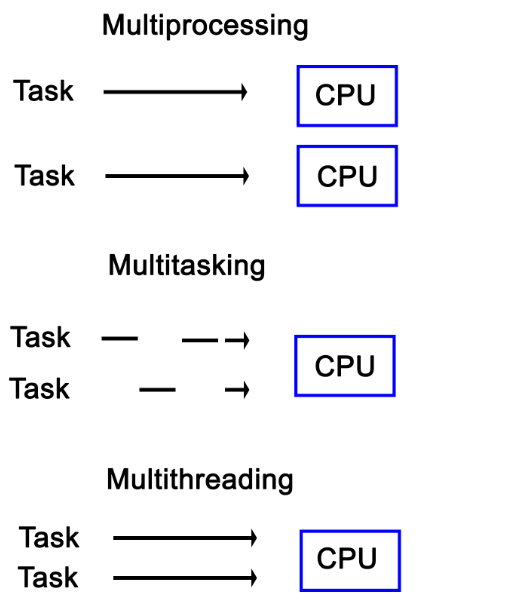

You think: 1000 unrelated files... ah, multithreading! Process each file in its own thread, and then all you need to do is add up the results. You put to use the operating system knowledge you learned at school and the multithreading library of your programming language. You're happy, and you think your coding teacher would be very pleased with such good thinking.

And if multithreading is not fast enough, for example because threads in Python cannot make full use of multiple cores, you can always switch to multiprocessing.

If thread and process switching overhead is large enough to affect overall performance, which usually happens in so-called I/O-bound scenarios, techniques such as coroutines can also be used.

Whether it is a thread, process, or coroutine, the purpose is to parallelize the calculation to speed up the running process.
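The "one task per file, then add up the results" idea might look like the sketch below. It uses a thread pool from Python's standard `concurrent.futures` module; the helper names and the three tiny temporary files (standing in for the 1000 real ones) are assumptions made for the example.

```python
import tempfile
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def count_words_in_file(path: Path) -> Counter:
    """Per-file task: count the words in one file."""
    return Counter(path.read_text(encoding="utf-8").lower().split())


def count_words_in_files(paths: list[Path], max_workers: int = 4) -> Counter:
    """Run one task per file in a thread pool, then add up the partial counts."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for partial in pool.map(count_words_in_file, paths):
            total += partial
    return total


# Three small temporary files stand in for the 1000 real files.
with tempfile.TemporaryDirectory() as tmp:
    paths = []
    for i, text in enumerate(["a b a", "b c", "a c c"]):
        path = Path(tmp) / f"part-{i}.txt"
        path.write_text(text, encoding="utf-8")
        paths.append(path)
    totals = count_words_in_files(paths)

print(totals)
```

If the per-file work is CPU-heavy, swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` from the same module is the usual way to move from threads to processes without changing the structure of the code.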

But what if the amount of computation is so large that it exhausts the computing power of the machine, and a single machine can no longer handle that much data?

Well, if one machine is not enough, we add a few more. So from the "parallel computing" of multithreading, multiprocessing, and coroutines, we evolve to "distributed computing" (of course, distributed computing is also parallel computing to a certain extent).

This isn't the end of the story. What if the data to be processed is 10 TB, and the machine you have only has a 500 GB hard disk?

One way is to scale vertically, which means acquiring a machine with tens of TB of disk space; the other is to scale horizontally, which means buying a few more machines. The former will soon hit a bottleneck: the data can grow without limit, but the capacity of a single machine cannot. So for large amounts of data, the second option is the only choice.
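To make the horizontal option concrete, here is a back-of-the-envelope sketch of how many 500 GB machines the 10 TB would need. The function `machines_needed` and the `usable_percent` parameter are assumptions for illustration; the sketch ignores replication and uses decimal units.

```python
TB = 10**12  # decimal terabyte, in bytes
GB = 10**9   # decimal gigabyte, in bytes


def machines_needed(data_bytes: int, disk_bytes: int, usable_percent: int = 100) -> int:
    """Smallest number of machines whose usable disk space covers the data.

    `usable_percent` is a hypothetical knob for the share of each disk that is
    actually available for data (the rest goes to the OS, logs, and so on).
    """
    usable_total = disk_bytes * usable_percent  # usable bytes per machine, times 100
    # Integer ceiling division, scaled by 100 on both sides to avoid floats.
    return -(-(data_bytes * 100) // usable_total)


print(machines_needed(10 * TB, 500 * GB))                     # every byte of disk usable
print(machines_needed(10 * TB, 500 * GB, usable_percent=80))  # only 80% usable
```

With every byte usable, 20 machines are enough; if only 80% of each disk is available, the count rises to 25.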

However, merely spreading data across multiple machines does not in itself solve the problem of data storage.

Also, once the computing is distributed, the programs should not all read their data from the same machine. Otherwise, efficiency will inevitably be dragged down by that single machine's performance: its disk I/O, network bandwidth, and so on. This calls for the data storage to be distributed across machines as well.

For these two reasons, distributed data storage takes shape.

Earlier, when talking about distributed computing, I mentioned this approach:

Process each file with one thread, then combine the results.

First split the task, then merge the results. This is essentially the idea of divide and conquer. Divide and conquer is a very general, application-independent method, so there is no need to implement it again for every application. A standard library, or a framework, to give it a fancier name, would do the job.

After solving this problem, we enrich the features, define the APIs, and voilà! A rough version is done.

So, we have a distributed computing framework.
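A toy version of such a framework could be a single generic helper that knows nothing about the application: the caller supplies how to process one piece and how to merge two results. The name `run_job` and its parameters are invented for this sketch, and the workers are threads on one machine rather than separate machines, which a real framework would also have to handle.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_job(
    pieces: Iterable[T],
    process: Callable[[T], R],
    merge: Callable[[R, R], R],
    initial: R,
    max_workers: int = 4,
) -> R:
    """Apply `process` to every piece in parallel, then fold the results with `merge`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(process, pieces))
    return reduce(merge, partials, initial)


# The same helper serves two unrelated applications.
words = ["ab", "cde", "f"]
total_length = run_job(words, len, lambda x, y: x + y, 0)
longest = run_job(words, len, max, 0)
print(total_length, longest)
```

The point of the design is that the splitting, scheduling, and merging logic is written once, while each application only provides its own `process` and `merge` functions.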

When it comes to distributed storage, some problems can't be ignored, such as:

• How to split data? At what granularity?

• How does the user know where each piece of data is?

The answers to these questions are obvious:

• As mentioned earlier, file sizes vary, so splitting data by file is not appropriate: it is not conducive to a balanced distribution of data. The data needs to be split into smaller units.

• To let the user know which machine to go to for the desired data, especially once the splitting granularity is no longer a file that the user can recognize, a mapping between data units and machine or service locations needs to be maintained.
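The two answers above can be sketched together: split each file into fixed-size blocks, and keep a table that maps every block to the machine holding it. The class `BlockIndex`, the round-robin placement, the node names, and the tiny 128-byte block size are all assumptions chosen for illustration, not how any particular storage engine works.

```python
from dataclasses import dataclass, field


@dataclass
class BlockIndex:
    """Hypothetical metadata table: which node holds each block of each file."""

    nodes: list[str]
    block_size: int
    locations: dict[tuple[str, int], str] = field(default_factory=dict)

    def add_file(self, name: str, size: int) -> int:
        """Split a file of `size` bytes into blocks and place them round-robin."""
        num_blocks = (size + self.block_size - 1) // self.block_size  # ceiling division
        for block_no in range(num_blocks):
            # The next node is picked by how many blocks are already placed.
            node = self.nodes[len(self.locations) % len(self.nodes)]
            self.locations[(name, block_no)] = node
        return num_blocks

    def locate(self, name: str, offset: int) -> str:
        """Return the node that stores the byte at `offset` of file `name`."""
        return self.locations[(name, offset // self.block_size)]


index = BlockIndex(nodes=["node-a", "node-b", "node-c"], block_size=128)
blocks = index.add_file("events.log", size=300)  # blocks of 128, 128, and 44 bytes
print(blocks, index.locate("events.log", 260))
```

The user still thinks in terms of files and offsets; the index translates that into "which block, on which machine", which is exactly the mapping the second answer calls for.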

Of course, there are far more problems, and splitting data is just one of them. After solving these problems, we refine the solutions and encapsulate them behind APIs and services. We then have a framework that can store massive amounts of data, which we call an engine.

So we have a distributed storage engine.

Distributed storage engines and distributed computing frameworks are the most basic components of a distributed system.

Distributed systems in the broad sense, of course, are not made up solely of these two things. But these two are the core building blocks. With them, we can process massive amounts of data without being limited to a single machine.

Yes, distributed systems are powerful and useful. But when should we use them, and when would that be like shooting mosquitoes with anti-aircraft guns?

The answer is very simple. If you analyze the problems that distributed systems are trying to solve, it is clear at a glance:

• When you have tried various optimization methods and still can't reduce the running time to an acceptable level.

• When you can't find a machine with a hard drive large enough to hold your data, or can't afford one.

Then it is very likely time to consider a distributed system.

As for the specific limit on running time or data volume, it varies with the application scenario.

For example, the report that the boss wants to read every morning can be left running for an hour at night, but the near-real-time monitoring data of the whole platform cannot wait a few hours.

The same goes for metrics: a few hundred GB of user registration data may not be considered large, but a few hundred GB of user behavior data may well count as massive. The latter is more likely than the former to require a distributed system because it grows an order of magnitude or two faster.

A question that seems nonsensical at first but is worth thinking about is: are microservices a distributed system?

Similarly: is a database that has been sharded into multiple databases and tables and is accessed through middleware a distributed system?

Broadly speaking, yes, they are.

But in the narrow sense, which is also the standpoint I take in this series, distributed systems are the systems in the big data field that process massive amounts of data.

Of course, distributed systems come in far more than two simple categories, and they are not as easy to set up as it may seem. There are still many problems to consider and solve, and many of them are caused by distribution itself (sounds disturbing, I know). That is what I will talk about in most of the later articles.

In this way, we have also gained a basic understanding of what a distributed system is, the "what" mentioned at the beginning.

(See, focusing on why it exists and then what it is doesn't affect the way we learn about distributed systems. The reason I organized the article in this way is to emphasize the importance of thinking more about "Why".)

The distributed system that this series of articles focuses on is a multi-machine system to process massive data.

The reason why we need distributed systems is to conquer the constraints of a single machine. To be more specific, it is to solve these two problems:

• A single machine is too slow, even with coroutines, multithreading, and multiprocessing.

• A single machine can't hold all the data, no matter how much money you spend on storage.

In practical applications, there are two basic core types of distributed systems:

• Distributed computing frameworks, which solve the problem of slow computation

• Distributed storage engines, which solve the problem of storing data

Now that we know why distributed systems exist and when one should be used, the next question is: where do distributed systems come from, and who made them?

In the second article, I will discuss this issue.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a basic grasp of the core of distributed systems through storytelling. Stay tuned for the next one!

Learning about Distributed Systems - Part 2: The Interaction Between Open Source and Business
