By Dong Yiquan

This article is adapted from a talk given by Dong Yiquan, Director of R&D at Trip.com, at the 2025 China Trusted Cloud Conference. Dong Yiquan's GitHub ID is CH3CHO, and he is also a maintainer of Higress. The talk is divided into four parts.

To further improve service levels and quality, Trip.com began exploring large AI models early on. As this work deepened, large model services were applied in more and more areas, and the number of internal applications that needed to access them kept growing. Inevitably, we ran into a number of issues.

In such scenarios, the natural solution is a gateway that manages access to these services in a unified way and adds traffic governance functions at different layers.

After comparing multiple open-source projects, we chose Higress as the foundation for building AI gateways.

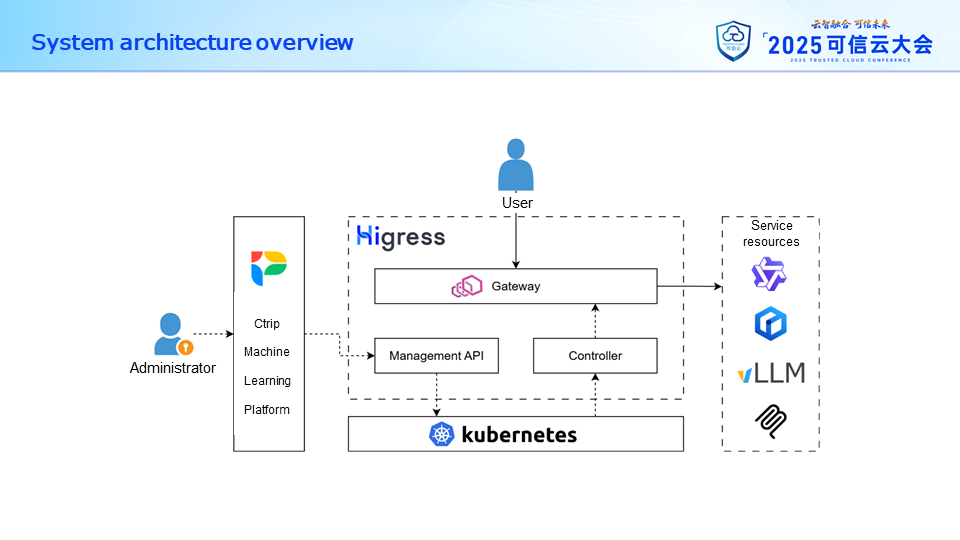

After we deployed Higress internally as our AI gateway infrastructure, our overall AI service access architecture took the shape shown in the diagram below. All gateway components are deployed in our internal Kubernetes cluster, which manages server resources and configuration information.

The gateway itself consists of three components:

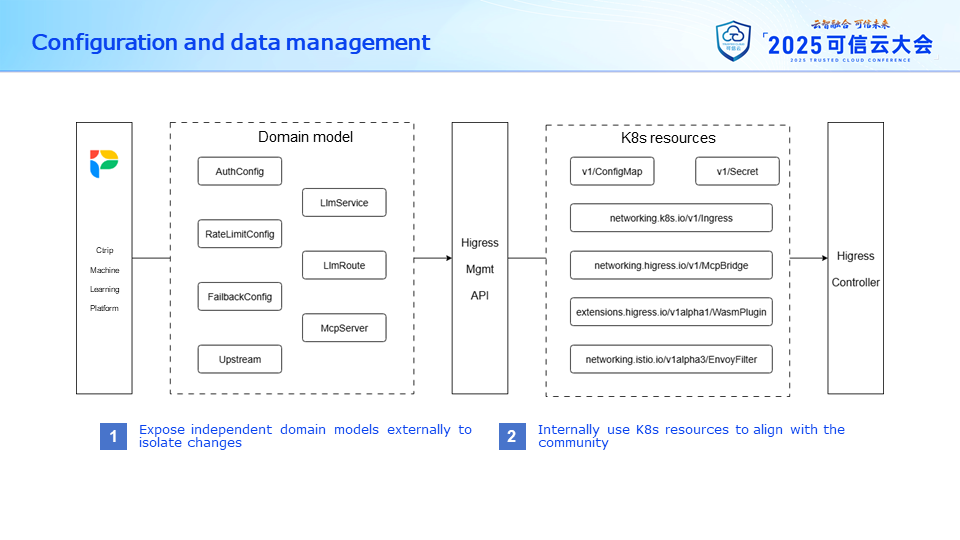

For configuration data, Higress uses some native Kubernetes resource types along with some custom resources, and we left these unchanged. To integrate with our machine learning platform, however, we designed separate domain models for the large model access and MCP Server access scenarios based on actual business needs. We then extended Higress's Management API to convert between these domain models and the gateway configuration, and to support both incremental and full synchronization of all configurations.
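The extended Management API itself is not shown in the article, but the difference between the two synchronization modes can be sketched. The Python sketch below assumes a hypothetical `ModelRoute` domain object and an illustrative gateway record shape (not Higress's real schema): a full sync reconciles the gateway against the complete desired set, while an incremental sync applies only the records that changed.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple


# Hypothetical domain model on the machine learning platform side.
@dataclass(frozen=True)
class ModelRoute:
    name: str
    model: str
    backends: Tuple[str, ...]  # names of backend large model services


def to_gateway_resource(route: ModelRoute) -> dict:
    """Convert a domain object into a gateway-side config record.

    The output shape is illustrative only; it is not Higress's actual schema."""
    return {"match": {"model": route.model}, "backends": list(route.backends)}


@dataclass
class SyncPlan:
    upserts: Dict[str, dict] = field(default_factory=dict)  # resources to create or update
    deletes: List[str] = field(default_factory=list)  # resource names to remove


def full_sync(desired: Iterable[ModelRoute], current: Dict[str, dict]) -> SyncPlan:
    """Full sync: make the gateway match the complete desired set, removing extras."""
    wanted = {r.name: to_gateway_resource(r) for r in desired}
    upserts = {n: res for n, res in wanted.items() if current.get(n) != res}
    deletes = sorted(n for n in current if n not in wanted)
    return SyncPlan(upserts, deletes)


def incremental_sync(changed: Iterable[ModelRoute], deleted: Iterable[str],
                     current: Dict[str, dict]) -> SyncPlan:
    """Incremental sync: only touch routes changed or deleted since the last sync."""
    upserts = {}
    for r in changed:
        res = to_gateway_resource(r)
        if current.get(r.name) != res:  # skip no-op updates
            upserts[r.name] = res
    deletes = sorted(n for n in deleted if n in current)
    return SyncPlan(upserts, deletes)
```

A full sync is the safe way to recover from drift; incremental sync keeps routine updates cheap because unchanged routes are never rewritten.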

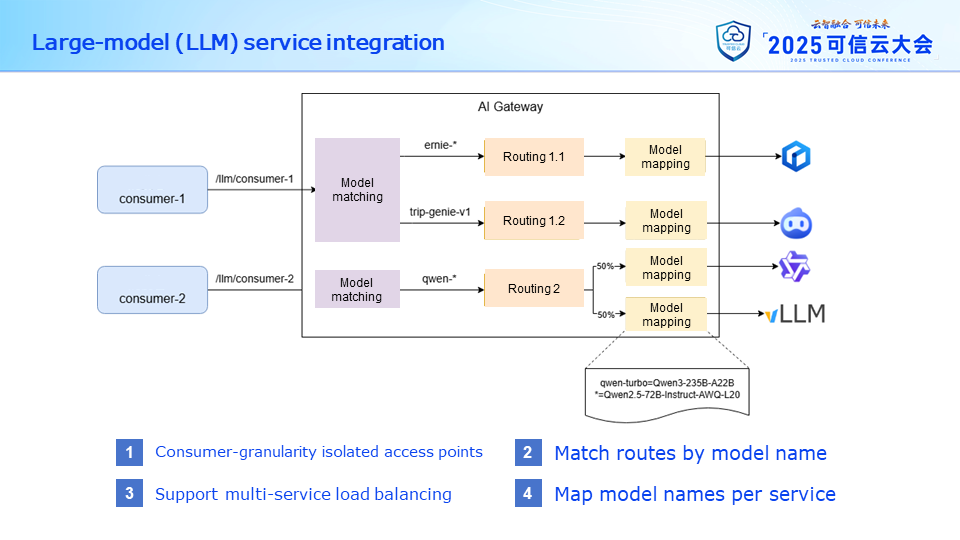

For large model service access, we isolate risk by assigning a separate access point path to each accessing party (referred to here as a consumer). Each access point path can be associated with multiple model routes, which are matched by model name, and each model route can be associated with multiple backend large model services, across which the gateway load-balances.
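A minimal sketch of this two-level lookup, assuming a hypothetical in-memory routing table keyed first by access point path and then by model name. The article does not say which load-balancing algorithm is used; round-robin appears here only for simplicity.

```python
import itertools
from typing import Dict, List


class ModelRouter:
    """Hypothetical routing table: access point path -> model name -> backend services."""

    def __init__(self, table: Dict[str, Dict[str, List[str]]]):
        self._table = table
        self._cursors = {}  # (path, model) -> round-robin iterator over backends

    def pick_backend(self, path: str, model: str) -> str:
        routes = self._table.get(path)
        if routes is None:
            raise LookupError(f"unknown access point: {path}")
        backends = routes.get(model)
        if not backends:
            raise LookupError(f"no route for model {model!r} on {path}")
        key = (path, model)
        if key not in self._cursors:
            self._cursors[key] = itertools.cycle(backends)
        return next(self._cursors[key])
```

Because each consumer gets its own path, a misbehaving consumer can be isolated by changing only its entry in the table.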

When forwarding requests to large model services, the gateway can also map model names. Users can call a unified model alias, and when a request is forwarded to a particular large model service, the gateway replaces the alias with the model name that service actually expects.
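Model name mapping amounts to rewriting the `model` field of an OpenAI-style request body before it is forwarded. The sketch below assumes a hypothetical per-backend `mapping` dictionary from alias to real model name; names without a mapping pass through unchanged.

```python
import json
from typing import Dict


def rewrite_model(body: bytes, mapping: Dict[str, str]) -> bytes:
    """Replace the caller's model alias with the name the chosen backend expects."""
    payload = json.loads(body)
    alias = payload.get("model")
    if alias in mapping:
        payload["model"] = mapping[alias]
    return json.dumps(payload).encode()
```

Keeping one mapping per backend lets the same alias resolve to different concrete models depending on which service the load balancer picked.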

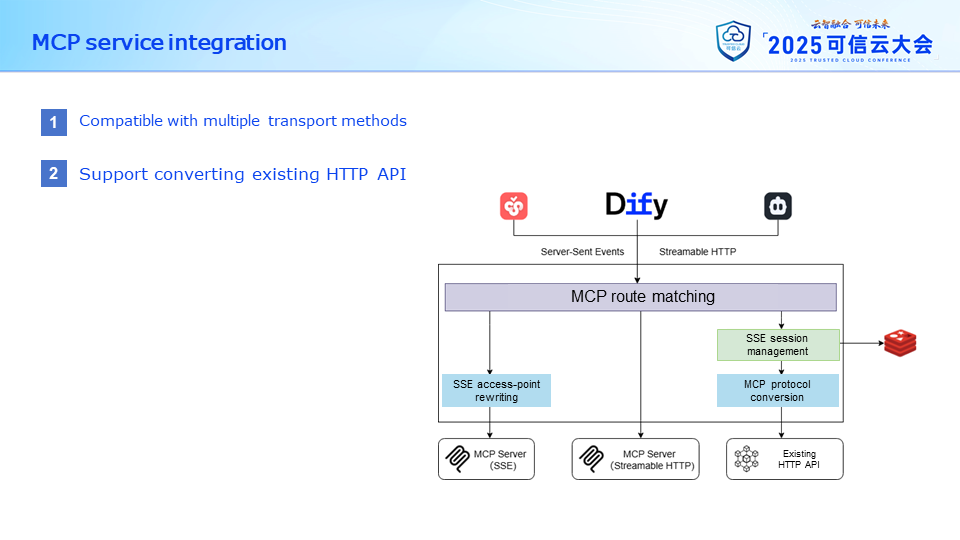

Recently, we added the ability to expose MCP services through the gateway. This part works more like a traditional API gateway: a service is exposed via the gateway for external access.

In addition to proxying existing MCP services, the gateway can also convert existing HTTP APIs into MCP services, which users can access over SSE or Streamable HTTP. We will cover this conversion in more detail later.

Every request processed by the AI gateway must carry access credentials for authentication and authorization. Currently, accessing parties mainly authenticate with Bearer Tokens. Each token is associated with a consumer, and the services a consumer can access must be applied for and approved.
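The check described above can be sketched as two steps: resolve the Bearer Token to a consumer, then confirm that the consumer holds an approved grant for the target service. The `ConsumerAuth` class and its token and grant tables are hypothetical; in practice they would be fed by the application and approval workflow.

```python
from typing import Dict, Optional, Set, Tuple


class ConsumerAuth:
    """Bearer Token authentication plus per-consumer authorization (hypothetical tables)."""

    def __init__(self, tokens: Dict[str, str], grants: Dict[str, Set[str]]):
        self.tokens = tokens  # token -> consumer name
        self.grants = grants  # consumer name -> services approved for it

    def _consumer_for(self, header: Optional[str]) -> Optional[str]:
        if not header:
            return None
        scheme, _, token = header.partition(" ")
        # The auth scheme name is case-insensitive in HTTP.
        if scheme.lower() != "bearer" or not token.strip():
            return None
        return self.tokens.get(token.strip())

    def check(self, header: Optional[str], service: str) -> Tuple[int, Optional[str]]:
        """Return (HTTP status, consumer): 401 if unauthenticated, 403 if not approved."""
        consumer = self._consumer_for(header)
        if consumer is None:
            return 401, None
        if service not in self.grants.get(consumer, set()):
            return 403, consumer
        return 200, consumer
```

Separating 401 from 403 tells a caller whether to fix its token or to apply for access to the service.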

On the backend side, most large model services require authentication. Their credentials are stored centrally in the gateway, so consumers do not need to deal with them. MCP services are more complicated: some require no authentication, while others do. The gateway lets each service provider choose what fits its situation. If authentication is required, the credentials can either be stored centrally in the gateway or be supplied by the caller. Based on configuration, the gateway also adjusts how credentials are passed to the backend to meet end-to-end authentication requirements.
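Here is a sketch of how per-service configuration might drive credential handling toward an MCP backend. The `BackendAuthConfig` fields, the three modes, and the choice to strip the caller's gateway token before forwarding are all assumptions for illustration, not the gateway's actual configuration model.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple
from urllib.parse import urlencode


@dataclass
class BackendAuthConfig:
    mode: str  # "none", "gateway" (secret stored in the gateway) or "passthrough" (caller supplies it)
    location: str = "header"  # where the backend expects the credential: "header" or "query"
    name: str = "Authorization"  # header name or query parameter name
    stored_secret: Optional[str] = None  # used only in "gateway" mode


def apply_backend_credential(cfg: BackendAuthConfig, url: str, headers: Dict[str, str],
                             caller_credential: Optional[str] = None) -> Tuple[str, Dict[str, str]]:
    """Return the (url, headers) to send upstream for one MCP request."""
    out = dict(headers)
    # Assumption: the consumer's gateway token is meant for the gateway only.
    out.pop("Authorization", None)
    if cfg.mode == "none":
        return url, out
    if cfg.mode == "gateway":
        secret = cfg.stored_secret
    elif cfg.mode == "passthrough":
        secret = caller_credential
    else:
        raise ValueError(f"unknown auth mode: {cfg.mode}")
    if not secret:
        raise PermissionError("backend credential required but missing")
    if cfg.location == "header":
        out[cfg.name] = secret
    elif cfg.location == "query":
        sep = "&" if "?" in url else "?"
        url = f"{url}{sep}{urlencode({cfg.name: secret})}"
    else:
        raise ValueError(f"unknown credential location: {cfg.location}")
    return url, out
```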

All of the above covers normal traffic. Next, we will discuss some mechanisms for handling abnormal traffic.

First, throttling.

Each consumer applying for access to large models must specify a throttling threshold of one of three types: Tokens per Minute (TPM), Queries per Minute (QPM), or concurrent requests. This not only protects the gateway and backend services from sudden traffic spikes but also makes capacity planning easier for the gateway and service O&M teams, and helps users control costs.

These throttling mechanisms are built on the Wasm plugin extension points provided by Higress. They use Redis as a central counter to keep global throttling statistics, and Lua scripts to update the counters atomically.
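The real implementation runs inside a Higress Wasm plugin against Redis. As a self-contained sketch, the code below models a fixed-window counter in Python, with an in-memory `CounterStore` standing in for Redis and a lock standing in for the atomicity a Lua script provides (increment and set-expiry as one step). The fixed-window algorithm and the key format are assumptions; the article does not say which windowing scheme is used.

```python
import threading
import time


class CounterStore:
    """In-memory stand-in for the central Redis counter.

    The lock plays the role a Lua script plays in Redis: reading, incrementing,
    and setting the expiry happen as one atomic step."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._lock = threading.Lock()
        self._data = {}  # key -> [value, expires_at]

    def incr_with_ttl(self, key, amount, ttl):
        with self._lock:
            now = self._clock()
            entry = self._data.get(key)
            if entry is None or now >= entry[1]:
                entry = [0, now + ttl]  # first write in this window sets the expiry
                self._data[key] = entry
            entry[0] += amount
            return entry[0]


class FixedWindowLimiter:
    """Per-consumer limit over fixed windows (QPM with cost=1, TPM with cost=tokens)."""

    def __init__(self, store, limit, window=60, clock=time.time):
        self.store, self.limit, self.window, self.clock = store, limit, window, clock

    def _key(self, consumer):
        # One counter per consumer per window, e.g. "ratelimit:consumer-a:28412345".
        return f"ratelimit:{consumer}:{int(self.clock() // self.window)}"

    def consume(self, consumer, cost=1):
        """Add cost to the current window; return True if the total stays within the limit."""
        return self.store.incr_with_ttl(self._key(consumer), cost, self.window) <= self.limit
```

A QPM limit calls `consume(consumer)` with the default cost of 1; note that rejected calls still increment the counter. For TPM, the token count is only known after the response, so one option is to check the running total before forwarding and add the actual usage afterward. A concurrency limit would instead increment when a request starts and decrement when it finishes.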

Next is degradation.

If a backend large model service fails unexpectedly, pre-configured model degradation rules take effect. When the originally routed service returns a 4xx, 5xx, or other abnormal status code, the gateway does not pass that response back to the caller. Instead, it forwards the request to a designated degradation service and returns that service's response. Degradation happens only once per request, and because the degradation service may support a different list of models than the original, it can be given its own model name mapping rules.
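The degrade-once behavior can be sketched as follows. The callables `primary` and `fallback` stand in for the two upstream services, `fallback_model_map` is the degradation service's own model name mapping, and treating any status of 400 or above as abnormal is an assumption (the exact set of abnormal codes would presumably be configurable).

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, dict]  # (HTTP status code, JSON body)


def call_with_fallback(request: dict,
                       primary: Callable[[dict], Response],
                       fallback: Callable[[dict], Response],
                       fallback_model_map: Dict[str, str]) -> Response:
    status, body = primary(request)
    if status < 400:
        return status, body
    # Degrade exactly once: the fallback's response is returned whatever its status.
    degraded = dict(request)
    model = degraded.get("model")
    degraded["model"] = fallback_model_map.get(model, model)
    return fallback(degraded)
```

Limiting degradation to a single hop keeps one bad request from cascading through a chain of backup services.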

In the call-count comparison chart, we can see that when the service shown by the green line fails, requests automatically switch to the service shown by the yellow line. Charts like this are possible because the gateway itself provides powerful observability capabilities.

Third, logging and monitoring.

The gateway writes request logs to local disk and rotates them with logrotate so they do not take up too much storage. The log content is customizable: using Wasm plugins together with custom log templates, we record detailed information about large model requests, such as the model name, token consumption, and input and output message content. These details help us analyze usage patterns and help users optimize how they use the models.
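As an illustration of what such a log template might capture, the sketch below extracts the model name, token usage, and message content from a non-streaming OpenAI-compatible chat completion. The output field names are hypothetical, and streaming responses, which would need chunk-by-chunk handling, are not covered.

```python
import json


def build_log_fields(request_body: bytes, response_body: bytes) -> dict:
    """Pick request/response details for one access-log line (illustrative field names)."""
    req = json.loads(request_body)
    resp = json.loads(response_body)
    usage = resp.get("usage") or {}
    return {
        # Prefer the model the backend reports; fall back to what the caller asked for.
        "model": resp.get("model", req.get("model")),
        "prompt_tokens": usage.get("prompt_tokens", 0),
        "completion_tokens": usage.get("completion_tokens", 0),
        "total_tokens": usage.get("total_tokens", 0),
        "input_messages": req.get("messages", []),
        "output": [c.get("message", {}).get("content") for c in resp.get("choices", [])],
    }
```

Serializing the result with `json.dumps` yields one structured line per request, which keeps downstream parsing simple.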

Log collection is straightforward and reuses the company's existing logging pipeline: Filebeat ships log content to Kafka, a Logstash-like component consumes the logs from Kafka, parses and restructures them, and writes them into ClickHouse, and finally the logs are made available through Kibana.

Monitoring is even simpler. The gateway exposes an endpoint for Prometheus to scrape, and the scraped metrics can be viewed in our internal Grafana.

That is a general overview of the gateway. Next, we will share some key challenges, although with the help of Higress, many of them became far more manageable.

The first challenge is adapting to the interface contracts of different large model suppliers. The most widely used protocol for calling large models today is OpenAI's API, and the gateway exposes its services based on this protocol. However, some large model services do not fully comply with it; for example, some use different interface paths, while others use different authentication methods.

This means the gateway must modify request and response data during forwarding so that it matches the protocol the backend supports. Fortunately, Higress already supports many of the large model services on the market, so connecting to them required almost no changes on our side. Promoting MCP service access, however, has not been so simple.
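The request rewriting involved in adapting to a large model supplier can be sketched with a hypothetical per-provider profile that records the chat path and the header carrying the API key. The provider values in the test are made up, and the sketch covers only the path and authentication differences mentioned above; response rewriting is omitted.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

OPENAI_CHAT_PATH = "/v1/chat/completions"


@dataclass(frozen=True)
class ProviderProfile:
    chat_path: str  # path the provider uses instead of the OpenAI one
    auth_header: str  # header that carries the provider API key
    auth_prefix: str = ""  # e.g. "Bearer " for OpenAI-style auth


def adapt_request(path: str, headers: Dict[str, str], profile: ProviderProfile,
                  api_key: str) -> Tuple[str, Dict[str, str]]:
    """Map an OpenAI-style chat request onto a provider's path and auth header."""
    if path != OPENAI_CHAT_PATH:
        raise ValueError(f"unsupported path: {path}")
    # Drop the caller's gateway credential; the provider key is injected instead.
    out = {k: v for k, v in headers.items() if k.lower() != "authorization"}
    out[profile.auth_header] = profile.auth_prefix + api_key
    return profile.chat_path, out
```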

Trip.com has a vast number of HTTP services covering nearly every business scenario. Converting them directly into MCP services for AI use would make it much easier for business teams to integrate with the overall AI system.

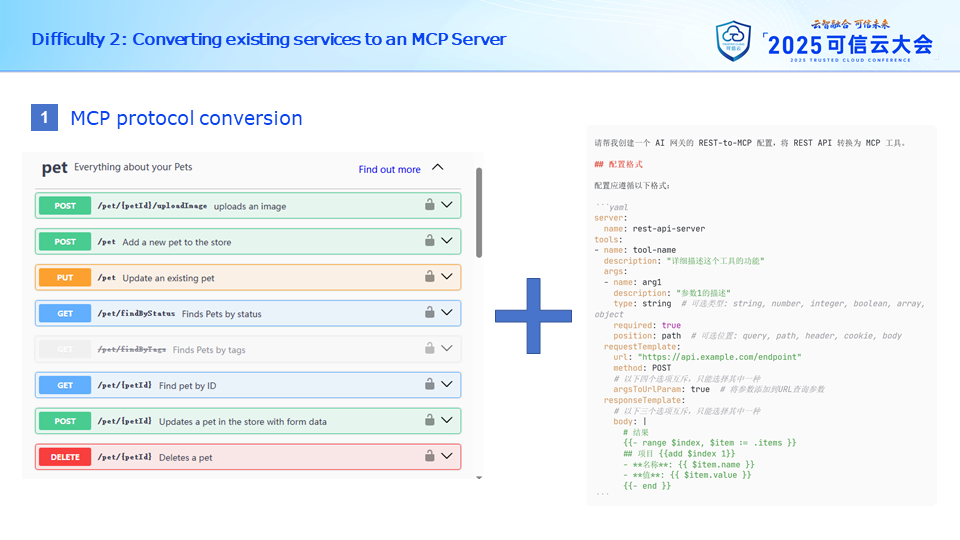

To expose an interface as a tool on an MCP Server, however, a tool description is required, including the interface name, parameter list, and so on.

For our REST APIs, the best contract available is the OpenAPI document generated by Swagger. The core problem, therefore, is converting the interface contract on the left into the tool description on the right.
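In the approach described here, a large model writes the final descriptions. To show which fields have to line up, the sketch below maps one OpenAPI 3 operation to an MCP tool definition (`name`, `description`, `inputSchema`) mechanically. It is an illustration only: `$ref` resolution, header parameters, and non-JSON bodies are skipped, and the fallback naming rule is an assumption.

```python
import re


def operation_to_tool(path: str, method: str, operation: dict) -> dict:
    """Map one OpenAPI 3 operation (inline schemas only) to an MCP tool description."""
    properties, required = {}, []
    for param in operation.get("parameters", []):
        if param.get("in") not in ("path", "query"):
            continue  # headers and cookies are not exposed to the model in this sketch
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        if param.get("required"):
            required.append(param["name"])
    body = (operation.get("requestBody", {}).get("content", {})
            .get("application/json", {}).get("schema"))
    if body and body.get("type") == "object":
        properties.update(body.get("properties", {}))
        required.extend(body.get("required", []))
    # Fall back to a name derived from method and path, e.g. GET /hotels/{id} -> get_hotels_id.
    slug = re.sub(r"[^A-Za-z0-9_]+", "_", path).strip("_")
    name = operation.get("operationId") or f"{method.lower()}_{slug}"
    return {
        "name": name,
        "description": operation.get("summary") or operation.get("description", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }
```

A mechanical mapping like this gets the structure right, but summaries written for human developers are often too terse for a model to pick the right tool, which is where a large model can help.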

Besides the request parameters, we also need to format the backend interface's response data into the MCP Server's response so that large models can understand it more easily.
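On the response side, an MCP `tools/call` result carries a `content` array of typed items plus an `isError` flag. The sketch below wraps a backend HTTP response as a single text item; the `keep_fields` option is hypothetical, one simple way to trim a large JSON payload down to what the model needs.

```python
import json


def to_tool_result(status: int, body: bytes, keep_fields=None) -> dict:
    """Wrap a backend HTTP response as an MCP tools/call result with one text item."""
    try:
        data = json.loads(body)
    except ValueError:  # not JSON (or not valid UTF-8): pass the raw text through
        data = body.decode("utf-8", errors="replace")
    if keep_fields and isinstance(data, dict):
        data = {k: data[k] for k in keep_fields if k in data}
    text = data if isinstance(data, str) else json.dumps(data, ensure_ascii=False, indent=2)
    return {"content": [{"type": "text", "text": text}], "isError": status >= 400}
```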

Writing these descriptions and response formats for every interface is clearly repetitive work, and highly repetitive work is exactly what AI can help us with.

By giving the large model the interface contract together with the prompt shown on the right, we get a basic, usable description that needs only minimal manual verification and adjustment.

With protocol conversion in place, we turned to the next concern.

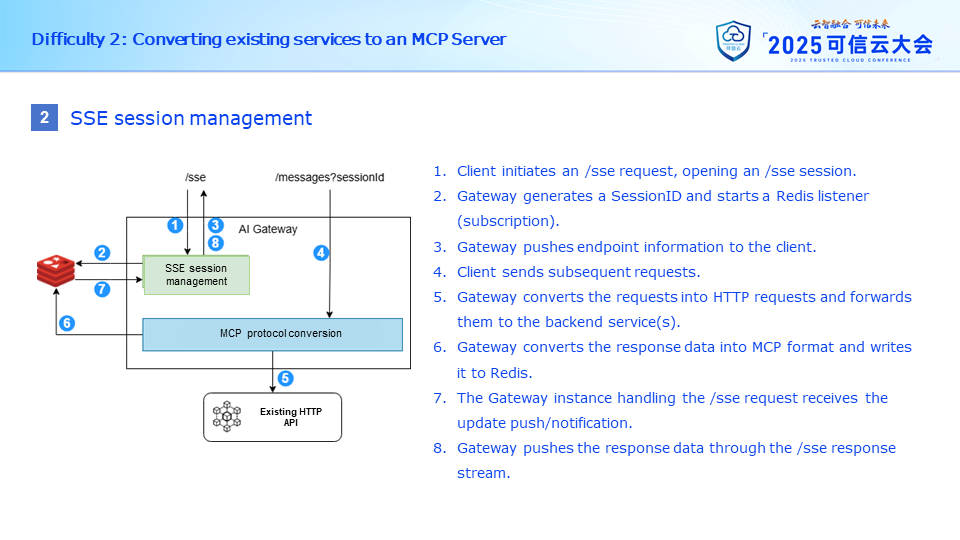

Although the MCP specification has deprecated the SSE transport in favor of Streamable HTTP, many callers still want the gateway to support SSE. Because SSE separates requests from responses, the gateway layer needs session management.

The general flow is as follows. The MCP Client requests the service's /SSE interface to start a new session. The gateway generates a new SessionID, subscribes to a Redis channel associated with that SessionID, and returns the Endpoint information for this MCP Server to the client. The client then sends subsequent requests, such as initialization, listing tools, and calling tools, to this Endpoint. Responses to these requests are not returned directly to the client; instead, they are published to the subscribed Redis channel, which carries them into the context of the /SSE request, from where they are pushed to the client.
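The session flow above can be sketched in Python with an in-memory `Broker` standing in for Redis pub/sub. The shared channel matters because a client's POST may reach a different gateway instance than the one holding its /SSE connection. The channel naming, the `/message` path, and the `sessionId` query parameter are hypothetical, and returning 202 for the POST is an assumed convention.

```python
import json
import queue
import threading
import uuid


class Broker:
    """In-memory stand-in for Redis pub/sub: one queue per subscribed channel."""

    def __init__(self):
        self._lock = threading.Lock()
        self._channels = {}

    def subscribe(self, channel):
        q = queue.Queue()
        with self._lock:
            self._channels[channel] = q
        return q

    def unsubscribe(self, channel):
        with self._lock:
            self._channels.pop(channel, None)

    def publish(self, channel, message):
        """Deliver message; return the number of receivers, as Redis PUBLISH does."""
        with self._lock:
            q = self._channels.get(channel)
        if q is None:
            return 0
        q.put(message)
        return 1


class SseSessionManager:
    def __init__(self, broker, message_path="/message"):
        self.broker = broker
        self.message_path = message_path  # hypothetical endpoint path for client POSTs

    @staticmethod
    def _channel(session_id):
        return f"mcp:session:{session_id}"

    def open_session(self):
        """GET /SSE: create a session, subscribe to its channel, emit the endpoint event."""
        session_id = uuid.uuid4().hex
        inbox = self.broker.subscribe(self._channel(session_id))
        endpoint_event = f"event: endpoint\ndata: {self.message_path}?sessionId={session_id}\n\n"
        return session_id, inbox, endpoint_event

    def handle_message(self, session_id, handler, request):
        """POST to the endpoint: run the request, publish the response to the session channel."""
        response = handler(request)
        delivered = self.broker.publish(self._channel(session_id), response)
        return 202 if delivered else 404  # the response body travels over the SSE stream

    def close_session(self, session_id):
        self.broker.unsubscribe(self._channel(session_id))


def next_sse_event(inbox, timeout=1.0):
    """Take the next published response off the session queue and frame it as an SSE event."""
    message = inbox.get(timeout=timeout)
    return f"event: message\ndata: {json.dumps(message)}\n\n"
```

In a real deployment, the /SSE handler would loop on `next_sse_event` and write each event to the open connection until the client disconnects, then call `close_session`.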

This concludes the technical details of the gateway implementation.

Today, Trip.com's AI gateway has integrated multiple large models and can stably support large-scale model calls, laying a solid foundation for the company's exploration of AI technology. We are also continuously integrating more MCP Servers to enrich the overall ecosystem.

As you can see, many functions of our AI gateway come directly from open-source Higress. Our main work was adapting it to Trip.com's R&D system and connecting it with the surrounding governance platforms. During validation, we also found some scenarios the community did not yet support; we submitted support for them via pull requests, which have since been merged. We believe that as more people use open-source products and contribute code, the community will keep improving.

As the saying goes, every line of code given back keeps open source alive.

Going forward, we will keep iterating on the gateway's capabilities, including model routing rules, post-processing of model outputs, recognition of caller priority, and content security protection. We will also build AI capabilities into the gateway itself so that it is more than a layer that carries business traffic, and further strengthen its security and compliance so that it can play a larger role across the AI traffic path.

To learn more about Alibaba Cloud API Gateway (Higress), visit https://higress.ai/en/

Disclaimer: This article is an objective description of Ctrip's use of Higress and does not constitute an endorsement by Ctrip of Higress's functionality or availability.
