By Liu Yu (This article is originally from Go Serverless.)

I have always wanted a cartoon version of my avatar, but I cannot draw one myself. So I wondered whether I could implement this with AI and deploy it on a Serverless architecture so that more people can try it.

The backend uses AnimeGAN v2, a well-known anime-style conversion filter library. The following is the effect:

The model itself is not explained in detail here. It is exposed through an HTTP interface by combining it with a Python web framework (Bottle):

from PIL import Image
import io
import torch
import base64
import bottle
import random
import json

cacheDir = '/tmp/'
modelDir = './model/bryandlee_animegan2-pytorch_main'
getModel = lambda modelName: torch.hub.load(modelDir, "generator", pretrained=modelName, source='local')
models = {
    'celeba_distill': getModel('celeba_distill'),
    'face_paint_512_v1': getModel('face_paint_512_v1'),
    'face_paint_512_v2': getModel('face_paint_512_v2'),
    'paprika': getModel('paprika')
}
randomStr = lambda num=5: "".join(random.sample('abcdefghijklmnopqrstuvwxyz', num))
face2paint = torch.hub.load(modelDir, "face2paint", size=512, source='local')


@bottle.route('/images/comic_style', method='POST')
def getComicStyle():
    result = {}
    try:
        postData = json.loads(bottle.request.body.read().decode("utf-8"))
        style = postData.get("style", 'celeba_distill')
        image = postData.get("image")
        localName = randomStr(10)

        # Image Acquisition
        imagePath = cacheDir + localName
        with open(imagePath, 'wb') as f:
            f.write(base64.b64decode(image))

        # Content Prediction
        model = models[style]
        imgAttr = Image.open(imagePath).convert("RGB")
        outAttr = face2paint(model, imgAttr)
        img_buffer = io.BytesIO()
        outAttr.save(img_buffer, format='JPEG')
        byte_data = img_buffer.getvalue()
        img_buffer.close()
        result["photo"] = 'data:image/jpg;base64, %s' % base64.b64encode(byte_data).decode()
    except Exception as e:
        print("ERROR: ", e)
        result["error"] = True

    return result


app = bottle.default_app()

if __name__ == "__main__":
    bottle.run(host='localhost', port=8099)

The code has been partially adapted to the Serverless architecture:

In function mode, the /tmp directory is writable, so the uploaded image is cached in /tmp.

The preceding code is mostly AI-related. In addition, an interface is needed to return the model list, model paths, and other related information:

import bottle


@bottle.route('/system/styles', method='GET')
def styles():
    return {
        "AI Anime Style": {
            'color': 'red',
            'detailList': {
                "Style 1": {
                    'uri': "images/comic_style",
                    'name': 'celeba_distill',
                    'color': 'orange',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773808708_20220320105649389392.png'
                },
                "Style 2": {
                    'uri': "images/comic_style",
                    'name': 'face_paint_512_v1',
                    'color': 'blue',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773875279_20220320105756071508.png'
                },
                "Style 3": {
                    'uri': "images/comic_style",
                    'name': 'face_paint_512_v2',
                    'color': 'pink',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773926924_20220320105847286510.png'
                },
                "Style 4": {
                    'uri': "images/comic_style",
                    'name': 'paprika',
                    'color': 'cyan',
                    'preview': 'https://serverless-article-picture.oss-cn-hangzhou.aliyuncs.com/1647773976277_20220320105936594662.png'
                },
            }
        },
    }


app = bottle.default_app()

if __name__ == "__main__":
    bottle.run(host='localhost', port=8099)

Here I added a new function to expose this new interface. Why not add the interface to the previous project instead of maintaining one more function?

The second interface (which returns the list of AI processing styles) is simple. The first interface, which serves the AI model, involves more problems.

Therefore, the Serverless Devs project is used to handle them:

Please refer to: https://docs.serverless-devs.com/en/fc/yaml/readme

Write the s.yaml file:

edition: 1.0.0
name: start-ai
access: "default"

vars: # Global variables
  region: cn-hangzhou
  service:
    name: ai
    nasConfig: # After configuration, the function can access the specified NAS.
      userId: 10003 # User ID, default value: 10003
      groupId: 10003 # Group ID, default value: 10003
      mountPoints: # Directory configuration
        - serverAddr: 0fe764bf9d-kci94.cn-hangzhou.nas.aliyuncs.com # NAS server address
          nasDir: /python3
          fcDir: /mnt/python3
    vpcConfig:
      vpcId: vpc-bp1rmyncqxoagiyqnbcxk
      securityGroupId: sg-bp1dpxwusntfryekord6
      vswitchIds:
        - vsw-bp1wqgi5lptlmk8nk5yi0

services:
  image:
    component: fc
    props: # Attribute values
      region: ${vars.region}
      service: ${vars.service}
      function:
        name: image_server
        description: Image Processing Service
        runtime: python3
        codeUri: ./
        ossBucket: temp-code-cn-hangzhou
        handler: index.app
        memorySize: 3072
        timeout: 300
        environmentVariables:
          PYTHONUSERBASE: /mnt/python3/python
      triggers:
        - name: httpTrigger
          type: http
          config:
            authType: anonymous
            methods:
              - GET
              - POST
              - PUT
      customDomains:
        - domainName: avatar.aialbum.net
          protocol: HTTP
          routeConfigs:
            - path: /*

Then run the following commands:

s build --use-docker
s deploy
s nas command mkdir /mnt/python3/python
s nas upload -r <local dependency path> /mnt/python3/python

After completion, the project can be tested through the interface.
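One way to test the interface outside the Mini Program is a small Python client. The following is a minimal sketch, assuming the backend behaves like the Bottle code shown earlier: it accepts a JSON body with `style` and a base64-encoded `image`, and returns either a `photo` data URI or an `error` flag. The helper names `build_payload`, `decode_photo`, and `request_comic_style` are illustrative and not part of the project, and `base_url` stands for whatever domain you bound to the function.

```python
import base64
import json
import urllib.request


def build_payload(image_bytes: bytes, style: str = "celeba_distill") -> bytes:
    """Build the JSON body expected by POST /images/comic_style."""
    body = {
        "style": style,
        "image": base64.b64encode(image_bytes).decode("ascii"),
    }
    return json.dumps(body).encode("utf-8")


def decode_photo(result: dict) -> bytes:
    """Extract the raw image bytes from the service response."""
    if result.get("error"):
        raise RuntimeError("the service reported an error")
    # The backend returns 'data:image/jpg;base64, <data>'; keep the part after the comma.
    _, _, encoded = result["photo"].partition(",")
    return base64.b64decode(encoded.strip())


def request_comic_style(base_url: str, image_bytes: bytes,
                        style: str = "celeba_distill", timeout: int = 300) -> bytes:
    """Send one image to the service and return the converted image bytes.

    base_url is an assumption: the HTTP(S) address of the deployed function.
    """
    req = urllib.request.Request(
        base_url.rstrip("/") + "/images/comic_style",
        data=build_payload(image_bytes, style),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return decode_photo(json.loads(resp.read().decode("utf-8")))
```

With a local photo, this could be used as `open("out.jpg", "wb").write(request_comic_style(base_url, open("me.jpg", "rb").read(), "paprika"))`. The long timeout mirrors the 300-second function timeout in s.yaml, since model inference may be slow.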

In addition, a WeChat Mini Program requires an HTTPS backend interface, so HTTPS certificate information also needs to be configured here. This is not explained in detail.

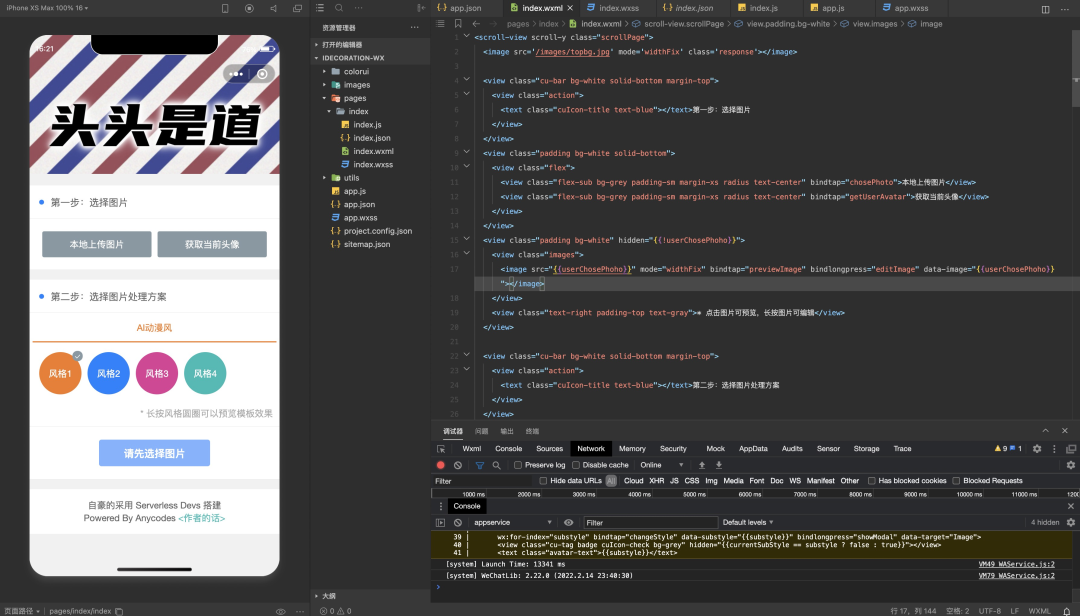

I deploy the frontend as a WeChat Mini Program. You can try other platforms too, but the specific implementation may differ. The project still uses ColorUI, and the entire project has only one page:

Page layout:

<scroll-view scroll-y class="scrollPage">
  <image src='/images/topbg.jpg' mode='widthFix' class='response'></image>

  <view class="cu-bar bg-white solid-bottom margin-top">
    <view class="action">
      <text class="cuIcon-title text-blue"></text>Step 1: Select an image
    </view>
  </view>
  <view class="padding bg-white solid-bottom">
    <view class="flex">
      <view class="flex-sub bg-grey padding-sm margin-xs radius text-center" bindtap="chosePhoto">Upload an image locally</view>
      <view class="flex-sub bg-grey padding-sm margin-xs radius text-center" bindtap="getUserAvatar">Obtain the current avatar</view>
    </view>
  </view>
  <view class="padding bg-white" hidden="{{!userChosePhoho}}">
    <view class="images">
      <image src="{{userChosePhoho}}" mode="widthFix" bindtap="previewImage" bindlongpress="editImage" data-image="{{userChosePhoho}}"></image>
    </view>
    <view class="text-right padding-top text-gray">* Click the image to preview, long press the image to edit</view>
  </view>

  <view class="cu-bar bg-white solid-bottom margin-top">
    <view class="action">
      <text class="cuIcon-title text-blue"></text>Step 2: Select an image processing scheme
    </view>
  </view>
  <view class="bg-white">
    <scroll-view scroll-x class="bg-white nav">
      <view class="flex text-center">
        <view class="cu-item flex-sub {{style==currentStyle?'text-orange cur':''}}" wx:for="{{styleList}}"
          wx:for-index="style" bindtap="changeStyle" data-style="{{style}}">
          {{style}}
        </view>
      </view>
    </scroll-view>
  </view>
  <view class="padding-sm bg-white solid-bottom">
    <view class="cu-avatar round xl bg-{{item.color}} margin-xs" wx:for="{{styleList[currentStyle].detailList}}"
      wx:for-index="substyle" bindtap="changeStyle" data-substyle="{{substyle}}" bindlongpress="showModal" data-target="Image">
      <view class="cu-tag badge cuIcon-check bg-grey" hidden="{{currentSubStyle == substyle ? false : true}}"></view>
      <text class="avatar-text">{{substyle}}</text>
    </view>
    <view class="text-right padding-top text-gray">* Long press the style circle to preview the template effect</view>
  </view>
  <view class="padding-sm bg-white solid-bottom">
    <button class="cu-btn block bg-blue margin-tb-sm lg" bindtap="getNewPhoto" disabled="{{!userChosePhoho}}"
      type="">{{ userChosePhoho ? (getPhotoStatus ? 'AI will take a while' : 'Generate image') : 'Please select an image first' }}</button>
  </view>

  <view class="cu-bar bg-white solid-bottom margin-top" hidden="{{!resultPhoto}}">
    <view class="action">
      <text class="cuIcon-title text-blue"></text>Generated result
    </view>
  </view>
  <view class="padding-sm bg-white solid-bottom" hidden="{{!resultPhoto}}">
    <view wx:if="{{resultPhoto == 'error'}}">
      <view class="text-center padding-top">The service is temporarily unavailable. Please try again later.</view>
      <view class="text-center padding-top">Or add the developer's WeChat: <text class="text-blue" data-data="zhihuiyushaiqi" bindtap="copyData">zhihuiyushaiqi</text></view>
    </view>
    <view wx:else>
      <view class="images">
        <image src="{{resultPhoto}}" mode="aspectFit" bindtap="previewImage" bindlongpress="saveImage" data-image="{{resultPhoto}}"></image>
      </view>
      <view class="text-right padding-top text-gray">* Click the image to preview, long press the image to save.</view>
    </view>
  </view>

  <view class="padding bg-white margin-top margin-bottom">
    <view class="text-center">Proud to build with Serverless Devs</view>
    <view class="text-center">Powered By Anycodes <text bindtap="showModal" class="text-cyan" data-target="Modal">{{"<"}}Author's words{{">"}}</text></view>
  </view>

  <view class="cu-modal {{modalName=='Modal'?'show':''}}">
    <view class="cu-dialog">
      <view class="cu-bar bg-white justify-end">
        <view class="content">Author's words</view>
        <view class="action" bindtap="hideModal">
          <text class="cuIcon-close text-red"></text>
        </view>
      </view>
      <view class="padding-xl text-left">
        Hello everyone, I am Liu Yu. Thank you very much for your attention and for using this Mini Program. It is an avatar generation gadget I made in my spare time, based on "artificial stupidity" technology. It is still awkward right now, but I will try my best to make it more "intelligent". If you have any suggestions, please contact me by <text class="text-blue" data-data="service@52exe.cn" bindtap="copyData">Email</text> or <text class="text-blue" data-data="zhihuiyushaiqi" bindtap="copyData">WeChat</text>. It is worth mentioning that this project is based on the Alibaba Cloud Serverless architecture and is built with the Serverless Devs developer tools.
      </view>
    </view>
  </view>

  <view class="cu-modal {{modalName=='Image'?'show':''}}">
    <view class="cu-dialog">
      <view class="bg-img" style="background-image: url('{{previewStyle}}');height:200px;">
        <view class="cu-bar justify-end text-white">
          <view class="action" bindtap="hideModal">
            <text class="cuIcon-close "></text>
          </view>
        </view>
      </view>
      <view class="cu-bar bg-white">
        <view class="action margin-0 flex-sub solid-left" bindtap="hideModal">Close preview</view>
      </view>
    </view>
  </view>
</scroll-view>

The page logic is simple:

// index.js
// Get the application instance
const app = getApp()

Page({
  data: {
    styleList: {},
    currentStyle: "Anime style",
    currentSubStyle: "v1 model",
    userChosePhoho: undefined,
    resultPhoto: undefined,
    previewStyle: undefined,
    getPhotoStatus: false
  },
  // Event handler
  bindViewTap() {
    wx.navigateTo({
      url: '../logs/logs'
    })
  },
  onLoad() {
    const that = this
    wx.showLoading({
      title: 'Loading',
    })
    // Load the style list; the first style and sub-style become the defaults
    app.doRequest(`system/styles`, {}, {
      method: "GET"
    }).then(function (result) {
      wx.hideLoading()
      that.setData({
        styleList: result,
        currentStyle: Object.keys(result)[0],
        currentSubStyle: Object.keys(result[Object.keys(result)[0]].detailList)[0],
      })
    })
  },
  changeStyle(attr) {
    this.setData({
      "currentStyle": attr.currentTarget.dataset.style || this.data.currentStyle,
      "currentSubStyle": attr.currentTarget.dataset.substyle || Object.keys(this.data.styleList[attr.currentTarget.dataset.style].detailList)[0]
    })
  },
  chosePhoto() {
    const that = this
    wx.chooseImage({
      count: 1,
      sizeType: ['compressed'],
      sourceType: ['album', 'camera'],
      // success (not complete) so a cancelled selection does not read tempFilePaths
      success(res) {
        that.setData({
          userChosePhoho: res.tempFilePaths[0],
          resultPhoto: undefined
        })
      }
    })
  },
  headimgHD(imageUrl) {
    imageUrl = imageUrl.split('/'); // Split the avatar path into an array
    // Convert a trailing 46 || 64 || 96 || 132 to 0
    if (imageUrl[imageUrl.length - 1] && (imageUrl[imageUrl.length - 1] == 46 || imageUrl[imageUrl.length - 1] == 64 || imageUrl[imageUrl.length - 1] == 96 || imageUrl[imageUrl.length - 1] == 132)) {
      imageUrl[imageUrl.length - 1] = 0;
    }
    imageUrl = imageUrl.join('/'); // Re-join into a string
    return imageUrl;
  },
  getUserAvatar() {
    const that = this
    wx.getUserProfile({
      desc: "Get your avatar",
      success(res) {
        const newAvatar = that.headimgHD(res.userInfo.avatarUrl)
        wx.getImageInfo({
          src: newAvatar,
          success(res) {
            that.setData({
              userChosePhoho: res.path,
              resultPhoto: undefined
            })
          }
        })
      }
    })
  },
  previewImage(e) {
    wx.previewImage({
      urls: [e.currentTarget.dataset.image]
    })
  },
  editImage() {
    const that = this
    wx.editImage({
      src: this.data.userChosePhoho,
      success(res) {
        that.setData({
          userChosePhoho: res.tempFilePath
        })
      }
    })
  },
  getNewPhoto() {
    const that = this
    wx.showLoading({
      title: 'Generating image',
    })
    this.setData({
      getPhotoStatus: true
    })
    // Send the selected image (base64) and model name to the backend
    app.doRequest(this.data.styleList[this.data.currentStyle].detailList[this.data.currentSubStyle].uri, {
      style: this.data.styleList[this.data.currentStyle].detailList[this.data.currentSubStyle].name,
      image: wx.getFileSystemManager().readFileSync(this.data.userChosePhoho, "base64")
    }, {
      method: "POST"
    }).then(function (result) {
      wx.hideLoading()
      that.setData({
        resultPhoto: result.error ? "error" : result.photo,
        getPhotoStatus: false
      })
    })
  },
  saveImage() {
    wx.saveImageToPhotosAlbum({
      filePath: this.data.resultPhoto,
      success(res) {
        wx.showToast({
          title: "Saved"
        })
      },
      fail(res) {
        wx.showToast({
          title: "Error, try again later"
        })
      }
    })
  },
  onShareAppMessage: function () {
    return {
      title: "头头是道个性头像",
    }
  },
  onShareTimeline() {
    return {
      title: "头头是道个性头像",
    }
  },
  showModal(e) {
    if (e.currentTarget.dataset.target == "Image") {
      const previewSubStyle = e.currentTarget.dataset.substyle
      const previewSubStyleUrl = this.data.styleList[this.data.currentStyle].detailList[previewSubStyle].preview
      if (previewSubStyleUrl) {
        this.setData({
          previewStyle: previewSubStyleUrl
        })
      } else {
        wx.showToast({
          title: "No preview available",
          icon: "error"
        })
        return
      }
    }
    this.setData({
      modalName: e.currentTarget.dataset.target
    })
  },
  hideModal(e) {
    this.setData({
      modalName: null
    })
  },
  copyData(e) {
    wx.setClipboardData({
      data: e.currentTarget.dataset.data,
      success(res) {
        wx.showModal({
          title: 'Copied',
          content: `Copied ${e.currentTarget.dataset.data} to the clipboard`,
        })
      }
    })
  },
})

The project requests multiple backend interfaces, so I abstracted the request method:

// Unified request interface
doRequest: async function (uri, data, option) {
  const that = this
  return new Promise((resolve, reject) => {
    wx.request({
      url: that.url + uri,
      data: data,
      header: {
        "Content-Type": 'application/json',
      },
      method: option && option.method ? option.method : "POST",
      success: function (res) {
        resolve(res.data)
      },
      fail: function (res) {
        reject(null)
      }
    })
  })
}

After completion, configure the backend interface for the Mini Program and submit it for review and release.
