By digoal

A bank’s primary function is to issue loans, but the bank is not the only loan provider out there. Companies with abundant capital are also capable of providing loans, such as insurance companies.

In addition to loans, angel investment, A round financing, and B round financing, all of these financial options require similar decision making. A decision-making system should support these investment decisions.

Contrary to the loan business that provides money, some business models attract money. For example, financial management products, stock markets, and games all want to attract and keep money in their accounts. Therefore, companies with abundant capital or that attract money are capable of issuing loans.

Lending is not easy because loan applicants are various, so strict screening is required for lending. Otherwise, bad accounts will be inevitable.

This article discusses matters related to lending, review, risk control, and public opinion systems.

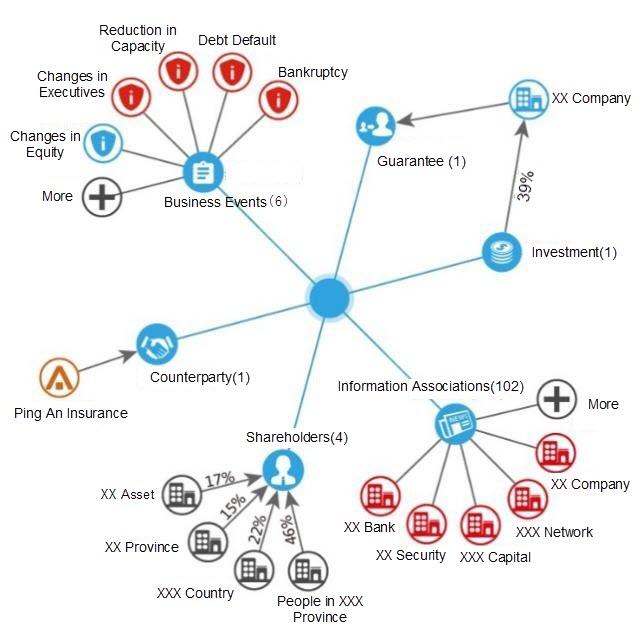

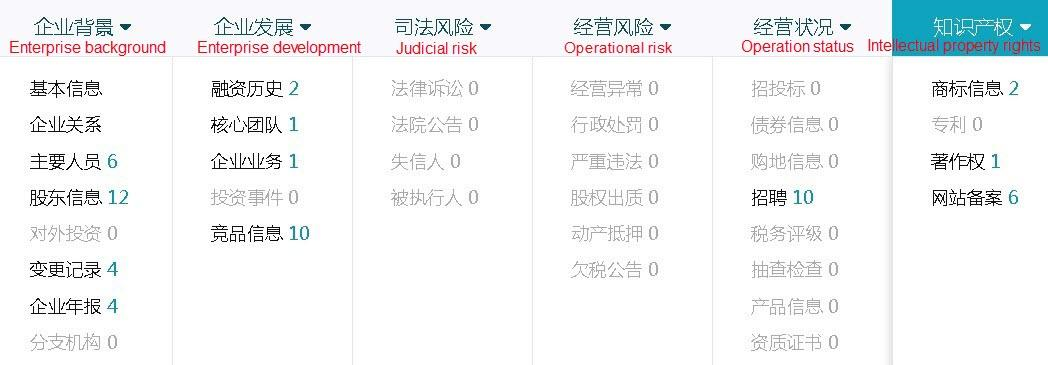

Display the company's profile such as share structure, public opinion events, leadership, intellectual property, and the state of operation to facilitate censor’s review.

Information from web crawlers or partners, like a recruitment website or the local tax bureau, constitutes the company's information network.

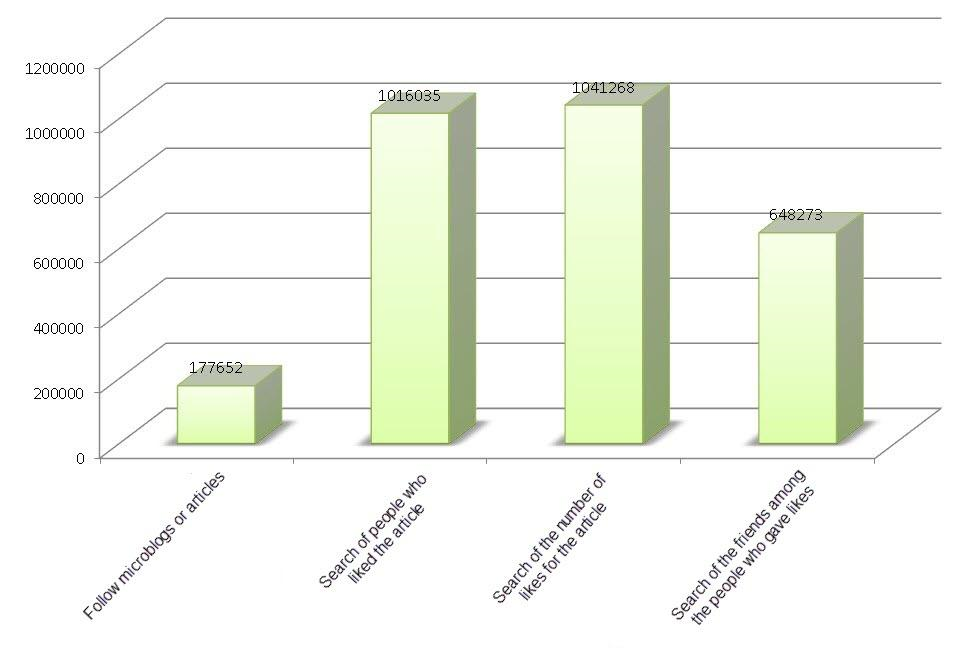

This type of data is very similar to social business data. When enterprise A invests in enterprise B, we can assume that A gives a "like" to B on a social media platform. When enterprise A publishes a financial report, we can regard it as microblogging.

Relevant Performance Indicators:

1) 100 million users are equivalent to 100 million business directories. 1 to 10,000 friends are equivalent to 1 to 10,000 enterprises associated with each enterprise on average. 1 to 1 million likes of a single object are equivalent to 1 to 1 million likes of a single event on average.

2) Follow microblogs or articles

The predicted optimization is up to 300,000/s with the current speed of 177,000/s.

3) Search of people who liked the article: 1,016,000/s

4) Search of the number of likes for the article: 1,041,000/s

5) Search of the friends among the people who gave likes: 648,000/s

6) Machine:

- X86 at a price of about RMB 100,000

- twelve 8 TB SATA hard drive

- one SSD as BCACHE

Compared with the internet social business data, company data is relatively static. Therefore, we should focus more on query performance, finding a company’s data performance step by step.

Take the following case of graph search as an example.

Relevant Performance Indicators:

1) In every 100 million company directories, 50 thousand serve as an enterprise group. Each company is associated with 1,000 other companies to form a super extensive network of 100 billion companies.

Taking one company as the center, we can search through the company network of three layers with a 15ms response time.

The number of companies: millions

We predict that there are 1,000 pieces of data for each company's relationship, public opinion events, financial reports, etc.

On average, each company is directly associated with 50 other companies.

The number of individuals (legal representatives, stockholders, supervisor, etc.): millions

Each person is associated with several companies.

The total data volume is about 1 billion.

1) The number of relatively static data (individuals, company information, prominent members, stockholders, etc.): millions

For one-to-many data, such as primary member IDs and stockholder IDs, use array type to improve query efficiency.

create table corp_info (...) ; --企业相对静态信息

create table people_info (...) ; --个人相对静态信息 We recommend distributing the static data into multiple tables and associate them with PK to decrease the amount of data scanned when only querying a few fields. Since PostgreSQL uses a row storage format, fewer fields can reduce IO amplification and improve the hit rate.

2) The number of public opinion and flow data: billions

create table corp_feed (corp_id pk, typ int, event); 3) The number of relational data: 10 - 100 million

We don't need to consider the positive and inverse relation of storage redundancy at this magnitude, which facilitates the sharding of FDW and HybridDB.

create table corp_rel (...) ; 4) Online to offline

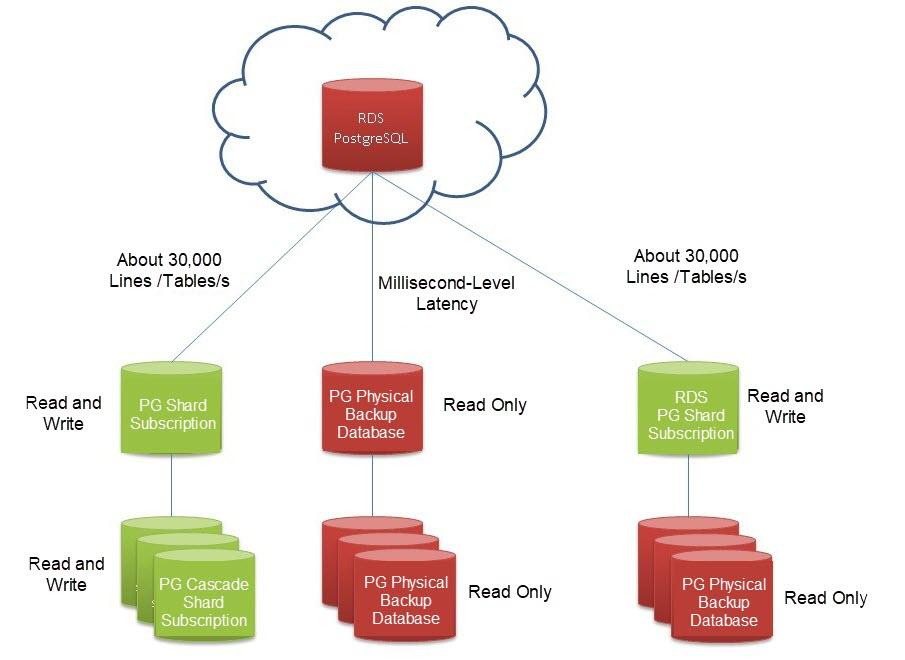

We can copy the data in Alibaba Cloud to an offline database via logical subscription and physical stream replication.

Logical subscription supports table-level subscription. Each table’s subscription speed is about 30,000 rows per second when the table is in an independent subscription channel. It can also support some data in the subscription table through rules or triggers and even implement operations such as format conversion. The standby database in the logical subscription can be read and written, but a large transaction delay in the logical subscription is higher than that in the physical replication.

Physical stream replication supports full database replication and has a low delay, while the standby database is read-only.

The following explains the need for RDS standby databases:

Due to the SOX audit requirement, some companies have to create a standby node in their data centers.

Some companies want to establish daily development or test environments through this method.

For example, all data is stored in the cloud due to architecture requirements, while some acceleration data is stored offline. Also, all data in the cloud is split across multiple databases and needs to be merged for analysis. Therefore, for many-to-one replications, it can be achieved through PostgreSQL’s logical subscription feature.

5) Sharding

Although sharding is not required for the scenarios and data volume involved in this article (based on subsequent tests, RDS PostgreSQL single database supports 100 billion data points, so stability and performance are good), we can perform sharding based on the queried ID. If the positive and inverse relations are provided, it can be made through redundant storage.

There are many examples of PostgreSQL Sharding:

- Sharding supported by a kernel layer

- Sharding supported by plproxy proxy

- Sharding and mpp supported by [citus plug-in](https://github.com/citusdata/citus)

6) Sharding supported by client proxy

- https://github.com/dangdangdotcom/sharding-jdbc

- https://github.com/go-pg/sharding/

create table corp_info ( -- 企业信息

id int primary key, -- 企业ID,主键

info text, -- 企业信息

core_team int[] -- 核心团队成员ID

-- 忽略其他字段

);

create table people_info ( -- 人信息

id int primary key, -- 人ID,主键

info text, -- 信息

rel_corp int[], -- 和哪些公司相关

rel_type int[] -- 分别是什么关系(法人、建立、董事长,。。。。)

-- 忽略其他字段

);

create table corp_rel1 ( -- 企业正向关系

corp_id1 int, -- 企业ID

corp_id2 int, -- 企业ID

reltypid int -- 关系类型

);

create index idx_corp_rel1 on corp_rel1 (corp_id1);

create table corp_rel2 ( -- 企业反向关系

corp_id1 int, -- 企业ID

corp_id2 int, -- 企业ID

reltypid int -- 关系类型

);

create index idx_corp_rel2 on corp_rel2 (corp_id1);

create table corp_event ( -- 企业舆情

corp_id int, -- 企业ID

event text, -- 事件内容

crt_time timestamp -- 时间

-- 其他字段略

);

create index idx_corp_event_1 on corp_event(corp_id, crt_time desc); 10,420 million test data points are generated. The following is the data composition and generation method:

-- 100万 企业数据

insert into corp_info select generate_series(1,1000000), 'test', array(select (random()*1000000)::int from generate_series(1,20));

-- 100万 人数据

insert into people_info select generate_series(1,1000000), 'test', array(select (random()*1000000)::int from generate_series(1,20)), array(select (random()*50)::int from generate_series(1,20));

-- 2000万 企业正向关系

insert into corp_rel1 select random()*1000000, random()*1000000, random()*100 from generate_series(1,20000000);

-- 2000万 企业反向关系

insert into corp_rel2 select random()*1000000, random()*1000000, random()*100 from generate_series(1,20000000);

-- 10亿 企业舆情

insert into corp_event select random()*1000000, 'test', now()+(id||' second')::interval from generate_series(1,1000000000) t(id); Since the data is relatively static, we can cluster it to improve query efficiency. If not, the response time is in milliseconds, but we can reduce the response time to a few percentage points of a millisecond if we do.

cluster corp_rel1 using idx_corp_rel1;

cluster corp_rel2 using idx_corp_rel2;

cluster corp_event using idx_corp_event_1; 1) Query company static data.

vi test.sql

\set id random(1000001,2000000)

select * from corp_info where id=:id;

select * from people_info where id = any (array(select core_team from corp_info where id=:id));

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 48 -j 48 -T 100

transaction type: ./test.sql

scaling factor: 1

query mode: prepared

number of clients: 4

number of threads: 4

duration: 120 s

number of transactions actually processed: 4810415

latency average = 11.361 ms

latency stddev = 0.080 ms

tps = 1464.338375 (including connections establishing)

tps = 1056.298691 (excluding connections establishing)

script statistics:

statement latencies in milliseconds:

0.001 \set id random(1000001,2000000)

0.068 select * from corp_info where id=:id;

0.444 select * from people_info where id = any (array(select core_team from corp_info where id=:id));2) Query both positive and inverse relationship of company relationship data.

vi test1. SQL

\set id random(1000001,2000000)

select * from corp_rel1 where corp_id1=:id;

select * from corp_rel2 where corp_id1=:id;

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 48 -j 48 -T 100

transaction type: ./test.sql

scaling factor: 1

query mode: prepared

number of clients: 4

number of threads: 4

duration: 120 s

number of transactions actually processed: 633784

latency average = 11.361 ms

latency stddev = 0.032 ms

tps = 58301.468890 (including connections establishing)

tps = 1056.298691 (excluding connections establishing)

script statistics:

statement latencies in milliseconds:

0.001 \set id random(1000001,2000000)

0.077 select * from corp_rel1 where corp_id1=:id;

0.077 select * from corp_rel2 where corp_id1=:id;3) Query 10 recent data pieces of company public opinion.

vi test2. SQL

\set id random(1000001,2000000)

select * from corp_event where corp_id=:id order by crt_time desc limit 10;

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 48 -j 48 -T 100

transaction type: ./test.sql

scaling factor: 1

query mode: prepared

number of clients: 4

number of threads: 4

duration: 120 s

number of transactions actually processed: 4810415

latency average = 11.361 ms

latency stddev = 0.020 ms

tps = 1056.253960 (including connections establishing)

tps = 1056.298691 (excluding connections establishing)

script statistics:

statement latencies in milliseconds:

0.001 \set id random(1000001,2000000)

0.081 select * from corp_event where corp_id=:id order by crt_time desc limit 10;1) Performance

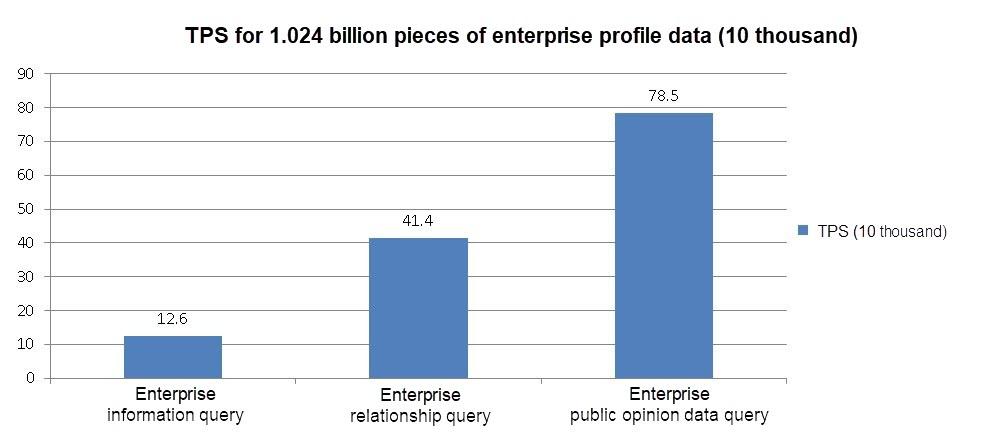

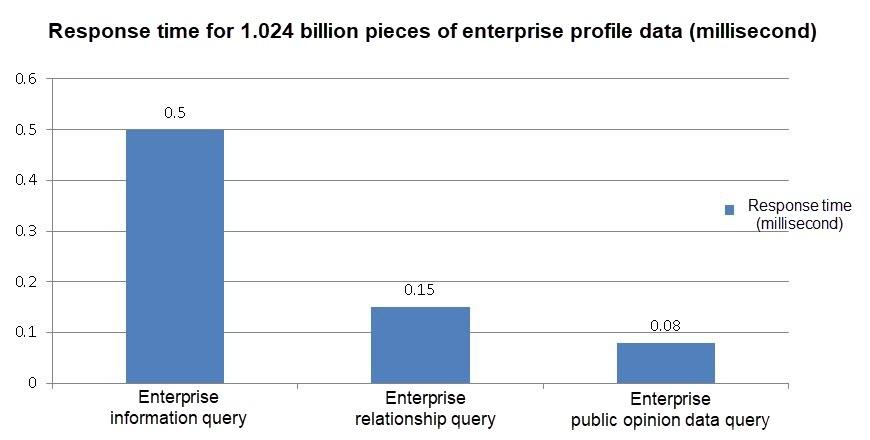

Querying company static data:

Querying both positive and inverse relationship of company relationship data:

Querying 10 recent data pieces of company public opinion:

2) With ApsaraDB RDS for PostgreSQL, customers need not be concerned about basic issues like operation & maintenance, disaster tolerance, backup recovery, resize, reduction, and HA. They can focus more on business.

3) When you analyze demands, PostgreSQL 10 features like multi-core parallel threads, JIT, and operator multiplex can easily process 1 billion data.

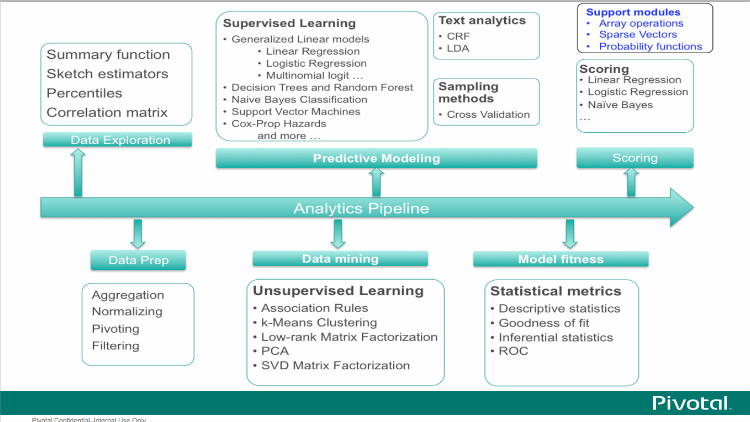

4) Machine Learning Requirement

ApsaraDB RDS for PostgreSQL provides a machine learning plug-in called MADlib. It supports mining methods like classification, regression, clustering, topic modeling, association rule mining, descriptive statistics, and validation.

5) Online to Offline

Through the stream replication and logical subscription interface provided by RDS for PostgreSQL, you can copy data to the remote end or multiple RDS to flexibly combine with the business. It achieves a flexible architecture, including multi-master support.

6) Combination of Alibaba Cloud Products

With OSS, HybridDB for PostgreSQL, and ApsaraBD RDS for PostgreSQL, we can construct a business data loop including StreamCompute, online business, and data analysis.

1) MADlib supports multiple machine learning algorithms and can help achieve machine learning for databases by running SQL statements.

2) Logical subscription allows copying multiple databases at the table level or row level. We can read or write to the copied slave nodes.

3) Physical stream replication allows copying multiple standby databases. Copied standby databases are read-only.

The following explains the need for RDS standby databases:

Due to the SOX audit requirement, some companies have to create a standby node in their data centers.

Some companies want to establish daily development or test environments through this method.

For example, all data is stored in the cloud due to architecture requirements, while some acceleration data is stored offline. Also, all data in the cloud is split across multiple databases and needs to be merged for analysis. Therefore, for many-to-one replications, it can be achieved through PostgreSQL logical subscription.

4) Array type is used to store one-to-many relationships. PostgreSQL supports highly efficient index search for arrays.

5) SQL StreamCompute allows performing operations in real time, such as data alerts, aggregation, and conversion. You can see its outstanding results in the PCC Competition.

1) Social scenario business

2) Demand analysis business relevant to graph search, such as financial risk control, criminal police detection, social relation, and networking analysis

In addition to banks, more and more companies are providing similar services concerning loans and investments.

Lending is not easy because loan applicants are various, so strict screening is required for lending. Otherwise, bad accounts will be inevitable.

Various information including individual information, company information, and public opinion information can be obtained from crawlers and partners. An effective combination of this information can help lenders and investors quickly decide whether or not to lend or invest.

The combination of ApsaraDB RDS for PostgreSQL, AnalyticDB for PostgreSQL, and OSS reduces the burden of database maintenance, HA, disaster tolerance, resize, and reduction. In this way, customers can focus on application development to improve efficiency.

Often, the performance is far better than expected. For typical loan businesses, hundreds of thousands of queries per second is enough.

Uninterrupted Database Switchover Using Session Resource Migration

Alipay Technology - December 26, 2019

Ellen Cibula - January 18, 2023

Alibaba Cloud Community - February 28, 2022

ApsaraDB - May 19, 2025

ApsaraDB - January 15, 2026

Alibaba Clouder - August 12, 2020

AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Financial Services Solutions

Financial Services Solutions

Alibaba Cloud equips financial services providers with professional solutions with high scalability and high availability features.

Learn MoreMore Posts by digoal