By Digoal

The financial industry relies heavily on shared storage for zero data loss, high availability, and geo-disaster recovery.

Can PostgreSQL's replication-based solution achieve zero data loss, high availability, and geo-disaster recovery?

It is entirely possible, more flexible, and reliable.

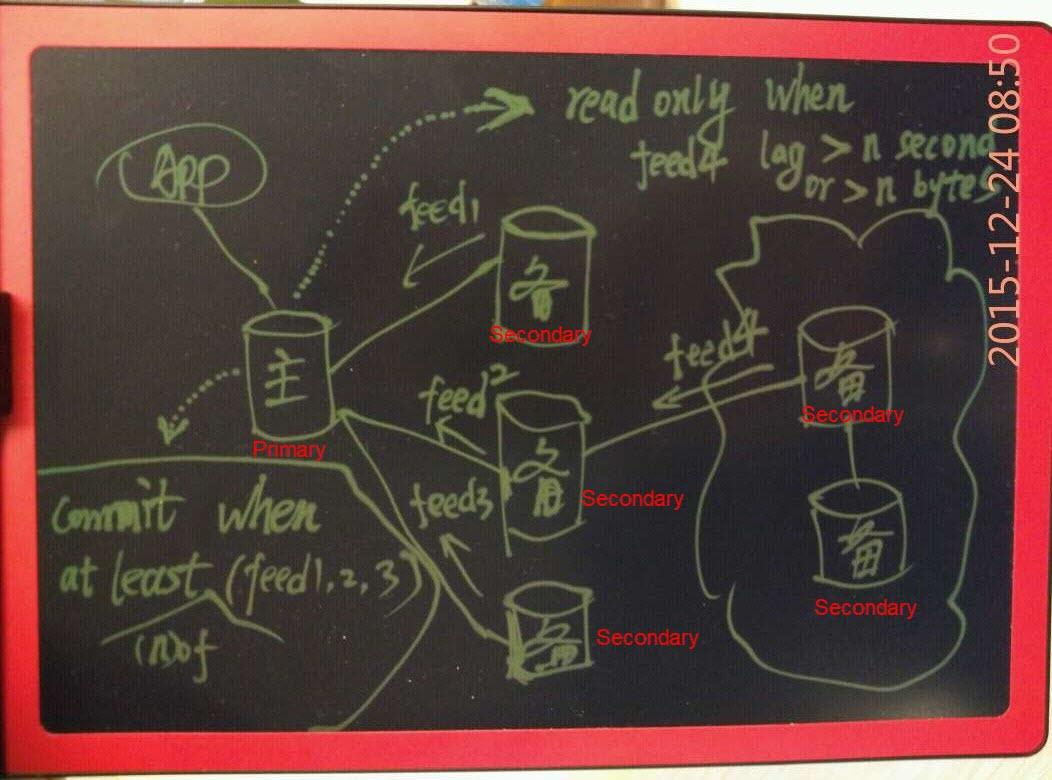

The primary/secondary synchronization is implemented through the streaming replication. Reliability indicators include feedback indicators and consistency indicators.

1) L1: The secondary databases receive the redo logs and write them into the xlog buffer.

2) L2: The secondary databases receive the redo logs and write them to storage.

3) L3: The secondary databases recover after receiving the redo logs.

With the preceding three indicators, the transaction latency is increased step by step (set according to the reliability strength requirements).

1) Notify the application transactions to commit success or failure after both conditions are met:

2) If the asynchronous database has a delay of seconds, the primary database is switched to read-only. (If this parameter is not configured, the primary database is active regardless of the asynchronous database's delay.)

3) If the asynchronous database delays n bytes, the primary database is switched to read-only mode. (If this parameter is not configured, the primary database is switched regardless of the asynchronous database's delay)

If you also need to connect the remote disaster recovery database without any data loss, change the cascade connection between the two remote asynchronous databases in Figure 1 to the direct connection to the primary database, and configure these two remote asynchronous databases as a group. This database group must have one database to give feedback.

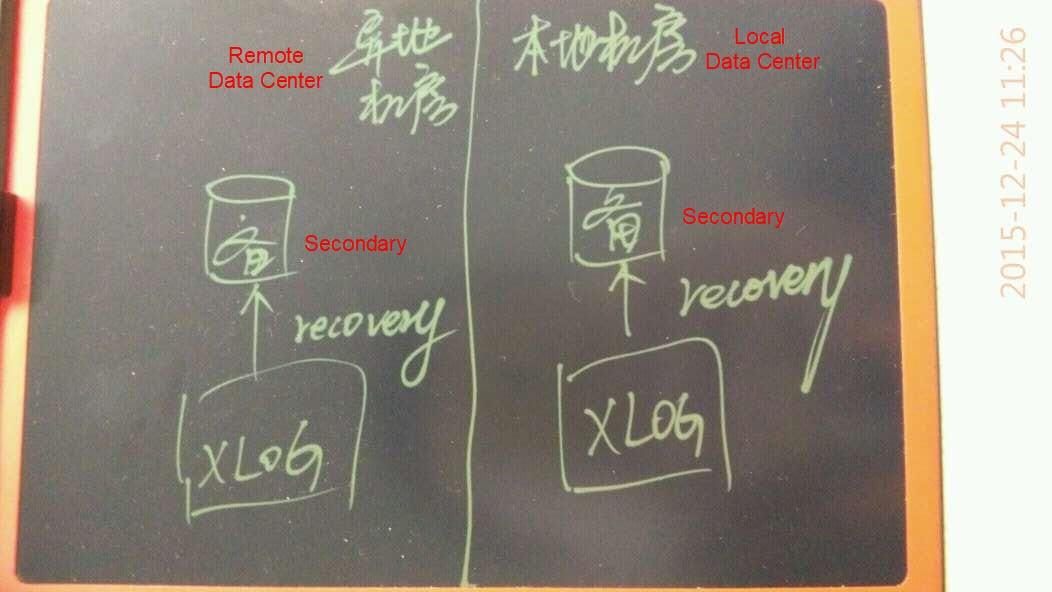

The redo logs actually ensure zero data loss. You can restore the data to the final consistency state as long as the redo logs are not lost.

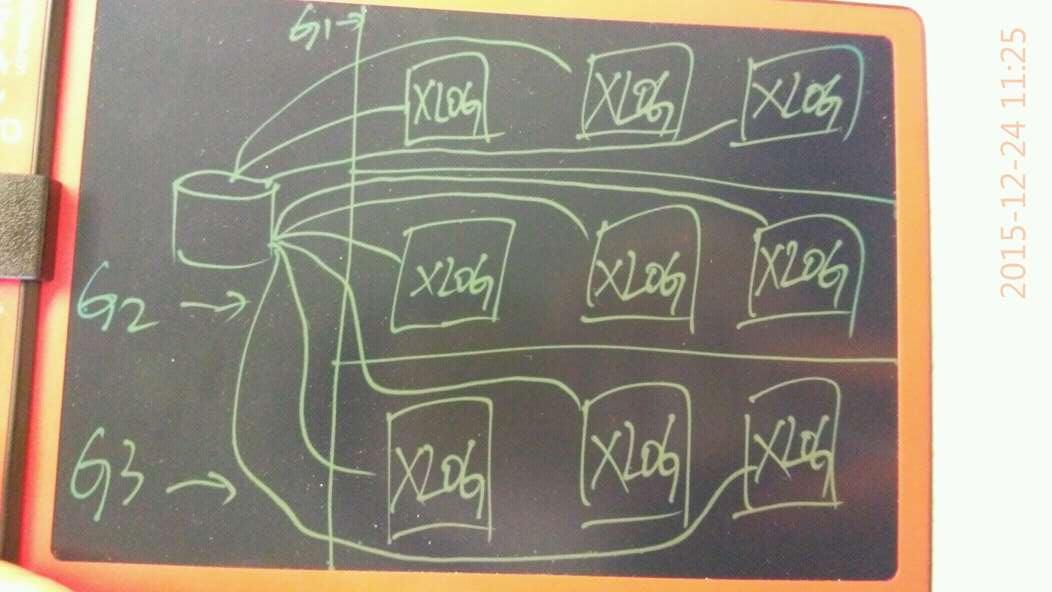

The architecture in Figure 1 can be simplified into the xlog multi-group synchronization mode as follows. Each group represents one data center (including the local data center).

1) The local strong synchronous mode

You must at least synchronize one xlog file in the local data center.

2) The strong synchronous mode for geo-disaster recovery

You must at least synchronize one xlog file in a remote data center.

We recommend that two replicas need to be deployed in the local data center.

You should deploy one replica in the remote data center. You can add one more replica with high-reliability requirements.

This storage class is used by the financial industry or users with high-reliability requirements.

The local data centers:

The local data center has one primary database and three secondary databases and connects to the 10 GE network.

Configure the feedback indicators as two L1 and one L2. The consistency requirement is configured to have more than one host give feedback.

The local and remote data centers are directly connected by the physical separation of optical fibers.

The remote data centers:

The two secondary databases are directly connected to the primary database and configured as the strong synchronous group. At least one feedback must be provided, and both feedback indicators must be configured as L1.

1) The number of strong synchronous replicas is increased to two. One of the two replicas is deployed in the remote data center.

2) In the local data center, one secondary database can connect to two servers. This does not affect the primary database's operation.

3) In the remote data center, one secondary database can connect to one server. This does not affect the primary database's operation.

1) PostgreSQL does not affect the reading.

2) Its impact on the performance of write transactions depends entirely on the network. In a 10 GE network environment, the request latency of write transactions increases by less than 1 ms, with minimal impact.

3) PostgreSQL performs data synchronization based on physical changes. Therefore, the latency between the primary and secondary databases of logical replication does not exist, and the latency of physical streaming replication can be controlled within milliseconds.

4) What is the high availability failover latency when a fault occurs?

Considering factors such as the network jitter and load, the entire switching process is generally controlled between 25 and 45 seconds.

5) Should the application re-establish a session with the database when a failover occurs?

The proxy solution is available, which can continuously open user connections during high availability switching and is transparent to the user. However, if a variable is bound, it needs to be re-bound, and the synchronization of session information will be solved in the future.

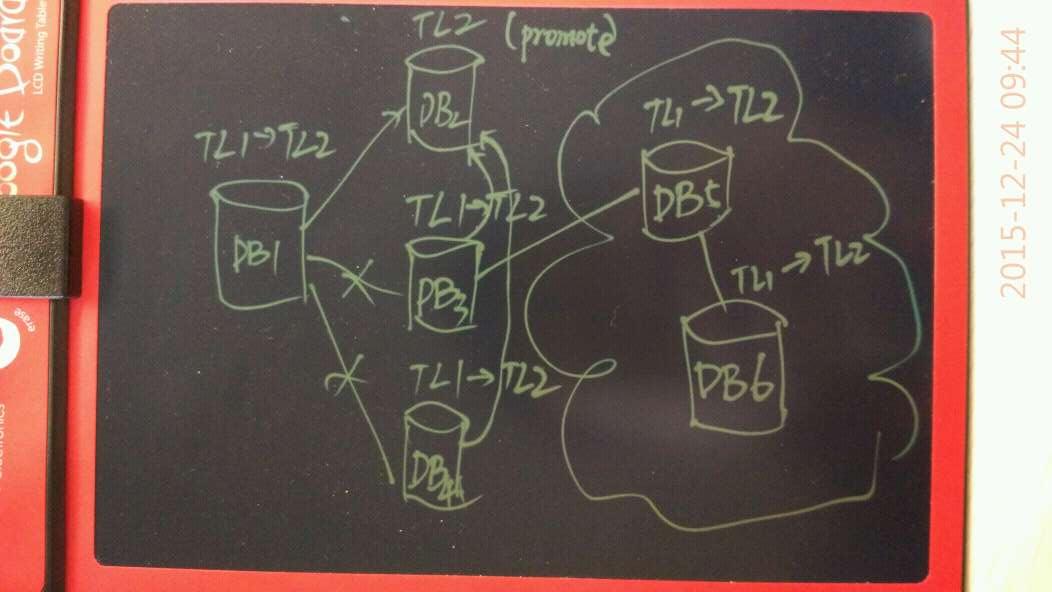

1) You can select from the databases that have reached the synchronous mode according to the configuration priority order.

2) If you configure the full asynchronous mode, you can select from the databases with the lowest latency.

3) If the databases are with the same latency, you can select according to the order of configuration priorities.

After a new primary database is elected, the connection relationship is re-established.

1) The secondary databases can be provided for read-only query, read/write splitting, and load balancing.

2) The timeline switching is very convenient, and the primary/secondary role switching is very flexible.

3) You can also use the secondary databases for drills or upgrading to a major version, quickly set up the test environment, and quickly establish the sample database.

You cannot achieve these in any of the shared storage solutions.

If the strong synchronization is not set up in the remote data center, and the data center to which the primary database belongs fails, switching to the remote data center poses a data loss risk. However, users may decide to switch to the remote data center for availability.

The following issues arise when the data center, to which the primary database belongs, is activated:

1) How to retrieve the data that is not completely synchronized to the remote data center?

2) How to quickly turn the original primary database into a secondary database? Does it need resynchronizing the data and building the primary/secondary database?

1) PostgreSQL's logical decode helps you solve the first issue.

The logical decode can help users extract too many SQL statements executed by the primary database from the time of the remote data center's activation. Meanwhile, it can help extract the rows, old records, and records affected by SQL statements.

With this data, you can resolve the differences in retroactive data at the business level.

2) PostgreSQL's pg_rewind helps you solve the second issue.

How fast? If we assume that the latency between the primary database and the secondary database is minutes during the failover, regardless of the database's size, the rollback can be completed in minutes. And the database becomes a secondary database.

The mechanism is that xlog is generated when the remote data center is activated to the active node crashes. The xlog will be replaced with the data blocks of the primary database.

This is beyond the capabilities of Oracle.

The costs are for the PostgreSQL solution with high local availability and a disaster recovery data center (assuming that users have a single database with 10TB data and a REDO table with 100GB data retained).

The costs are as follows:

1) Two data copies in the local data center (two hosts including the primary database).

2) Three xlog files in the local data center (reuse the above hosts and add one more).

3) One data copy in the remote data center (one host).

4) Two xlog copies in the remote data center (reuse the above hosts and add one more).

A total of five hosts.

The disk can store three data copies (30TB) and five redo log files (500GB).

1) Two local xlogs receive services. If one xlog hangs up, it does not affect the write operation of the primary database.

2) Two local xlogs receive services. If one xlog hangs up, it does not affect the write operation of the primary database.

3) If the primary database fails, services are automatically switched to the local secondary database.

4) When the data center fails, services are automatically switched to the local secondary database.

PostgreSQL: Performing Linear Regression Analysis to Predict Future Data (Part 2)

PostgreSQL: Stock Price Prediction Using Multiple Linear Regression Analysis

Alibaba Clouder - January 28, 2019

Iain Ferguson - April 28, 2022

Alibaba Clouder - July 22, 2020

Alibaba Clouder - February 8, 2021

afzaalvirgoboy - February 25, 2020

Alibaba Clouder - November 4, 2020

Application High Availability Service

Application High Availability Service

Application High Available Service is a SaaS-based service that helps you improve the availability of your applications.

Learn More Security Center

Security Center

A unified security management system that identifies, analyzes, and notifies you of security threats in real time

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn MoreMore Posts by digoal