By Digoal

If you're a DBA in the IT industry, you may be working in a to-business (2B) data analytics company and may have designed a table (including user IDs and several well sorted property values) or have collected some user data and need to provide reports to clients. You may have also queried random property value combinations, and needed to quickly return the results to the client.

These are all common requirements at 2B data platform companies. Oftentimes, you cannot meet the requirements through modeling because the B-end requirements are unpredictable, and any combination of queries requires real-time response.

You may have billions of customer data records, and each of them may have hundreds of properties. Users may need query results for any combination of properties.

In terms of quick response, have you thought about creating indexes for query conditions?

For example,

where col1=? and col2=? and col3<>? or col4=?;

What is your plan for this kind of SQL? Creating an index for (col1,col2), and another for col4?

It is possible that the users may change their query conditions next time.

where col3=1 or col100=?

Creating indexes for col3, col100?

You may find yourself unable to optimize this at all, because there may be thousands of corresponding query index combinations.

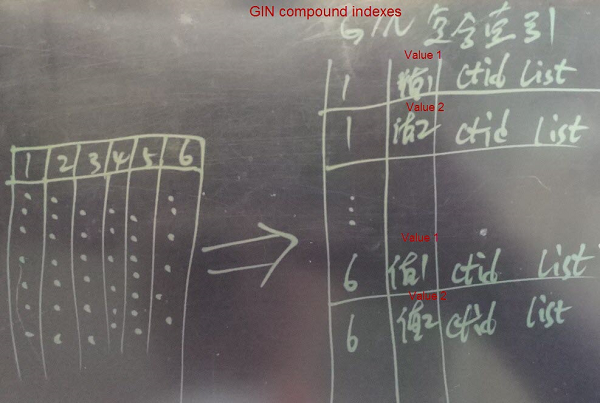

Create GIN composite indexes for fields to be used in queries.

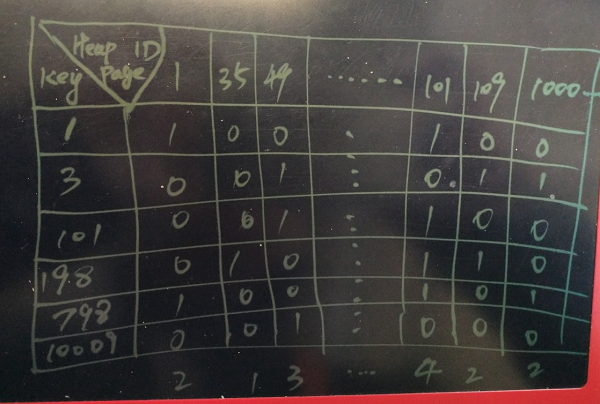

This method works for cases with random field combinations. For multiple query conditions, PostgreSQL uses index + bitmapAnd or bitmapOr to filter BLOCKs internally, to get the intermediate results.

+---------------------------------------------+

|100000000001000000010000000000000111100000000| bitmap 1

|000001000001000100010000000001000010000000010| bitmap 2

&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&&

|000000000001000000010000000000000010000000000| Combined bitmap

+-----------+-------+--------------+----------+

| | |

v v v

Used to scan the heap only for matching pages:

+---------------------------------------------+

|___________X_______X______________X__________|

+---------------------------------------------+

But what makes this method fast?

Because GIN indexes implement the bitmapAnd or bitmapOr internally, which is basically the same as creating a separate B-Tree index for each field (PostgreSQL also supports the merging of bitmapAnd and bitmapOr for multiple B-Tree indexes).

The GIN composite index method can meet the above needs, but when the amount of data or the number of columns becomes very large, the size of GIN indexes will be large, too.

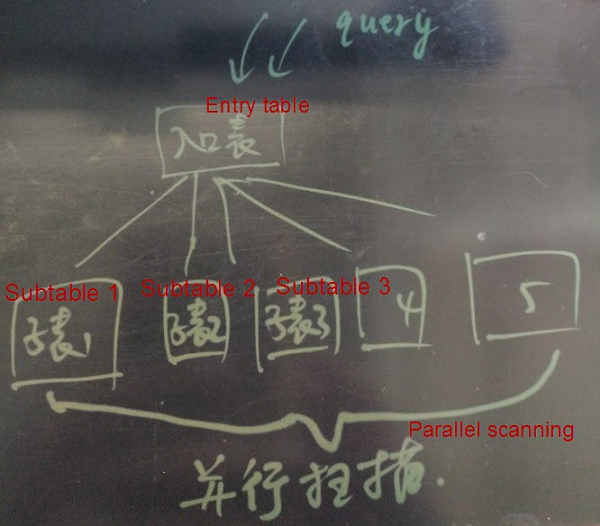

We recommend that you split the GIN indexes into multiple tables (such as random splitting or splitting based on mandatory conditions). This not only reduces the size of GIN indexes, but also allows you to use the multi-table parallelism of PostgreSQL 10 to improve query performance.

PostgreSQL supports both single-table multi-core parallel query and multi-table parallel query.

Single-table parallelism means that a single SQL statement, when processing data in a single table, can use multiple CPUs for computing.

Multi-table parallelism means that when an SQL statement involves the processing of multiple tables (such as APPEND SCAN), it can process the SCAN of multiple tables in parallel.

Multi-table parallelism was first included in PG 10 to support PostgreSQL 10 append scan parallelism

This method converts the entire row of records into a large string, and then creates a full-text index on this string (PostgreSQL has a built-in full-text indexing function), which covers cases involving any field combinations.

This method works for cases without specified columns, but with specified query criteria.

For example, when searching for "Dior perfume", this term can be matched in any fields of the table (such as store names, product names, and user names).

The Bloom filter method has limited effects, and is currently a preview feature. We recommend that you use it with caution.

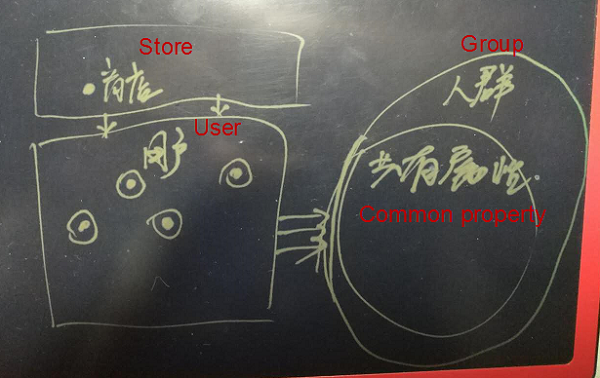

Each user corresponds to multiple tags, and merchants may filter user groups by tag combinations. This is a common practice for advertising companies.

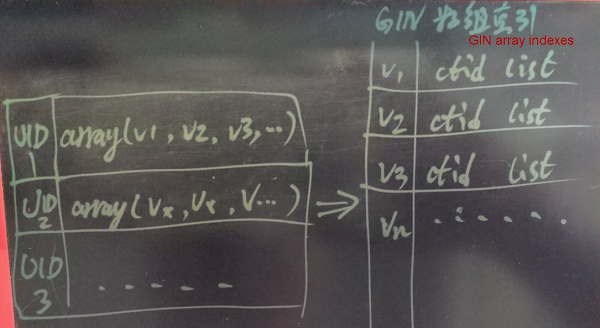

This mainly uses array types and inverted indexes of PostgreSQL, and provides excellent performance.

But what makes this method fast?

ARRAY elements are indexed in an inverted manner. When querying, it performs block-level BITMAP filtering on the query conditions. The filtered data falls to a small number of data blocks, which are rechecked to get the final result.

In fact, the case mentioned at the beginning of this article is very similar to the case of tagging people in the e-commerce industry. Therefore, we can use this method in this case.

What can we do?

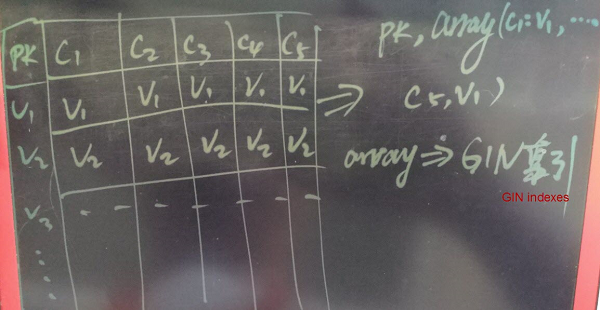

1. Convert multiple fields into an array

First, convert multiple fields into an array field.

For example:

create table test(uid int8 primary key, tag1 int, tag2 text, tag3 int, tag4 text, tag5 timestamp, tag6 date, ...);

is converted to

create table test(uid int8 primary key, tag text[]);

Example

1, 1, 'man', 1023, 'football', '2017-01-01 10:00:00', '1989-09-01'

is converted to

1, array['tag1:1', 'tag2:man', 'tag3:1023', 'tag4:football', 'tag5:...', tag6:...']

2. Cascade values (optional)

If there are queries other than =, <, and > (for fields such as age, sales, and income, there may be greater than or less than range query requirements), then we need to cascade values of the corresponding tags.

3. Split tables (optional)

Tables are split for parallelism, and to ensure the proper size of each table.

There are many methods to split tables, such as random splitting and hashing by UID.

After splitting tables, scan all partition tables and aggregate the results.

Table splitting can be done locally or across different databases. After splitting local tables, we get partition tables. Table splitting across different databases involves data distribution and aggregation.

There are also many cross-database data distribution and aggregation methods, such as postgres_fdw + pg_pathman, plproxy, and program implementation.

4. Create GIN indexes on arrays

Create GIN indexes on array fields. GIN indexes are equivalent to inverted B-tree indexes using array elements as keys, and row numbers as values.

For example, when searching for a user who has a certain tag, we can obtain the HEAP table row number from the GIN index, and then obtain the record. This is very fast.

If a query uses a combination of multiple tags, the BITMAP and/or merging is performed internally, the conditions will be filtered to the data block level, the records will be obtained from the data blocks, and the final results will be obtained by querying the condition FILTER. This is very fast, too.

5. Tagging people <=> combined array query

After converting multiple fields into arrays, tagging people is simplified to array operations. For example

where tag1=? and tag2=? or tag3=?

The procedure to convert them into arrays is as follows:

where arraycol @> array[tag1:?, tag2:?] or arraycol && [tag3:?]

Array query uses GIN index scanning, which is incredibly fast.

This uses the BIT method. When all property values can be enumerated, say all 1 million or whatever number of values can be enumerated, we can use this method to optimize the tagging application.

The BIT method requires 25 times less space than the array method, while maintaining stable performance.

However, the BIT method requires the data to be written in a merged form, preferably using a UDF. The actual case is as follows (including the demo code for data merge).

The varbitx method is exactly the same as the bitmap database pilosa. However, PG is recommended because it has more powerful functions.

The PostgreSQL BitmapAnd and BitmapOr merging action can also be triggered when we use independent indexes on multiple fields. Therefore, the query is still very efficient.

For example, we have 31 fields and 100 million records. The fields are inserted randomly, and the values of fields range gradually from 1000 to 1 million.

postgres=# create table test(

id serial8 primary key,

c1 int, c2 int, c3 int, c4 int, c5 int,

c6 int, c7 int, c8 int, c9 int, c10 int,

c11 int, c12 int, c13 int, c14 int, c15 int,

c16 int, c17 int, c18 int, c19 int, c20 int,

c21 int, c22 int, c23 int, c24 int, c25 int,

c26 int, c27 int, c28 int, c29 int, c30 int

);

create index idx_test_1 on test(c1);

create index idx_test_2 on test(c2);

create index idx_test_3 on test(c3);

create index idx_test_4 on test(c4);

create index idx_test_5 on test(c5);

create index idx_test_6 on test(c6);

create index idx_test_7 on test(c7);

create index idx_test_8 on test(c8);

create index idx_test_9 on test(c9);

create index idx_test_10 on test(c10);

create index idx_test_11 on test(c11);

create index idx_test_12 on test(c12);

create index idx_test_13 on test(c13);

create index idx_test_14 on test(c14);

create index idx_test_15 on test(c15);

create index idx_test_16 on test(c16);

create index idx_test_17 on test(c17);

create index idx_test_18 on test(c18);

create index idx_test_19 on test(c19);

create index idx_test_20 on test(c20);

create index idx_test_21 on test(c21);

create index idx_test_22 on test(c22);

create index idx_test_23 on test(c23);

create index idx_test_24 on test(c24);

create index idx_test_25 on test(c25);

create index idx_test_26 on test(c26);

create index idx_test_27 on test(c27);

create index idx_test_28 on test(c28);

create index idx_test_29 on test(c29);

create index idx_test_30 on test(c30);

postgres=# alter sequence test_id_seq cache 10000;

Write 100 million test data records

vi ins.sql

insert into test (c1,c2,c3,c4,c5,c6,c7,c8,c9,c10,c11,c12,c13,c14,c15,c16,c17,c18,c19,c20,c21,c22,c23,c24,c25,c26,c27,c28,c29,c30) select random()*1000,random()*2000,random()*3000,random()*4000,random()*5000,random()*6000,random()*7000,random()*8000,random()*9000,random()*10000,random()*10000,random()*20000,random()*30000,random()*40000,random()*50000,random()*60000,random()*70000,random()*80000,random()*90000,random()*100000,random()*100000,random()*200000,random()*300000,random()*400000,random()*500000,random()*600000,random()*700000,random()*800000,random()*900000,random()*1000000 from generate_series(1,1000);

pgbench -M prepared -n -r -P 1 -f ./ins.sql -c 50 -j 50 -t 2000

postgres=# select count(*) from test;

count

-----------

100000000

(1 row)

While testing queries of random field combinations, the query conditions were merged internally using bitmapAnd/bitmapOr, delivering outstanding performance.

postgres=# explain (analyze,verbose,timing,costs,buffers) select * from test where c1=1 and c2=1 and c3=1 or (c10=1 and c11=1 or c12=1) and c14 between 1 and 1000000;

QUERY PLAN

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Bitmap Heap Scan on public.test (cost=1238.80..8607.84 rows=4887 width=128) (actual time=21.869..30.420 rows=4906 loops=1)

Output: id, c1, c2, c3, c4, c5, c6, c7, c8, c9, c10, c11, c12, c13, c14, c15, c16, c17, c18, c19, c20, c21, c22, c23, c24, c25, c26, c27, c28, c29, c30

Recheck Cond: (((test.c3 = 1) AND (test.c2 = 1)) OR (((test.c10 = 1) AND (test.c11 = 1)) OR (test.c12 = 1)))

Filter: (((test.c1 = 1) AND (test.c2 = 1) AND (test.c3 = 1)) OR ((((test.c10 = 1) AND (test.c11 = 1)) OR (test.c12 = 1)) AND (test.c14 >= 1) AND (test.c14 <= 1000000)))

Rows Removed by Filter: 16

Heap Blocks: exact=4915

Buffers: shared hit=5230

-> BitmapOr (cost=1238.80..1238.80 rows=4903 width=0) (actual time=20.931..20.931 rows=0 loops=1)

Buffers: shared hit=315

-> BitmapAnd (cost=947.23..947.23 rows=16 width=0) (actual time=17.602..17.602 rows=0 loops=1)

Buffers: shared hit=235

-> Bitmap Index Scan on idx_test_3 (cost=0.00..379.09 rows=32470 width=0) (actual time=7.965..7.965 rows=33036 loops=1)

Index Cond: (test.c3 = 1)

Buffers: shared hit=94

-> Bitmap Index Scan on idx_test_2 (cost=0.00..565.45 rows=48517 width=0) (actual time=7.826..7.826 rows=50054 loops=1)

Index Cond: (test.c2 = 1)

Buffers: shared hit=141

-> BitmapOr (cost=291.32..291.32 rows=4887 width=0) (actual time=3.076..3.076 rows=0 loops=1)

Buffers: shared hit=80

-> BitmapAnd (cost=231.88..231.88 rows=1 width=0) (actual time=2.769..2.769 rows=0 loops=1)

Buffers: shared hit=62

-> Bitmap Index Scan on idx_test_10 (cost=0.00..114.46 rows=9786 width=0) (actual time=1.104..1.104 rows=10085 loops=1)

Index Cond: (test.c10 = 1)

Buffers: shared hit=31

-> Bitmap Index Scan on idx_test_11 (cost=0.00..114.72 rows=9821 width=0) (actual time=1.178..1.178 rows=9883 loops=1)

Index Cond: (test.c11 = 1)

Buffers: shared hit=31

-> Bitmap Index Scan on idx_test_12 (cost=0.00..58.22 rows=4887 width=0) (actual time=0.307..0.307 rows=4904 loops=1)

Index Cond: (test.c12 = 1)

Buffers: shared hit=18

Planning time: 0.460 ms

Execution time: 31.546 ms

(32 rows)

If you have a specified filter such as corporation ID, you can partition the table based on the corporation ID by hashing. This allows precise queries on the corresponding partition, and avoids querying all partitions.

For example:

create table tbl ( -- Master table

...

);

create table tbl_0 ( -- Partition table

crop_id int, -- Partition mod(corp_id, 128)=0

....

);

....

alter table tbl_0 inherit tbl; -- Sets the table inheritance relationship

Creating independent indexes on each column increases the size of the table, which could expand to up to 3 times as large as the original single table (space occupied by field values and row numbers)

It is not suitable for cases that require real-time, highly concurrent, and frequent write, update, and deletion of large amounts of data (it works for cases that write, update, and delete small amounts of data). (Indexes introduce additional consumption, which leads to decreased performance). (In this case, we have 31 fields and 31 indexes, the performance may drop to 20,000 records/s.)

If some of our tables are involved in 1-to-N correlated queries, we can add an array field to multiple tables in order to maintain their correlation and to avoid joining.

Assume that Table A stores information about corporations and the corporations' users, while Table B stores personal user information; Table A and Table B are involved in 1-to-N correlation through user ID (a user may exist in multiple corporations at the same time).

We may need to search for some users (specific conditions can be found in table B) in a specified corporation. This requires a 1-to-N JOIN.

Example

create table a(corp_id int, uid int, ....);

create table b(uid int, ....);

select b.* from a join b on (a.uid=b.uid and a.corp_id=? and b.xxx=xxx);

This kind of query seems to be fine, right? But what can we do if we use partitioned storage or in cases that restrict the joining of Table A and Table B?

In most cases, we have to extract all records of the specified corporation from Table A, and then import the UIDs into Table A for filtering.

-- Step 1

select * from a where corp_id=?

-- Step 2

select * from b where b.xxx=xxx and b.uid in (.......)

This is tedious. PostgreSQL can easily solve this problem.

Method 1, PostgreSQL itself does not restrict cross-database joining in terms of sharding. However, we recommend that you shard the table based on the JOIN field. (If sharding is not based on the JOIN field, PG would push down the conditions, pull data, and JOIN, which is transparent to the business).

Method 2, add a new array field into Table B to store corp_ids, and this avoids joining.

create table a(corp_id int, uid int, ....);

create table b(uid int, corp_id int[], ....); -- Adds the crop_id int[] array field, and maintains relationship between users and corporations

-- Create GIN indexes on arrays

create idx on b using gin (crop_id);

-- Use the array intersection method to search for users that meet certain conditions within a corporation

select * from b where corp_id && array[?] and xxx=xxx;

By virtue of powerful PostgreSQL functions, there are many optimization methods available for the tagging business scenario. Let's summarize the four key methods:

Creating a Real-Time User Profile Recommendation System with PostgreSQL (2)

Alibaba Clouder - July 5, 2019

ray - April 25, 2024

digoal - January 25, 2021

digoal - January 14, 2019

ApsaraDB - February 20, 2021

digoal - December 14, 2018

ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by digoal