By Xunfei, from Alibaba Cloud Storage Team

Sidecar containers are widely used in Kubernetes clusters to collect observable data from application containers. However, because they intrude on service deployment and their lifecycle is complex to manage, this deployment model is costly and error-prone. This article analyzes the challenges of managing sidecar containers for data collection and addresses them with the management capabilities offered by OpenKruise. It uses iLogtail as an example to describe the best practice for managing sidecar containers that collect observable data based on OpenKruise.

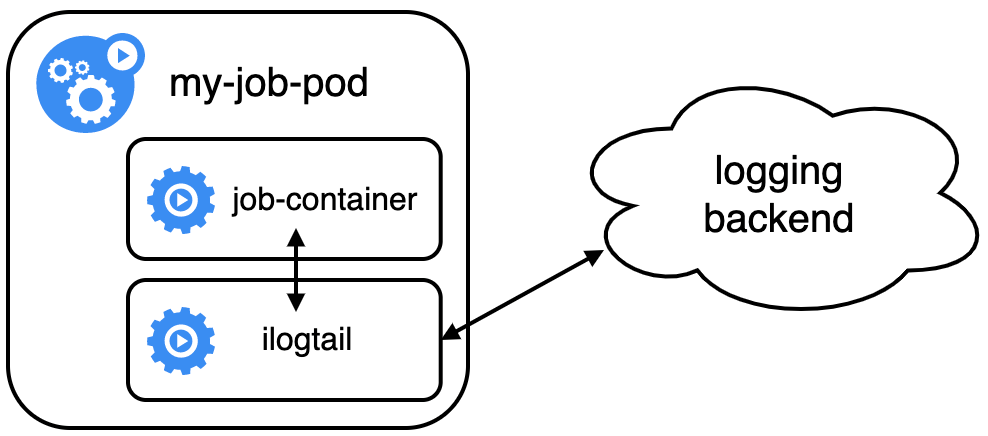

Observable systems serve as the eyes of IT operations. The Kubernetes official documentation introduces various methods for collecting observable data. In summary, there are three main methods: the native method, the DaemonSet method, and the Sidecar method. Each method has its advantages and disadvantages, and no single method can address all the challenges perfectly. Therefore, the choice of method depends on the specific scenario. In the Sidecar mode, each pod is deployed with a separate agent for collection. This approach consumes more resources but offers high stability, flexibility, and multi-tenant isolation. We recommend using the Sidecar mode in job collection scenarios or when serving multiple services on a PaaS platform.

To collect observable data in the Kubernetes Sidecar mode, the deployment declaration of the business must be modified. The sidecar container that acts as the agent is deployed in the same pod as the application container, and the two share volumes and the network so that the agent can collect and upload data. To ensure that no data is lost, the agent process must start before the application process starts and exit after the application process ends. For job workloads, there is a further problem: the agent must exit on its own once the application container has completed. Taking iLogtail as an example, the following shows how to configure sidecar containers for data collection.

iLogtail is an open-source, lightweight, and high-performance agent for observable data collection provided by Alibaba Cloud. It allows you to collect multiple types of observable data, including logs, traces, and metrics, and send them to Kafka, Elasticsearch, and ClickHouse. Its stability has been verified by Alibaba Cloud and Alibaba Cloud customers.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: nginx-mock
      namespace: default
    spec:
      template:
        metadata:
          name: nginx-mock
        spec:
          restartPolicy: Never
          containers:
            - name: nginx
              image: registry.cn-hangzhou.aliyuncs.com/log-service/docker-log-test:latest
              command: ["/bin/sh", "-c"]
              args:
                - until [[ -f /tasksite/cornerstone ]]; do sleep 1; done;
                  /bin/mock_log --log-type=nginx --path=/var/log/nginx/access.log --total-count=100;
                  retcode=$?;
                  touch /tasksite/tombstone;
                  exit $retcode
              # The tombstone file notifies the sidecar container that the application has completed.
              # Otherwise, the sidecar container will not exit with the application container.
              volumeMounts:
                # Share the log directory with the iLogtail sidecar container through volumeMounts.
                - mountPath: /var/log/nginx
                  name: log
                # Use volumeMounts to communicate the process status with the iLogtail sidecar container.
                - mountPath: /tasksite
                  name: tasksite
            # iLogtail Sidecar
            - name: ilogtail
              image: sls-opensource-registry.cn-shanghai.cr.aliyuncs.com/ilogtail-community-edition/ilogtail:latest
              command: ["/bin/sh", "-c"]
              # The purpose of the first `sleep 10` is to wait for iLogtail to start the collection. The iLogtail may start to collect data only after the configuration is pulled from the remote end.
              # The purpose of the second `sleep 10` is to wait for iLogtail to complete the collection. The collection is not completed until the iLogtail sends all collected data downstream.
              args:
                # The cornerstone file notifies the application container that the sidecar container is ready.
                - /usr/local/ilogtail/ilogtail_control.sh start;
                  sleep 10;
                  touch /tasksite/cornerstone;
                  until [[ -f /tasksite/tombstone ]]; do sleep 1; done;
                  sleep 10;
                  /usr/local/ilogtail/ilogtail_control.sh stop;
              volumeMounts:
                - name: log
                  mountPath: /var/log/nginx
                - name: tasksite
                  mountPath: /tasksite
                - mountPath: /usr/local/ilogtail/ilogtail_config.json
                  name: ilogtail-config
                  subPath: ilogtail_config.json
          volumes:
            - name: log
              emptyDir: {}
            - name: tasksite
              emptyDir:
                medium: Memory
            - name: ilogtail-config
              secret:
                defaultMode: 420
                secretName: ilogtail-secret

The volumeMounts sections in the configuration declare the shared storage: the volume named log is for data sharing, and the volume named tasksite is for process coordination. Much of the code in the args sections exists only to control the startup and exit sequence of the sidecar container and the application container. The preceding configuration therefore shows the disadvantages of Sidecar mode: the collection agent intrudes on the deployment declaration of the application, and the lifecycle ordering has to be hand-coded in every workload.
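The file-based coordination in the two args sections can be easier to follow in isolation. The following is a minimal Python sketch, not part of the original setup, that models the two containers as threads sharing a temporary directory. The function names `wait_for` and `run_handshake` are hypothetical; `cornerstone` and `tombstone` mirror the marker files used in the Job above.

```python
import tempfile
import threading
import time
from pathlib import Path
from typing import List


def wait_for(path: Path, poll: float = 0.01) -> None:
    """Block until `path` exists, like `until [[ -f path ]]; do sleep 1; done`."""
    while not path.exists():
        time.sleep(poll)


def run_handshake() -> List[str]:
    """Simulate the sidecar/application ordering and return the observed events."""
    events: List[str] = []
    lock = threading.Lock()

    def record(event: str) -> None:
        with lock:
            events.append(event)

    with tempfile.TemporaryDirectory() as tasksite:
        cornerstone = Path(tasksite) / "cornerstone"
        tombstone = Path(tasksite) / "tombstone"

        def sidecar() -> None:
            record("sidecar started")
            cornerstone.touch()   # tell the application that the agent is ready
            wait_for(tombstone)   # wait until the application has finished
            record("sidecar stopped")

        def application() -> None:
            wait_for(cornerstone)  # do not start before the agent is ready
            record("app started")
            record("app finished")
            tombstone.touch()      # tell the agent that it may exit

        # Start the application first on purpose: the handshake still enforces the order.
        threads = [threading.Thread(target=application), threading.Thread(target=sidecar)]
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()
    return events


if __name__ == "__main__":
    print(run_handshake())
```

Even in this toy form, each side needs polling code and a shared location purely for ordering, which is the overhead the rest of the article removes.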

OpenKruise provides three capabilities that address these problems. SidecarSet is the OpenKruise abstraction for sidecar container management. It injects and upgrades sidecar containers in Kubernetes clusters and is one of the core workloads of OpenKruise. For more information, see SidecarSet documentation.

Container Launch Priority provides a method to control the startup sequence of containers in a pod. For more information, see Container Launch Priority documentation.
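As an illustration of the ordering rule, here is a small Python sketch. It assumes, following the Container Launch Priority documentation, that a container with a higher `KRUISE_CONTAINER_PRIORITY` value starts before containers with lower values and that a container without the variable defaults to 0. The function `launch_batches` is a hypothetical helper for reasoning about the order, not an OpenKruise API.

```python
from itertools import groupby
from typing import Dict, List


def launch_batches(priorities: Dict[str, int]) -> List[List[str]]:
    """Group container names into start batches, highest priority first.

    Containers that share a priority land in the same batch, because no
    relative order is implied between them.
    """
    ordered = sorted(priorities.items(), key=lambda item: (-item[1], item[0]))
    return [
        [name for name, _ in group]
        for _, group in groupby(ordered, key=lambda item: item[1])
    ]


if __name__ == "__main__":
    # nginx has no explicit priority, so it is modeled with the assumed default 0.
    print(launch_batches({"nginx": 0, "ilogtail": 100}))
```

With the values used later in this article (100 for the iLogtail sidecar), the sidecar lands in the first batch and the application container in the second.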

Job Sidecar Terminator handles job workloads: after the main application container completes its task and exits, sidecar containers such as those for log collection are notified to exit as well. For more information, see Job Sidecar Terminator documentation.
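The decision this feature makes can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the controller's actual implementation: a container counts as a sidecar when it carries the `KRUISE_TERMINATE_SIDECAR_WHEN_JOB_EXIT` environment variable set to `true`, and sidecars are asked to exit only once every other container has exited. The dictionary layout and the function `sidecars_to_terminate` are hypothetical.

```python
from typing import Dict, List

TERMINATE_ENV = "KRUISE_TERMINATE_SIDECAR_WHEN_JOB_EXIT"


def is_sidecar(container: Dict) -> bool:
    """A container opts in by setting the environment variable to 'true'."""
    return container.get("env", {}).get(TERMINATE_ENV) == "true"


def sidecars_to_terminate(containers: List[Dict]) -> List[str]:
    """Return the names of running sidecars that should now be asked to exit."""
    main = [c for c in containers if not is_sidecar(c)]
    # Nothing to do while any main container is still running.
    if not main or not all(c["exited"] for c in main):
        return []
    return [c["name"] for c in containers if is_sidecar(c) and not c["exited"]]


if __name__ == "__main__":
    pod = [
        {"name": "nginx", "exited": True},
        {"name": "ilogtail", "exited": False, "env": {TERMINATE_ENV: "true"}},
    ]
    print(sidecars_to_terminate(pod))
```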

The community has documented many cases of using iLogtail in observability scenarios. For more information, see Use of Kubernetes and Use Cases of iLogtail Community Edition. This article focuses on how to use the preceding capabilities of OpenKruise to simplify the management of iLogtail sidecar containers. If you have any questions about iLogtail, ask in GitHub Discussions.

The Sidecar Terminator feature must be enabled through a feature gate in the kruise-controller-manager Deployment. The other two features are enabled by default.

spec:

containers:

- args:

- '--enable-leader-election'

- '--metrics-addr=:8080'

- '--health-probe-addr=:8000'

- '--logtostderr=true'

- '--leader-election-namespace=kruise-system'

- '--v=4'

- '--feature-gates=SidecarTerminator=true'

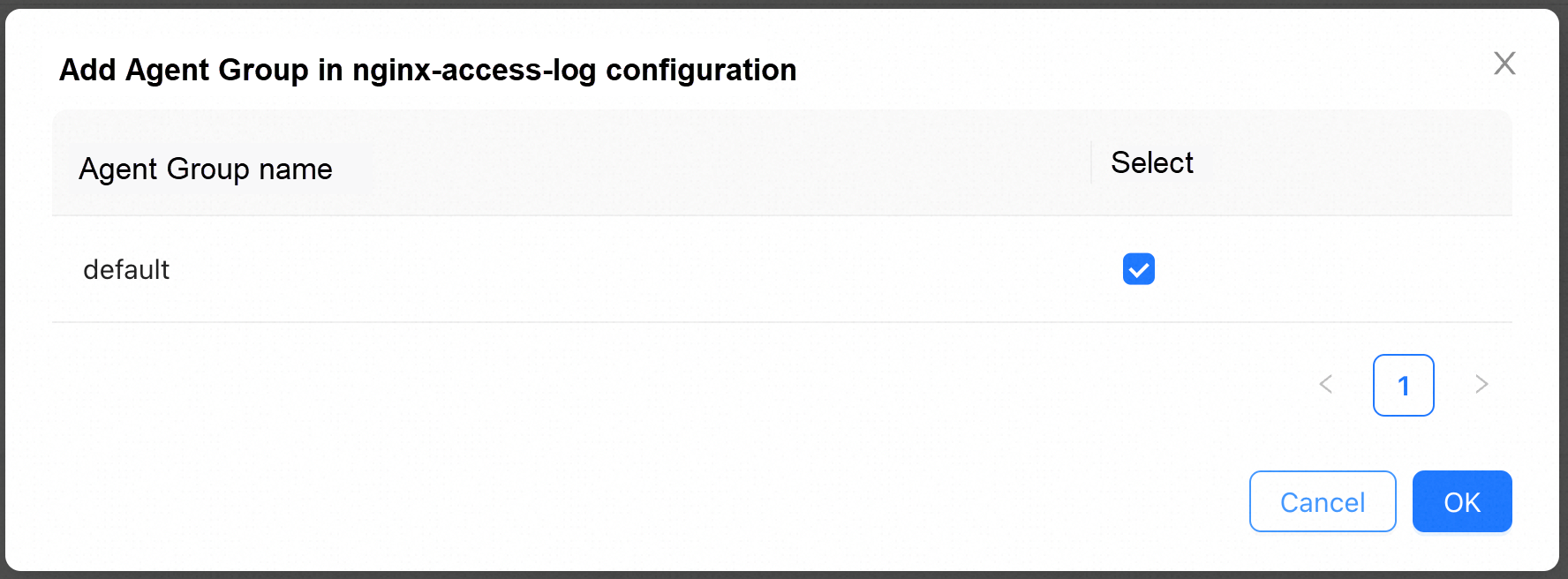

- '--sync-period=0'Create an iLogtail collection configuration in ConfigServer Open Source Edition as follows:

    enable: true
    inputs:
      - Type: file_log
        LogPath: /var/log/nginx
        FilePattern: access.log
    flushers:
      - Type: flusher_kafka
        Brokers:
          - <kafka_host>:<kafka_port>
          - <kafka_host>:<kafka_port>
        Topic: nginx-access-log

Associate the configuration with the machine group.

Define the iLogtail SidecarSet configuration as follows:

    apiVersion: apps.kruise.io/v1alpha1
    kind: SidecarSet
    metadata:
      name: ilogtail-sidecarset
    spec:
      selector:
        # Pods with this label are injected with the sidecar container
        matchLabels:
          kruise.io/inject-ilogtail: "true"
      # By default, the SidecarSet takes effect on the entire cluster. You can use the namespace field to specify the effective scope.
      namespace: default
      containers:
        - command:
            - /bin/sh
            - '-c'
            - '/usr/local/ilogtail/ilogtail_control.sh start_and_block 10'
          # The parameter 10 makes iLogtail wait 10 seconds for data to be sent before it exits
          image: sls-opensource-registry.cn-shanghai.cr.aliyuncs.com/ilogtail-community-edition/ilogtail:edge
          livenessProbe:
            exec:
              command:
                - /usr/local/ilogtail/ilogtail_control.sh
                - status
          name: ilogtail
          env:
            - name: KRUISE_CONTAINER_PRIORITY
              value: '100'
            - name: KRUISE_TERMINATE_SIDECAR_WHEN_JOB_EXIT
              value: 'true'
            - name: ALIYUN_LOGTAIL_USER_DEFINED_ID
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: 'metadata.labels[''ilogtail-tag'']'
          volumeMounts:
            - mountPath: /var/log/nginx
              name: log
            - mountPath: /usr/local/ilogtail/checkpoint
              name: checkpoint
            - mountPath: /usr/local/ilogtail/ilogtail_config.json
              name: ilogtail-config
              subPath: ilogtail_config.json
      volumes:
        - name: log
          emptyDir: {}
        - name: checkpoint
          emptyDir: {}
        - name: ilogtail-config
          secret:
            defaultMode: 420
            secretName: ilogtail-secret

Note that only livenessProbe is specified in this example. Do not use readinessProbe. Otherwise, the pod status changes to Not Ready when the sidecar container is upgraded.

In scenarios where machine resources are insufficient, you can set the CPU request (requests.cpu) of the sidecar container to 0 to reduce the total resources requested by the pod. In this case, the QoS class of the pod is Burstable.
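To see why the pod ends up as Burstable, the following Python sketch models a simplified version of the Kubernetes QoS classification rules as they are commonly documented: zero quantities are ignored, a pod with no requests or limits is BestEffort, a pod in which every container has CPU and memory limits equal to its requests is Guaranteed, and everything else is Burstable. The function `pod_qos`, the dictionary layout, and the resource values (millicores and MiB) are hypothetical and are not taken from the example above.

```python
from typing import Dict, List

Container = Dict[str, Dict[str, int]]  # {"requests": {...}, "limits": {...}}
TRACKED = ("cpu", "memory")


def pod_qos(containers: List[Container]) -> str:
    """Classify a pod as Guaranteed, Burstable, or BestEffort (simplified model)."""
    requests: Dict[str, int] = {}
    limits: Dict[str, int] = {}
    guaranteed = True
    for container in containers:
        for name, qty in container.get("requests", {}).items():
            if name in TRACKED and qty > 0:  # zero requests are ignored
                requests[name] = requests.get(name, 0) + qty
        found = set()
        for name, qty in container.get("limits", {}).items():
            if name in TRACKED and qty > 0:
                found.add(name)
                limits[name] = limits.get(name, 0) + qty
        if found != set(TRACKED):  # Guaranteed needs both limits on every container
            guaranteed = False
    if not requests and not limits:
        return "BestEffort"
    if guaranteed and requests == limits:
        return "Guaranteed"
    return "Burstable"


if __name__ == "__main__":
    app = {"requests": {"cpu": 500, "memory": 512}, "limits": {"cpu": 500, "memory": 512}}
    sidecar = {"requests": {"cpu": 0, "memory": 128}, "limits": {"cpu": 500, "memory": 128}}
    print(pod_qos([app]), pod_qos([app, sidecar]))
```

In this model, an application container that would be Guaranteed on its own becomes part of a Burstable pod once the sidecar requests 0 CPU, because the summed requests no longer equal the summed limits.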

Define the Nginx Mock Job to contain only nginx-related configurations as follows:

apiVersion: batch/v1

kind: Job

metadata:

name: nginx-mock

namespace: default

spec:

template:

metadata:

name: nginx-mock

labels:

# The label injected into the iLogtail sidecar container.

kruise.io/inject-ilogtail: "true"

spec:

restartPolicy: Never

containers:

- name: nginx

image: registry.cn-hangzhou.aliyuncs.com/log-service/docker-log-test:latest

command: ["/bin/sh", "-c"]

args:

- /bin/mock_log --log-type=nginx --path=/var/log/nginx/access.log --total-count=100

volumeMounts:

# Use volumeMounts to share the log directory with the iLogtail sidecar container.

- mountPath: /var/log/nginx

name: log

volumes:

- name: log

emptyDir: {

}

- name: tasksite

emptyDir:

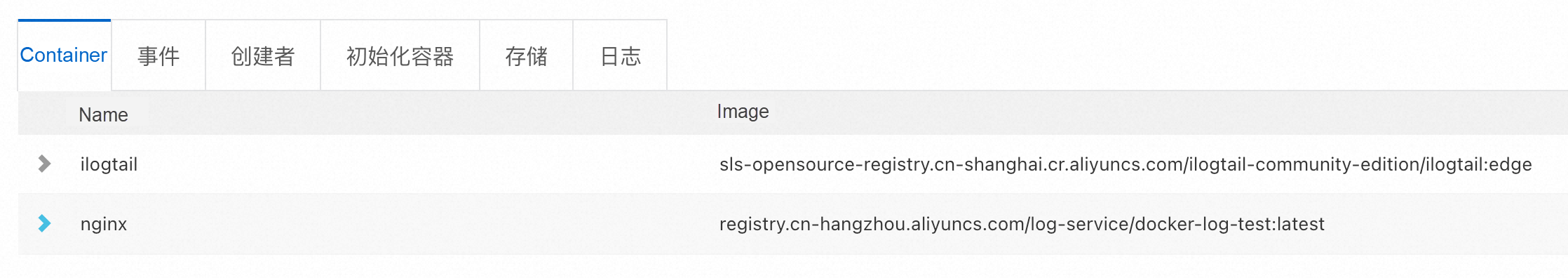

medium: MemoryAfter the nginx mock job is applied to the Kubernetes cluster, the created pods are injected into the iLogtail sidecar container. This indicates that kruise.io/inject-ilogtail: "true" has taken effect as follows:

Without this configuration, the pod contains only the nginx container and no iLogtail container.

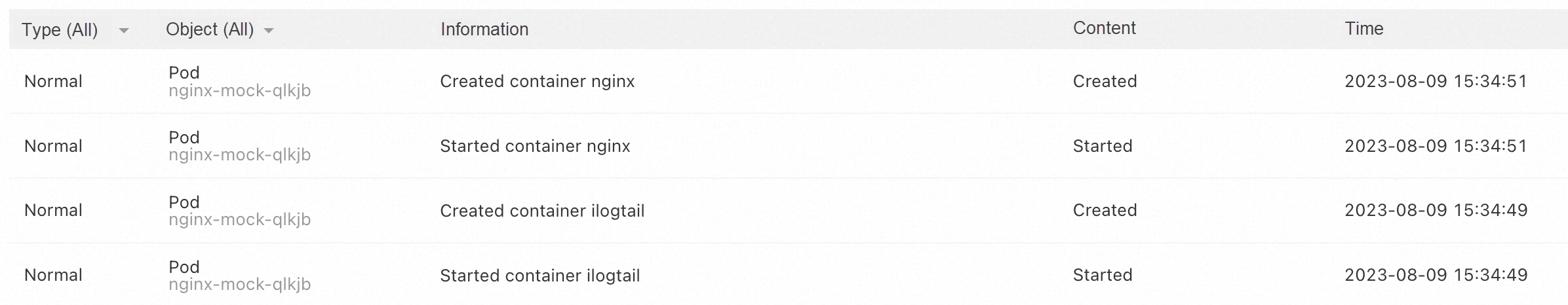

According to the Kubernetes events, the iLogtail container starts before the nginx container. This indicates that KRUISE_CONTAINER_PRIORITY has taken effect as follows:

Without this configuration, the iLogtail container may start up later than the nginx container, resulting in incomplete data collection.

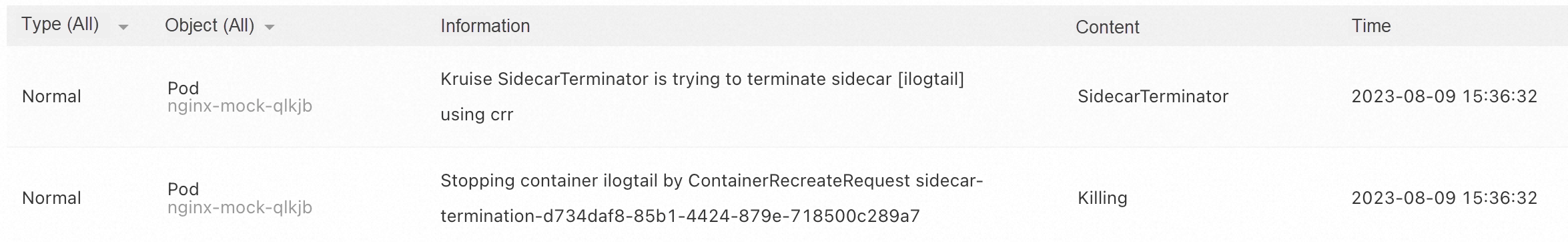

According to the Kubernetes events, the iLogtail container exits after the nginx container completes its task. This indicates that KRUISE_TERMINATE_SIDECAR_WHEN_JOB_EXIT has taken effect as follows:

Without this configuration, pods will remain running because the sidecar container will not exit with the application container unless you manually delete the job.

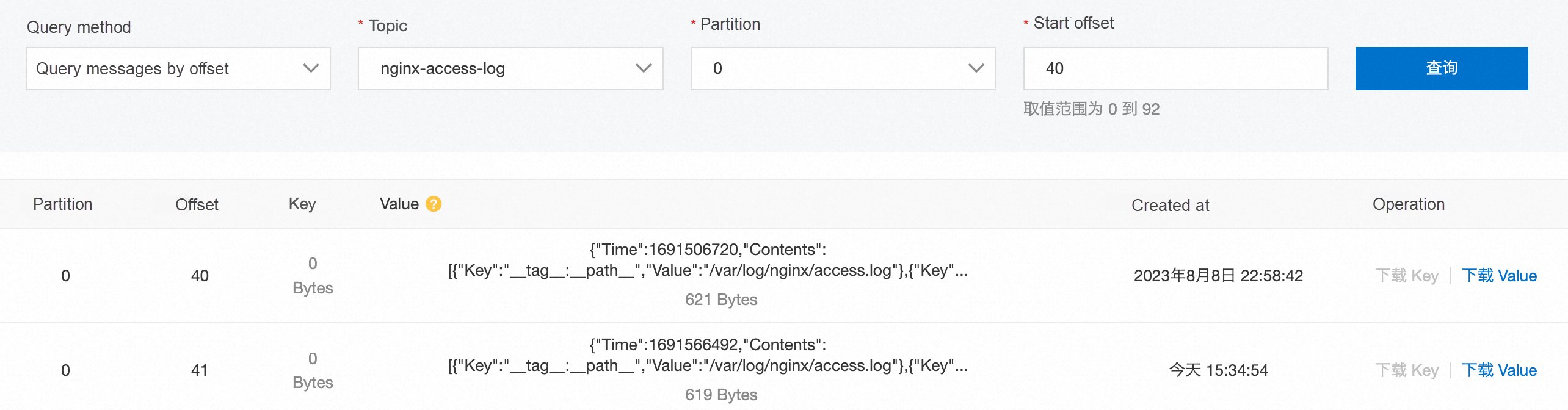

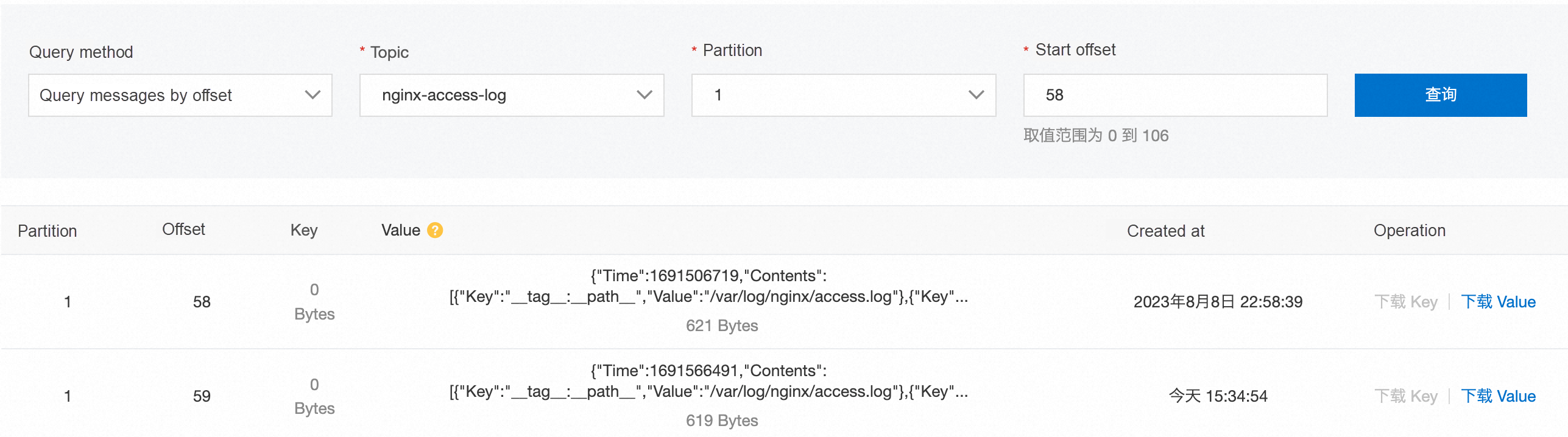

The correct startup and exit sequence of the two containers ensures the integrity of the data collected by iLogtail. Querying the data collected to Kafka by offset shows that it is complete: the offset ranges add up to 100 records, (92 - 40) + (106 - 58) = 52 + 48 = 100. The query results are shown in the accompanying screenshot.
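The offset arithmetic can be double-checked with a short Python sketch. It assumes the four numbers form two start/end offset pairs, presumably one per Kafka partition, with the end offset exclusive; the helper `records_in_ranges` is hypothetical.

```python
from typing import Iterable, Tuple


def records_in_ranges(ranges: Iterable[Tuple[int, int]]) -> int:
    """Count records covered by (start_offset, end_offset) pairs, end exclusive."""
    return sum(end - start for start, end in ranges)


if __name__ == "__main__":
    # Offsets taken from the text: 40..92 and 58..106.
    print(records_in_ranges([(40, 92), (58, 106)]))  # 100
```

The total matches the `--total-count=100` argument passed to the mock log generator, so no record was lost at startup or exit.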

We can update the configuration of the iLogtail SidecarSet to the latest version and then apply it.

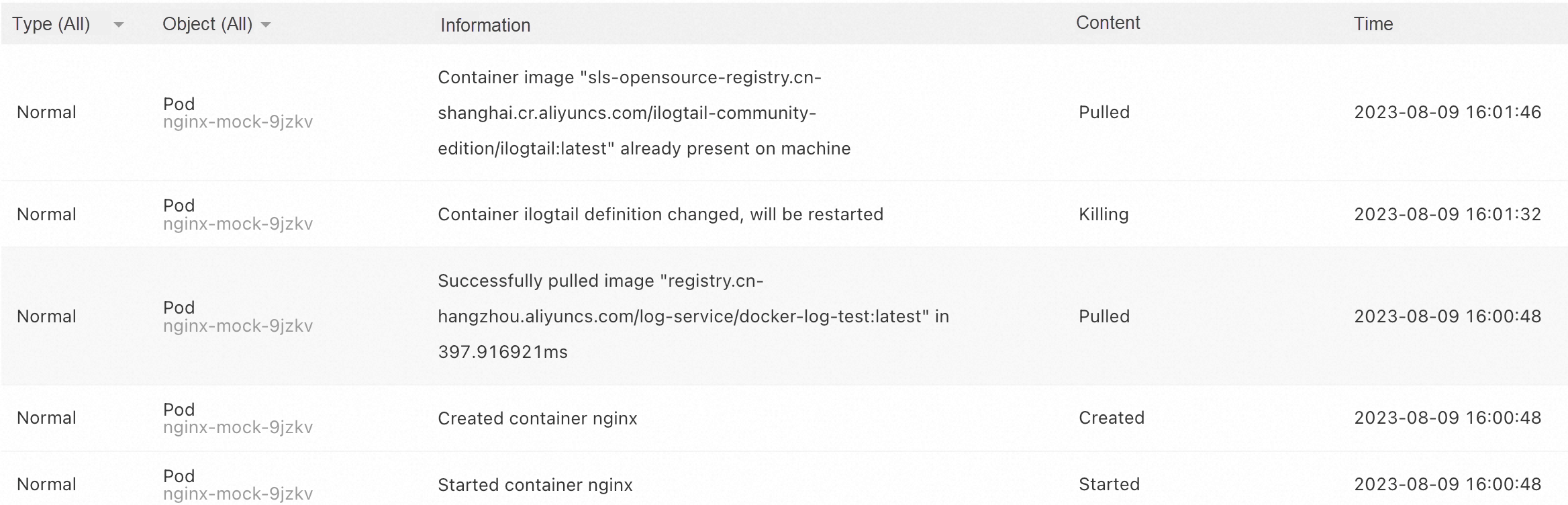

image: sls-opensource-registry.cn-shanghai.cr.aliyuncs.com/ilogtail-community-edition/ilogtail:latestThe Kubernetes events show that the pod is not rebuilt. The iLogtail container is rebuilt due to the change in the declaration, while the nginx container is not interrupted. The results are as follows:

This feature depends on the in-place update capability of Kruise. For more information, see Kruise InPlace Update. Upgrading a sidecar container still carries risk: if the upgrade fails, the pod may become Not Ready and the business is affected. SidecarSet therefore provides a variety of canary release capabilities to reduce this risk. For more information, see Kruise SidecarSet.

    apiVersion: apps.kruise.io/v1alpha1
    kind: SidecarSet
    metadata:
      name: sidecarset
    spec:
      # ...
      updateStrategy:
        type: RollingUpdate
        # Maximum number of unavailable pods
        maxUnavailable: 20%
        # Batch release
        partition: 90
        # Canary release, through pod label
        selector:
          matchLabels:
            # Some Pods contain canary labels,
            # or any other labels where a small number of pods can be selected
            deploy-env: canary

In addition, if the sidecar container is a service mesh proxy such as Envoy, it requires the hot upgrade feature of SidecarSet. For more information, see Hot Upgrade Sidecar.
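To make the batch-release setting in the update strategy above concrete, here is a minimal Python sketch. It assumes, as the SidecarSet documentation describes, that an integer `partition` is the number of matched pods kept on the old sidecar version; percentage values and `maxUnavailable` are deliberately left out. The function `pods_to_upgrade` is a hypothetical helper.

```python
def pods_to_upgrade(matched_pods: int, partition: int) -> int:
    """Number of pods that move to the new sidecar version in this batch."""
    if matched_pods < 0 or partition < 0:
        raise ValueError("matched_pods and partition must be non-negative")
    # Pods beyond the partition are upgraded; the partition stays on the old version.
    return max(0, matched_pods - partition)


if __name__ == "__main__":
    print(pods_to_upgrade(100, 90))  # 10
```

Under this assumption, `partition: 90` on 100 matched pods upgrades 10 pods and keeps 90 on the old version; lowering the partition step by step releases the remaining batches.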

If you want to release a Kruise SidecarSet by using Argo CD, you must configure custom health checks for the SidecarSet CRD. Based on this configuration, Argo CD can check whether the SidecarSet is released and whether the pods are ready.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app.kubernetes.io/name: argocd-cm
        app.kubernetes.io/part-of: argocd
      name: argocd-cm
      namespace: argocd
    data:
      resource.customizations.health.apps.kruise.io_SidecarSet: |
        hs = {}
        -- if paused
        if obj.spec.updateStrategy.paused then
          hs.status = "Suspended"
          hs.message = "SidecarSet is Suspended"
          return hs
        end
        -- check sidecarSet status
        if obj.status ~= nil then
          if obj.status.observedGeneration < obj.metadata.generation then
            hs.status = "Progressing"
            hs.message = "Waiting for rollout to finish: observed sidecarSet generation less than desired generation"
            return hs
          end
          -- matchedPods is reported in status, not in spec
          if obj.status.updatedPods < obj.status.matchedPods then
            hs.status = "Progressing"
            hs.message = "Waiting for rollout to finish: replicas hasn't finished updating..."
            return hs
          end
          if obj.status.updatedReadyPods < obj.status.updatedPods then
            hs.status = "Progressing"
            hs.message = "Waiting for rollout to finish: replicas hasn't finished updating..."
            return hs
          end
          hs.status = "Healthy"
          return hs
        end
        -- if status == nil
        hs.status = "Progressing"
        hs.message = "Waiting for sidecarSet"
        return hs

Running multiple containers in one pod is increasingly being embraced by developers. As a result, an effective method for managing sidecar containers is becoming an urgent requirement within the Kubernetes ecosystem. Kruise SidecarSet, Container Launch Priority, and Job Sidecar Terminator are some of the advancements in sidecar container management.

Using OpenKruise to manage iLogtail log collection significantly eases the management of sidecar containers and decouples their configuration from application containers. The container startup order becomes explicit, and sidecar containers can be upgraded without recreating pods. Nonetheless, certain challenges remain unresolved, such as determining the appropriate resource allocation for sidecar containers, planning log path mounting strategies, and differentiating sidecar configurations across various application pods. We look forward to collaborating with more developers in the community to address these issues. Contributions of ideas to enrich the Kubernetes ecosystem are also warmly welcomed.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
