By Lin Fan, Senior Engineer from Alibaba's R&D Efficiency Team

Good code submission practices and proper pre-change checks can help reduce failures, but cannot completely eliminate the risk. Although adding multiple test environment copies can efficiently control the impact scope of failures, most enterprises have limited resources, resulting in a conflict between reducing test environment costs and improving test environment stability.

To address this problem, the Alibaba R&D efficiency team designed a service-level reusable virtualization technology called the feature environment. This article focuses on test environment management and describes how this method is used at Alibaba.

Many practices at Alibaba seem simple but actually rest on carefully thought-out concepts. The management of test environments is one example.

Internet product services are usually composed of web apps, middleware, databases and many back-office business programs. A runtime environment is a small self-contained ecosystem. The most basic runtime environment is the online environment, which deploys the officially released version of the product to provide users with continuous and reliable services.

In addition, there are many runtime environments that are not open to external users and that product teams use for routine development and verification. These are collectively referred to as test environments. Apart from the quality of the software itself, the stability of the formal (online) environment depends mainly on the underlying infrastructure, such as hosts and the network, while the stability of a test environment is affected more by human factors. Test environment failures are common because of frequent version changes and the deployment of unverified code.

A good code submission habit and appropriate pre-change check can help reduce the occurrence of faults, but cannot eliminate them entirely. Increasing multiple test environment replicas can effectively control the impact scope of faults. However, enterprise resources are limited, so reducing the cost and improving the stability of the test environment have become two objectives to balance.

In this area, the Alibaba R&D efficiency team has designed a service-level reusable virtualization technology called "feature environments". This article focuses on test environment management and discusses this distinctive Alibaba way of working.

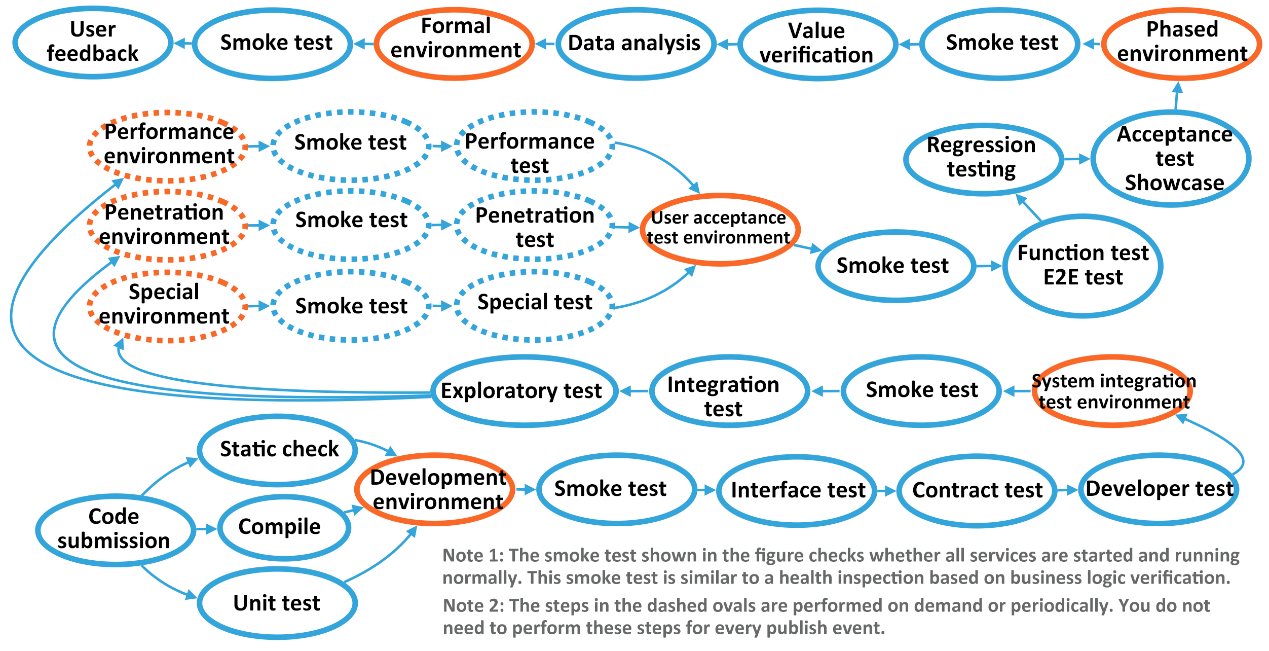

Test environments are widely used. Common test environments, such as the system integration test environment, the user acceptance test environment, the pre-release test environment, and the phased test environment, reflect the delivery lifecycle of the product and, indirectly, the organization structure of the entire team.

The test environment for a small workshop-style product team is very simple to manage. Each engineer can start the full suite of software components locally for debugging. If that still does not feel safe enough, adding one public integrated test environment should suffice.

As the product scales up, it becomes time consuming and cumbersome to start all service components locally. Engineers can only run some components to be debugged locally, and then use the rest of the components in the public test environment to form a complete system.

Moreover, with the expansion of the team size, the responsibilities of each team member are further subdivided and new sub-teams are formed, which means that the communication cost of the project increases and the stability of the public test environment becomes difficult to control. In this process, the impact of the complexity of test environment management is not only reflected in the cumbersome service joint debugging, but also directly reflected in the changes in delivery process and resource costs.

A significant change in the delivery process is the increase in the variety of test environments. Engineers have designed various dedicated test environments for different purposes. The combination of these test environments forms a unique delivery process for each enterprise. The following figure shows a complex delivery process for large projects.

From the perspective of individual services, environments are connected by pipelines, coupled with levels of automated testing or manual approval operations, to realize the transfer between the environments. Generally, the higher the level of environment, the lower the deployment frequency, and therefore the higher the relative stability. On the contrary, in a low-level environment, new deployments may occur at any time, interrupting others who are using the environment. Sometimes, to reproduce some special problem scenarios, some developers have to log on to the server directly to perform operations, further affecting the stability and availability of the environment.

Faced with a test environment that may collapse at any time, small enterprises tend to adopt "blocking", that is, restricting when services may change and imposing strict change specifications. Large enterprises are better at "unchoking", that is, adding replicas of the test environment to isolate the impact of faults. If only "blocking" is used, an overwhelmed test environment will inevitably get worse and worse, a lesson taught long ago by the legend of Yu the Great taming the flood. Deliberate control alone cannot save a fragile test environment.

In recent years, the rise of DevOps culture has freed developers' hands end-to-end, but it is a double-edged sword for the management of the test environment. On the one hand, DevOps encourages developers to participate in O&M to understand the complete product lifecycle, which helps to reduce unnecessary O&M incidents. On the other hand, DevOps allows more people to access the test environment, so more changes and more hotfixes appear. From a global perspective, these practices have more advantages than disadvantages, but they cannot improve test environment stability. Simple process "unchoking" is also unable to save the fragile test environment.

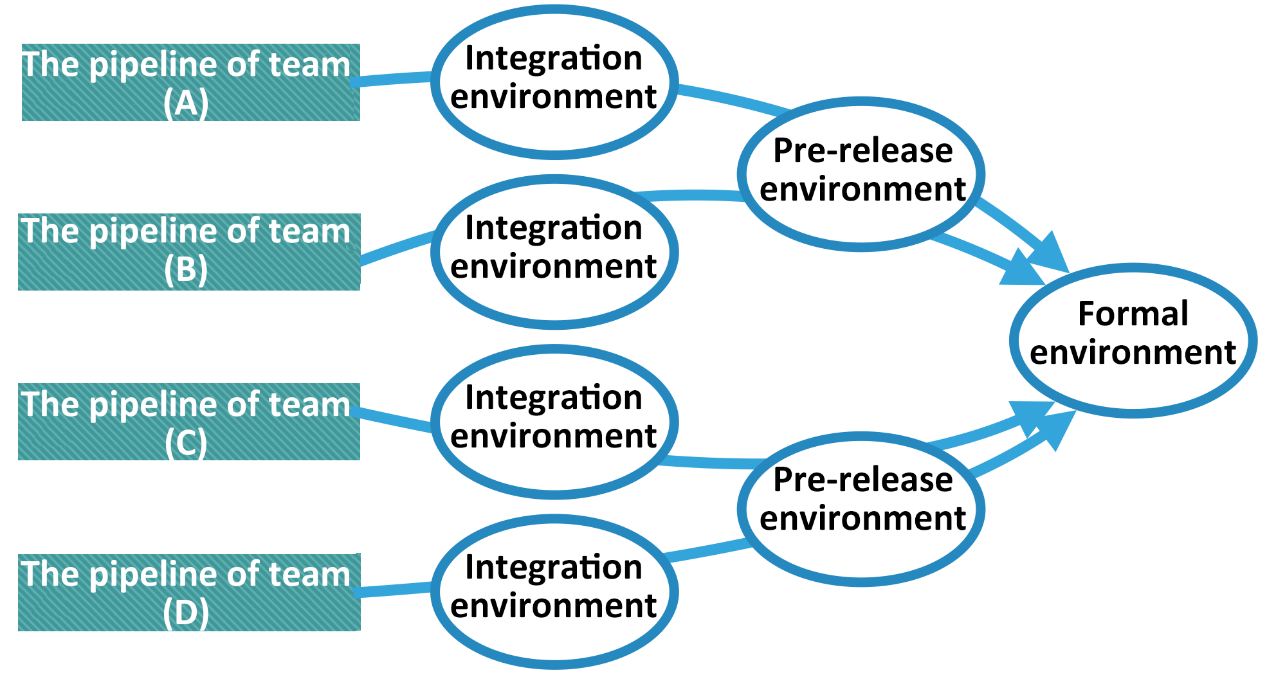

In the end, the necessary investment has to be made. The low-level test environments used by different teams are made independent, so that each team sees a linear pipeline, while the overall picture looks like rivers converging.

Therefore, ideally, each developer should obtain an exclusive and stable test environment, and complete their work without interference. However, due to the costs, only limited test resources can be shared within the team in reality, and the interference between different members in the test environment becomes a hidden danger affecting the quality of software development. Increasing the number of replicas of the test environment is essentially a way to increase cost in exchange for efficiency. However, many explorers who try to find the optimal balance between the cost and the efficiency seem to go further and further along the same road of no return.

Given their sheer scale, Alibaba product teams are not immune to the test environment management problems described above.

Alibaba also has many types of test environments, and their names are closely related to their functions. Although some names are commonly used in the industry, no authoritative naming standard exists. The name itself is only a label. What matters is that each test environment is adapted to a specific application scenario, and that the scenarios genuinely differ from one another.

Some of the differences lie in the types of services being run. For example, the performance test environment may only need to run the most visited key services related to stress testing, while it is only a waste of resources if it runs other services. Some differences lie in the source of the access data. For example, the data source of the developer test environment is definitely different from that of the formal environment, so the fake data used in the test does not contaminate online users' requests. The pre-release environment (or the user acceptance test environment) uses the data source (or replicas of formal data sources) consistent with the formal environment, so as to reflect the operation of new functions on real data. The automated test-related environment has a separate set of test databases to avoid interference from other manual operations during testing.

Some other differences lie in users. For example, both the phased environment and the pre-release environment use formal data sources, but the users in the phased environment are a small number of real external users, while the users in the pre-release environment are all internal personnel. In short, it is not necessary to create a test environment for a test scenario without business specificity.

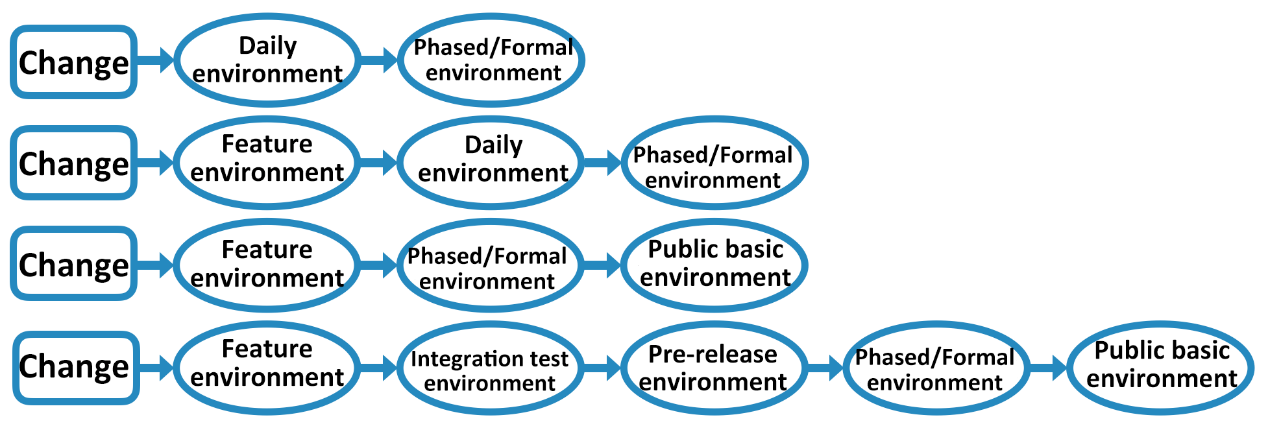

At the Group level, Alibaba has relatively loose restrictions on the form of the pipeline. Objectively, only the front-line development teams know what the best delivery process for the team should be. The Alibaba development platform only standardizes some recommended pipeline templates, on which developers can build. Several typical template examples are listed below:

Here, several environment type names that are less common in the outside world appear and will be described in detail later.

Cost management problems are tricky and worth exploring. The costs related to the test environment mainly include the "labor costs" required to manage the environment and the "asset costs" required to purchase infrastructure. With automated and self-service tools, labor-related costs can be effectively reduced. Automation is also a big topic. It is advisable to discuss it in another article, so we will not delve into it here for the time being.

The reduction of asset purchase costs depends on the improvement and progress of technology (excluding the factors of price changes caused by large-scale procurement), while the development history of infrastructure technology includes two major areas: hardware and software. The significant cost reduction brought by hardware development usually benefits from new materials, new production processes and new hardware design ideas. However, at present, the substantial decrease in infrastructure costs brought by software development is mostly due to the breakthrough of virtualization (that is, resource isolation and multiplexing) technology.

The earliest virtualization technology is the virtual machine. As early as the 1960s, IBM began to use this hardware-level virtualization method to greatly improve resource utilization. Each isolated environment on a virtual machine host runs its own complete operating system, so isolation is strong and the approach is broadly applicable. However, it is somewhat heavyweight for running business services. After 2000, open-source projects such as KVM and Xen popularized hardware-level virtualization.

At the same time, another lightweight virtualization technology emerged. The early container technology, represented by OpenVZ and LXC, achieved the virtualization of the runtime environment built on the kernel of the operating system, which reduced the resource consumption of the independent operating system and obtained higher resource utilization at the expense of certain isolation.

Later, Docker, with its concepts of image encapsulation and single-process containers, made this kernel-level virtualization technology hugely popular. Keeping pace with these advances, Alibaba began using virtual machines and containers very early. During the "Singles' Day" shopping festival in 2017, the proportion of online business services that were containerized reached 100%. The next challenge, however, is whether infrastructure resources can be used even more efficiently.

Containers dispense with the overhead of hardware instruction translation and guest operating systems that virtual machines incur. Only a thin layer of kernel namespace isolation separates a program running in a container from an ordinary program, with virtually no runtime performance loss. As a result, virtualization seems to have reached its limit in this direction. The only remaining possibility is to set aside generic scenarios, focus on the specific scenario of test environment management, and continue to seek breakthroughs there. This is where Alibaba found a new opportunity: service-level virtualization.

Service-level virtualization is essentially the reuse of some services in a cluster, achieved by controlling message routing. With service-level virtualization, many seemingly large standalone test environments actually consume minimal additional infrastructure resources. Therefore, providing each developer with a dedicated test environment cluster is no longer an extravagance.

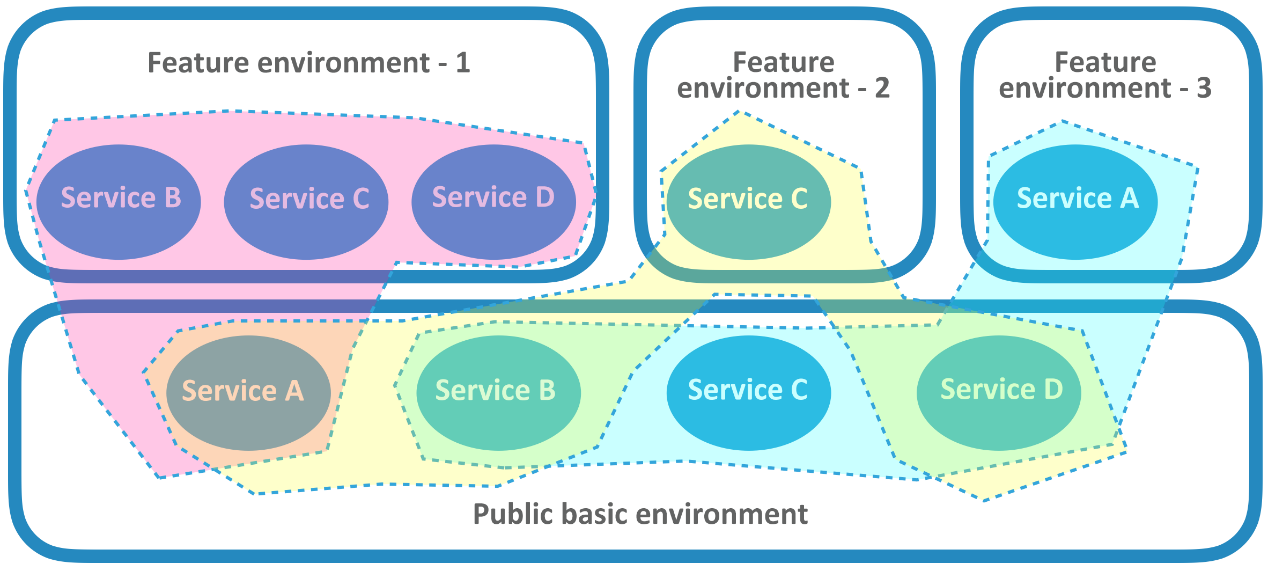

Specifically, the Alibaba delivery process includes two special types of test environments: the "shared basic environment" and the "feature environment", which form a test environment usage method with Alibaba characteristics. The shared basic environment is a complete service runtime environment, which typically runs a relatively stable service version. Some teams use a low-level environment (called the "daily environment") that always deploys the latest version of each service as the shared basic environment.

The feature environment is the most interesting part of this method. It is a virtual environment. On the surface, each feature environment is an independent and complete test environment consisting of a cluster of services. In fact, apart from the services that the environment's users want to test, all other services are virtualized through the routing system and message-oriented middleware and point to the corresponding services in the shared basic environment. In the general development process at Alibaba, development tasks need to go through feature branches, release branches, and many related steps before they are finally released and launched. Most environments are deployed from the release branch, but these virtual environments for developers' own use are deployed from the feature branch of the code. They can therefore be called "feature environments" (inside Alibaba, they are called "project environments").

For example, the complete deployment of a transaction system consists of more than a dozen small systems, including the authentication service, the transaction service, the order service, and the settlement service, as well as corresponding databases, cache pools, and message-oriented middleware. Then, its shared basic environment is essentially a complete environment with all services and peripheral components. Suppose that two feature environments are running at this time. One only starts the transaction service, and the other starts the transaction service, the order service and the settlement service. For users in the first feature environment, although all services except the transaction service are actually proxied by the shared basic environment, it seems that the transaction service owns a complete set of environments during use: You can freely deploy and update the transaction service version in the environment and debug it without worrying about affecting other users. For users in the second feature environment, the three services deployed in the environment can be jointly debugged and verified. If the authentication service is used in the scenario, the authentication service in the shared basic environment responds.
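The proxying behavior in this example can be sketched in a few lines of Python. This is only an illustration of the fallback idea, not Alibaba's implementation: the class name `FeatureEnvironment`, the `resolve` method, and the environment and service labels are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

SHARED_BASE = "shared-base"  # hypothetical label for the shared basic environment


@dataclass
class FeatureEnvironment:
    """A virtual environment that really deploys only a few services."""

    name: str
    deployed: set[str] = field(default_factory=set)

    def resolve(self, service: str) -> tuple[str, str]:
        """Return the (environment, service) pair that actually handles a call."""
        if service in self.deployed:
            return (self.name, service)
        # Anything not deployed here is proxied to the shared basic environment.
        return (SHARED_BASE, service)


# The two feature environments from the transaction system example.
env1 = FeatureEnvironment("feature-1", {"transaction"})
env2 = FeatureEnvironment("feature-2", {"transaction", "order", "settlement"})

print(env1.resolve("transaction"))     # ('feature-1', 'transaction')
print(env1.resolve("order"))           # ('shared-base', 'order')
print(env2.resolve("order"))           # ('feature-2', 'order')
print(env2.resolve("authentication"))  # ('shared-base', 'authentication')
```

Each environment stores only the set of services it really runs, which is why an additional feature environment costs little beyond the services under test.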

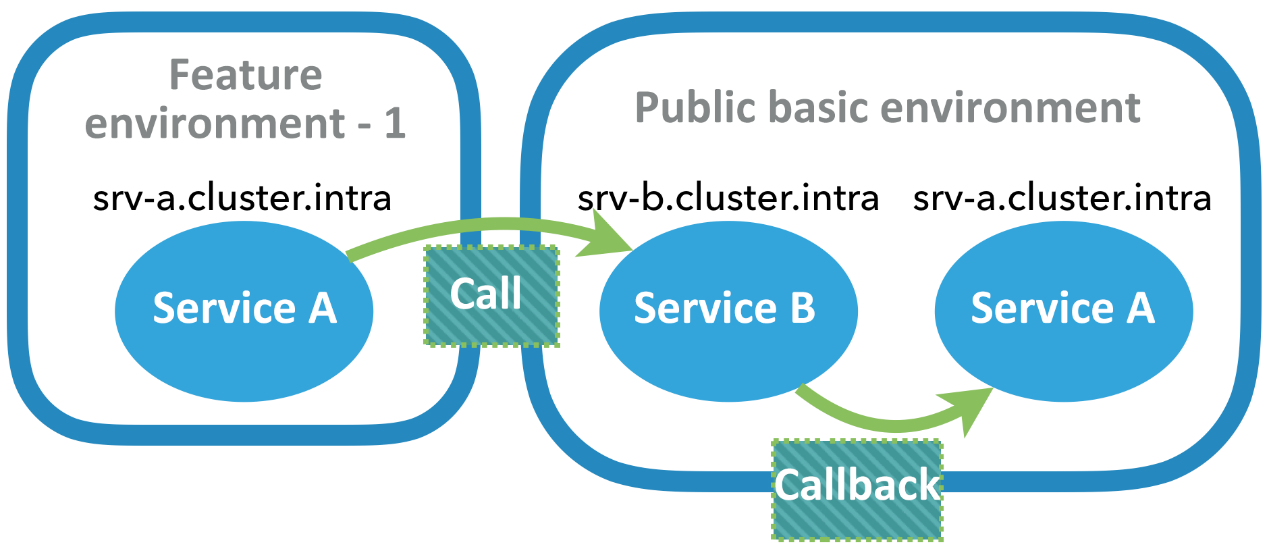

Isn't this just a matter of dynamically modifying the routing address behind a domain name, or the delivery address behind a message topic? In fact, it is not that simple, because the routes of the shared basic environment cannot be modified for the sake of one particular feature environment. An ordinary routing mechanism can therefore only achieve one-way target control: routing is correct only when a service in the feature environment actively initiates the call. If the initiator of a request is in the shared basic environment, it has no way of knowing which feature environment to send the request to. For HTTP requests, callbacks are especially hard to handle: when a service in the shared basic environment makes a callback, domain name resolution targets the service with the same name in the shared basic environment.

How can data be routed and delivered correctly in both ways? Let's go back to the essence of the problem: Which feature environment the request should go into is relevant to the initiator of the request. Therefore, the key to implement two-way binding lies in identifying the feature environment in which the request initiator is located and conducting end-to-end routing control. This process is somewhat similar to "phased release" and can be solved using a similar approach.

Thanks to Alibaba's technical accumulation in the middleware field and the widespread use of tracing tools such as EagleEye, it is easy to identify request initiators and trace callback links, which makes routing control straightforward. To use a feature environment, the user needs to "join" the environment. This operation associates a user identifier (such as an IP address or user ID) with the specified feature environment. Each user can belong to only one feature environment at a time. When a request passes through routing middleware (such as a message queue, message gateway, or HTTP gateway) and its initiator is identified as currently belonging to a feature environment, the request is routed to the service in that environment. If the environment does not contain the target service, the request is routed or delivered to the shared basic environment.
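The "join" operation and the initiator-based routing decision can be illustrated with a toy model. This sketch assumes that the initiator's identifier travels with every hop of a request, as a tracing context would carry it. The `RoutingMiddleware` class and its methods are hypothetical and stand in for a real gateway or message queue.

```python
from __future__ import annotations

SHARED_BASE = "shared-base"  # hypothetical label for the shared basic environment


class RoutingMiddleware:
    """Toy stand-in for a gateway or message queue that routes by initiator."""

    def __init__(self) -> None:
        self.membership: dict[str, str] = {}     # user id -> feature environment
        self.deployed: dict[str, set[str]] = {}  # feature environment -> services

    def deploy(self, env: str, service: str) -> None:
        self.deployed.setdefault(env, set()).add(service)

    def join(self, user: str, env: str) -> None:
        # Plain assignment: joining another environment replaces the old one,
        # so a user belongs to at most one feature environment at a time.
        self.membership[user] = env

    def route(self, initiator: str, target: str) -> str:
        """Pick the environment whose copy of `target` handles the request."""
        env = self.membership.get(initiator)
        if env is not None and target in self.deployed.get(env, set()):
            return env
        return SHARED_BASE


mw = RoutingMiddleware()
mw.deploy("feature-1", "transaction")
mw.join("alice", "feature-1")

# alice calls authentication (only in the base), which calls back to transaction.
# Because the initiator id accompanies the request, the callback issued from the
# shared basic environment still reaches alice's feature environment.
hops = [mw.route("alice", "authentication"), mw.route("alice", "transaction")]
print(hops)                            # ['shared-base', 'feature-1']
print(mw.route("bob", "transaction"))  # shared-base (bob has not joined anything)
```

Keying the decision on the initiator rather than on the caller's location is what makes the routing work in both directions.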

The feature environment does not exist in isolation. It can be built on top of container technology for greater flexibility. Just as building containers on virtual machines makes infrastructure easier to obtain, deploying services quickly and dynamically through containers means that users can add a service that needs to be modified or debugged to a feature environment at any time, or destroy a service in the environment at any time, after which the shared basic environment automatically takes over for it.

Another problem is service cluster debugging.

Combined with the feature-branch workflow of AoneFlow, deploying the feature branches of several services to the same feature environment allows real-time joint debugging of multiple features, so the feature environment can also be used for integration testing. However, although a feature environment is cheap to create, its services are still deployed on the test cluster. Every time you modify the code, you have to wait for the pipeline to build and deploy it. This saves resources but does not save time.

To further reduce costs and improve efficiency, the Alibaba team members have come up with another idea: to add local development machines to the feature environment. Within the Group, both the development machine and the test environment use the intranet IP address, so it is not difficult to directly route the requests from the specific test environment to the development machine with some modifications. This means that, even if a user in the feature environment accesses a service that actually comes from the shared basic environment, some of the services on the subsequent processing link can also come from the feature environment or even from the local environment. In this way, debugging services in the cluster becomes simple without waiting a long time for the pipeline to be built, as if the entire test environment runs locally.

Do you think service-level virtualization is too niche and out-of-reach for ordinary developers? This is not the case. You can immediately build a feature environment yourself to give it a try.

The Alibaba feature environment implements two-way routing service-level virtualization, which includes various common service communication methods, such as the HTTP call, the RPC call, the message queue, and the message notification. It can be challenging to complete such a fully functional test environment. From a general-purpose point of view, you can start with the most popular HTTP protocol and build a simple feature environment that supports one-way routing.

To facilitate environment management, it is best to have a cluster that can run containers. In the open-source community, full-featured Kubernetes is a good choice. Some concepts related to routing control in Kubernetes are displayed as resource objects to users.

Briefly, the Namespace object isolates the routing domain of services. It is not the same as the kernel namespace used for container isolation, so don't confuse the two. The Service object specifies the routing target and name of a service. The Deployment object corresponds to the service that is actually deployed. A Service object of type ClusterIP (as do the NodePort and LoadBalancer types, which are ignored for the moment) routes to a real service in the same Namespace, while a Service object of type ExternalName acts as a routing proxy for an external service in the current Namespace. All of these resource objects can be described in YAML files. With this background, you can start to build the feature environment.

We skip the setup of the infrastructure and the Kubernetes cluster and get straight to the point. First, prepare a public infrastructure environment to serve as the routing fallback. This is the shared basic environment: a full-scale test environment that includes all services and other infrastructure of the system under test. External access is not considered for the moment. The Service objects of all services in this environment can use the ClusterIP type. Assume that they live in a Namespace named pub-base-env. Kubernetes then automatically gives each service in this environment a short name that resolves inside the Namespace (the service name itself) and a cluster-wide domain name of the form "service name.pub-base-env.svc.cluster.local", assuming the default cluster domain cluster.local.

With this fallback in place, you can start to build a feature environment. The simplest feature environment contains only one real service (such as trade-service), and all other services are proxied to the public infrastructure environment through ExternalName type Service objects. Assume that the environment uses a Namespace named feature-env-1. Its YAML is as follows (non-key fields are omitted):

    kind: Namespace
    metadata:
      name: feature-env-1
    ---
    # The one real service in this feature environment
    kind: Service
    metadata:
      name: trade-service
      namespace: feature-env-1
    spec:
      type: ClusterIP
      # ...
    ---
    kind: Deployment
    metadata:
      name: trade-service
      namespace: feature-env-1
    spec:
      # ...
    ---
    # Proxy to the service of the same name in the public infrastructure environment
    kind: Service
    metadata:
      name: order-service
      namespace: feature-env-1
    spec:
      type: ExternalName
      # Suffix assumes the default cluster domain, cluster.local
      externalName: order-service.pub-base-env.svc.cluster.local
      # ...
    ---
    kind: Service
    # ...

Note that order-service can be accessed in the current feature environment Namespace by its local name, order-service, and the request will be routed to the global domain name order-service.pub-base-env.svc.cluster.local configured in the Service. That is, it will be routed to the service with the same name in the public infrastructure environment for processing. Other services in the Namespace do not perceive this difference. Instead, they may assume that all related services are deployed in this Namespace.
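Writing one Service object per proxied service by hand becomes tedious as the system grows, so the same pattern can be generated. The following is a minimal sketch that builds the Service manifests as plain Python dictionaries. The helper names, the `app: <name>` selector label, port 80, the `auth-service` entry, and the `cluster.local` cluster domain are assumptions for illustration. Deployment objects are left out.

```python
from __future__ import annotations

import json

BASE_NAMESPACE = "pub-base-env"
CLUSTER_DOMAIN = "cluster.local"  # assumption: the Kubernetes default cluster domain


def service_manifest(name: str, namespace: str, real: bool) -> dict:
    """Build a Service that is either real (ClusterIP) or a proxy (ExternalName)."""
    manifest: dict = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
    }
    if real:
        # Assumed convention: pods carry an `app: <name>` label and expose port 80.
        manifest["spec"] = {
            "type": "ClusterIP",
            "selector": {"app": name},
            "ports": [{"port": 80}],
        }
    else:
        # Proxy to the service of the same name in the shared basic environment.
        manifest["spec"] = {
            "type": "ExternalName",
            "externalName": f"{name}.{BASE_NAMESPACE}.svc.{CLUSTER_DOMAIN}",
        }
    return manifest


def feature_env_services(namespace: str, all_services: list[str],
                         real_services: set[str]) -> list[dict]:
    """One Service per known service: real if deployed here, proxy otherwise."""
    return [service_manifest(s, namespace, s in real_services) for s in all_services]


manifests = feature_env_services(
    "feature-env-1",
    ["trade-service", "order-service", "auth-service"],  # auth-service is hypothetical
    {"trade-service"},
)
print(json.dumps(manifests, indent=2))
```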

If the developer modifies order-service while working in the feature environment, the modified version should be added to the environment. All you need to do is use the Kubernetes patch operation to change the order-service Service object to the ClusterIP type, and create a Deployment object in the current Namespace for the Service to be associated with.
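As a rough sketch of what such a patch might contain, the snippet below builds a JSON merge patch body. The function name, the `app: <name>` selector label, and the port number are assumptions. A real Service may need additional fields depending on the cluster version.

```python
import json


def promote_to_real_service(name: str, port: int) -> dict:
    """Merge-patch body that turns an ExternalName proxy into a ClusterIP Service."""
    return {
        "spec": {
            "type": "ClusterIP",
            "externalName": None,       # JSON null removes the field in a merge patch
            "selector": {"app": name},  # assumed label on the Deployment's pods
            "ports": [{"port": port}],  # a ClusterIP Service needs at least one port
        }
    }


patch = promote_to_real_service("order-service", 80)
print(json.dumps(patch))
```

The printed JSON could then be passed to something like `kubectl patch service order-service -n feature-env-1 --type merge -p '<json>'`, after creating the matching Deployment.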

The modified Service object is only valid for services within the corresponding Namespace (that is, the corresponding feature environment). It cannot affect requests called back from the public infrastructure environment, so the routing is one-way. In this case, the feature environment must contain the entry service of the call chain under test, as well as any service that contains callback operations. For example, if the feature under test is triggered from the user interface, the service that provides the user interface is the entry service. Even if that service has not been modified, its mainline version should be deployed in the feature environment.

With this mechanism, it is not difficult to replace part of the cluster's services with local services for debugging and development. If the cluster and the local host are both on the intranet, point the corresponding Service object in the feature environment at the local IP address and service port. Note that an ExternalName Service expects a DNS name rather than a raw IP address and carries no port mapping, so for a bare IP address a Service without a selector, backed by a manually created Endpoints object, is the usual choice. Otherwise, you need to add a public network route for the local service and implement this function through dynamic domain name resolution.
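Because an ExternalName Service expects a DNS name rather than a raw IP address, one way to point a service name at a developer's machine is a Service without a selector plus a manually managed Endpoints object. The sketch below builds both manifests as dictionaries. The function name, the IP address 10.0.0.12, and port 8080 are hypothetical. Newer clusters may prefer EndpointSlice objects over Endpoints.

```python
import json


def local_host_manifests(name: str, namespace: str, ip: str, port: int) -> list:
    """Service + Endpoints pair that sends traffic for `name` to a fixed IP."""
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        # No selector: Kubernetes does not manage the endpoints for this Service.
        "spec": {"ports": [{"port": port, "targetPort": port}]},
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        # Same name and namespace as the Service, which is how the two are linked.
        "metadata": {"name": name, "namespace": namespace},
        "subsets": [{"addresses": [{"ip": ip}], "ports": [{"port": port}]}],
    }
    return [service, endpoints]


local = local_host_manifests("order-service", "feature-env-1", "10.0.0.12", 8080)
print(json.dumps(local, indent=2))
```

Other services in the feature environment keep calling `order-service` as before, and the traffic ends up on the development machine.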

At the same time, Yunxiao is gradually improving the Kubernetes-based feature environment solution, which will provide more comprehensive routing isolation support. It is worth mentioning that, due to the particularity of the public cloud, it is a challenge that must be overcome to join the local host to the cluster on the cloud during joint debugging. Therefore, Yunxiao has implemented the method of joining the local LAN host (without a public IP address) to the Kubernetes cluster in a different Intranet for joint debugging through the routing capability of the tunnel network + kube-proxy. The technical details will also be announced in the near future on the official Yunxiao WeChat account, so stay tuned.

While many people are still waiting for the arrival of the next wave of virtualization technology after virtual machines and containers, Alibaba has already provided an answer. The mentality of entrepreneurs makes people in Alibaba understand that they need to save every penny. In fact, it is not technology but imagination that limits innovation. The concept of service-level virtualization breaks through the traditional cognition of environmental replica, and resolves the balancing issue between cost and stability of test environments from a unique perspective.

As a special technical carrier, the value of the feature environment lies not only in the lightweight test environment management experience, but also in bringing a smooth way of working for every developer, which is actually "minimal but not simple".

Experience is the best teacher. Alibaba Yunxiao has contributed enormously to the methodology for collaborating on large products. Its approaches to engineering tasks and technical challenges, such as agile and rapid product iteration, hosting huge amounts of data, highly effective testing tools, distributed second-level structure, and large-scale cluster deployment and release, draw on contributions from internal Alibaba Group teams, ecosystem partners, and cloud developers. We sincerely welcome colleagues from across the industry to discuss and communicate with us.
