Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

SQL is a long-standing, special language. In relational databases, we have discovered and accumulated a lot of experience in performance optimization.

After introducing SQL to MapReduce and Spark, we have significantly improved development efficiency. At the same time, referring to the experience in relational databases, we may need to do performance optimization on more operations.

This article mentions that Shuffle is an operation that drags down performance and highlights Spark's efforts on Shuffle. These optimization effects will continue to play a role in Spark SQL (such as the group by), which we often write in SQL.

In addition to Shuffle, there is another operation in SQL that significantly slows down the performance: Join.

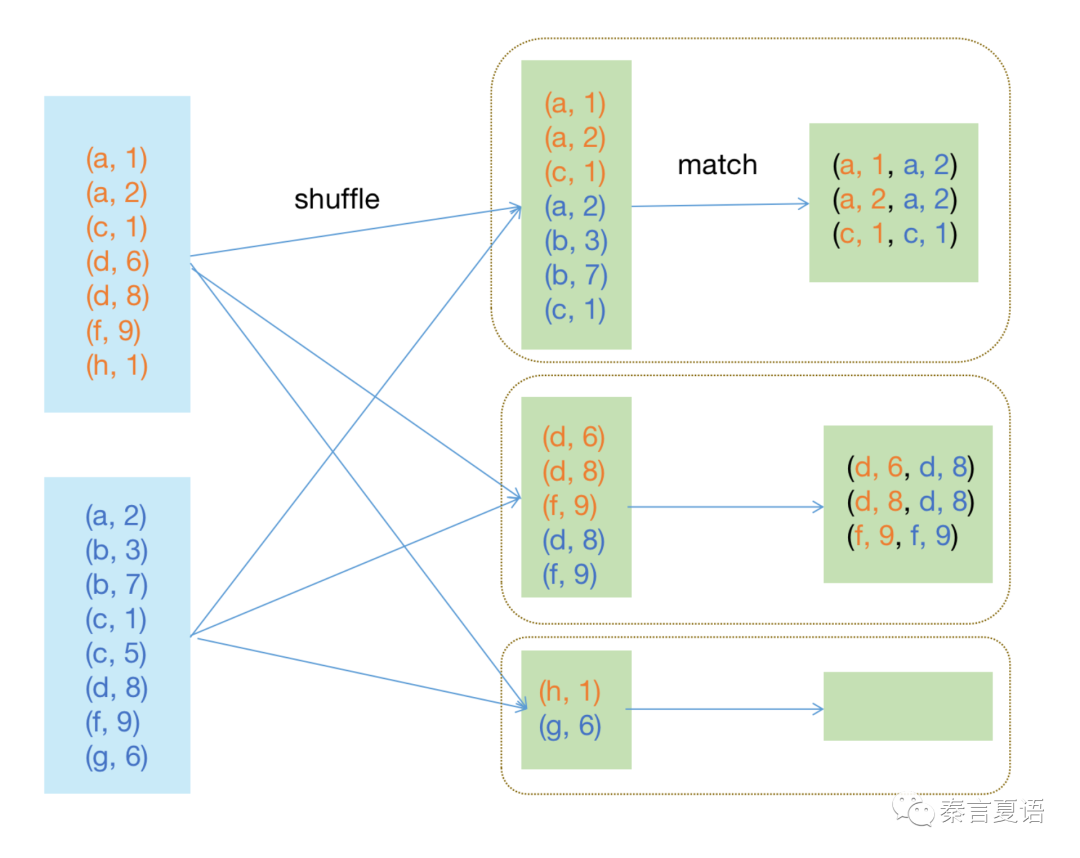

Let’s take the most common two-table equi join as an example. The diagram is listed below:

The equi join involves shuffling the two tables separately, grouping the same keys together, and matching and filtering out the data with the same value. The first step is shuffling the two tables separately, which has been optimized before. The second step is matching the data, which also involves a large amount of I/O, so it is likely to cause performance problems.

Therefore, the optimization of Join should focus on how to match data more efficiently after shuffling.

Let’s take an SQL statement for querying a transfer record as an example:

select

*

from

sender join transaction

on

sender.id=transaction.sender_idThe most intuitive way is to judge by a cycle. The following is the pseudo-code:

for i in sender:

for j in transaction:

if i.id == j.sender_id:

...The complexity of this Cartesian product algorithm is O(m*n), where m and n are the numbers of rows in the two tables, respectively.

It is inefficient, and no one will use this method in actual production. We often remind everyone that Join must bring conditions. Otherwise, you have to compute the Cartesian product.

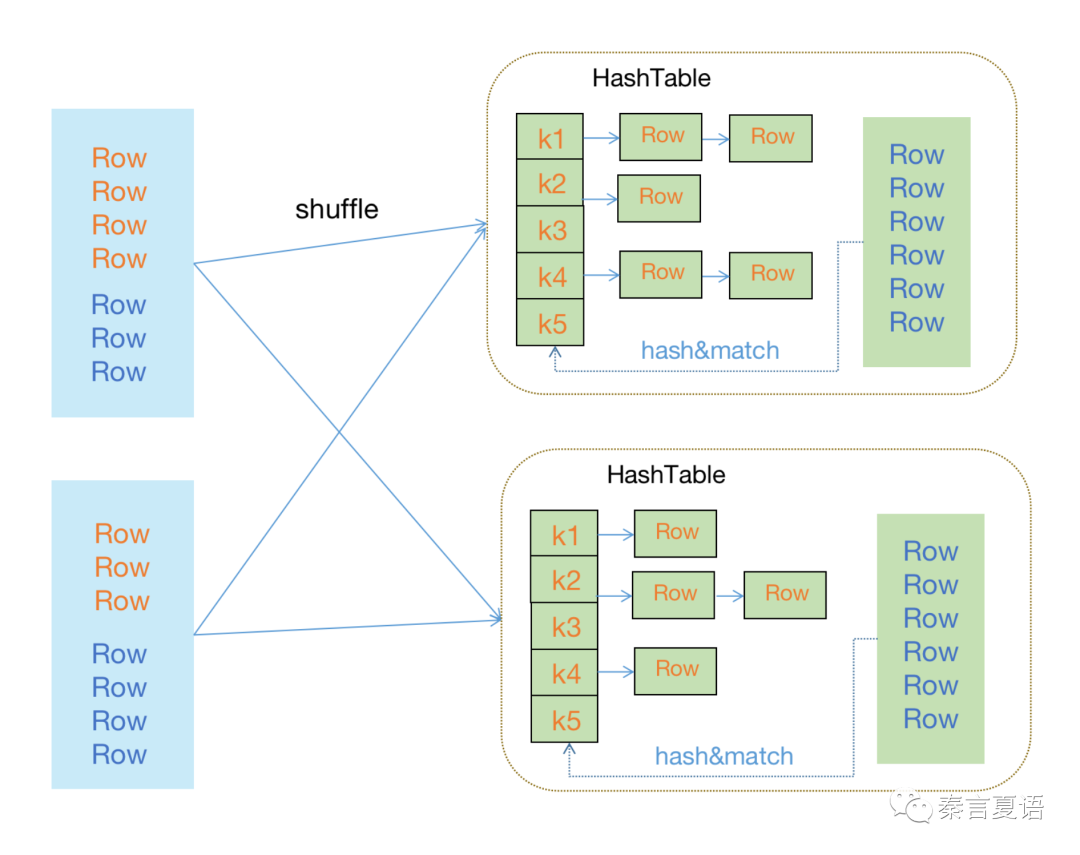

People who once were immersed in algorithms can quickly find that the fastest way to judge whether two elements are equal or whether an element exists is to use HashTable. It is also called Hash Join or Shuffled Hash Join.

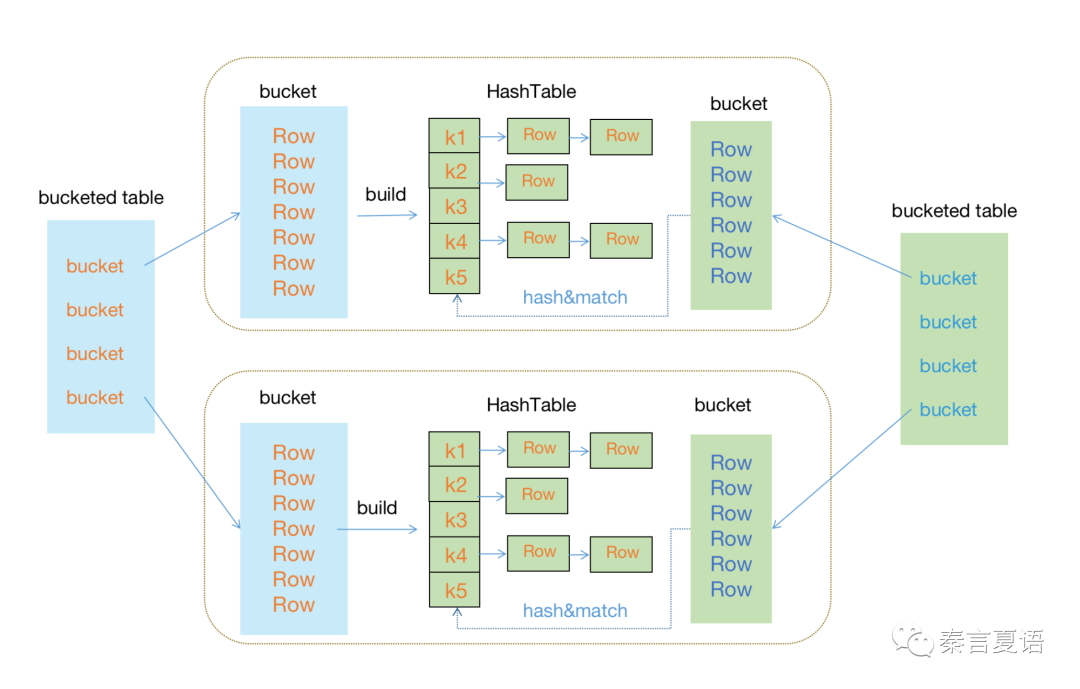

As shown in the figure above, Shuffled Hash Join is divided into three steps:

As we can see, Hash Join's algorithm complexity is O(m + n), which is much simpler than the Cartesian product.

In essence, Hash Join takes full advantage of the stability of Hash functions and is a typical example of space-time trade off.

However, when both tables are large, the space overhead will be exaggerated, the failure of OOM may occur, and the processing system may crash.

We need an algorithm that doesn't put all the join keys of a table in memory.

The complexity of Hash Join is already O(m+n) just by traversing two tables. If you want to do matching, you have to traverse the entire table.

This method doesn't seem to work.

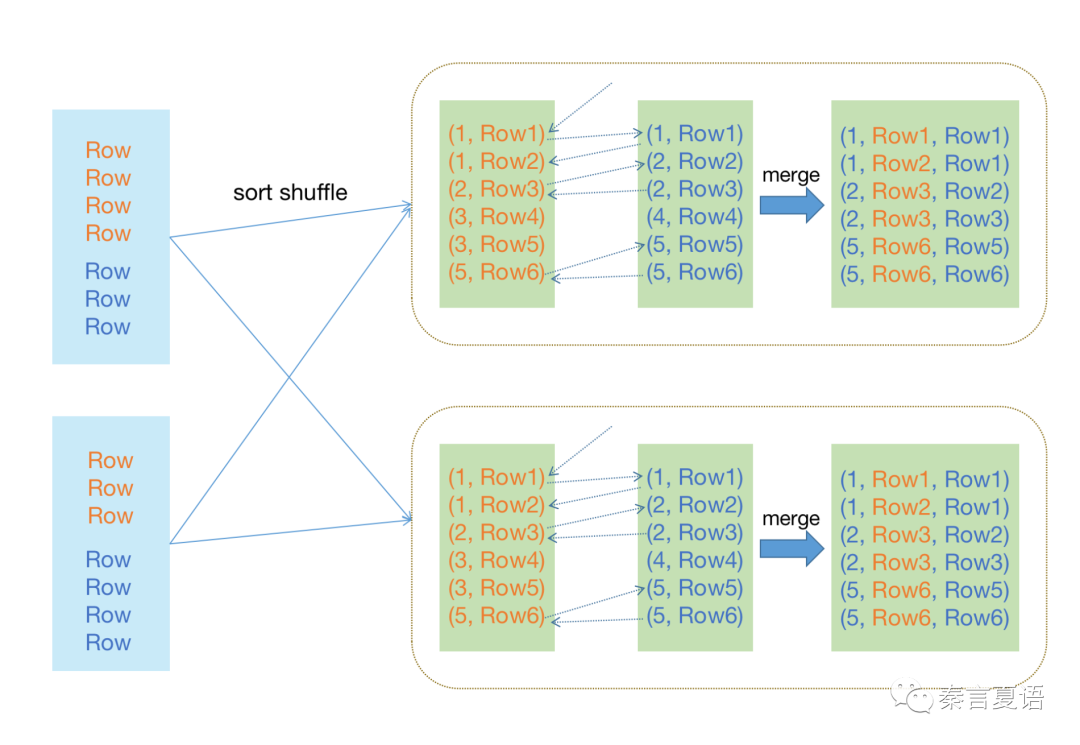

Let's think of it in a different way. Where does the Join data come from? It's the output of in Shuffle stage.

As we have mentioned in the article about Shuffle (Part 17 of this series), although Hive and Spark SQL support many Shuffle methods, Sort Shuffle is used in most cases.

In other words, the input of Join is sorted. We have to match the two sorted arrays.

There is no need to traverse the entire table. If the keys of the current row and the target row are not the same, you only need to continue to traverse until the key queried is larger than the key of the target row. Since the keys of the later rows are larger than those of the current row, there is no need to continue traversing.

As Sort Merge Join does not need to build a hash table in memory, it saves a large amount of memory overhead and provides a time complexity similar to Hash Join.

This allows Sort Merge Join to effectively handle the join between large tables, which many Join algorithms are not very good at.

With Hash Join and Sort-Merge Join, we can perform Join efficiently, no matter how big the table is. It seems to be a happy ending.

However, from the Sort-Merge Join example, we can see that the two steps of Shuffle and Match are not separated but affect each other.

Sort-Merge Join is an example of Match benefiting from Shuffle. On the other hand, is it possible that Match can also benefit Shuffle? After all, Shuffle affects performance too much.

However, we have already talked about Shuffle in detail before. It seems there is not much room for optimization, only if the Shuffle process can be eliminated.

Removing the Shuffle process is not nonsense. Both Join and Group By operations require shuffle. The purpose is to use the computing power brought by cluster scalability to process data in a distributed manner. The difference is that Join focuses on the multi-table scenario while Group By focuses on the single-table scenario.

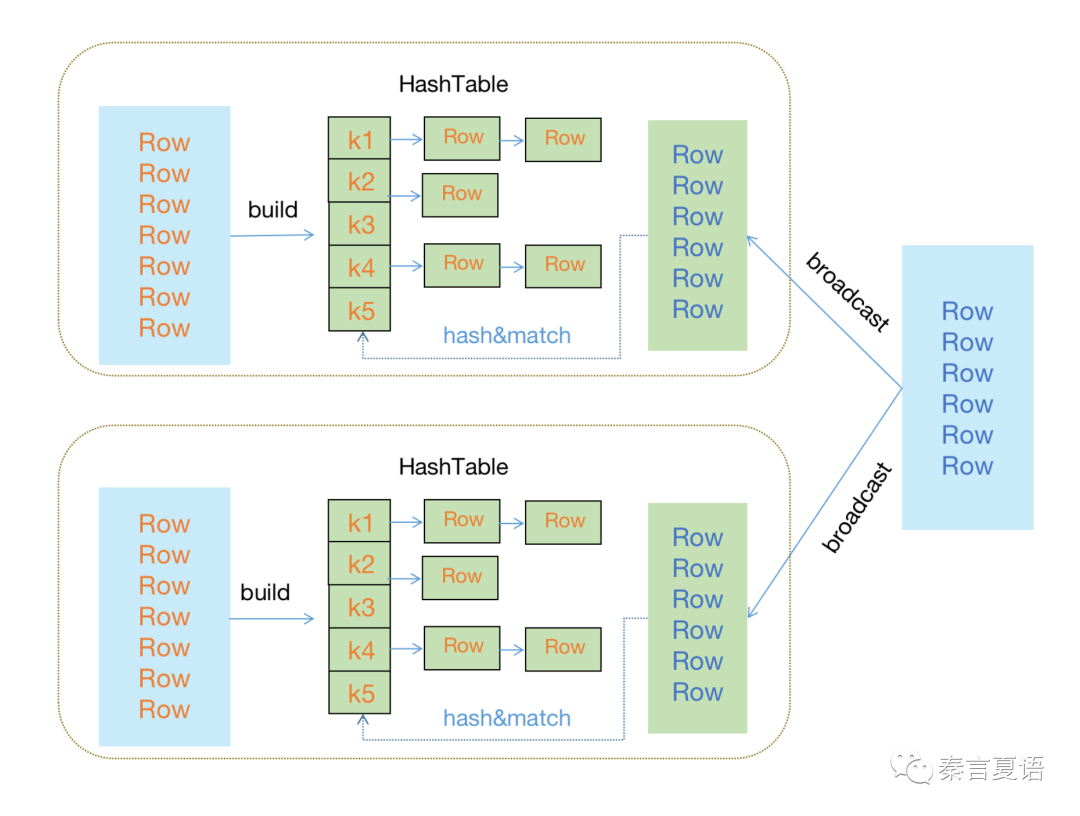

It is sufficient to shuffle one table to partition data.

At the same time, a correct process is essential, so we should send the entire table to all the shuffled partitions of the other table.

This method is only suitable for small tables. Otherwise, the I/O caused by replication and the memory overhead caused by building HashTable will not be lower than those caused by shuffle.

In order to improve performance further, small tables are not sent to each partition but to nodes or executors. This way, tasks on the same node or executor can reuse small tables.

After sending small tables, the subsequent join step is similar to the Hash Join step.

This algorithm is widely used. It is called Broadcast Join in Spark and Map Join in Hive. Compared with Hash Join and Sort-Merge Join in Reduce phase, Map Join directly completes data association and filtering in the Map phase.

Map Join works well, but it is only applicable to the Join between a large table and a small table. If it is a Join between one large table and another large table, only Sort-Merge Join can be used.

However, the Join between one large table and another large table is common and consumes a large number of resources. It would be great if Sort-Merge Join could also achieve the effect of Map Join in terms of resource saving.

The only way to perform Map Join without broadcasting the entire table to each node and putting it into memory is by only broadcasting the part of the data we need.

That means we can broadcast the needed data in corresponding partitions.

How do we know which partitions of one table correspond to which partitions of another table? How do we know in advance before the Join?

There is only one possibility that both tables partition the Join key in the same way.

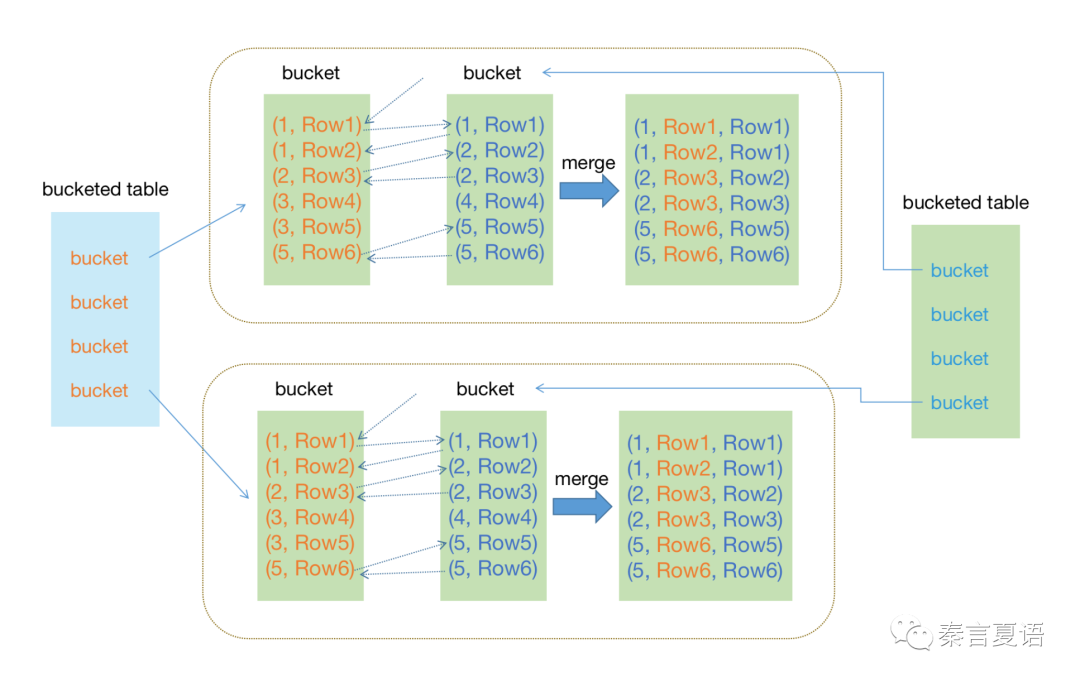

Thus, the concept of bucket is abstracted from this approach.

When data is written to the two tables, bucketing is performed on the Join key in a fixed number. This way, subsequent read operations can automatically complete Join in the Map phase instead of shuffling to the Reduce phase.

Bucket Join can be regarded as the bucket-level map Join, also called Bucket Map Join.

However, if the buckets of the two tables are very large, we can refer to the previous thinking and change the method of matching data from hash algorithm to sort merge, thus having the Sort Merge Bucket Join or Sort Merge Bucket Map Join (SMB Map Join).

The following lists the key points of this article:

Currently, Hive and Spark SQL support several of the main Join methods above. You can view the source code for more information. We recommend focusing on the XXXJoinOperator class in Hive and the XXXJoinExec class in Spark SQL.

However, it should be noted that the Join of Hive and Spark SQL is not completely compatible. Bucket Join is the most typical example. Both Hive and Spark SQL support bucketing. However, each bucket has a different number of files and uses different hash algorithms. Some modifications are required to make the two compatible.

You can set various parameters and threshold values to ENABLE to automatically select the Join types or specify the Join type using hints. We focus on the ideas in this article, and the specific usage will not be repeated.

The common data skew problem and Skew Join can be solved automatically. This kind of Join is not the general Join, and it is not described in detail here. If you are interested, you can research and learn about it.

SQL is really powerful. There are many operations that can be optimized besides Join.

In the next article, let's look at another important SQL optimization method: rule-based optimization (RBO).

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!

Learning about Distributed Systems – Part 18: Run AND Write Fast

Learning about Distributed Systems - Part 20: Rule-Based Optimization (RBO)

64 posts | 59 followers

FollowAlibaba Clouder - April 21, 2020

Alibaba Cloud_Academy - July 24, 2023

Alibaba Clouder - April 26, 2020

ApsaraDB - November 17, 2020

Alibaba Cloud_Academy - October 7, 2023

Alibaba Cloud_Academy - July 25, 2023

64 posts | 59 followers

Follow Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn More Hybrid Cloud Storage

Hybrid Cloud Storage

A cost-effective, efficient and easy-to-manage hybrid cloud storage solution.

Learn More Hybrid Cloud Distributed Storage

Hybrid Cloud Distributed Storage

Provides scalable, distributed, and high-performance block storage and object storage services in a software-defined manner.

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn MoreMore Posts by Alibaba Cloud_Academy