By Wang Yi (Weile)

Let's get familiar with Java 8 Lambda. This article explores the design and implementation of Lambda, and of the Stream pipeline that executes it, at the source-code level.

First, look at the following sample code:

    static class A {
        @Getter
        private String a;
        @Getter
        private Integer b;

        public A(String a, Integer b) {
            this.a = a;
            this.b = b;
        }
    }

    public static void main(String[] args) {
        List<Integer> ret = Lists.newArrayList(new A("a", 1), new A("b", 2), new A("c", 3)).stream()
            .map(A::getB)
            .filter(b -> b >= 2)
            .collect(Collectors.toList());
        System.out.println(ret);
    }

In the preceding code, there are a few key steps.

ArrayList.stream resolves to the default Collection.stream method, which ArrayList inherits.

    default Stream<E> stream() {
        return StreamSupport.stream(spliterator(), false);
    }

The spliterator() method returns a Spliterator, which is an iterator that can be split. ArrayList overrides spliterator() and returns an ArrayListSpliterator; the default Collection.spliterator() would return an IteratorSpliterator instead. Splitting is mainly used for parallel operations in parallelStream. The preceding example calls stream, so parallel=false.
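To make the idea of a splittable iterator concrete, here is a minimal sketch. The class name SpliteratorDemo and the helper splitSizes are hypothetical names chosen for illustration; only list.spliterator(), trySplit(), estimateSize(), and isParallel() are standard JDK calls.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Spliterator;

class SpliteratorDemo {
    // Splits the list's spliterator once and returns the estimated sizes of both parts.
    static long[] splitSizes(List<Integer> list) {
        Spliterator<Integer> rest = list.spliterator();
        // trySplit hands off a prefix of the elements; it may return null if it cannot split.
        Spliterator<Integer> prefix = rest.trySplit();
        long prefixSize = (prefix == null) ? 0 : prefix.estimateSize();
        return new long[] {prefixSize, rest.estimateSize()};
    }

    public static void main(String[] args) {
        List<Integer> list = new ArrayList<>(Arrays.asList(1, 2, 3, 4, 5, 6));
        long[] sizes = splitSizes(list);
        System.out.println("prefix=" + sizes[0] + ", rest=" + sizes[1]);
        // stream() is sequential, parallelStream() sets the parallel flag.
        System.out.println(list.stream().isParallel());
        System.out.println(list.parallelStream().isParallel());
    }
}
```

A parallel stream uses this kind of splitting to hand parts of the source to different worker threads, while a sequential stream simply traverses the unsplit spliterator.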

StreamSupport.stream finally generates the ReferencePipeline.Head object.

    public static <T> Stream<T> stream(Spliterator<T> spliterator, boolean parallel) {
        Objects.requireNonNull(spliterator);
        return new ReferencePipeline.Head<>(spliterator,
                                            StreamOpFlag.fromCharacteristics(spliterator),
                                            parallel);
    }

The Head class is derived from ReferencePipeline and represents the head node in Lambda's pipeline.

Calling .map on this Head object invokes the map method of its base class, ReferencePipeline.

    public final <R> Stream<R> map(Function<? super P_OUT, ? extends R> mapper) {
        Objects.requireNonNull(mapper);
        return new StatelessOp<P_OUT, R>(this, StreamShape.REFERENCE,
                                         StreamOpFlag.NOT_SORTED | StreamOpFlag.NOT_DISTINCT) {
            @Override
            Sink<P_OUT> opWrapSink(int flags, Sink<R> sink) {
                return new Sink.ChainedReference<P_OUT, R>(sink) {
                    @Override
                    public void accept(P_OUT u) {
                        downstream.accept(mapper.apply(u));
                    }
                };
            }
        };
    }

It returns a StatelessOp, which represents a stateless operator and is itself a subclass of ReferencePipeline. In the constructor call, the first argument "this" makes the Head object the upstream of the new StatelessOp. The StatelessOp.opWrapSink method will be discussed later.

Next, filter is called on that StatelessOp, which again resolves to the ReferencePipeline.filter method.

    public final Stream<P_OUT> filter(Predicate<? super P_OUT> predicate) {
        Objects.requireNonNull(predicate);
        return new StatelessOp<P_OUT, P_OUT>(this, StreamShape.REFERENCE,
                                             StreamOpFlag.NOT_SIZED) {
            @Override
            Sink<P_OUT> opWrapSink(int flags, Sink<P_OUT> sink) {
                return new Sink.ChainedReference<P_OUT, P_OUT>(sink) {
                    @Override
                    public void begin(long size) {
                        downstream.begin(-1);
                    }

                    @Override
                    public void accept(P_OUT u) {
                        if (predicate.test(u))
                            downstream.accept(u);
                    }
                };
            }
        };
    }

As you can see, another StatelessOp object is created. This time its upstream is the StatelessOp returned by map rather than the Head.

Finally, collect is called on the StatelessOp, which resolves to the ReferencePipeline.collect method.

    public final <R, A> R collect(Collector<? super P_OUT, A, R> collector) {
        A container;
        if (isParallel()
                && (collector.characteristics().contains(Collector.Characteristics.CONCURRENT))
                && (!isOrdered() || collector.characteristics().contains(Collector.Characteristics.UNORDERED))) {
            container = collector.supplier().get();
            BiConsumer<A, ? super P_OUT> accumulator = collector.accumulator();
            forEach(u -> accumulator.accept(container, u));
        }
        else {
            container = evaluate(ReduceOps.makeRef(collector));
        }
        return collector.characteristics().contains(Collector.Characteristics.IDENTITY_FINISH)
               ? (R) container
               : collector.finisher().apply(container);
    }

In the previous steps, the .map and .filter methods only create StatelessOp objects, but the collect method is different. If you are familiar with Spark/Flink, you will recognize collect as an action/sink: calling it triggers the execution of every operator on the stream. This is lazy execution. The intermediate operators only describe the pipeline, and nothing runs until an action is encountered.
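The lazy behavior described above can be observed directly. The following is a small sketch, not code from the JDK; the class name LazyDemo and its log field are hypothetical. It records when each lambda runs relative to the point where the pipeline has been built.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class LazyDemo {
    // Records the order in which the lambdas are actually invoked.
    static final List<String> log = new ArrayList<>();

    static List<Integer> run() {
        log.clear();
        // Building the pipeline only creates stage objects; no lambda runs yet.
        Stream<Integer> pipeline = Arrays.asList(1, 2, 3).stream()
            .map(x -> { log.add("map " + x); return x * 10; })
            .filter(x -> { log.add("filter " + x); return x >= 20; });
        log.add("built");
        // The terminal operation pushes each element through map, then filter.
        return pipeline.collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(run());
        System.out.println(log);
    }
}
```

The "built" entry is logged before any "map" or "filter" entry, and the entries for each element are interleaved (map, then filter) rather than grouped by operator.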

As mentioned earlier, this stream has parallel=false, so the logic that actually executes is:

    A container = evaluate(ReduceOps.makeRef(collector));
    return collector.characteristics().contains(Collector.Characteristics.IDENTITY_FINISH)
           ? (R) container
           : collector.finisher().apply(container);

Before entering the evaluate method, look at ReduceOps.makeRef(collector). It wraps the CollectorImpl instance created by Collectors.toList and returns a TerminalOp object (a ReduceOp).

    public static <T, I> TerminalOp<T, I>
    makeRef(Collector<? super T, I, ?> collector) {
        Supplier<I> supplier = Objects.requireNonNull(collector).supplier();
        BiConsumer<I, ? super T> accumulator = collector.accumulator();
        BinaryOperator<I> combiner = collector.combiner();
        class ReducingSink extends Box<I>
                implements AccumulatingSink<T, I, ReducingSink> {
            @Override
            public void begin(long size) {
                state = supplier.get();
            }

            @Override
            public void accept(T t) {
                accumulator.accept(state, t);
            }

            @Override
            public void combine(ReducingSink other) {
                state = combiner.apply(state, other.state);
            }
        }
        return new ReduceOp<T, I, ReducingSink>(StreamShape.REFERENCE) {
            @Override
            public ReducingSink makeSink() {
                return new ReducingSink();
            }

            @Override
            public int getOpFlags() {
                return collector.characteristics().contains(Collector.Characteristics.UNORDERED)
                       ? StreamOpFlag.NOT_ORDERED
                       : 0;
            }
        };
    }

As shown in the code, ReducingSink calls the collector's supplier, accumulator, and combiner directly. Note that ReducingSink is derived from Box, which has a state member that holds the computation state (a list in this example). ReducingSink uses this state to perform the accumulate and combine operations.
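The three functions that ReducingSink calls can be supplied by hand. The sketch below builds a list collector with Collector.of to show which role each function plays; the names CollectorSketch and toListSketch are hypothetical, and this is an illustration rather than the actual Collectors.toList implementation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collector;

class CollectorSketch {
    // A hand-written list collector, used only to show the roles of the three functions.
    static <T> Collector<T, List<T>, List<T>> toListSketch() {
        return Collector.of(
            ArrayList::new,                                         // supplier: creates the state in begin()
            List::add,                                              // accumulator: called once per accept()
            (left, right) -> { left.addAll(right); return left; }); // combiner: merges partial states
    }

    static List<Integer> run() {
        return Arrays.asList(1, 2, 3).stream()
            .filter(x -> x >= 2)
            .collect(toListSketch());
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

In a sequential stream only the supplier and the accumulator are exercised; the combiner matters when partial results from a parallel run are merged.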

Returning to the evaluate method, it calls:

    terminalOp.evaluateSequential(this, sourceSpliterator(terminalOp.getOpFlags()));

Here, "this" is the ReferencePipeline of the final stage, namely the StatelessOp created by filter. We call it ReferencePipeline$2, a pipeline that has gone through two operators. sourceSpliterator obtains the spliterator of sourceStage, which is the spliterator of the Head at the top.

ReduceOp.evaluateSequential:

    public <P_IN> R evaluateSequential(PipelineHelper<T> helper,
                                       Spliterator<P_IN> spliterator) {
        return helper.wrapAndCopyInto(makeSink(), spliterator).get();
    }

The helper is ReferencePipeline$2. Here, makeSink is the method overridden in the anonymous ReduceOp shown earlier, which returns a new ReducingSink.

wrapAndCopyInto is implemented in AbstractPipeline, the parent class of ReferencePipeline.

    copyInto(wrapSink(Objects.requireNonNull(sink)), spliterator);
    return sink;

wrapSink code:

    final <P_IN> Sink<P_IN> wrapSink(Sink<E_OUT> sink) {
        Objects.requireNonNull(sink);
        for ( @SuppressWarnings("rawtypes") AbstractPipeline p=AbstractPipeline.this; p.depth > 0; p=p.previousStage) {
            sink = p.opWrapSink(p.previousStage.combinedFlags, sink);
        }
        return (Sink<P_IN>) sink;
    }

As you can see, this calls the opWrapSink method of each pipeline stage from back to front, which is the chain-of-responsibility pattern.
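The back-to-front wrapping can be imitated with plain Consumer objects. This is a simplified sketch under stated assumptions: Stage, map, filter, and wrapSink below are hypothetical stand-ins written for this illustration, not the JDK's Sink or AbstractPipeline classes, and the element type is fixed to Integer to keep it short.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;

class SinkChainSketch {
    // Hypothetical stand-in for a stage's opWrapSink: wraps the downstream consumer.
    interface Stage {
        Consumer<Integer> wrap(Consumer<Integer> downstream);
    }

    static Stage map(Function<Integer, Integer> mapper) {
        return downstream -> u -> downstream.accept(mapper.apply(u));
    }

    static Stage filter(Predicate<Integer> predicate) {
        return downstream -> u -> { if (predicate.test(u)) downstream.accept(u); };
    }

    // Walks the stages from last to first, so the first stage ends up outermost.
    static Consumer<Integer> wrapSink(List<Stage> stages, Consumer<Integer> terminal) {
        Consumer<Integer> sink = terminal;
        for (int i = stages.size() - 1; i >= 0; i--) {
            sink = stages.get(i).wrap(sink);
        }
        return sink;
    }

    static List<Integer> run() {
        List<Integer> result = new ArrayList<>();
        List<Stage> stages = Arrays.asList(map(x -> x + 1), filter(x -> x >= 3));
        Consumer<Integer> head = wrapSink(stages, result::add);
        // Feeding the source into the outermost consumer drives the whole chain.
        Arrays.asList(1, 2, 3).forEach(head);
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Because the wrapping starts from the terminal consumer, the consumer that receives source elements is the one for the first operator, and each call flows downstream from there.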

Next is the copyInto method, which contains the real execution logic.

    final <P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator) {
        Objects.requireNonNull(wrappedSink);
        if (!StreamOpFlag.SHORT_CIRCUIT.isKnown(getStreamAndOpFlags())) {
            wrappedSink.begin(spliterator.getExactSizeIfKnown());
            spliterator.forEachRemaining(wrappedSink);
            wrappedSink.end();
        }
        else {
            copyIntoWithCancel(wrappedSink, spliterator);
        }
    }

Since the example contains no short-circuit operation, execution enters this branch:

    wrappedSink.begin(spliterator.getExactSizeIfKnown());
    spliterator.forEachRemaining(wrappedSink);
    wrappedSink.end();

The most important line here is the middle one. Since the collection held by the spliterator is an ArrayList, it calls the forEachRemaining method of ArrayList's spliterator (ArrayListSpliterator).

    public void forEachRemaining(Consumer<? super E> action) {
        // ...
        if ((i = index) >= 0 && (index = hi) <= a.length) {
            for (; i < hi; ++i) {
                @SuppressWarnings("unchecked") E e = (E) a[i];
                action.accept(e);
            }
            if (lst.modCount == mc)
                return;
        }
        // ...

The action parameter here is the Sink assembled by the chain of responsibility (Sink is a sub-interface of Consumer).

When action.accept is called here, the accept method of each operator is called layer by layer through the chain of responsibility, starting with the accept of map:

    @Override
    Sink<P_OUT> opWrapSink(int flags, Sink<R> sink) {
        return new Sink.ChainedReference<P_OUT, R>(sink) {
            @Override
            public void accept(P_OUT u) {
                downstream.accept(mapper.apply(u));
            }
        };
    }

As you can see, it calls mapper.apply and passes the result directly to downstream.accept, which is the accept of filter. Elements that pass the filter then reach ReducingSink.accept, which adds them to the state. Thus, once forEachRemaining has finished, the result is complete.

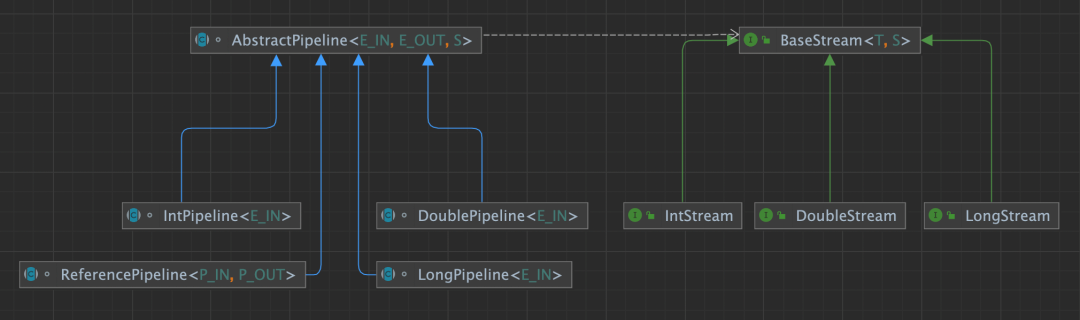

Having walked through the process, let's look at part of the class design in Lambda. First comes Stream. Its base interface is BaseStream, which declares the following methods:

    public interface BaseStream<T, S extends BaseStream<T, S>>
            extends AutoCloseable {
        /**
         * Return an iterator over the elements in the stream.
         */
        Iterator<T> iterator();

        /**
         * Return a spliterator over the elements in the stream, for parallel execution.
         */
        Spliterator<T> spliterator();

        /**
         * Whether the stream is parallel.
         */
        boolean isParallel();

        /**
         * Return a sequential stream, which forces parallel=false.
         */
        S sequential();

        /**
         * Return a parallel stream, which forces parallel=true.
         */
        S parallel();

        // ...
    }

This interface is extended by Stream and by the primitive stream interfaces IntStream, LongStream, and DoubleStream. They are interfaces that provide filter, map, mapToObj, distinct, and other operators on top of BaseStream. However, the operators of the primitive streams are type-specific: IntStream.filter accepts an IntPredicate instead of the conventional Predicate, and IntStream.map accepts an IntUnaryOperator.
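As a brief illustration of these type-specific operators, the sketch below passes an IntUnaryOperator and an IntPredicate to an IntStream and then switches back to an object stream with mapToObj. The class name IntStreamDemo and the variable names are hypothetical.

```java
import java.util.List;
import java.util.function.IntPredicate;
import java.util.function.IntUnaryOperator;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

class IntStreamDemo {
    static List<String> run() {
        // Primitive functional interfaces avoid boxing each int into an Integer.
        IntUnaryOperator square = x -> x * x;
        IntPredicate atLeastFour = x -> x >= 4;
        return IntStream.of(1, 2, 3)
            .map(square)              // IntStream.map takes an IntUnaryOperator
            .filter(atLeastFour)      // IntStream.filter takes an IntPredicate
            .mapToObj(x -> "v" + x)   // switches from IntStream to Stream<String>
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```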

IntStream, LongStream, and the other stream types are all interfaces that only describe operators. Their implementations are all based on Pipeline. The base class is AbstractPipeline, and it has several key member variables:

    /**
     * The top pipeline, which is Head.
     */
    private final AbstractPipeline sourceStage;

    /**
     * The direct upstream pipeline.
     */
    private final AbstractPipeline previousStage;

    /**
     * The direct downstream pipeline.
     */
    @SuppressWarnings("rawtypes")
    private AbstractPipeline nextStage;

    /**
     * The pipeline depth.
     */
    private int depth;

    /**
     * The spliterator of the Head.
     */
    private Spliterator<?> sourceSpliterator;

    // ...

This base class also provides the basic implementation of the pipeline, as well as the implementation of the BaseStream and PipelineHelper interfaces (such as evaluate, sourceStageSpliterator, wrapAndCopyInto, and wrapSink).

The subclasses derived from AbstractPipeline are IntPipeline, LongPipeline, DoublePipeline, and ReferencePipeline. The first three are easy to understand: they provide Lambda operations on primitive types (and each implements the corresponding XXStream interface), while ReferencePipeline provides object-based Lambda operations.

In the class hierarchy, these four subclasses are abstract, and each of them has three subclasses of its own: Head, StatelessOp, and StatefulOp. These describe the pipeline's head node, a stateless intermediate operator, and a stateful intermediate operator, respectively.

Head is a non-abstract class. StatelessOp is an abstract class: operators such as map, filter, and mapToObj create anonymous subclasses of it on the fly and implement the opWrapSink method there.

With this design, every operator except terminal ones such as collect returns an implementation of a stream interface. In IntPipeline, for example, map, flatMap, and filter all return IntStream. Even though the concrete object may be a StatelessOp, a Head, and so on, they all present a unified interface to the outside. At the same time, since the logic of each operator in Lambda is supplied dynamically (such as A::getB or b -> b >= 2), it is encapsulated by overriding the opWrapSink method for each operator.

At the same time, after separating the design of XXStream and XXPipeline, the simplicity of the Stream interface (the interface revealed to the user) can be maintained. Otherwise, if BaseStream is made into an abstract class and AbstractPipeline-related logic is moved to it, Stream will become bloated, and users will be confused when using it at the API level.

Pipeline creation is centralized in the StreamSupport class, which acts as a large factory class. Although ArrayList, Arrays, and other classes provide stream methods, they all end up calling StreamSupport to create the pipeline instance. Usually an XXPipeline.Head object is created, and the other Lambda operators are added through this object.
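StreamSupport is a public class, so the same factory can be called directly on anything that exposes a Spliterator. The following sketch assumes a plain Iterable as the source; the class name StreamSupportDemo is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.stream.StreamSupport;

class StreamSupportDemo {
    static List<Integer> run() {
        // Any Iterable exposes a spliterator, even if it has no stream() method of its own.
        Iterable<Integer> source = Arrays.asList(1, 2, 3);
        // The second argument is the parallel flag discussed earlier.
        Stream<Integer> stream = StreamSupport.stream(source.spliterator(), false);
        return stream.map(x -> x * 2).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```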

Next, let's look at a more complex example: concatenating two streams.

    static class Mapper1 implements IntUnaryOperator {
        @Override
        public int applyAsInt(int operand) {
            return operand * operand;
        }
    }

    static class Filter1 implements IntPredicate {
        @Override
        public boolean test(int value) {
            return value >= 2;
        }
    }

    static class Mapper2 implements IntUnaryOperator {
        @Override
        public int applyAsInt(int operand) {
            return operand + operand;
        }
    }

    static class Filter2 implements IntPredicate {
        @Override
        public boolean test(int value) {
            return value >= 10;
        }
    }

    static class Mapper3 implements IntUnaryOperator {
        @Override
        public int applyAsInt(int operand) {
            return operand * operand;
        }
    }

    static class Filter3 implements IntPredicate {
        @Override
        public boolean test(int value) {
            return value >= 10;
        }
    }

    public static void main(String[] args) {
        IntStream s1 = Arrays.stream(new int[] {1, 2, 3})
            .map(new Mapper1())
            .filter(new Filter1());
        IntStream s2 = Arrays.stream(new int[] {4, 5, 6})
            .map(new Mapper2())
            .filter(new Filter2());
        IntStream s3 = IntStream.concat(s1, s2)
            .map(new Mapper3())
            .filter(new Filter3());
        int sum = s3.sum();
    }

This code creates two IntStreams, s1 and s2, separately, concatenates them to produce s3, and then calls sum to reduce the result.

The analysis starts from the sink. sum is a reduce operation similar to the earlier collect, and it is executed on the s3 stream in the AbstractPipeline.evaluate method.

    terminalOp.evaluateSequential(this, sourceSpliterator(terminalOp.getOpFlags()));

Here, terminalOp is the ReduceOp for sum, and the sourceSpliterator is a Streams.ConcatSpliterator. evaluateSequential then calls the wrapAndCopyInto method of the s3 pipeline.

    final <P_IN, S extends Sink<E_OUT>> S wrapAndCopyInto(S sink, Spliterator<P_IN> spliterator) {
        copyInto(wrapSink(Objects.requireNonNull(sink)), spliterator);
        return sink;
    }

Here, wrapSink chains the operators of s3 together with the final reduce:

    Head(concatenated s1 + s2 stream) -> Mapper3 -> Filter3 -> ReduceOp(sum)

So far we have only seen the logic of s3. Where does the logic of the mappers and filters of s1 and s2 run? Let's look at the copyInto method again:

    final <P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator) {
        Objects.requireNonNull(wrappedSink);
        if (!StreamOpFlag.SHORT_CIRCUIT.isKnown(getStreamAndOpFlags())) {
            wrappedSink.begin(spliterator.getExactSizeIfKnown());
            spliterator.forEachRemaining(wrappedSink);
            wrappedSink.end();
        // ...

As mentioned before, the spliterator here is the Streams.ConcatSpliterator object. Here is the implementation of Streams.ConcatSpliterator.forEachRemaining:

    public void forEachRemaining(Consumer<? super T> consumer) {
        if (beforeSplit)
            aSpliterator.forEachRemaining(consumer);
        bSpliterator.forEachRemaining(consumer);
    }

Here, the two source streams are handled one after the other: the spliterator of each stream calls its own forEachRemaining method. Each of these spliterators is an IntWrappingSpliterator, which wraps s1 or s2 and has two key members:

    // The original pipeline being wrapped
    final PipelineHelper<P_OUT> ph;
    // The spliterator of the original pipeline
    Spliterator<P_IN> spliterator;

So, go to the IntWrappingSpliterator.forEachRemaining method:

    public void forEachRemaining(IntConsumer consumer) {
        if (buffer == null && !finished) {
            Objects.requireNonNull(consumer);
            init();
            ph.wrapAndCopyInto((Sink.OfInt) consumer::accept, spliterator);
            finished = true;
        }
        // ...

As you can see, the wrapAndCopyInto method of the original pipeline is called again, and the consumer here carries the logic of s3. This recursively leads back to:

    AbstractPipeline.wrapAndCopyInto -> AbstractPipeline.wrapSink -> AbstractPipeline.copyInto

This time, the current pipeline inside wrapSink is s1 or s2. AbstractPipeline.opWrapSink is called for every operator of s1/s2, and the resulting sinks are chained in front of the s3 consumer. Taking s1 as an example:

    Head(array[1,2,3]) -> Mapper1 -> Filter1 -> Mapper3 -> Filter3 -> ReduceOp(sum)

As such, the s1 stream and the s3 stream are connected for execution, and so are the s2 and s3 streams.
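The resulting element flow can be checked with a short sketch. It mirrors the example above but uses lambdas in place of the Mapper and Filter classes, and it adds a peek call to record which values reach the s3 stage; the class name ConcatDemo and the seenByS3 field are hypothetical additions for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.IntStream;

class ConcatDemo {
    // Values arriving at the first s3 operator, in arrival order.
    static final List<Integer> seenByS3 = new ArrayList<>();

    static int run() {
        seenByS3.clear();
        IntStream s1 = Arrays.stream(new int[] {1, 2, 3})
            .map(x -> x * x)        // same logic as Mapper1
            .filter(x -> x >= 2);   // same logic as Filter1
        IntStream s2 = Arrays.stream(new int[] {4, 5, 6})
            .map(x -> x + x)        // same logic as Mapper2
            .filter(x -> x >= 10);  // same logic as Filter2
        return IntStream.concat(s1, s2)
            .peek(x -> seenByS3.add(x)) // records what the s1/s2 chains hand over
            .map(x -> x * x)        // same logic as Mapper3
            .filter(x -> x >= 10)   // same logic as Filter3
            .sum();
    }

    public static void main(String[] args) {
        System.out.println(run());     // 16 + 81 + 100 + 144 = 341
        System.out.println(seenByS3);  // [4, 9, 10, 12]
    }
}
```

The values surviving s1 (4 and 9) arrive before those surviving s2 (10 and 12), which matches the order in which ConcatSpliterator.forEachRemaining drains its two spliterators.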
