By Li Jinsong and Hu Zheng; edited by community volunteers Yang Weihai and Li Peidian

This article describes solutions and principles of real-time Change Data Capture (CDC) data read/write in a data lake architecture. It discusses:

1) Common CDC analysis solutions

2) Reasons for Flink + Iceberg

3) Means of real-time read/write

4) Future plan

This part discusses how to ingest CDC or upserted data and write the results to a database or storage system that supports Online Analytical Processing (OLAP) on big data.

Typical input data types are CDC data and upserted data. CDC data comes from databases, which continuously generate changelogs. Upserted data is produced by stream computing and is supported in Flink 1.12.

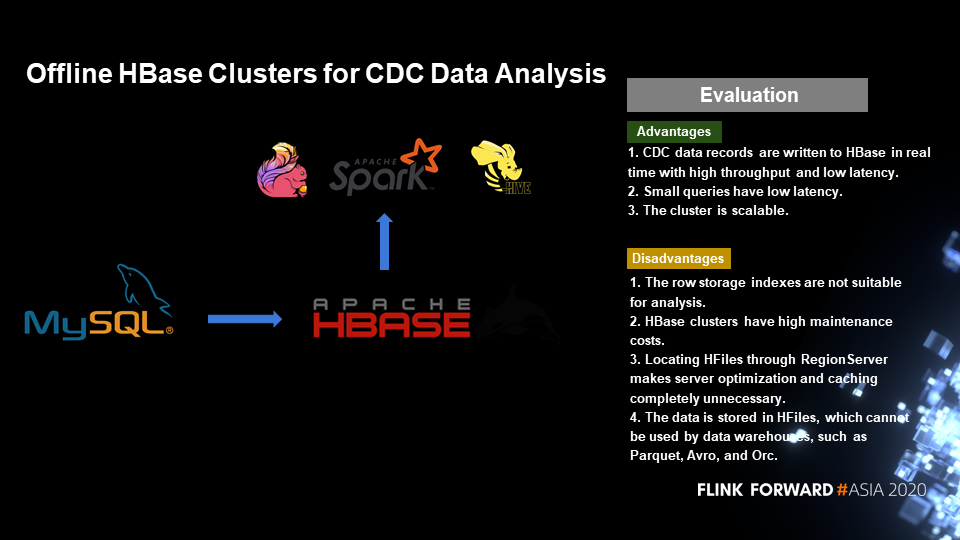

The first general solution is to process the CDC or upserted data through Flink and write the data to HBase in real time. HBase is an online database that provides online real-time point queries. Users can quickly write data into HBase and initiate small queries. Besides, HBase clusters are scalable.

This solution is the same one commonly used for real-time point queries. What are the drawbacks of using HBase for OLAP query analysis?

First, HBase is a database designed for point queries. It is an online service, and its row-oriented storage and indexes are not suitable for analysis tasks. A typical data warehouse design requires column storage for effective compression and efficient queries. Second, the maintenance costs of HBase clusters are high. Third, HBase stores its data in HFiles, a format that cannot be conveniently combined with the typical big data warehouse formats such as Parquet, Avro, and ORC.

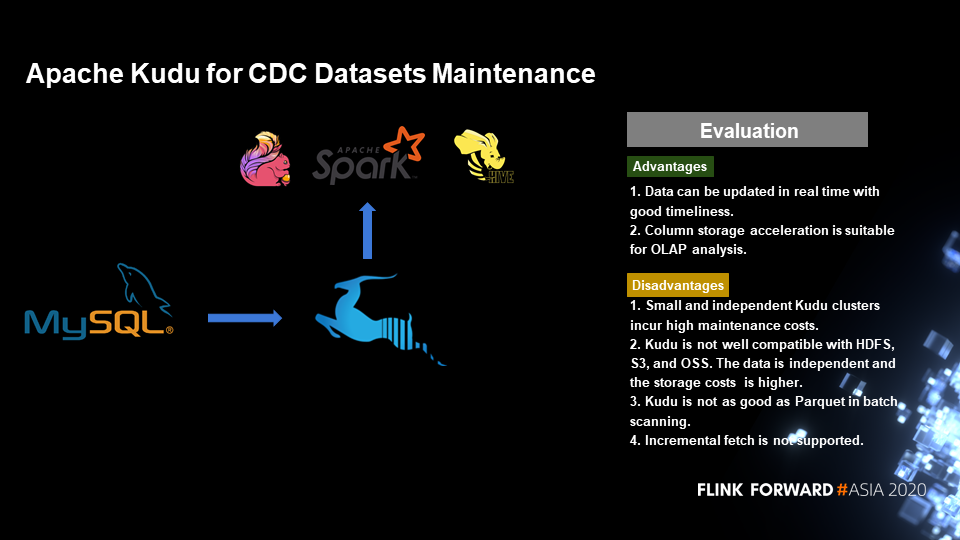

To address the weak analysis capability of HBase, the community introduced Apache Kudu several years ago. Kudu uses column storage while retaining HBase-style point queries. Its column storage acceleration therefore makes it suitable for OLAP analysis.

What are the disadvantages of this solution?

Kudu is a small, independent data storage engine that incurs high maintenance costs. Besides, it is not very compatible with Hadoop Distributed File System (HDFS), Simple Storage Service (S3), and Object Storage Service (OSS).

Because Kudu retains the point query capability, its batch scanning performance is not as good as that of Apache Parquet. It also provides inadequate support for delete operations and does not support incremental fetch.

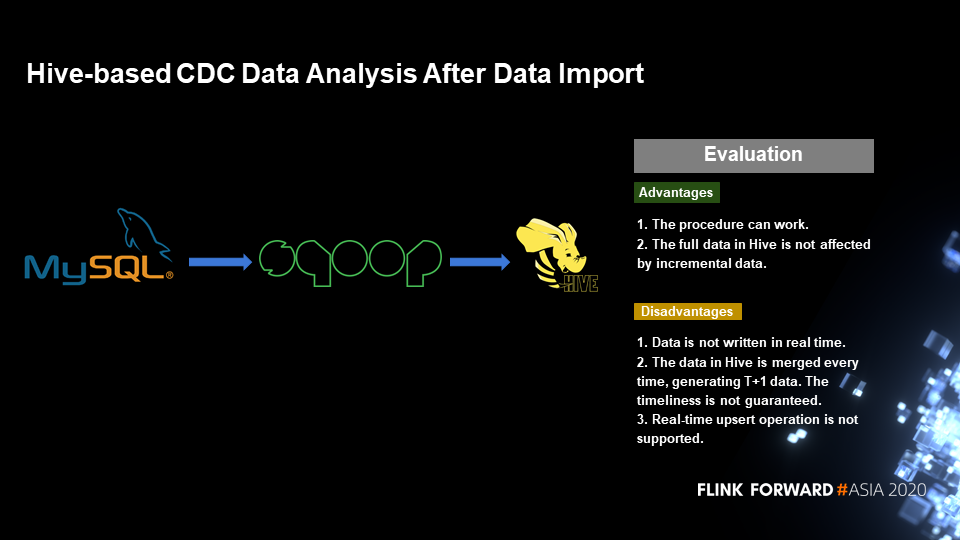

The third solution is to write MySQL data to Hive, which is common in data warehouses. Maintain a full partition, and create an incremental partition every day to which that day's changes are written. Then merge the full partition and the incremental partition and write the result to a new partition. Writing to the incremental partition does not affect the full partition in Hive. Users can query the new partition only after the incremental and full data have been merged. Such append-only data in column storage format is suitable for analysis.
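The daily merge can be sketched in a few lines of Python. This is only an illustrative model, not Hive code: the `merge_partitions` function and the `(key, value)` change format are assumptions made for the example. It shows that every row of the old full partition is read and rewritten, even when only a few keys changed.

```python
from typing import Dict, List, Optional, Tuple

# A change record: (primary key, new value, or None for a delete).
# This shape is an assumption made for illustration only.
Change = Tuple[int, Optional[str]]


def merge_partitions(full: Dict[int, str], incremental: List[Change]) -> Dict[int, str]:
    """Build the next full partition from the previous full partition
    and one day's incremental partition."""
    merged = dict(full)  # rereads and copies the whole full partition
    for key, value in incremental:  # apply changes in arrival order
        if value is None:
            merged.pop(key, None)  # delete
        else:
            merged[key] = value  # insert or update
    return merged


full_day1 = {1: "a", 2: "b", 3: "c"}
incremental_day2 = [(2, "b2"), (3, None), (4, "d")]
full_day2 = merge_partitions(full_day1, incremental_day2)
print(full_day2)  # {1: 'a', 2: 'b2', 4: 'd'}
```

The previous full partition is left untouched, which matches the description above: readers keep using it until the new partition is complete.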

What are the shortcomings of this solution?

Merging incremental and full data introduces latency. Data is not written in real time. The merge typically runs once a day, so the data is available at T+1. Timeliness is therefore poor, and real-time upsert is not supported. In addition, all data must be reread and rewritten for each merge, which is inefficient and wastes resources.

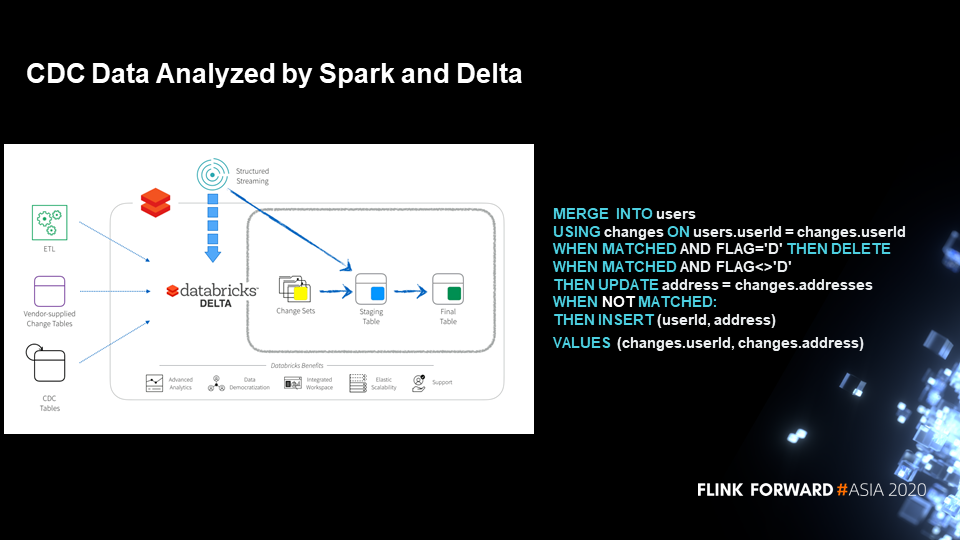

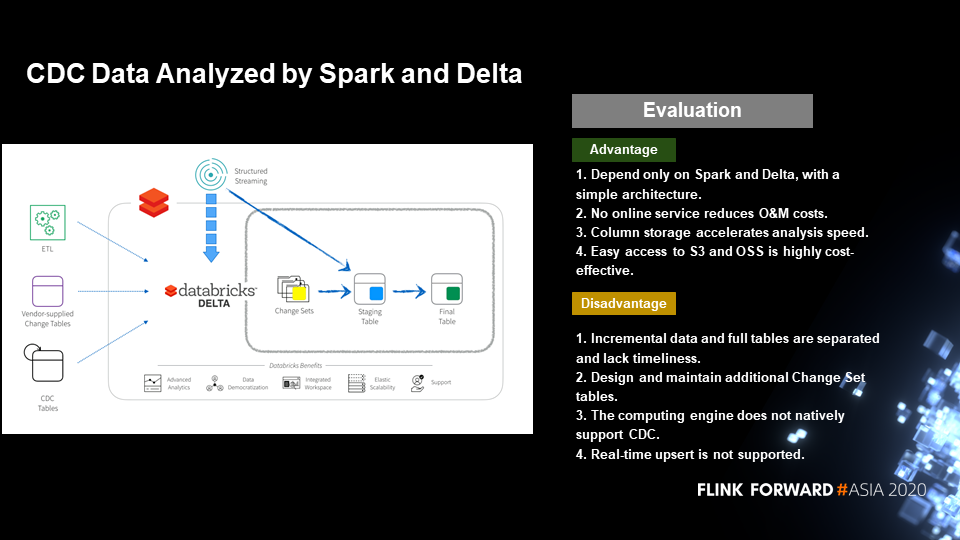

The combination of Spark and Delta provides the MERGE INTO syntax for analyzing CDC data, which is simpler than the Hive approach. More importantly, like other new data lake architectures such as Iceberg and Hudi, this combination manages data at the file level rather than the partition level. As a result, the optimized MERGE INTO only scans and rewrites the files that changed, which is quite efficient.

This solution relies only on the Spark + Delta architecture, requires no online services, and stores data in column format. As a result, data analysis is fast, and the optimized MERGE INTO performs well.

From a business perspective, this is a copy-on-write solution: each merge copies and rewrites only a small number of files, so latency is relatively low. In theory, if the updated data does not overlap heavily with the existing data, the latency can be reduced from days to hours without hurting performance.

This solution is better than the Hive-based processing of upserted data. However, hour-level latency still falls short of real-time processing. The biggest drawback is that merging under copy-on-write carries overhead, so the latency cannot be pushed very low.
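The file-level copy-on-write idea can be illustrated with a small Python model. It is a sketch under stated assumptions, not Delta or Spark code: the function `copy_on_write_merge`, the file names, and the `-v2` suffix are all hypothetical.

```python
from typing import Dict, List, Tuple

Row = Tuple[int, str]  # (primary key, value); a hypothetical row shape


def copy_on_write_merge(
    files: Dict[str, List[Row]], updates: Dict[int, str]
) -> Tuple[Dict[str, List[Row]], List[str]]:
    """Rewrite only the files that contain an updated key.
    Returns the new file layout and the names of the rewritten files."""
    new_files: Dict[str, List[Row]] = {}
    rewritten: List[str] = []
    for name, rows in files.items():
        if any(key in updates for key, _ in rows):
            # Copy the file, substituting the updated values.
            new_files[name + "-v2"] = [(k, updates.get(k, v)) for k, v in rows]
            rewritten.append(name)
        else:
            new_files[name] = rows  # untouched files are reused as they are
    return new_files, rewritten


files = {"f1": [(1, "a"), (2, "b")], "f2": [(3, "c")], "f3": [(4, "d")]}
new_files, rewritten = copy_on_write_merge(files, {2: "b2"})
print(rewritten)  # ['f1']
```

Only one of the three files is copied here. The more the updated keys spread across files, the more files must be rewritten, which is where the merge overhead comes from.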

The preceding section introduced the existing solutions. It is worth emphasizing why upsert matters: in data lake solutions, upsert is the key technical capability for quasi-real-time and real-time ingestion of databases into the lake.

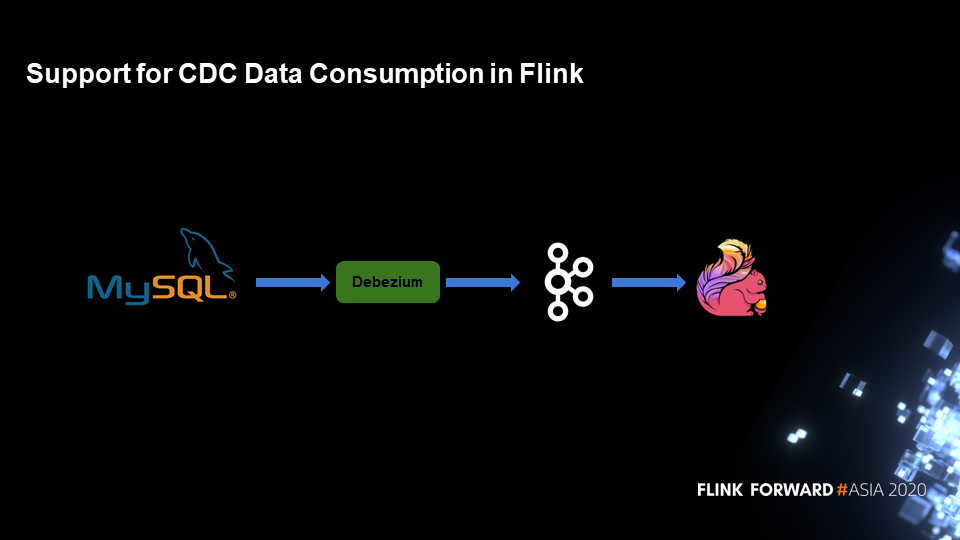

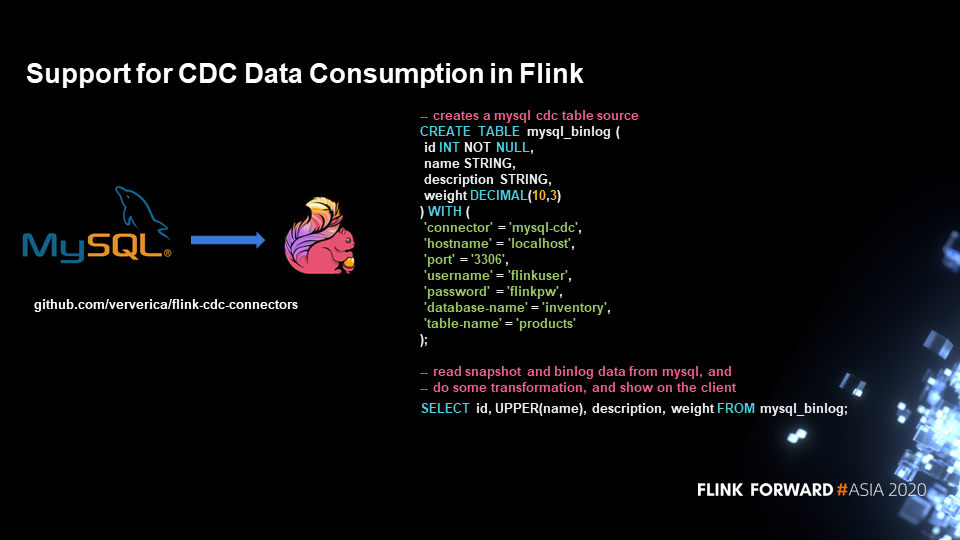

Flink natively supports CDC data consumption. In the Spark + Delta solution above, the user must be aware of the CDC attributes of the data and express them in the MERGE INTO statement. Flink, by contrast, supports CDC data natively, so the user only needs to declare Debezium or another CDC data format. The SQL statements in Flink do not need to be aware of any CDC or upsert attributes, because Flink uses a hidden column to identify the change type of each record. This makes Flink easy to use.

As the following figure shows, to process CDC data, Flink only needs a DDL statement that declares the MySQL binlog source. The subsequent SELECT statement does not need to be aware of the CDC attributes.

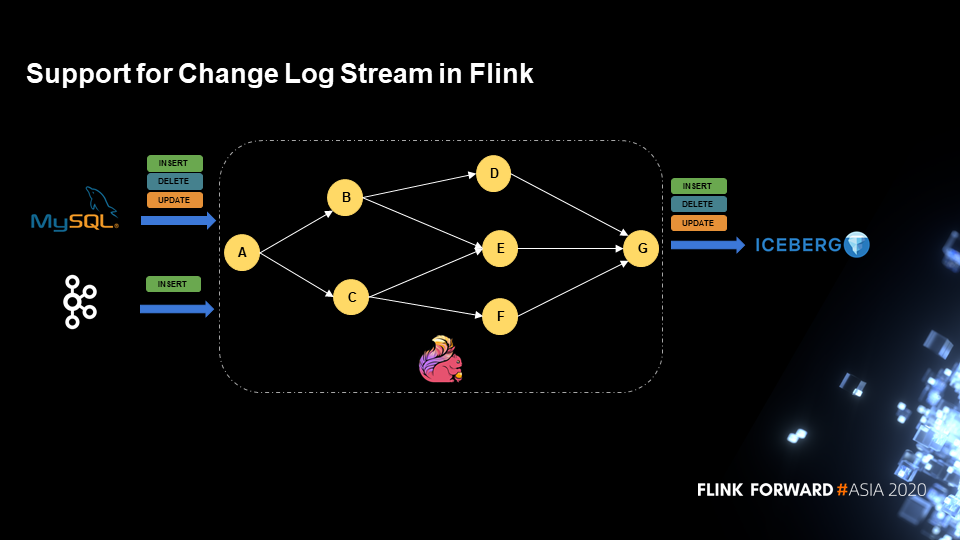

The following figure shows that Flink natively supports changelog streams. Once Flink ingests a changelog stream, the SQL statements in the topology do not need to handle the changelog flag. The topology is defined entirely by the business logic, and the changelog flag stays out of sight until the data is finally written to Iceberg.

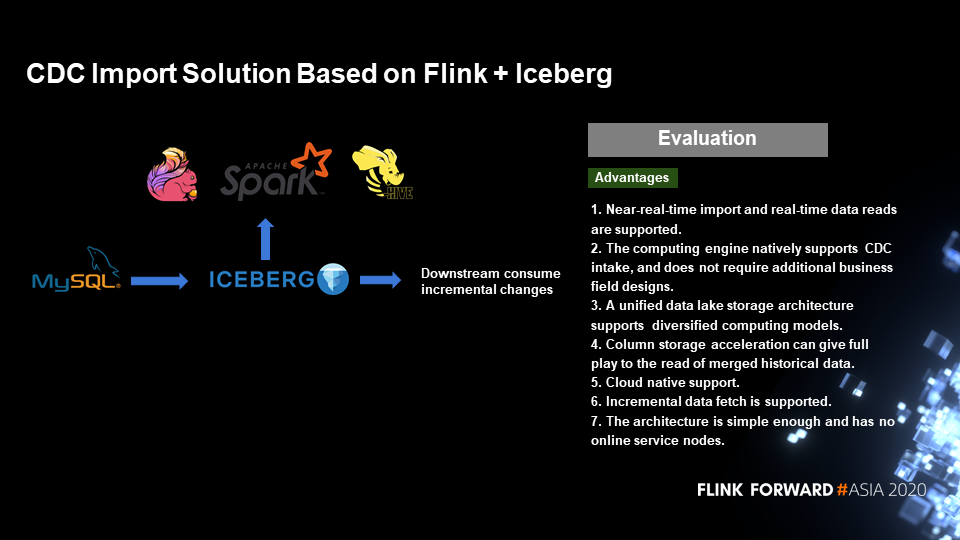
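To make the changelog flag concrete, here is a minimal Python sketch of how a changelog stream can be folded into a table. It is not Flink code. The short strings `+I`, `-U`, `+U`, and `-D` mirror the row kinds Flink attaches to changelog records (insert, update-before, update-after, delete), while the `materialize` function and the tuple layout are assumptions for illustration.

```python
from typing import Dict, List, Tuple

# (row kind, key, value). The tuple layout is hypothetical.
ChangelogRow = Tuple[str, int, str]


def materialize(changelog: List[ChangelogRow]) -> Dict[int, str]:
    """Apply a changelog in order and return the resulting table."""
    table: Dict[int, str] = {}
    for kind, key, value in changelog:
        if kind in ("+I", "+U"):  # insert or update-after: write the row
            table[key] = value
        elif kind in ("-U", "-D"):  # update-before or delete: retract the row
            table.pop(key, None)
        else:
            raise ValueError(f"unknown row kind: {kind}")
    return table


changelog = [
    ("+I", 1, "alice"),
    ("+I", 2, "bob"),
    ("-U", 1, "alice"),
    ("+U", 1, "alicia"),
    ("-D", 2, "bob"),
]
table = materialize(changelog)
print(table)  # {1: 'alicia'}
```

The business logic never inspects the flag itself. Only the final step that materializes the data needs to interpret it.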

What are the advantages of the CDC data import solution based on Flink + Iceberg?

Copy-on-write and merge-on-read each suit different scenarios and emphasize different aspects. When only some files are updated, copy-on-write efficiently rewrites those files and produces a full data set that contains only appended data. It is also the fastest for data analysis.

Merge-on-read directly appends the data and the CDC flag to Iceberg. At read time, the incremental data is merged efficiently with the existing full data according to a specific format. This enables near-real-time import and real-time reads. With this solution, Flink SQL natively supports ingesting CDC data and requires no additional business fields.

Iceberg is a unified data lake storage architecture that supports various computing models and engines for analysis, including Spark, Presto, and Hive. The generated files are stored in column storage format for later analysis. Iceberg is designed for data lakes based on snapshots and supports incremental reads. The Iceberg architecture is simple and has no online service nodes. Besides, Iceberg is a table-format middleware layer that allows upstream platforms to customize their own logic and services.

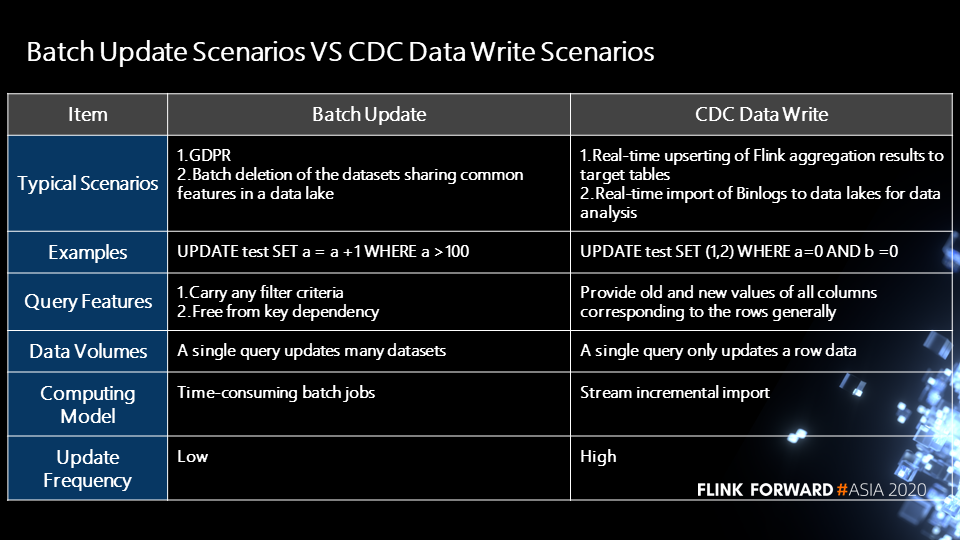

The following part describes two scenarios of batch updates in data lakes.

In addition to the batch update scenarios, CDC data write also involves two scenarios that use Flink. In the first scenario, the upstream binlog is written quickly into the data lake, and different analysis engines then use it for analysis. In the second scenario, Flink performs aggregation operations, and the output is an upsert-type data stream that also needs to be written to the data lake or a downstream system for real-time analysis. The following figure shows the SQL statements in the CDC data write scenario, in which a single SQL statement updates one row of data. In this scenario, data is updated frequently, in a streaming and incremental manner.

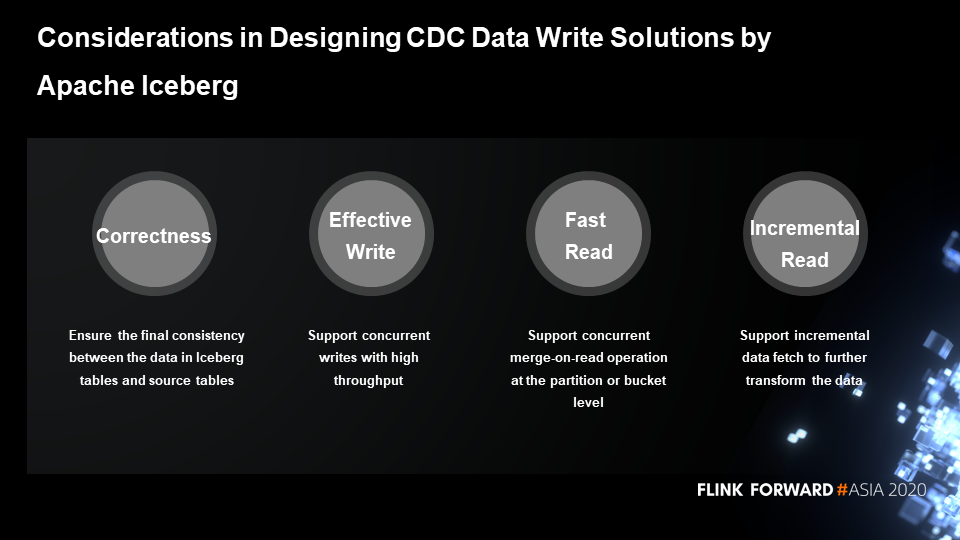

This part describes the considerations in designing CDC data write solutions using Iceberg.

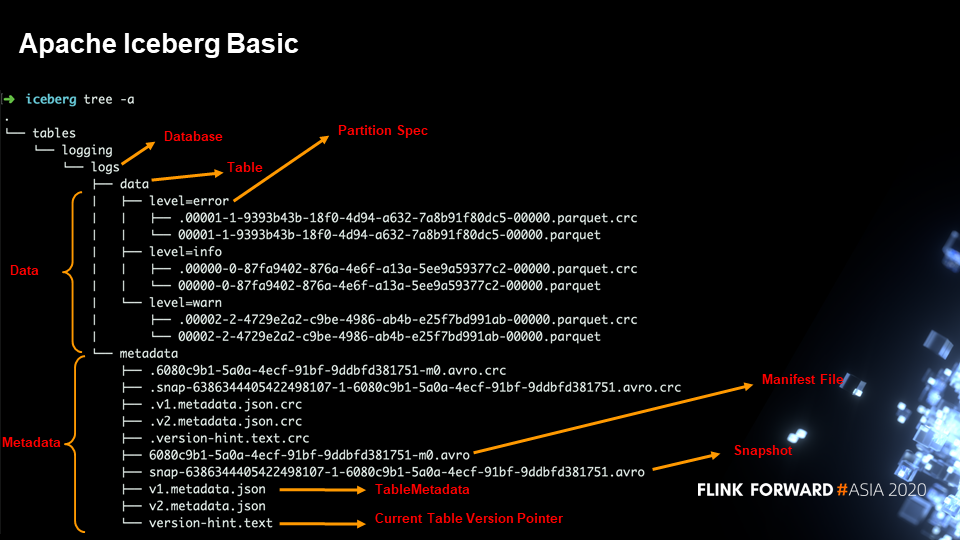

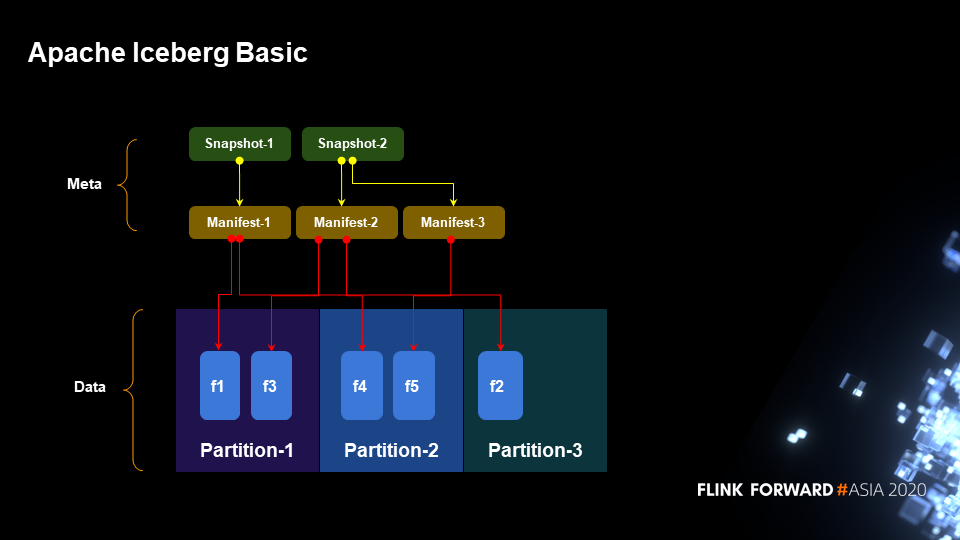

Before introducing the details of the specific solution, it is necessary to understand the layout of Iceberg in the file system. Generally, Iceberg contains two types of files. The first type is data files, such as the Parquet files in the following figure. Each data file corresponds to a check file (.crc file). The second type is table metadata files, including Snapshot files (snap-*.avro), Manifest files (*.avro), and TableMetadata files (*.json).

The following figure shows the mapping among Snapshots, Manifests, and Partitions in Iceberg for a table that contains three Partitions. The first Partition has two files called f1 and f3. The second Partition has two files called f4 and f5, and the third Partition has one file called f2. Each write operation generates a Manifest file that records the mapping between the written files and their Partitions. The Snapshot file in the upper layer records multiple Manifests and gives quick access to the full data of the entire table through them. For example, the second snapshot contains Manifest2 and Manifest3.
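The Snapshot, Manifest, and data file hierarchy can be modeled as nested lookups. The Python sketch below is illustrative only: the partition contents follow the description above, but the way the five files are divided between Manifest2 and Manifest3 is an assumption, and the dictionary layout is not Iceberg's actual metadata format.

```python
from collections import defaultdict
from typing import Dict, List

# Manifest name -> {data file -> partition}. The split of files between
# Manifest2 and Manifest3 is assumed for illustration.
manifests: Dict[str, Dict[str, str]] = {
    "Manifest2": {"f1": "partition-1", "f2": "partition-3", "f3": "partition-1"},
    "Manifest3": {"f4": "partition-2", "f5": "partition-2"},
}

# Snapshot name -> the manifests it records.
snapshots: Dict[str, List[str]] = {"snapshot-2": ["Manifest2", "Manifest3"]}


def files_by_partition(snapshot: str) -> Dict[str, List[str]]:
    """Walk snapshot -> manifests -> data files and group files by partition."""
    result: Dict[str, List[str]] = defaultdict(list)
    for manifest in snapshots[snapshot]:
        for data_file, partition in manifests[manifest].items():
            result[partition].append(data_file)
    return {p: sorted(fs) for p, fs in sorted(result.items())}


layout = files_by_partition("snapshot-2")
print(layout)
# {'partition-1': ['f1', 'f3'], 'partition-2': ['f4', 'f5'], 'partition-3': ['f2']}
```

A reader starts from one snapshot and never lists directories, which is why the table state is well defined at every commit.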

This part describes the design of insert, update, and delete operations in Iceberg.

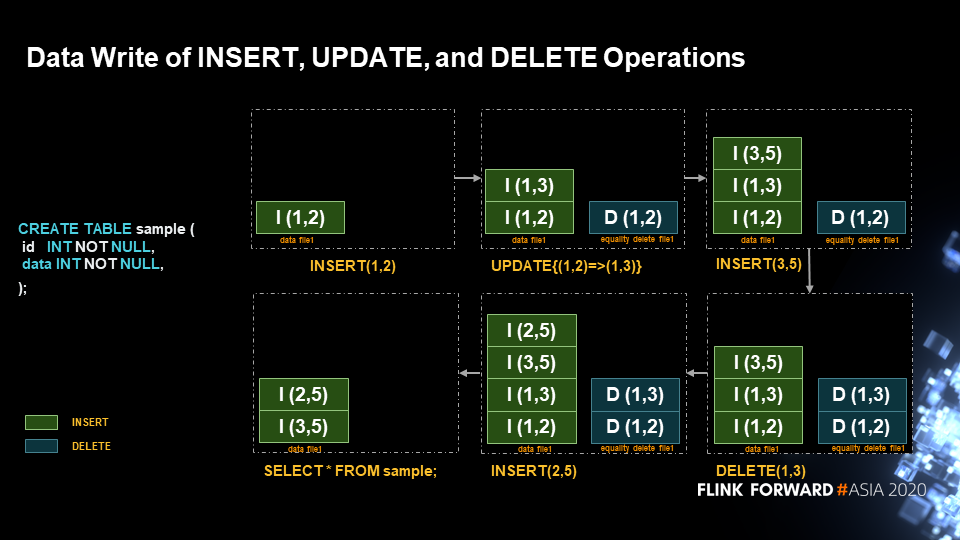

The following SQL statements operate on a table with two fields, id and data, both of type int. The data stream operations shown in the figure are performed in a transaction. First, a record (1,2) is inserted and then updated to (1,3). In Iceberg, the update operation is split into a delete operation and an insert operation.

The reason is that Iceberg is a unified storage layer for stream and batch processing. By splitting the update operation into delete and insert operations, Iceberg keeps the read path consistent whether data is updated by stream or by batch processing. Take batch deletion in Hive as an example. Hive writes the file offsets of the rows to be deleted to a delta file and then merges based on merge-on-read. This method processes data faster. When merging, Hive uses the position to map between the original file and the delta file, so it can quickly obtain all records that have not been deleted.

Next, insert the record (3,5), delete the record (1,3), and insert the record (2,5). The final query returns the record (3,5) and (2,5).
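The sequence above can be replayed with a short Python sketch in which an update is expressed as a delete followed by an insert. The helper names `update` and `apply_ops` are hypothetical, and the list-based table is only a model of the logical result, not of Iceberg's files.

```python
from typing import List, Tuple

Row = Tuple[int, int]  # (id, data)
Op = Tuple[str, Row]  # ("INSERT" or "DELETE", row)


def update(old: Row, new: Row) -> List[Op]:
    """Express an update as a delete of the old row plus an insert of the new one."""
    return [("DELETE", old), ("INSERT", new)]


def apply_ops(ops: List[Op]) -> List[Row]:
    """Apply the operations in order and return the surviving rows."""
    rows: List[Row] = []
    for kind, row in ops:
        if kind == "INSERT":
            rows.append(row)
        elif kind == "DELETE":
            rows.remove(row)  # removes the first matching row
        else:
            raise ValueError(f"unknown operation: {kind}")
    return rows


ops: List[Op] = [("INSERT", (1, 2))]
ops += update((1, 2), (1, 3))
ops += [("INSERT", (3, 5)), ("DELETE", (1, 3)), ("INSERT", (2, 5))]
result = apply_ops(ops)
print(result)  # [(3, 5), (2, 5)]
```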

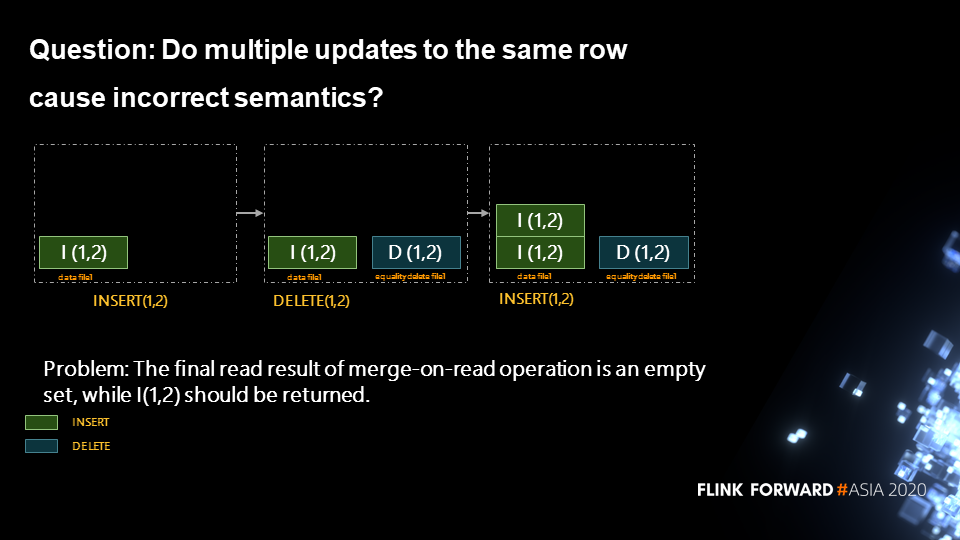

The above operation seems simple, but the implementation raises some semantic problems. In the following figure, a transaction performs three operations and then queries the record. First, it inserts the record (1,2), which writes INSERT(1,2) into data file1. Second, it deletes the record (1,2), which writes DELETE(1,2) into equality delete file1. Third, it inserts the record (1,2) again, which writes another INSERT(1,2) into data file1.

Under normal circumstances, the query should return the single record INSERT(1,2). In the implementation, however, the DELETE(1,2) operation cannot tell which row in data file1 it refers to, so both INSERT(1,2) records are deleted.
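The problem is easy to reproduce in a small model. The Python sketch below assumes a reader that applies equality deletes purely by value. The function `read_with_equality_deletes` is hypothetical and stands in for the merge step.

```python
from typing import List, Set, Tuple

Row = Tuple[int, int]  # (id, data)

# Files written by one transaction: INSERT(1,2), DELETE(1,2), INSERT(1,2).
data_file1: List[Row] = [(1, 2), (1, 2)]  # both inserts land in data file1
equality_delete_file1: Set[Row] = {(1, 2)}  # the delete, recorded by value only


def read_with_equality_deletes(data: List[Row], deletes: Set[Row]) -> List[Row]:
    """Drop every data row whose value matches an equality delete."""
    return [row for row in data if row not in deletes]


visible = read_with_equality_deletes(data_file1, equality_delete_file1)
print(visible)  # []
```

The transaction's semantics call for one surviving row, (1, 2), but a value-only delete cannot distinguish the row inserted before the delete from the row inserted after it.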

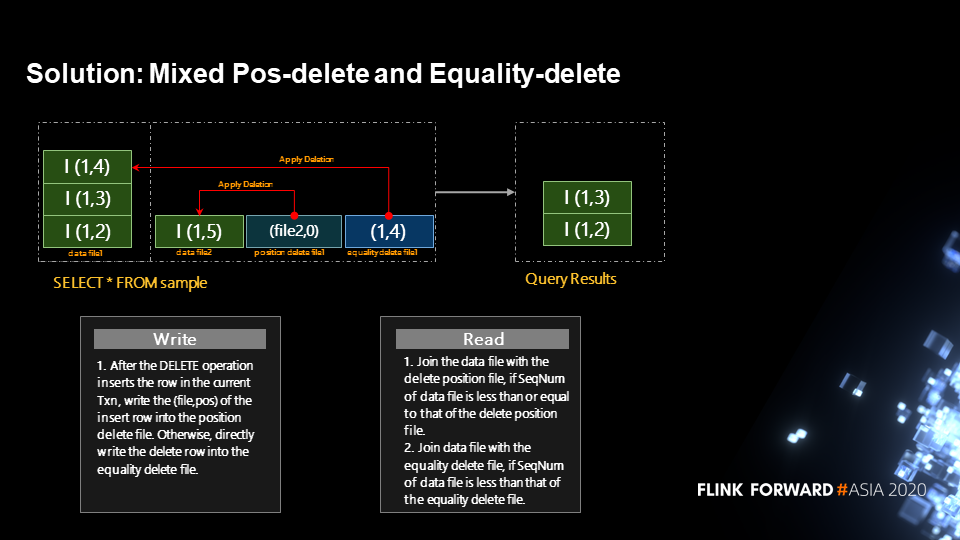

How can this problem be solved? The community's current solution mixes position-delete and equality-delete. An equality-delete identifies the rows to delete by the values of one or more columns. A position-delete identifies the rows to delete by file path and row number. Combining the two methods ensures that delete operations are correct.

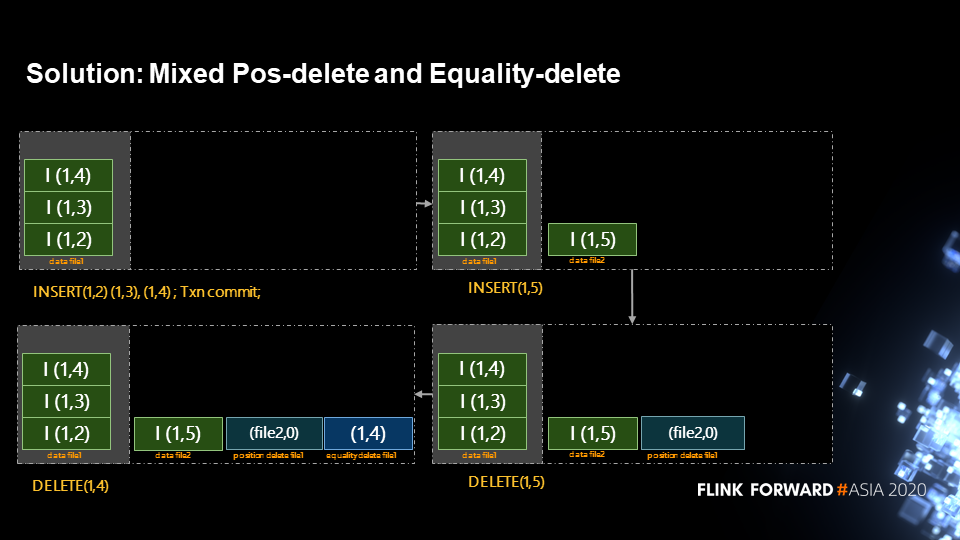

As the following figure shows, the first transaction inserts three rows, INSERT(1,2), INSERT(1,3), and INSERT(1,4), and is then committed. Next, a new transaction starts and inserts a row (1,5). The new transaction creates data file2, writes the record INSERT(1,5) to it, and then deletes the record (1,5). When this DELETE is actually written, the row is identified by its position: the entry (file2,0), meaning row 0 of data file2, is written to the position delete file. A delete of a row written by an earlier transaction, such as (1,4), is written to the equality delete file instead.

After the write process, consider the read process. As the following figure shows, the record (file2,0) in the position delete file only needs to be joined with the data file of the current transaction. The record (1,4) in the equality delete file needs to be joined with the data files of the previous transactions. The final result contains the records INSERT(1,2) and INSERT(1,3), so the correctness of the process is guaranteed.

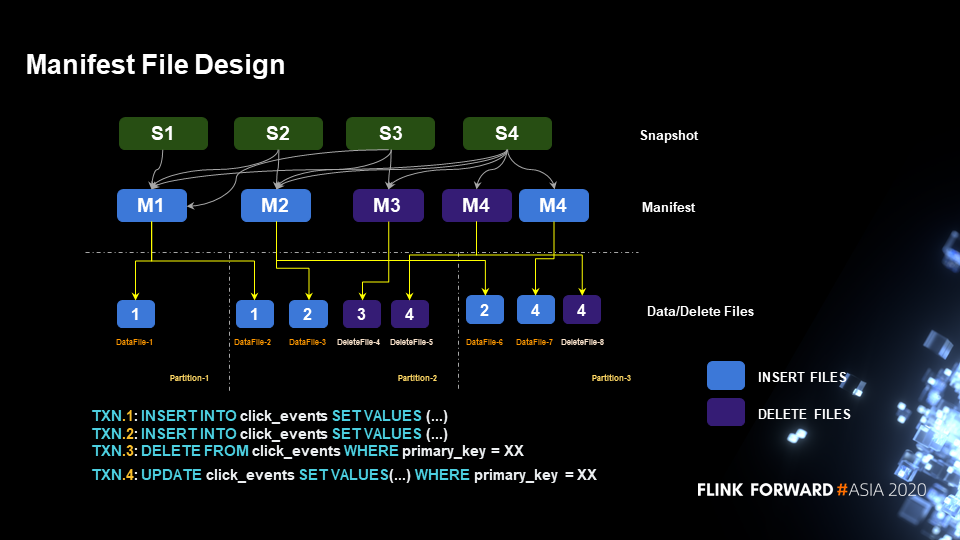
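The read path for this example can be sketched in Python. This is a simplified model, not Iceberg's reader. It assumes, as the final result implies, that the equality delete (1,4) applies only to data files written by earlier transactions, and the `written_by` bookkeeping is a hypothetical stand-in for how a real reader orders files and deletes.

```python
from typing import Dict, List, Set, Tuple

Row = Tuple[int, int]  # (id, data)

data_files: Dict[str, List[Row]] = {
    "file1": [(1, 2), (1, 3), (1, 4)],  # written by the first transaction
    "file2": [(1, 5)],  # written by the second transaction
}
# Data file -> number of the transaction that wrote it (hypothetical bookkeeping).
written_by: Dict[str, int] = {"file1": 1, "file2": 2}

# Delete files written by the second transaction.
position_deletes: Set[Tuple[str, int]] = {("file2", 0)}  # (file, row number)
equality_deletes: Set[Row] = {(1, 4)}
delete_txn = 2


def read_table() -> List[Row]:
    """Merge data files with both kinds of delete files."""
    rows: List[Row] = []
    for name, file_rows in data_files.items():
        for pos, row in enumerate(file_rows):
            if (name, pos) in position_deletes:
                continue  # deleted by exact file and row number
            if written_by[name] < delete_txn and row in equality_deletes:
                continue  # deleted by value; affects earlier transactions only
            rows.append(row)
    return rows


rows = read_table()
print(rows)  # [(1, 2), (1, 3)]
```

Because the position delete names one specific row, re-inserting the same value later in the same transaction would not be affected by it.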

The preceding sections described the insert, update, and delete operations. The task execution plan also relies on some design work in the Manifest so that data files can be found quickly. In addition, data is divided by size so that the data processed by each task is distributed as evenly as possible.

As the following figure shows, there are four transactions. The first two transactions perform insert operations, corresponding to M1 and M2. The third performs the delete operation, corresponding to M3. The fourth performs the update operation and involves the data manifest and delete manifest files.

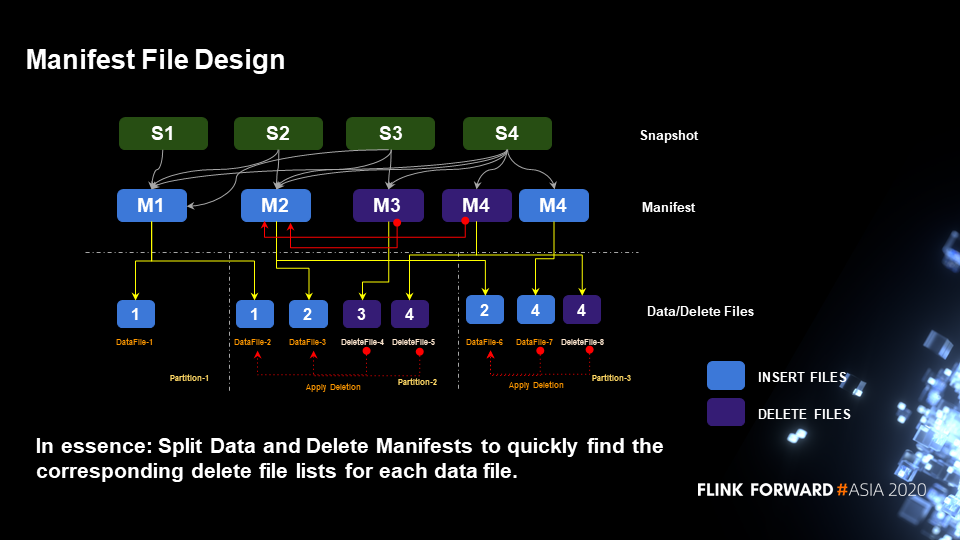

Why is the Manifest file split into data manifest and delete manifest files? Essentially, so that the list of delete files that applies to each data file can be found quickly. When partition-2 is read, the data in deletefile-4 is joined with datafile-2 and datafile-3. The same applies to deletefile-5, as the following figure shows.

Take datafile-3 as an example. Its delete file list contains deletefile-4 and deletefile-5. To find this list quickly, the reader queries the Manifests. After the Manifest file is split into data manifest and delete manifest files, M2 (data manifest) is joined with M3 and M4 (delete manifests). This join yields the delete file list for each data file.
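The manifest join can be sketched as a lookup keyed by partition. The Python below is a simplification made for illustration: it joins on partition only, so it may list more delete files for a data file than a real planner would, and the `(file name, partition)` entry layout is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

ManifestEntry = Tuple[str, str]  # (file name, partition)

# Entries follow the partition-2 example in the text.
data_manifest: List[ManifestEntry] = [
    ("datafile-2", "partition-2"),
    ("datafile-3", "partition-2"),
]
delete_manifest: List[ManifestEntry] = [
    ("deletefile-4", "partition-2"),
    ("deletefile-5", "partition-2"),
]


def delete_lists(
    data: List[ManifestEntry], deletes: List[ManifestEntry]
) -> Dict[str, List[str]]:
    """Join the data manifest with the delete manifest on partition."""
    deletes_by_partition: Dict[str, List[str]] = defaultdict(list)
    for delete_file, partition in deletes:
        deletes_by_partition[partition].append(delete_file)
    return {
        data_file: list(deletes_by_partition.get(partition, []))
        for data_file, partition in data
    }


lists = delete_lists(data_manifest, delete_manifest)
print(lists["datafile-3"])  # ['deletefile-4', 'deletefile-5']
```

Keeping deletes in their own manifests means this join touches only metadata. No data file is opened to discover which deletes apply to it.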

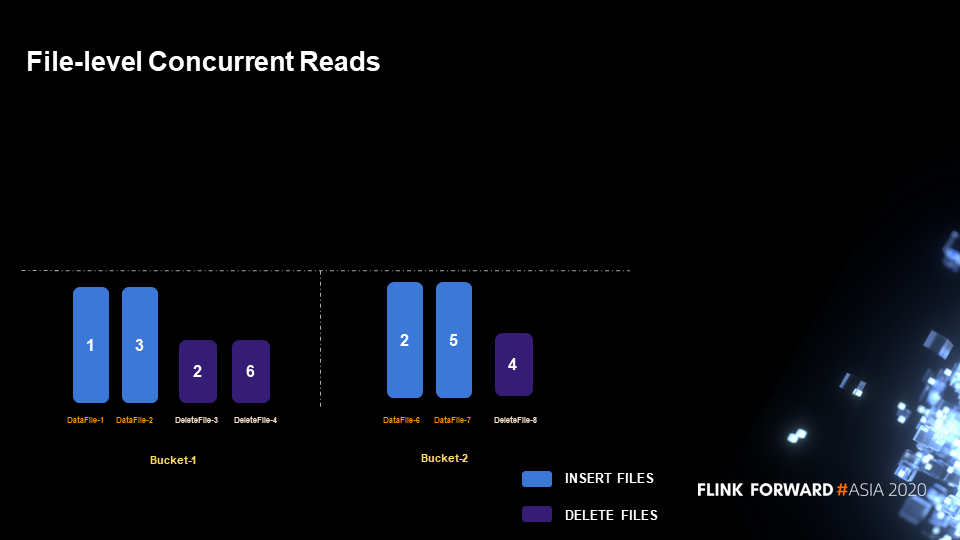

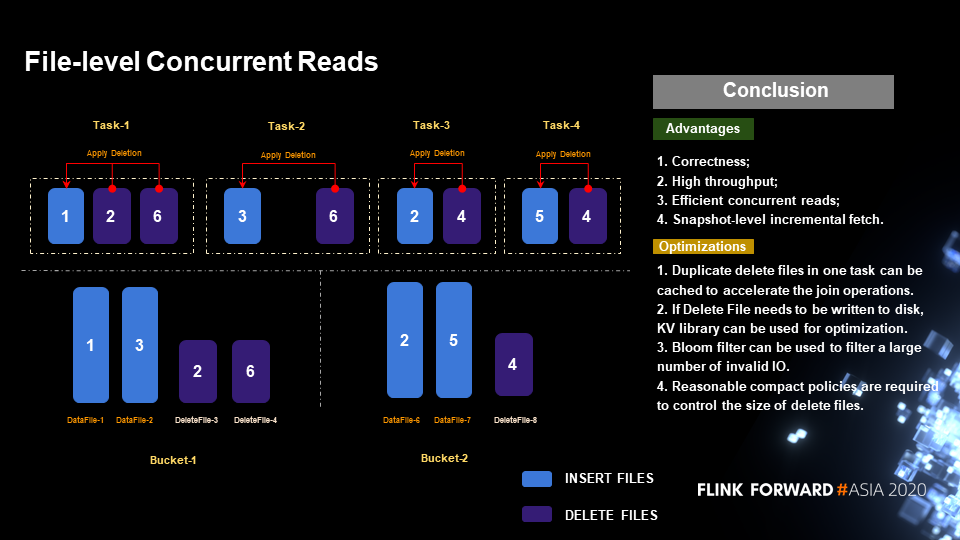

Another requirement is highly concurrent reads, which Iceberg handles very well. Iceberg supports concurrent reads at the file level and even at the level of finer-grained segments within a file. For example, a 256 MB file can be read concurrently as two 128 MB segments. The following figure shows the layout of insert and delete files in two buckets.

Comparing the Manifests shows that the delete file list of datafile-2 only contains deletefile-4. Thus, the two files can be processed in a separate task (Task-2 in the figure), and the same method can be applied to the other files. As a result, the amount of data to be merged in each task is relatively balanced.

This solution ensures correctness and can achieve high-throughput, concurrent, and efficient read. In addition, snapshot-level incremental fetch can be implemented.
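The segment-level concurrency mentioned above, where one large file is read as several fixed-size ranges, can be sketched as follows. The `plan_splits` function is a hypothetical illustration of the idea, not Iceberg's planner.

```python
from typing import List, Tuple

MB = 1024 * 1024


def plan_splits(file_size: int, split_size: int) -> List[Tuple[int, int]]:
    """Cut a file into (offset, length) ranges of at most split_size bytes."""
    return [
        (offset, min(split_size, file_size - offset))
        for offset in range(0, file_size, split_size)
    ]


splits = plan_splits(256 * MB, 128 * MB)
print(splits)  # [(0, 134217728), (134217728, 134217728)]
```

Each range can be handed to a different task, together with the delete files that apply to the data file it came from.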

At present, this solution is still imperfect. Optimizations are still needed for the following aspects.

1) Cache data if the same delete file is used more than once in a task, which improves the join efficiency.

2) When a delete file is large and needs to be spilled to disk, a KV library can be used for optimization, without relying on external services or other complex indexes.

3) A Bloom filter can be designed to filter out invalid I/O. The upsert operation commonly used in Flink generates a delete operation and an insert operation. Consequently, the data files and delete files in Iceberg are nearly the same size, which lowers join efficiency. To solve this problem, a Bloom filter can be used during upsert to filter out delete operations for data that was never inserted. In other words, if the data has not been previously inserted, there is no need to write the delete record to the delete file. Thus, the upsert efficiency is greatly improved.

4) Some backend compaction policies are required to control the size of the delete files. Fewer delete files lead to higher analysis efficiency. Of course, these policies do not affect normal reads and writes.
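The Bloom filter idea in item 3 can be sketched in Python. This is a minimal illustration under stated assumptions, not an Iceberg or Flink feature: the `BloomFilter` class, its sizing, and the `upsert` helper are all hypothetical. A Bloom filter never reports a previously added key as absent, so skipping the delete when the filter says "not seen" is safe. False positives only cause an unnecessary delete record.

```python
import hashlib
from typing import List, Tuple


class BloomFilter:
    """A small Bloom filter; sizes are arbitrary choices for the example."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for simplicity

    def _positions(self, key: str) -> List[int]:
        positions = []
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode()).digest()
            positions.append(int.from_bytes(digest[:8], "big") % self.num_bits)
        return positions

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key: str) -> bool:
        return all(self.bits[pos] for pos in self._positions(key))


def upsert(key: str, value: int, seen: BloomFilter,
           data_file: List[Tuple[str, int]], delete_file: List[str]) -> None:
    """Write a delete record only if the key may have been inserted before."""
    if seen.might_contain(key):
        delete_file.append(key)
    data_file.append((key, value))
    seen.add(key)


seen = BloomFilter()
data_file: List[Tuple[str, int]] = []
delete_file: List[str] = []
for key, value in [("k1", 1), ("k2", 2), ("k1", 3)]:
    upsert(key, value, seen, data_file, delete_file)
print(len(data_file), delete_file)
```

Without the filter, every upsert would write a delete record, so the delete file would grow as fast as the data file.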

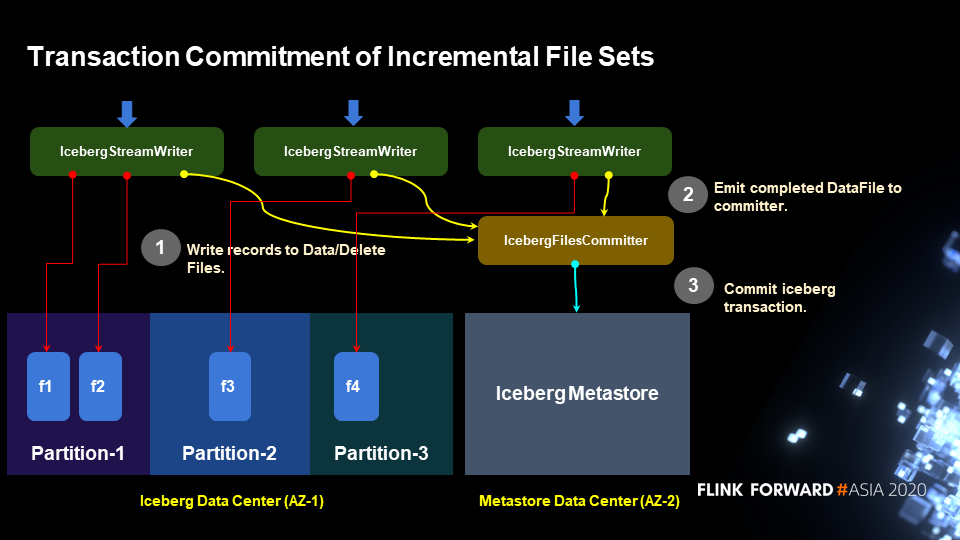

The following figure shows how data is written and made visible to readers under the Iceberg semantics. The process is divided into two phases that involve the data and the metastore. In the first phase, the IcebergStreamWriter operator writes the data files, but the metadata of the written data is not yet recorded in the metastore, so the data is not visible to external readers. In the second phase, the IcebergFilesCommitter operator collects the data files and makes the data visible by committing the transaction.
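The two phases can be modeled with a few small classes. This Python sketch is not the Flink operators themselves: the `Table`, `StreamWriter`, and `FilesCommitter` classes are hypothetical stand-ins that only show why written files stay invisible until the commit.

```python
from typing import List


class Table:
    """Stand-in for table metadata: only committed files are visible to readers."""

    def __init__(self) -> None:
        self.committed_files: List[str] = []

    def visible_files(self) -> List[str]:
        return list(self.committed_files)


class StreamWriter:
    """Phase 1: writes data files without touching table metadata."""

    def __init__(self) -> None:
        self.pending: List[str] = []

    def write(self, file_name: str) -> None:
        self.pending.append(file_name)

    def flush(self) -> List[str]:
        files, self.pending = self.pending, []
        return files


class FilesCommitter:
    """Phase 2: collects written files and publishes them in one commit."""

    def __init__(self, table: Table) -> None:
        self.table = table
        self.collected: List[str] = []

    def collect(self, files: List[str]) -> None:
        self.collected.extend(files)

    def commit(self) -> None:
        self.table.committed_files.extend(self.collected)
        self.collected = []


table = Table()
writer = StreamWriter()
committer = FilesCommitter(table)

writer.write("data-file-1.parquet")
writer.write("data-file-2.parquet")
before_commit = table.visible_files()  # nothing is visible yet

committer.collect(writer.flush())
committer.commit()
after_commit = table.visible_files()  # both files become visible together

print(before_commit, after_commit)
```

Readers see either none of the files from a transaction or all of them, which is the property the two-phase design is meant to provide.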

Iceberg does not depend on any other third-party services. Hudi has some service abstractions, such as abstracting metastore as an independent Timeline, which may rely on some independent indexes or even other external services.

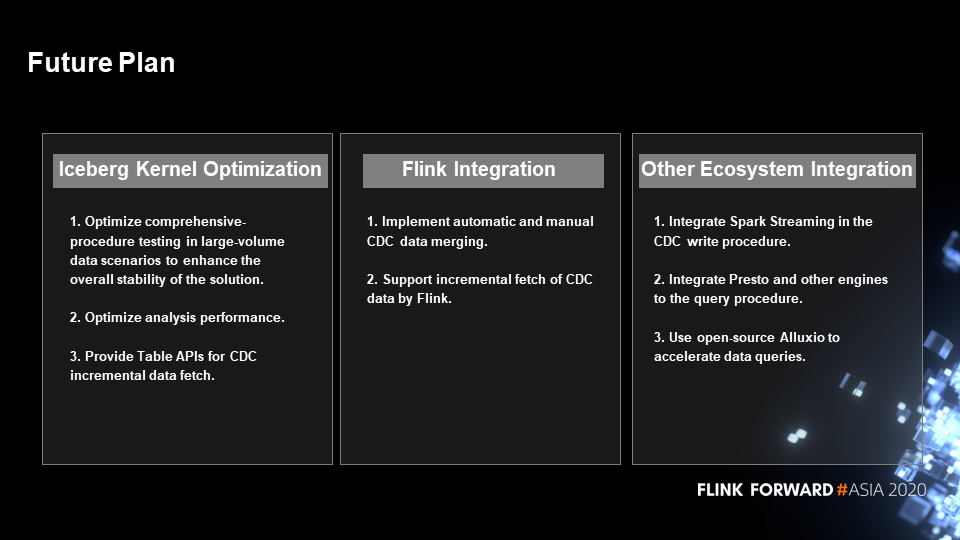

In the future, the Iceberg kernel will be further optimized, including end-to-end stability testing and performance optimization of the solution. Some Table APIs for incremental fetch of CDC data will also be provided.

In terms of the integration with Flink, the manual and automatic merging of CDC data will be supported. Incremental fetch of CDC data by Flink will also be supported.

As to the integration with other ecosystems, Iceberg will integrate engines, such as Spark and Presto. Furthermore, Alluxio will also be integrated to accelerate data queries.
