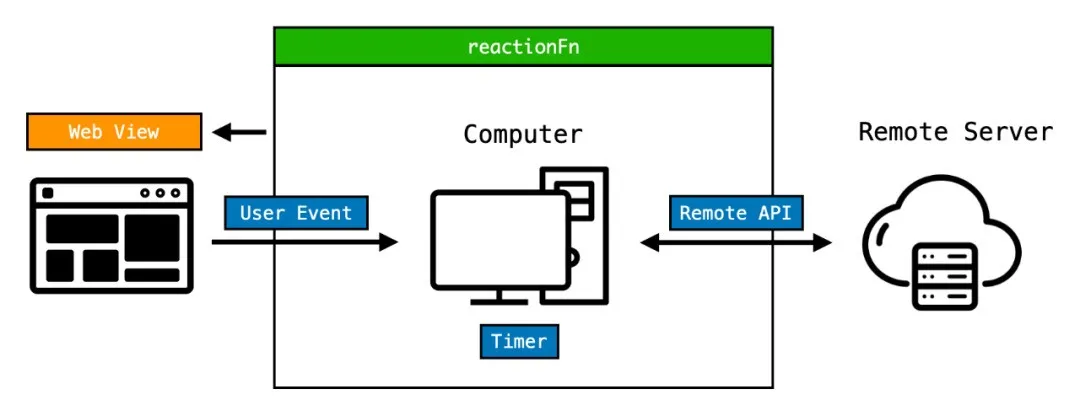

A question that often comes up in frontend development is, "What is frontend development?" In my opinion, its essence is making the web page view respond correctly to related events. There are three keywords here: web page view, correct response, and related events.

Related events include page clicks, mouse movements, timers, and server requests. Responding correctly means updating some state according to those events. The web page view is the part frontend developers know best.

From this point of view, the formula is:

View = reactionFn(Event)

In frontend development, the events that need to be processed fall into three types: user events (such as clicks and mouse movements), timers, and remote API requests. The formula therefore becomes:

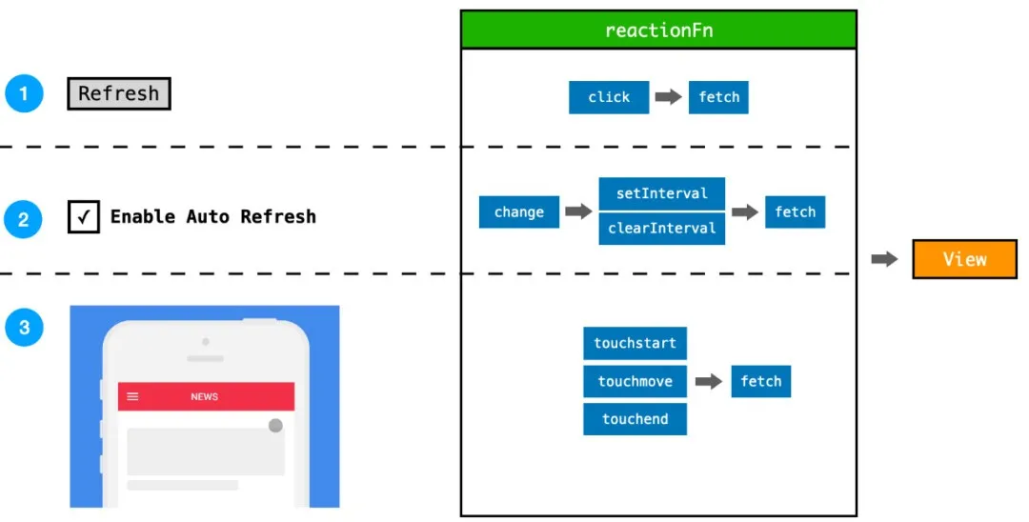

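View = reactionFn(UserEvent | Timer | Remote API)

The section below uses a news website to show how this formula relates to frontend development. The website has three requirements: clicking a button refreshes the content, selecting a checkbox turns on scheduled automatic refreshing, and pulling down the page refreshes the content.

From the frontend perspective, these requirements involve all three event types: user events (clicking, selecting, and pulling down), a timer (scheduled refreshing), and remote API requests (fetching the latest data).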

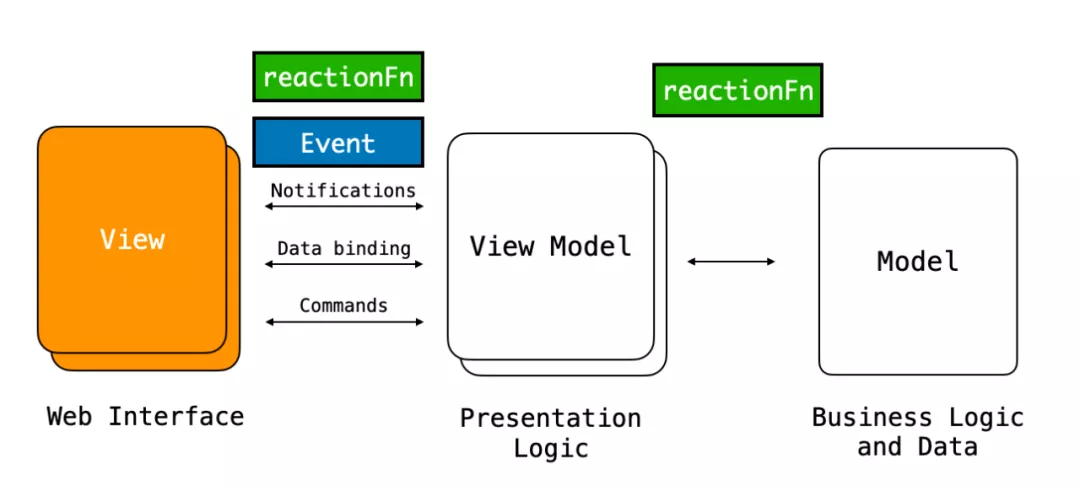

In the MVVM pattern, the response function (reactionFn) above runs either between the Model and the ViewModel or between the View and the ViewModel, while Events are handled between the View and the ViewModel.

The MVVM pattern abstracts the View layer and the data layer effectively, but the response function (reactionFn) ends up scattered across different conversion steps. This makes it hard to trace exactly where data is assigned and where it is collected. In addition, because Event handling is tightly coupled to the View in this pattern, it is hard to reuse Event-handling logic between the View and the ViewModel.

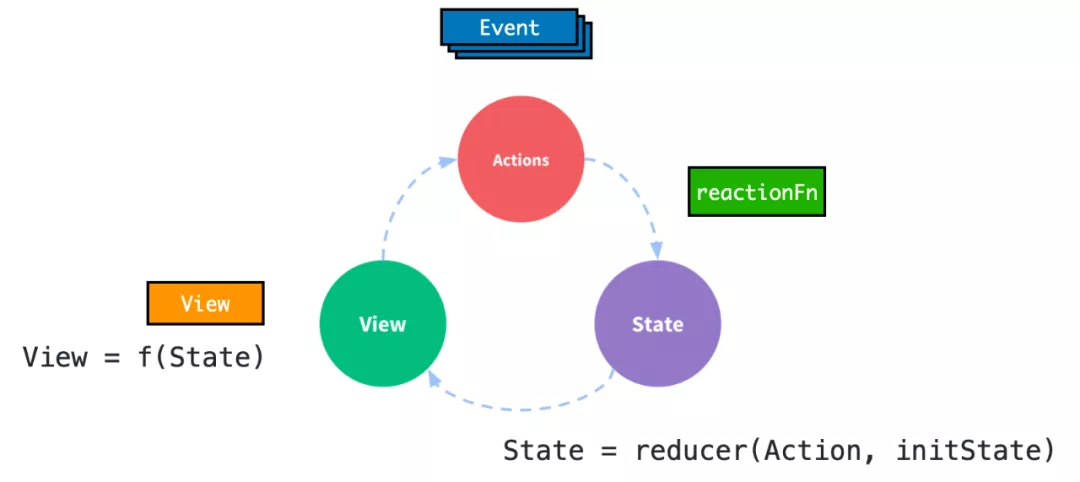

In the simplest Redux model, a combination of several events corresponds to an Action. The reducer function can directly correspond to the reactionFn.
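A minimal sketch of that correspondence in plain TypeScript, with no Redux dependency. The action names (`clickRefresh`, `toggleAutoRefresh`, `dataLoaded`) and the state shape are hypothetical, chosen to match the news example later in this article. Folding a sequence of Actions through the reducer produces the state that the View renders.

```typescript
// Hypothetical actions for a news page; each stands for one or more events.
type Action =
  | { type: 'clickRefresh' }
  | { type: 'toggleAutoRefresh'; enabled: boolean }
  | { type: 'dataLoaded'; items: string[] };

interface State {
  loading: boolean;
  autoRefresh: boolean;
  items: string[];
}

const initialState: State = { loading: false, autoRefresh: false, items: [] };

// The reducer plays the role of reactionFn: (state, event) -> next state.
function reducer(state: State, action: Action): State {
  switch (action.type) {
    case 'clickRefresh':
      return { ...state, loading: true };
    case 'toggleAutoRefresh':
      return { ...state, autoRefresh: action.enabled };
    case 'dataLoaded':
      return { ...state, loading: false, items: action.items };
  }
}

// Replaying a sequence of actions yields the state the View renders.
const actions: Action[] = [
  { type: 'toggleAutoRefresh', enabled: true },
  { type: 'clickRefresh' },
  { type: 'dataLoaded', items: ['headline A', 'headline B'] },
];
const finalState = actions.reduce(reducer, initialState);
```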

However, in the Redux model:

Wikipedia describes reactive programming as "…a declarative programming paradigm concerned with data streams and the propagation of change. This means that static or dynamic data streams can be expressed easily in programming languages, and the associated computing model automatically propagates changed values through the data stream."

From the perspective of data streams, the process of using the application is as follows:

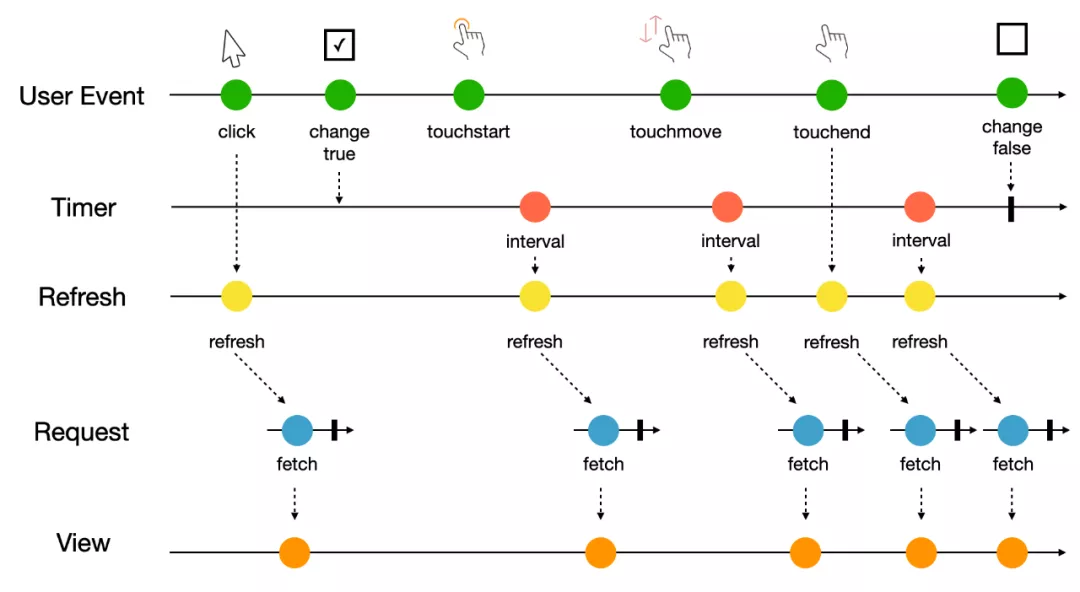

The same process is shown in the following marble diagram:

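Splitting up the logic shown in the diagram, developing the news application with reactive programming takes three steps: define the source data streams, combine and convert the data streams, and consume the data streams to update the view. The details are described below.

Define Source Data Streams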

1) Click

This step uses the click data stream.

```typescript
import { fromEvent } from 'rxjs';
const click$ = fromEvent<MouseEvent>(document.querySelector('button')!, 'click');
```

2) Select

This step uses the change data stream of the checkbox.

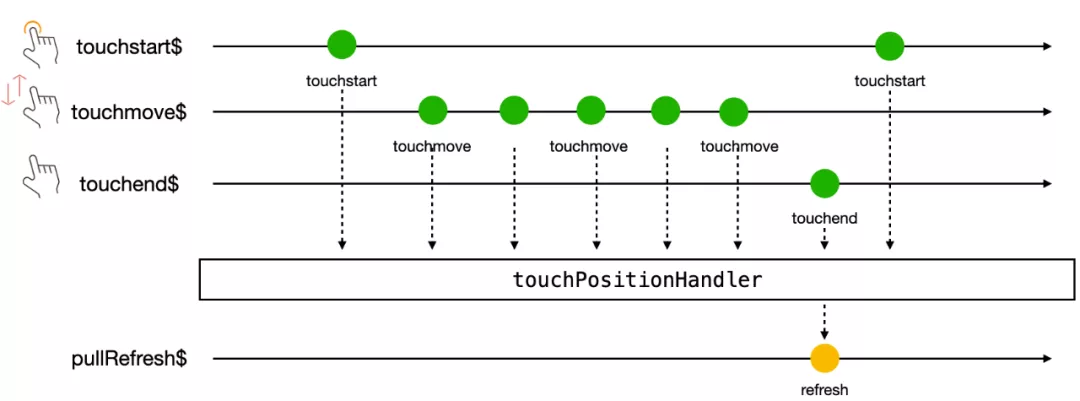

```typescript
import { fromEvent } from 'rxjs';
const change$ = fromEvent<Event>(document.querySelector('input')!, 'change');
```

3) Pull Down

This includes three data streams: touchstart, touchmove, and touchend.

```typescript
import { fromEvent } from 'rxjs';
const touchstart$ = fromEvent<TouchEvent>(document, 'touchstart');
const touchend$ = fromEvent<TouchEvent>(document, 'touchend');
const touchmove$ = fromEvent<TouchEvent>(document, 'touchmove');
```

4) Scheduled Refreshing

```typescript
import { interval } from 'rxjs';
const interval$ = interval(5000); // emits 0, 1, 2, ... every 5 seconds
```

5) Server Request

```typescript
import { fromFetch } from 'rxjs/fetch';
const fetch$ = fromFetch('https://randomapi.azurewebsites.net/api/users');
```

Combine and Convert Data Streams

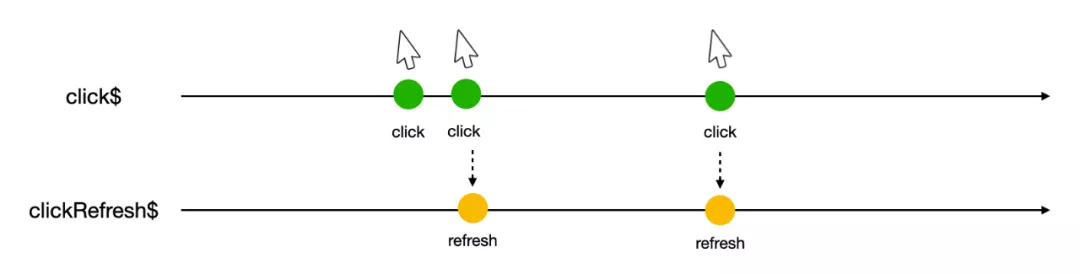

1) Click to refresh event stream

When the user clicks to refresh, several clicks within a short period should trigger only one refresh, for the last click. RxJS's debounceTime operator does exactly this.
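To make the rule concrete, here is a small pure-TypeScript function (a hypothetical helper, not part of RxJS) that computes when a debounced refresh would fire, given click timestamps in milliseconds. It mirrors debounceTime's rule for a source that keeps running: each click restarts a `dueTime` timer, and a refresh fires only when a timer runs out without being restarted.

```typescript
// Hypothetical helper: given ascending click timestamps (ms), return the
// timestamps at which a debounced refresh would fire.
function debouncedFireTimes(clickTimes: number[], dueTime: number): number[] {
  const fires: number[] = [];
  for (let i = 0; i < clickTimes.length; i++) {
    const isLast = i === clickTimes.length - 1;
    // A click fires only if no newer click arrives within dueTime;
    // otherwise the newer click cancels and restarts the pending timer.
    if (isLast || clickTimes[i + 1] - clickTimes[i] >= dueTime) {
      fires.push(clickTimes[i] + dueTime);
    }
  }
  return fires;
}

// Three rapid clicks, then one more after a pause.
const fires = debouncedFireTimes([0, 100, 250, 1000], 300);
// fires: [550, 1300]
```

The three rapid clicks collapse into one refresh 300 ms after the last of them, which is the behavior the click-to-refresh stream needs.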

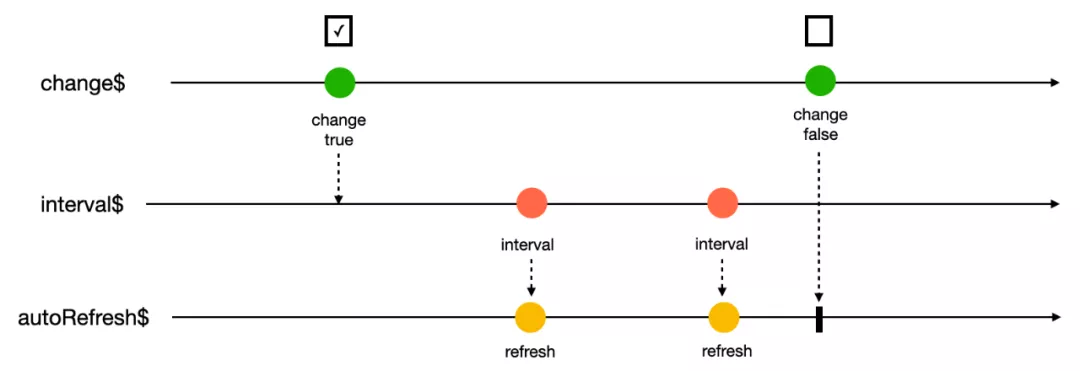

```typescript
import { debounceTime } from 'rxjs/operators';
const clickRefresh$ = click$.pipe(debounceTime(300));
```

2) Automatic refresh stream

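switchMap combines change$ with the interval$ stream defined earlier: while automatic refreshing is enabled, interval$ drives the refresh; otherwise, EMPTY emits nothing.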

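```typescript
import { EMPTY } from 'rxjs';
import { map, switchMap } from 'rxjs/operators';
const autoRefresh$ = change$.pipe(
  map(event => ((event as Event).target as HTMLInputElement).checked), // DOM event -> checkbox state
  switchMap(enabled => (enabled ? interval$ : EMPTY))
);
```

3) Pull-to-refresh stream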

Use the previously defined touchstart$, touchmove$, and touchend$ data streams.

pullRefresh$ = touchstart$.pipe(

switchMap(touchStartEvent =>

touchmove$.pipe(

map(touchMoveEvent => touchMoveEvent.touches[0].pageY - touchStartEvent.touches[0].pageY),

takeUntil(touchend$)

)

),

filter(position => position >= 300),

take(1),

repeat()

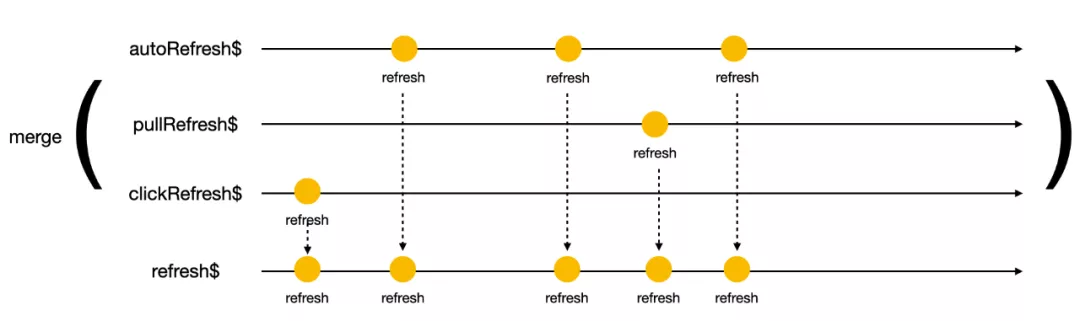

);Finally, the merge function is used to merge the defined clickRefresh$, autoRefresh$, and pullRefresh$ to obtain the refreshed data stream.

```typescript
import { merge } from 'rxjs';
const refresh$ = merge(clickRefresh$, autoRefresh$, pullRefresh$);
```

Consume Data Streams and Update Views

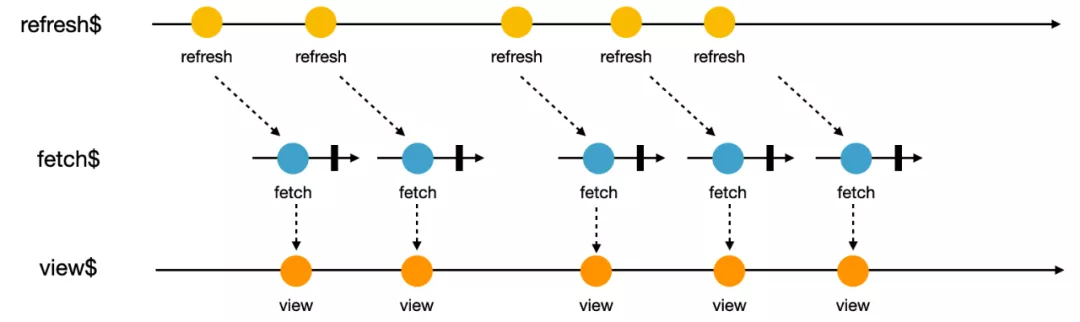

The refresh data stream is connected to the fetch$ stream defined in the first step through switchMap, which yields the view data stream.
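The article does not show the code for this step, so here is a minimal sketch assuming RxJS 6 or 7. It is written as a hypothetical helper, `toView$`, so that it can be exercised without a browser; in the application it would be called as `toView$(refresh$, fetch$)`. Note that fromFetch emits Response objects, so a real application would still need to parse the body, for example with `switchMap(response => response.json())`.

```typescript
import { Observable, Subject, of } from 'rxjs';
import { switchMap } from 'rxjs/operators';

// Hypothetical helper: each refresh signal starts a new request. switchMap
// unsubscribes from the previous request when a newer refresh arrives, so an
// outdated response is discarded instead of overwriting newer data.
function toView$<T>(refresh$: Observable<unknown>, fetch$: Observable<T>): Observable<T> {
  return refresh$.pipe(switchMap(() => fetch$));
}

// Usage with synchronous stand-ins for refresh$ and fetch$:
const refreshSignals = new Subject<void>();
const received: string[][] = [];
toView$(refreshSignals, of(['user-1', 'user-2'])).subscribe(users => received.push(users));
refreshSignals.next();
refreshSignals.next();
```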

The view stream can be mapped to a view through an async pipe in the Angular framework:

```html
<div *ngFor="let user of view$ | async">
</div>
```

In other frameworks, "subscribe" can be used to obtain the actual data in the data stream and update the view.
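A sketch of the subscribe approach for frameworks without an async pipe. The `User` shape, the `renderUsers` helper, and the `Container` type are hypothetical; the container is typed structurally so that the sketch also runs outside a browser, where a real DOM element would be passed instead.

```typescript
import { Observable, Subscription, of } from 'rxjs';

// Hypothetical shape of one item returned by the API.
interface User {
  name: string;
}

// Anything with a textContent property, such as a DOM element.
interface Container {
  textContent: string | null;
}

// Subscribes to the view stream and re-renders on every emission.
// textContent is used instead of innerHTML so API data is never parsed as HTML.
function renderUsers(view$: Observable<User[]>, container: Container): Subscription {
  return view$.subscribe(users => {
    container.textContent = users.map(user => user.name).join('\n');
  });
}

// Usage with stand-ins; in a browser, pass view$ and a real element instead.
const container: Container = { textContent: '' };
const subscription = renderUsers(of([{ name: 'Ada' }, { name: 'Linus' }]), container);
// Unsubscribe when the view is destroyed (the async pipe does this automatically).
subscription.unsubscribe();
```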

At this point, the news application has been built with reactive programming. The sample code [1] is written in Angular and is fewer than 160 lines long.

Here is a summary of how the three steps of developing a frontend application with reactive programming relate to the formula in the first section:

View = reactionFn(UserEvent | Timer | Remote API)

1) Define source data streams

This corresponds to "UserEvent | Timer | Remote API". The corresponding RxJS functions used above are fromEvent (user events), interval (timers), and fromFetch (remote API requests).

2) Combine and convert data streams

This corresponds to "reactionFn". The corresponding RxJS operators used above are debounceTime, switchMap, map, filter, takeUntil, take, repeat, and merge.

3) Consume data streams and update views

This corresponds to "View". It is implemented with subscribe in RxJS and with the async pipe in Angular.

What are the advantages of reactive programming over MVVM or Redux?

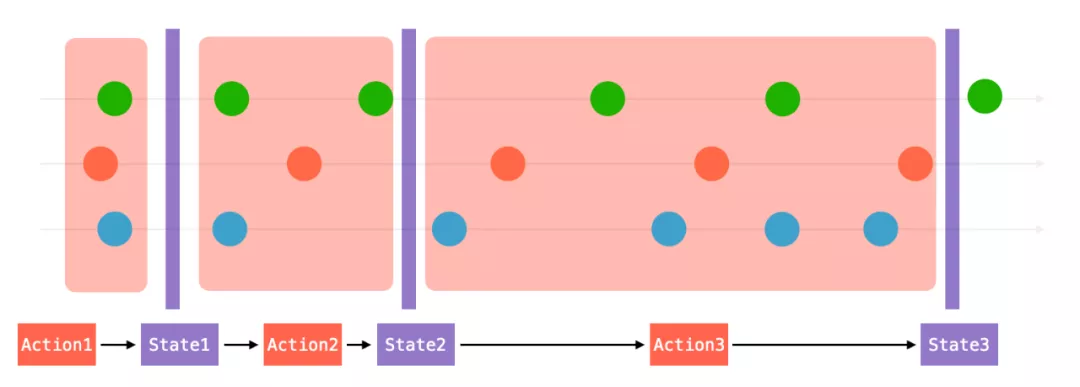

This makes it possible to track the sources of events and data changes accurately. If we blur the timeline of the RxJS marble diagram and take a vertical cross-section each time the view updates, two interesting things emerge:

The official Redux documentation makes a related point, suggesting that if you already use RxJS, you may no longer need Redux:

"The question is: do you need Redux if you already use Rx? Maybe not. It's not hard to re-implement Redux in Rx. Some say it's a two-liner using Rx .scan() method."
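A sketch of that "two-liner", assuming RxJS 6 or 7: actions are pushed into a Subject, and scan folds them through a reducer the way a Redux store does. The counter reducer is a hypothetical example, and the sketch leaves out the middleware and developer tooling that Redux provides.

```typescript
import { Observable, Subject } from 'rxjs';
import { scan, startWith } from 'rxjs/operators';

type CounterAction = { type: 'increment' } | { type: 'decrement' };

// An ordinary Redux-style reducer.
function counter(state: number, action: CounterAction): number {
  switch (action.type) {
    case 'increment':
      return state + 1;
    case 'decrement':
      return state - 1;
  }
}

// The "store": dispatch is action$.next, state$ is the running fold of actions.
const action$ = new Subject<CounterAction>();
const state$: Observable<number> = action$.pipe(scan(counter, 0), startWith(0));

const states: number[] = [];
state$.subscribe(state => states.push(state));
action$.next({ type: 'increment' });
action$.next({ type: 'increment' });
action$.next({ type: 'decrement' });
// states: [0, 1, 2, 1]
```

As written, each subscriber to state$ runs its own scan; sharing one store across subscribers would typically add an operator such as shareReplay(1).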

At this point, can we abstract the idea that the web page view responds correctly to related events even further?

All events -- Find → Related events -- Make → Response

Events that occur in chronological order are essentially data streams, so the process above can be rewritten as follows:

Source data stream -- Convert → Intermediate data stream -- Subscribe → Consume data stream

This is the basic reason reactive programming works so well on the frontend. But does this idea apply only to frontend development?

The answer is no. This idea can also be widely applied in backend development and real-time computing.

Between frontend and backend developers stands a wall of information called the REST API. The REST API separates the responsibilities of frontend and backend developers, which improves development efficiency. However, the same wall also limits what each side can see of the other's work. Let's break down this wall and look at how the same idea applies to real-time computing.

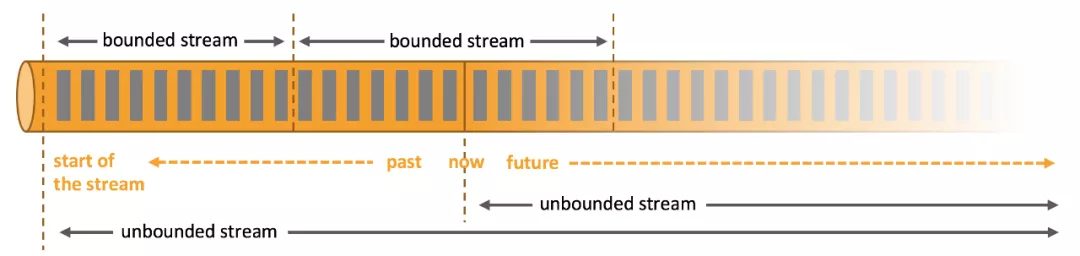

Let's look at Flink first. Apache Flink is an open-source stream processing framework developed by the Apache Software Foundation. It is used for stateful computations over bounded and unbounded data streams, and its data stream programming model processes one event at a time (event-at-a-time) on both finite and infinite data sets.

In practice, Flink is typically used to build three types of applications: event-driven applications, data analytics applications, and data pipeline (ETL) applications.

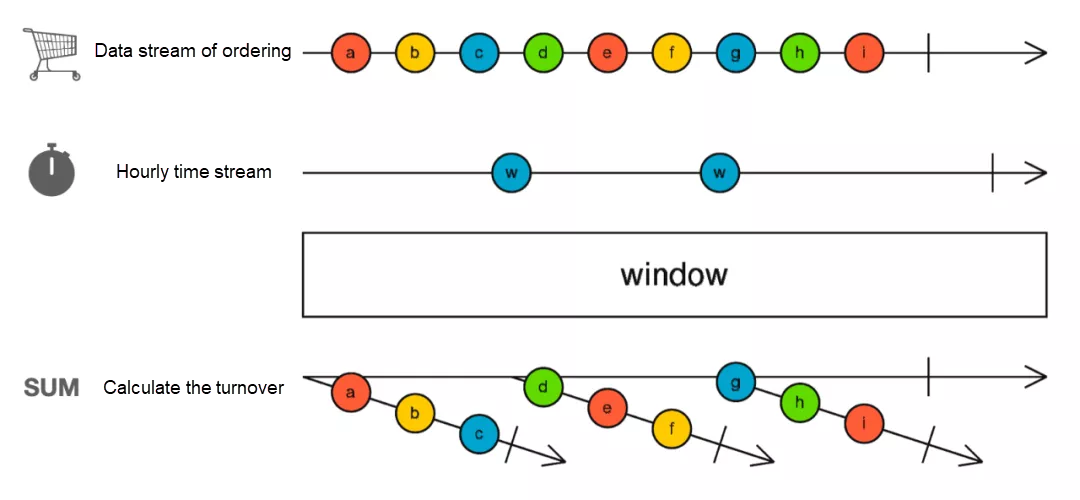

Take calculating the hourly turnover of an e-commerce platform during Double 11 (the November 11 shopping festival) as an example, and let's see whether the approach above still works.

In this scenario, the users' order data is collected first. Then, the hourly transaction totals are calculated, stored in a database, and cached in Redis. Finally, the data is fetched through an API and displayed on the web page.

The logic of data stream processing in this process is listed below:

Data stream of user orders -- Convert → Hourly transaction data stream -- Subscribe → Write to the database
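As a rough illustration of the same three stages outside any framework, here is a plain-TypeScript sketch. The `Order` shape and the in-memory `Map` standing in for the database are hypothetical, and Flink would express the middle stage as a one-hour tumbling event-time window rather than a loop. Each order is assigned to the hour in which it occurred (its event time).

```typescript
// Hypothetical order record; eventTime is when the order was placed (epoch ms).
interface Order {
  amount: number;
  eventTime: number;
}

const HOUR_MS = 60 * 60 * 1000;

// Convert: assign each order to its one-hour tumbling window and sum per window.
function hourlyTurnover(orders: Order[]): Map<number, number> {
  const totals = new Map<number, number>();
  for (const order of orders) {
    const windowStart = Math.floor(order.eventTime / HOUR_MS) * HOUR_MS;
    totals.set(windowStart, (totals.get(windowStart) ?? 0) + order.amount);
  }
  return totals;
}

// Consume: "write" each hourly total to a stand-in for the database.
const database = new Map<number, number>();
const orders: Order[] = [
  { amount: 100, eventTime: 0 },
  { amount: 50, eventTime: 30 * 60 * 1000 },
  { amount: 70, eventTime: 90 * 60 * 1000 },
];
for (const [hour, total] of hourlyTurnover(orders)) {
  database.set(hour, total);
}
// database: 0 -> 150, 3600000 -> 70
```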

It is consistent with the idea described in the previous section:

Source data stream -- Convert → Intermediate data stream -- Subscribe → Consume data stream

Described with a marble diagram, this process might look like the following figure. It seems that RxJS's window operators could do the same job. Is that really the case?

Real-time computing is much more complex than reactive programming in the frontend. Here are some examples:

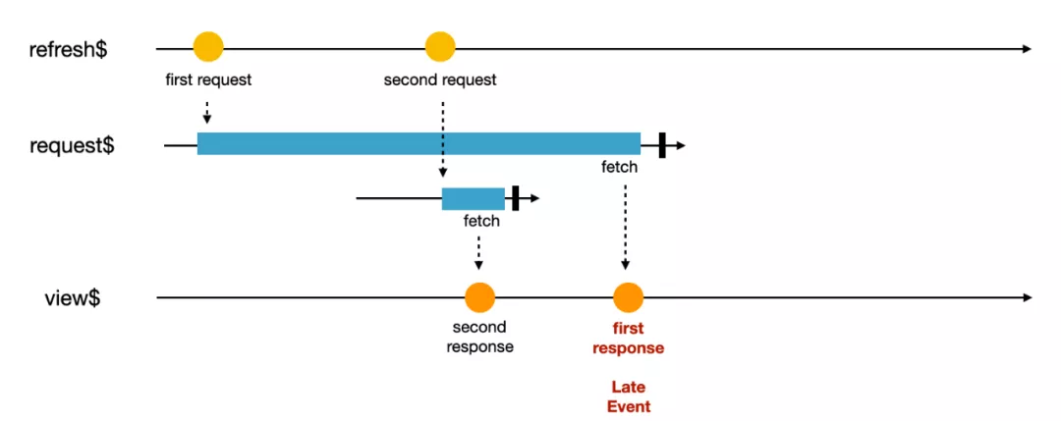

Out-of-order events also occur in frontend development. The most typical case is an earlier request receiving its response after a later request does, as shown in the following marble diagram. There are many ways to handle this on the frontend, but they are not covered in detail in this article.

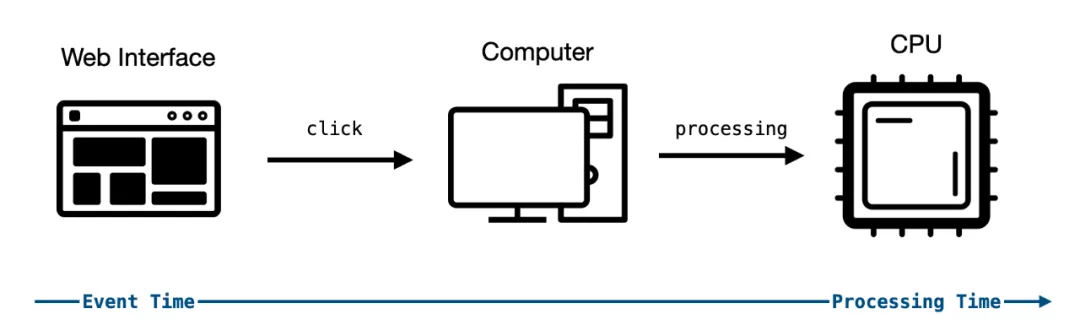

This part looks at out-of-order data in data processing. Frontend development rests on a very important premise that greatly reduces its complexity: frontend events are triggered and processed at the same time.

Imagine that user actions on the page, such as clicks and mouse movements, became asynchronous events with unknown delays. How complex would frontend development become?

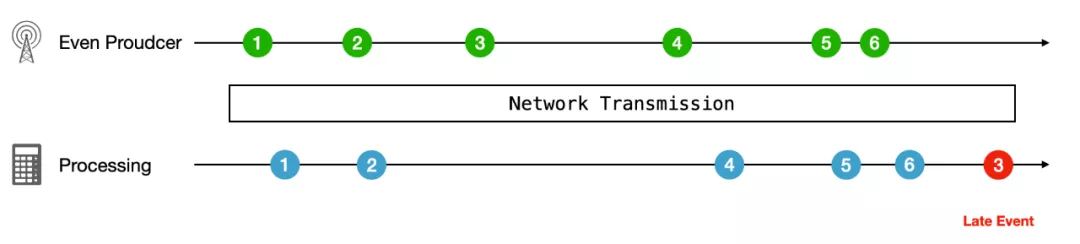

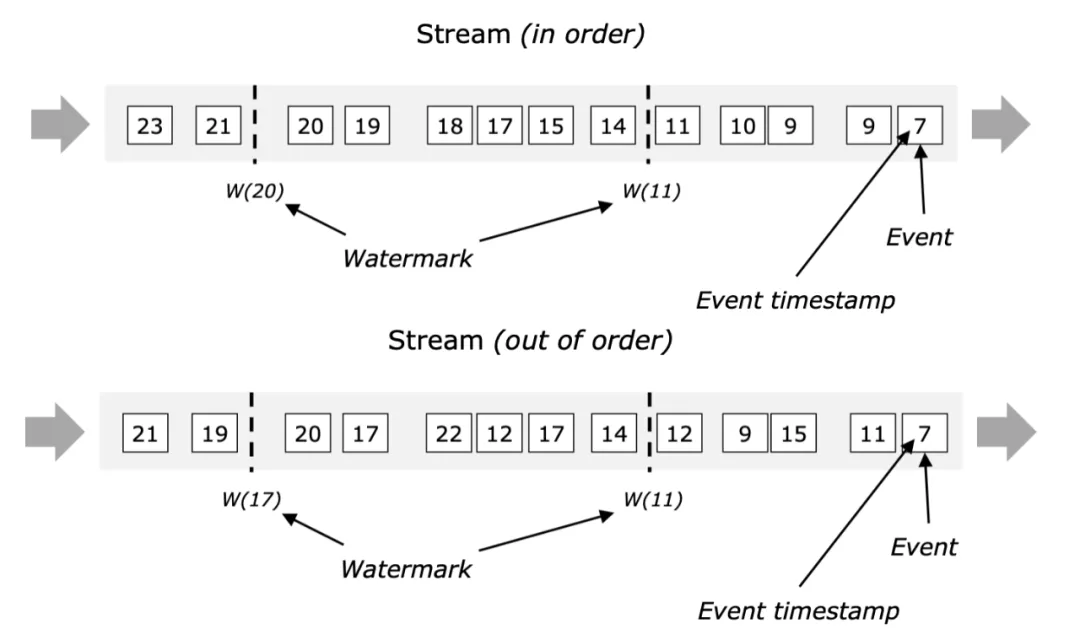

In real-time computing, however, the opposite premise holds: events are triggered and processed at different times. Take the hourly turnover calculation as an example again. As the original data stream passes through multiple layers, the data may already be out of order by the time it reaches the computing node.

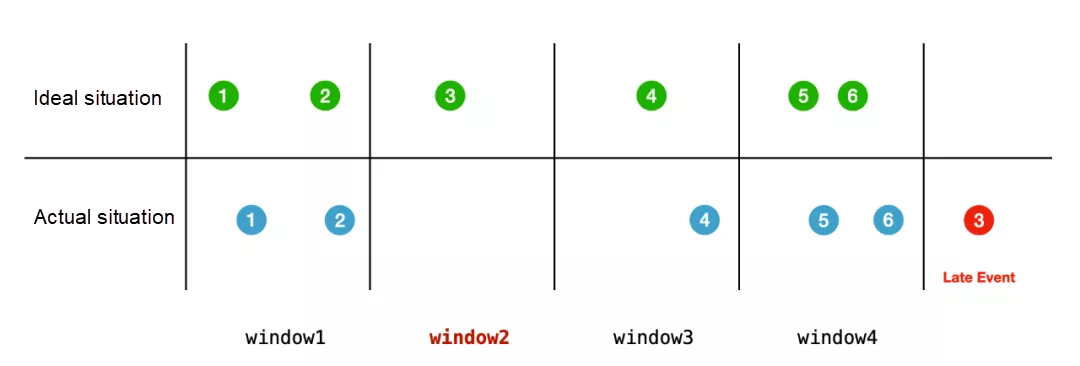

If windows are still assigned according to the arrival time of the data, the final result will be wrong.
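A toy comparison in plain TypeScript, with made-up numbers, shows why. The same three events are assigned to 10-second tumbling windows twice: once by arrival time and once by event time. One event occurs in window 1 but is delayed in transit and arrives during window 2, so arrival-time windowing credits it to the wrong window.

```typescript
// Hypothetical events: eventTime is when it happened, arrivalTime is when the
// computing node received it (both in seconds).
interface TimedEvent {
  value: number;
  eventTime: number;
  arrivalTime: number;
}

const WINDOW_SIZE = 10;

// Sum values per tumbling window, using the chosen timestamp to pick the window.
function sumByWindow(events: TimedEvent[], timeOf: (e: TimedEvent) => number): Map<number, number> {
  const sums = new Map<number, number>();
  for (const e of events) {
    const windowIndex = Math.floor(timeOf(e) / WINDOW_SIZE);
    sums.set(windowIndex, (sums.get(windowIndex) ?? 0) + e.value);
  }
  return sums;
}

const events: TimedEvent[] = [
  { value: 5, eventTime: 12, arrivalTime: 13 },
  { value: 7, eventTime: 15, arrivalTime: 25 }, // delayed in transit
  { value: 3, eventTime: 22, arrivalTime: 23 },
];

const byArrival = sumByWindow(events, e => e.arrivalTime); // 1 -> 5, 2 -> 10 (wrong)
const byEventTime = sumByWindow(events, e => e.eventTime); // 1 -> 12, 2 -> 3 (correct)
```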

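To obtain the correct result for window2, the calculation should be completed only after the late event has arrived. However, this creates a dilemma: the system cannot know whether more late events are still on the way, so waiting for them sacrifices timeliness, while not waiting sacrifices accuracy.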

Flink introduced the Watermark mechanism to solve this problem. The Watermark mechanism decides when it is unnecessary to wait for the late event. This mechanism essentially provides a compromise solution for the accuracy and timeliness of real-time computing.

There is a vivid metaphor for the watermark. At school, the teacher closes the classroom door when class starts and says, "Anyone who arrives after this is late and will be punished." In Flink, the watermark plays the role of the teacher closing the door.
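A simplified simulation of the idea, not Flink's API: here the watermark is the largest event time seen so far minus a fixed allowed delay, loosely similar to Flink's bounded-out-of-orderness watermarks. A window [start, start + size) is emitted once the watermark reaches its end, and any event whose window has already been emitted arrives after "the door is closed" and is dropped. The events, the 10-second windows, and the 5-second delay are made up.

```typescript
interface StreamEvent {
  value: number;
  eventTime: number; // seconds
}

// Simplified watermark-driven tumbling windows (not Flink's API).
function windowWithWatermark(
  events: StreamEvent[], // in arrival order
  windowSize: number,
  maxDelay: number,
): { emitted: Map<number, number>; dropped: StreamEvent[] } {
  const open = new Map<number, number>(); // windowStart -> running sum
  const emitted = new Map<number, number>(); // windowStart -> final sum
  const dropped: StreamEvent[] = [];
  let watermark = -Infinity;

  for (const e of events) {
    const start = Math.floor(e.eventTime / windowSize) * windowSize;
    if (start + windowSize <= watermark) {
      dropped.push(e); // the door is closed: this window was already emitted
      continue;
    }
    open.set(start, (open.get(start) ?? 0) + e.value);

    // The watermark only moves forward; emit every window it has passed.
    watermark = Math.max(watermark, e.eventTime - maxDelay);
    for (const [windowStart, sum] of open) {
      if (windowStart + windowSize <= watermark) {
        emitted.set(windowStart, sum);
        open.delete(windowStart);
      }
    }
  }
  return { emitted, dropped };
}

const result = windowWithWatermark(
  [
    { value: 1, eventTime: 2 },
    { value: 2, eventTime: 8 },
    { value: 4, eventTime: 12 },
    { value: 8, eventTime: 9 }, // out of order, but within the allowed delay
    { value: 16, eventTime: 17 }, // watermark reaches 12: window [0, 10) is emitted
    { value: 32, eventTime: 6 }, // arrives after its window was emitted: dropped
  ],
  10, // window size (seconds)
  5, // allowed delay (seconds)
);
// result.emitted: 0 -> 11; result.dropped: the event with eventTime 6
```

A larger allowed delay admits more late events at the cost of emitting results later, which is the accuracy versus timeliness trade-off described above.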

When using RxJS in the browser, have you considered what happens when an observable produces data faster than its operators or observers can consume it? The unconsumed data piles up in memory, a situation known as back pressure. Fortunately, on the frontend, back pressure only takes up a lot of browser memory and has no more serious consequences.

In real-time computing, however, what can be done when this happens?

For many streaming applications, data loss is unacceptable. Flink has designed a mechanism to avoid this:

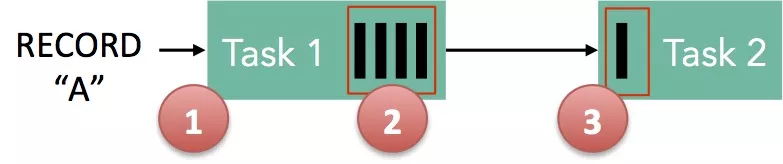

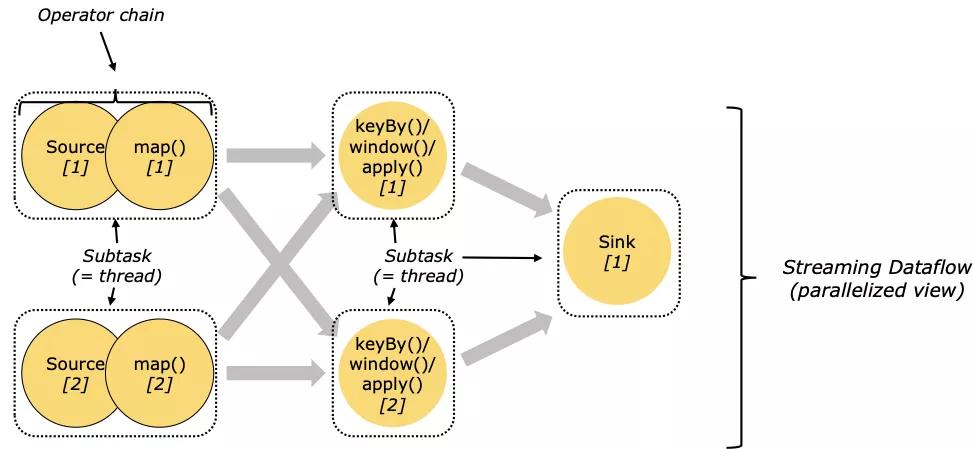
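The figures describe Flink's own mechanism. As a much-simplified, hypothetical illustration of the idea behind the credit-based flow control that Flink's network stack has used since version 1.5, the sketch below lets a producer send only as many records as the consumer has announced free buffer slots ("credits") for. When the consumer falls behind, the producer stops sending and records wait upstream, instead of being dropped or piling up without bound downstream. The `Consumer` class and `runTick` function are illustrative names, not Flink APIs.

```typescript
// Hypothetical consumer with a fixed number of buffer slots.
class Consumer {
  private buffer: number[] = [];
  readonly processed: number[] = [];
  constructor(private readonly capacity: number) {}

  // Credits = free buffer slots the consumer announces to the producer.
  credits(): number {
    return this.capacity - this.buffer.length;
  }

  receive(record: number): void {
    if (this.buffer.length >= this.capacity) throw new Error('buffer overflow');
    this.buffer.push(record);
  }

  // Slow consumer: handles at most n buffered records per tick.
  processUpTo(n: number): void {
    this.processed.push(...this.buffer.splice(0, n));
  }
}

// The producer sends only what the consumer has credits for; the rest stays
// in its backlog, so back pressure propagates upstream instead of losing data.
function runTick(backlog: number[], consumer: Consumer, processRate: number): void {
  const sendable = Math.min(consumer.credits(), backlog.length);
  for (const record of backlog.splice(0, sendable)) consumer.receive(record);
  consumer.processUpTo(processRate);
}

const backlog = Array.from({ length: 10 }, (_, i) => i); // fast producer: 10 records ready at once
const consumer = new Consumer(3);
let ticks = 0;
while (backlog.length > 0 || consumer.credits() < 3) {
  runTick(backlog, consumer, 2);
  ticks++;
}
```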

In real-time computing, billions of data records may need to be processed per second, which a single server cannot handle alone. In Flink, an operator's computing logic is executed by parallel subtasks running on different TaskManagers. When a machine fails, how can the overall computation and its state be recovered so that the final results are still correct?

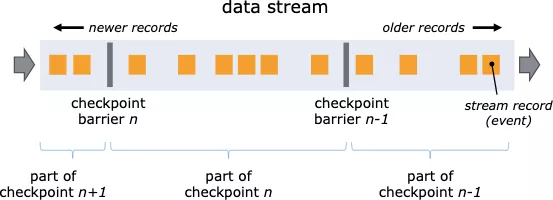

Flink introduces the checkpoint mechanism to restore the state and computing position of tasks. Checkpoint enables good fault tolerance. Flink uses a variant of the Chandy-Lamport algorithm, which is called asynchronous barrier snapshotting.

When checkpointing is enabled, the offsets of all sources are recorded, and numbered checkpoint barriers are inserted into their streams. As the barriers flow through each operator, they separate the records that belong to one checkpoint from those that belong to the next.

When a failure occurs, Flink restores the state saved in the latest completed checkpoint and resumes reading the sources from the recorded offsets, which ensures that the final result is correct.
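A single-operator toy model of this recovery idea in plain TypeScript. It is not Flink's API and leaves out the barrier alignment across parallel subtasks that makes Chandy-Lamport-style snapshots consistent in a distributed job. Every few records, a barrier triggers a snapshot of the source offset together with the operator state (a running sum). After a simulated failure, the job restores both and replays the source from the saved offset. Restoring only one of the two would double-count or lose records.

```typescript
interface Checkpoint {
  sourceOffset: number; // position in the source to resume reading from
  state: number; // operator state (here: a running sum)
}

// Processes a replayable source, taking a checkpoint every `interval` records.
// If failAt is given, the job "crashes" once when it is about to read that
// offset, then restarts from the most recent checkpoint.
function runWithCheckpoints(source: number[], interval: number, failAt?: number): number {
  let checkpoint: Checkpoint = { sourceOffset: 0, state: 0 };
  let offset = checkpoint.sourceOffset;
  let sum = checkpoint.state;
  let crashed = false;

  while (offset < source.length) {
    if (offset === failAt && !crashed) {
      crashed = true;
      // Recovery: restore the operator state and rewind the source together.
      offset = checkpoint.sourceOffset;
      sum = checkpoint.state;
      continue;
    }
    sum += source[offset];
    offset++;
    // A barrier after every `interval` records: snapshot offset and state together.
    if (offset % interval === 0) {
      checkpoint = { sourceOffset: offset, state: sum };
    }
  }
  return sum;
}

const source = [1, 2, 3, 4, 5, 6, 7];
const withoutFailure = runWithCheckpoints(source, 3); // 28
const withFailure = runWithCheckpoints(source, 3, 5); // 28: crash before offset 5, resume from offset 3
```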

Due to limited space, this article covers only a small part of the topic. Still, reactive programming and real-time computing share the same model. We hope this article gives you some insight into data streams.

Source data stream -- Convert → Intermediate data stream -- Subscribe → Consume data stream
