By Digoal

Many systems retain users' behavior logs, which include information like browsing behaviors, social behaviors, and operation behaviors. Typical application scenarios include SQL database auditing and behavior auditing of bastion hosts within enterprises. The previous best practice article uses PostgreSQL to store audit logs and creates full-text indexes in the fields that need to be retrieved.

A machine with an SSD can write up to 70,000 records per second (that is, 2.8 million full-text index entries can be created per second). When this performance metric is met, the CPU usage and disk I/O are maxed out.

In addition to full-text indexing, what other methods can be used for this purpose?

This article describes how to implement audit log retrieval and high-speed writes from a different perspective.

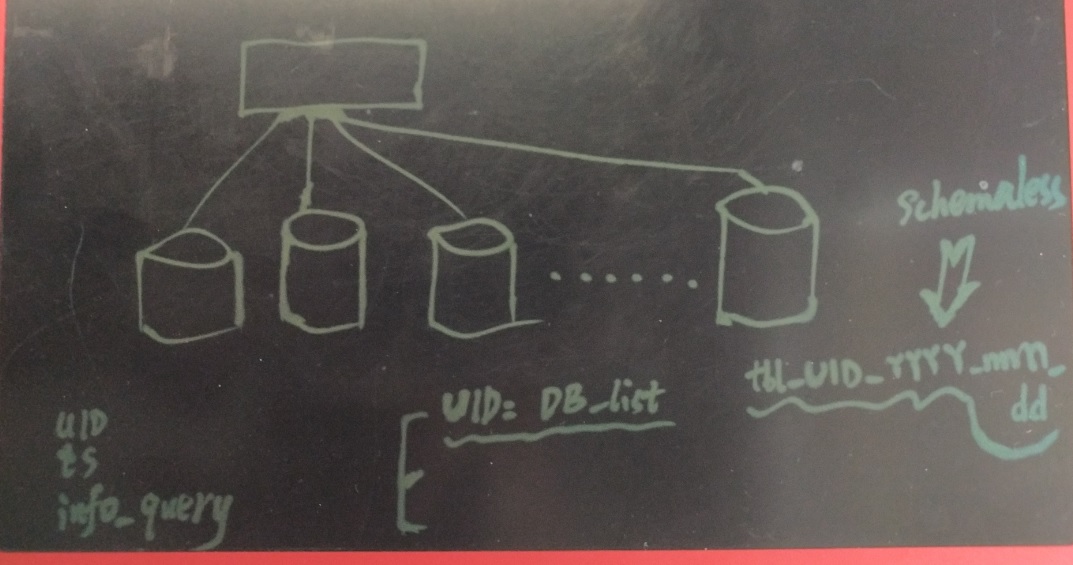

Audit logs have three dimensional query criteria: UID, time range, and term match.

1. UIDs represents user IDs and are used to identify behavior data of different users.

2. The TS field represents the a point in time at which a log is generated.

3. The behavior data field represents user behaviors.

Optimization ideas:

1. Include UID in a table name and create a table for each UID.

(Advantage: This can omit a field and save space. This can also avoid confusing data of different users at the time of data organization and prevent I/O from becoming excessively large when queries are performed, improving the query efficiency.)

(Disadvantages: Generating a table for each UID may lead to too many tables and large metadata. A structure change may involve too many tables.)

2. Use a BRIN index in the TS field. Heap storage and value order have a strong linear correlation because behavior data generated for each user is chronological.

3. Scramble data before storing data. Use the corresponding DB list of the metadata UID and randomly write data into corresponding DBs. When querying data, query all aggregated DBs by TS range to return the results. (The application layer is responsible for the merge sort operation. No indexes are used in the user behavior field.)

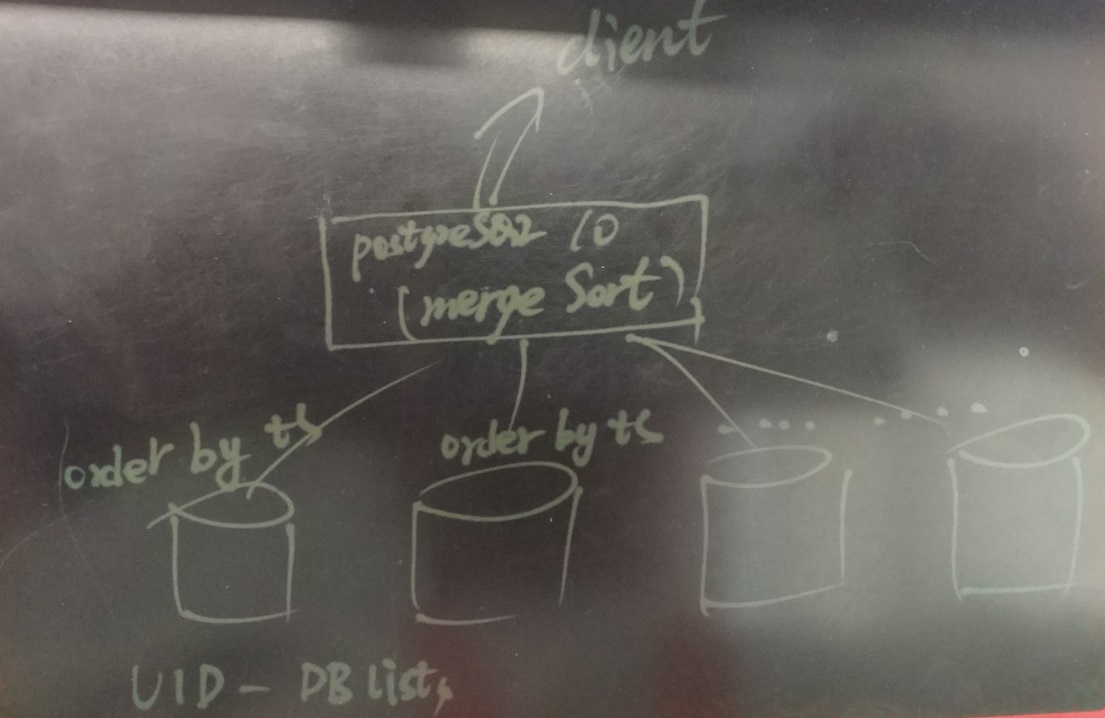

The merge sort feature is built in PostgreSQL 10. If you need to use a middle layer to implement "merge sort", PostgreSQL will be a good choice.

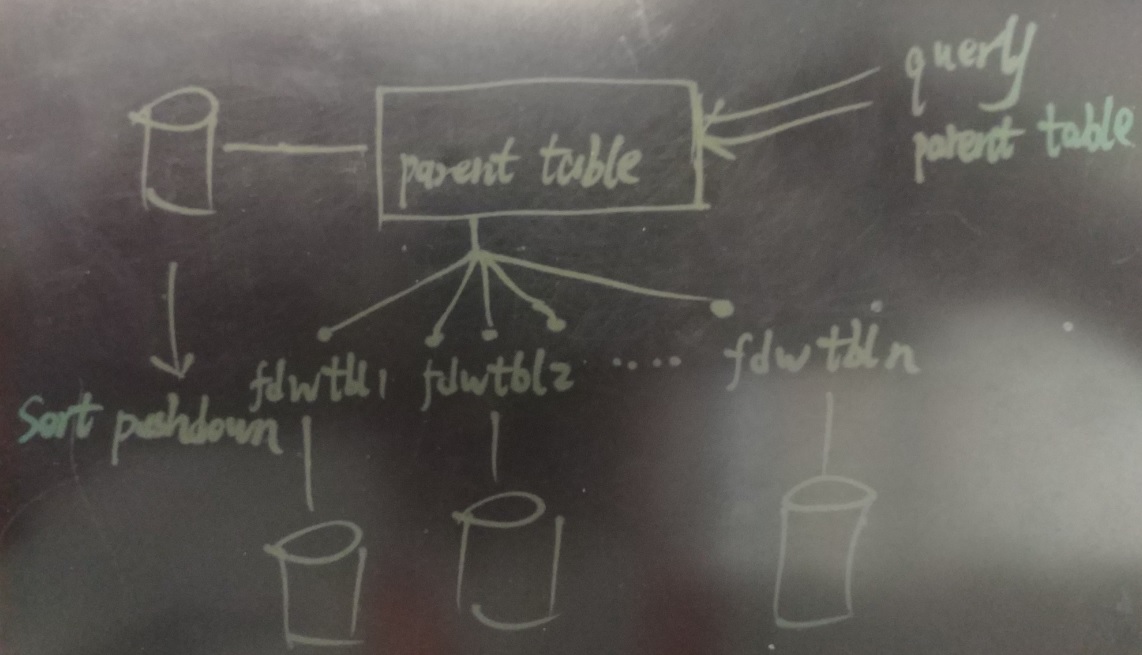

You just need to configure all the data sources as the fdw sub-table.

Example

postgres=# explain select * from bptest where ts between now()-interval '20 min' and now()-interval '10 min' order by ts;

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------

Merge Append (cost=34.00..634325.20 rows=4808182 width=524)

Sort Key: bptest.ts

-> Index Scan using idx_bptest_ts on bptest (cost=0.14..3.15 rows=1 width=40)

Index Cond: ((ts >= (now() - '00:20:00'::interval)) AND (ts <= (now() - '00:10:00'::interval)))

-> Index Scan using bptest_32_20170522_ts_idx on bptest_32_20170522 (cost=0.30..4802.19 rows=49918 width=524)

Index Cond: ((ts >= (now() - '00:20:00'::interval)) AND (ts <= (now() - '00:10:00'::interval)))

-> Index Scan using bptest_64_20170522_ts_idx on bptest_64_20170522 (cost=0.30..4114.22 rows=42820 width=524)

Index Cond: ((ts >= (now() - '00:20:00'::interval)) AND (ts <= (now() - '00:10:00'::interval)))

-> Index Scan using bptest_34_20170522_ts_idx on bptest_34_20170522 (cost=0.30..3984.00 rows=41459 width=524)

Index Cond: ((ts >= (now() - '00:20:00'::interval)) AND (ts <= (now() - '00:10:00'::interval)))

-> Index Scan using bptest_37_20170522_ts_idx on bptest_37_20170522 (cost=0.30..4898.77 rows=50972 width=524)

Index Cond: ((ts >= (now() - '00:20:00'::interval)) AND (ts <= (now() - '00:10:00'::interval)))

-> Index Scan using bptest_31_20170522_ts_idx on bptest_31_20170522 (cost=0.30..4682.19 rows=48668 width=524)

..................... Solution 1:

If a GIN index is used to build full-text search, CPU and I/O resources are almost exhausted when the TPS reaches 60,000. BCACHE GC or slight I/O fluctuation will lead to significant performance change.

For more information about solution 1, refer to the first article.

Solution 2:

Use the UID and ts_prefix partitions to ensure that a user's data is stored in a heap and reduce the I/O overhead during the process of retrieving data.

Because the TS field has the time sequence property, a BRIN can be used to reduce the index size.

When data size reaches a certain level, the parallel query property is automatically triggered in PostgreSQL 10, improving the query performance.

Since UID data has been split into shards, performing a query requires two variables: TS and text match. Data assigned to each node is very small in size. Combining fuzzy match (instead of full-text retrieval) and multicore parallelism in PostgreSQL 10 can perfectly meet the query response latency requirement.

create table db_meta

(

dbid int primary key, -- A record for each database node, representing a database shard

groupid int -- Each shard belongs to a group

conn_info text -- Connection information (URL)

);

create table uid_mapping

(

uid int primary key, -- A user's unique identifier

dbgroupid int -- A database group, representing that data of a specific user is written into all shards in this group

); Behavior data is retained for a time period and then cleared.

If you think that this design method is too complicated, use all the databases as a large pool and randomly write all the users into this pool.

This design is similar to the design concepts of Greenplum and HAWQ. Greenplum adopts the big pool concept, while HAWQ adopts the grouping concept.

Main table structure:

create table bptest

(

ts timestamptz, -- Time when a behavior occurs

content text -- Behavior content

); The table name of each user is bptest_$uid_$yyyymmdd.

The structure and index remain the same as the main table.

Since the storage sequence for the TS field and the sequence of the values have a strong linear correlation, it is advised to use BRIN indexes.

BRIN indexes occupy hundreds of times smaller space than B-tree indexes while improving the performance of data writing.

create index idx_bptest_ts on bptest using brin(ts); -- Create BRIN indexes in the TS field Each shard belongs to a group, and data of each UID is randomly written into all the shards in a specified group.

If you think that this design method is too complicated, use all the databases as a large pool and randomly write all the users into this pool.

This design is similar to the design concepts of Greenplum and HAWQ. Greenplum adopts the big pool concept, while HAWQ adopts the grouping concept.

When querying behavior data of a specific UID, query the data in all the shards in parallel and perform the "merge sort" operation by the TS field and return.

"Merge sort" can be implemented either in databases or in the application layer.

If you want to implement "merge sort" at the database level, you can use postgres_fdw in PostgreSQL 10. Each shard of each UID corresponds to a FDW table and is mounted to the parent table of each UID.

When you perform queries in the parent table, "merge sort" will be used to order data by TS.

If you want to implement "merge sort" in the application layer, the method is similar to the previous implementation method — query all the shards of a UID in parallel (results of order by are returned in each shard) and use the "merge sort" method in the application layer to return results to the client.

Because each UID corresponds to multiple table bptest_$uid_$yyyymmdd values, we can design schemaless writing on the database side similar to MongoDB:

Insert tables, if any. Create and insert tables if there are no tables.

Create a function that automatically creates target tables.

create or replace function create_schemaless(target name) returns void as

$$

declare

begin

execute format('create table if not exists %I (like bptest including all)', target);

exception when others then

return;

end;

$$

language plpgsql strict; Create a function that inserts data and uses dynamic SQL. Invoke the table creation function to create tables if an error occurs indicating that there are no tables.

create or replace function ins_schemaless(uid int, ts timestamptz, content text) returns void as

$$

declare

target name := 'bptest_'||uid||'_'||to_char(ts,'yyyymmdd');

begin

execute format('insert into %I values (%L, %L)', target, ts, content);

exception

WHEN SQLSTATE '42P01' THEN

perform create_schemaless(target);

execute format('insert into %I values (%L, %L)', target, ts, content);

end;

$$

language plpgsql strict; The schemaless design on the database side is implemented at the cost of performance to some extent, because bind variables cannot be used.

We recommend that you implement the schemaless design in the business layer (automatically splitting and joining table names, automatically creating tables ) to improve performance.

Historical data can be cleared directly by dropping table shards (bptest_$uid_$yyyymmdd).

If data needs to be retained, you can write and persist data into OSS by using the OSS_FDW interface in Alibaba Cloud RDS for PostgreSQL. Use FDW when you need to read data.

For information about how to implement the separation of cloud storage and computation, see the following articles:

RDS PostgreSQL: use oss_fdw to read/write OSS

HybridDB PostgreSQL: use oss_fdw to read/write OSS

If audit logs need to be analyzed, you can write data in RDS for PostgreSQL into OSS and use HybridDB for PostgreSQL to analyze data.

Use the same server with 12 instances as in the previous article.

1. Configure environment variables

vi env_pg10.sh

export PS1="$USER@`/bin/hostname -s`-> "

export PGPORT=$(($1+1920))

export PGDATA=/disk$1/digoal/pgdata/pg_root$(($1+1920))

export LANG=en_US.utf8

export PGHOME=/home/digoal/pgsql10_8k

export LD_LIBRARY_PATH=$PGHOME/lib:/lib64:/usr/lib64:/usr/local/lib64:/lib:/usr/lib:/usr/local/lib:$LD_LIBRARY_PATH

export LD_RUN_PATH=$LD_LIBRARY_PATH

export DATE=`date +"%Y%m%d%H%M"`

export PATH=$PGHOME/bin:$PATH:.

export MANPATH=$PGHOME/share/man:$MANPATH

export PGHOST=127.0.0.1

export PGUSER=postgres

export PGDATABASE=postgres

alias rm='rm -i'

alias ll='ls -lh'

unalias vi 2. Initialize SQL

vi init.sql

-- Clean up the environment

drop table bptest cascade;

-- Initialize the parent table

create table bptest

(

ts timestamptz, -- Time when a behavior occurs

content text -- Behavior content

);

-- Create an index

-- create index idx_bptest_ts on bptest using brin(ts) with(pages_per_range=1); -- BRIN index in the TS field. If output results do not need to be ordered by TS, we recommend that you use a BRIN index

create index idx_bptest_ts on bptest using btree(ts) ; -- If output results do not need to be sorted by TS, we recommend that you use a B-tree index

-- Schemaless design, auto table creation function

create or replace function create_schemaless(target name) returns void as

$$

declare

begin

execute format('create table if not exists %I (like bptest including all) inherits(bptest)', target);

-- Here you can set the column statistic

exception when others then

return;

end;

$$

language plpgsql strict;

-- The function for writing data

create or replace function ins_schemaless(uid int, ts timestamptz, content text) returns void as

$$

declare

target name := 'bptest_'||uid||'_'||to_char(ts,'yyyymmdd');

begin

execute format('insert into %I values (%L, %L)', target, ts, content);

exception

WHEN SQLSTATE '42P01' THEN

perform create_schemaless(target);

execute format('insert into %I values (%L, %L)', target, ts, content);

end;

$$

language plpgsql strict;

-- The function for generating random strings. This function consumes a large number of CPU resources in this example

CREATE OR REPLACE FUNCTION public.gen_rand_str(integer)

RETURNS text

LANGUAGE sql

STRICT

AS $function$

select string_agg(a[(random()*6)::int+1],'') from generate_series(1,$1), (select array['a','b','c','d','e','f',' ']) t(a);

$function$;

-- Pagination evaluation function for evaluating how many records are returned. Avoid using count(*) to evaluate the exact value

CREATE FUNCTION count_estimate(query text) RETURNS INTEGER AS

$func$

DECLARE

rec record;

ROWS INTEGER;

BEGIN

FOR rec IN EXECUTE 'EXPLAIN ' || query LOOP

ROWS := SUBSTRING(rec."QUERY PLAN" FROM ' rows=([[:digit:]]+)');

EXIT WHEN ROWS IS NOT NULL;

END LOOP;

RETURN ROWS;

END

$func$ LANGUAGE plpgsql;

-- Random query performance evaluation function

CREATE or replace FUNCTION rand_query(

v_uid int, -- User ID

v_epoch int, -- Search beginning time

v_randts int, -- Search interval (seconds)

v_randlog text, -- Query criteria

v_cnt int -- Return a specific number of rows

) RETURNS void AS

$func$

DECLARE

tbl name := 'bptest_'||v_uid||'_'||to_char(('1970-01-01'::date+(v_epoch||' sec')::interval),'yyyymmdd');

sql text;

cnt int;

BEGIN

-- agination evaluation

sql := format('select * from %I where ts between %L and %L and content ~ %L order by ts', tbl, '1970-01-01'::date+(v_epoch||' sec')::interval, '1970-01-01'::date+((v_epoch+v_randts)||' sec')::interval, v_randlog);

select count_estimate(sql) into cnt;

raise notice 'cnt: %', cnt;

-- Use count(*) to return the exact number of pages

-- Return a specific number of records

sql := format('select * from %I where ts between %L and %L and content ~ %L order by ts limit %L', tbl, '1970-01-01'::date+(v_epoch||' sec')::interval, '1970-01-01'::date+((v_epoch+v_randts)||' sec')::interval, v_randlog, v_cnt);

execute sql;

-- You can transfer the code in the function to the application side and use a cursor to obtain data in order to consistency on each page

-- Use the reverse order to rejoin SQL (order by origin_time desc) if you want to return the last page.

exception when others then

return;

END

$func$ LANGUAGE plpgsql;Initialize each database instance

psql -f ./init.sql -p 1921

...

psql -f ./init.sql -p 1932 12 databases and 100 UIDs.

Write 10 million records into each database for each UID and write a total of 120 million records for each UID. Write a total of 12 billion records for all UIDs.

A random string of a specified length is generated using gen_rand_str function (gen_rand_str has a large amount of CPU overhead, and stress testing 2 use methods generated by other random values).

vi test.sql

\set uid random(1,100)

select ins_schemaless(:uid, now(), gen_rand_str(512)); Test scripts

vi test.sh

for ((i=1;i<13;i++))

do

. /home/digoal/env_pg10.sh ${i}

nohup pgbench -M prepared -n -r -P 3 -f ./test.sql -c 3 -j 3 -t 100000000 >/tmp/bptest_${i}.log 2>&1 &

done Write performance:

1. When using the BRIN index, the write rate is 94,700/s

2. When using the BTree index, the write rate is 79,000/s

3. Server resource overhead:

CPU:

Cpu(s): 90.7%us, 7.1%sy, 0.1%ni, 0.4%id, 0.1%wa, 0.0%hi, 1.6%si, 0.0%st

IOUITL:

SSD: 50 % 1. Most of the CPU overhead exists in functions that generate random strings, so CPU consumption is much lower in actual scenarios.

See below

perf top -ag

60953.00 6.0% ExecInterpExpr /home/digoal/pgsql10_8k/bin/postgres

33647.00 3.3% AllocSetAlloc /home/digoal/pgsql10_8k/bin/postgres

27560.00 2.7% advance_aggregates /home/digoal/pgsql10_8k/bin/postgres

22894.00 2.3% base_yyparse /home/digoal/pgsql10_8k/bin/postgres

21976.00 2.2% SearchCatCache /home/digoal/pgsql10_8k/bin/postgres

21768.00 2.2% array_seek /home/digoal/pgsql10_8k/bin/postgres

20957.00 2.1% appendBinaryStringInfo /home/digoal/pgsql10_8k/bin/postgres

19912.00 2.0% memcpy /lib64/libc-2.12.so

17058.00 1.7% array_get_element /home/digoal/pgsql10_8k/bin/postgres

14916.00 1.5% heap_form_minimal_tuple /home/digoal/pgsql10_8k/bin/postgres

13617.00 1.3% heap_fill_tuple /home/digoal/pgsql10_8k/bin/postgres

12201.00 1.2% __rint /lib64/libm-2.12.so

10938.00 1.1% palloc0 /home/digoal/pgsql10_8k/bin/postgres

10683.00 1.1% MemoryContextAllocZeroAligned /home/digoal/pgsql10_8k/bin/postgres

10591.00 1.0% ExecStoreMinimalTuple /home/digoal/pgsql10_8k/bin/postgres

10292.00 1.0% string_agg_transfn /home/digoal/pgsql10_8k/bin/postgres

10202.00 1.0% advance_transition_function /home/digoal/pgsql10_8k/bin/postgres

9973.00 1.0% core_yylex /home/digoal/pgsql10_8k/bin/postgres 2. Bcache Issue

Garbage collection by bcache has a very significant impact on I/O.

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

40207 root 20 0 0 0 0 R 100.0 0.0 34:47.86 [bch_gc-0b34a79a] The await time has reached the second level.

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

bcache11 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

bcache10 0.00 6054.00 1.00 105.00 8.00 48640.00 458.94 17.19 157.73 9.43 100.00

bcache9 0.00 2431.00 0.00 100.00 0.00 29408.00 294.08 4.22 102.87 9.86 98.60

bcache8 0.00 4013.00 0.00 85.00 0.00 37352.00 439.44 10.04 174.19 11.76 100.00

bcache7 0.00 1661.00 0.00 58.00 0.00 12952.00 223.31 2.34 53.47 17.02 98.70

bcache6 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

bcache5 0.00 48.00 0.00 71.00 0.00 44896.00 632.34 183.24 2124.06 14.08 100.00

bcache4 0.00 6506.00 0.00 211.00 0.00 56312.00 266.88 74.89 488.20 4.74 100.00

bcache3 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

bcache2 0.00 5502.00 0.00 103.00 0.00 44168.00 428.82 144.85 709.10 9.71 100.00

bcache1 0.00 12302.00 0.00 34.00 0.00 13464.00 396.00 84.11 877.03 29.41 100.00

bcache0 0.00 15148.00 0.00 260.00 0.00 42504.00 163.48 47.39 155.56 3.85 100.00 3. With a smooth checkpoint configured, there are no checkpoint problems and the sync time is very short.

2017-05-23 10:49:55.749 CST,,,25095,,59239d76.6207,9,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint starting: time",,,,,,,,"LogCheckpointStart, xlog.c:8369",""

2017-05-23 10:50:25.434 CST,,,25095,,59239d76.6207,10,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint complete: wrote 233036 buffers (5.6%); 0 WAL file(s) added, 0 removed, 131 recycled; write=29.250 s, sync=0.018 s, total=29.685 s; sync files=300, longest=0.004 s, average=0.000 s; distance=2145650 kB, estimate=2145650 kB",,,,,,,,"LogCheckpointEnd, xlog.c:8451",""

2017-05-23 10:54:55.529 CST,,,25095,,59239d76.6207,11,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint starting: time",,,,,,,,"LogCheckpointStart, xlog.c:8369",""

2017-05-23 10:57:59.222 CST,,,25095,,59239d76.6207,12,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint complete: wrote 191434 buffers (4.6%); 0 WAL file(s) added, 0 removed, 131 recycled; write=118.012 s, sync=59.816 s, total=183.693 s; sync files=300, longest=16.126 s, average=0.199 s; distance=1752021 kB, estimate=2106288 kB",,,,,,,,"LogCheckpointEnd, xlog.c:8451",""

2017-05-23 10:59:55.328 CST,,,25095,,59239d76.6207,13,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint starting: time",,,,,,,,"LogCheckpointStart, xlog.c:8369",""

2017-05-23 11:00:25.350 CST,,,25095,,59239d76.6207,14,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint complete: wrote 176278 buffers (4.2%); 0 WAL file(s) added, 0 removed, 107 recycled; write=29.688 s, sync=0.009 s, total=30.021 s; sync files=300, longest=0.003 s, average=0.000 s; distance=1630757 kB, estimate=2058734 kB",,,,,,,,"LogCheckpointEnd, xlog.c:8451",""

2017-05-23 11:04:55.467 CST,,,25095,,59239d76.6207,15,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint starting: time",,,,,,,,"LogCheckpointStart, xlog.c:8369",""

2017-05-23 11:05:25.381 CST,,,25095,,59239d76.6207,16,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint complete: wrote 232981 buffers (5.6%); 0 WAL file(s) added, 0 removed, 99 recycled; write=29.555 s, sync=0.013 s, total=29.914 s; sync files=300, longest=0.006 s, average=0.000 s; distance=2142180 kB, estimate=2142180 kB",,,,,,,,"LogCheckpointEnd, xlog.c:8451",""

2017-05-23 11:06:44.503 CST,,,36368,"127.0.0.1:44645",5923a744.8e10,1,"",2017-05-23 11:06:44 CST,,0,LOG,00000,"connection received: host=127.0.0.1 port=44645",,,,,,,,"BackendInitialize, postmaster.c:4178",""

2017-05-23 11:06:44.504 CST,"postgres","postgres",36368,"127.0.0.1:44645",5923a744.8e10,2,"authentication",2017-05-23 11:06:44 CST,13/223,0,LOG,00000,"connection authorized: user=postgres database=postgres",,,,,,,,"PerformAuthentication, postinit.c:272",""

2017-05-23 11:09:55.512 CST,,,25095,,59239d76.6207,17,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint starting: time",,,,,,,,"LogCheckpointStart, xlog.c:8369",""

2017-05-23 11:09:56.221 CST,"postgres","postgres",36368,"127.0.0.1:44645",5923a744.8e10,3,"idle",2017-05-23 11:06:44 CST,,0,LOG,00000,"disconnection: session time: 0:03:11.717 user=postgres database=postgres host=127.0.0.1 port=44645",,,,,,,,"log_disconnections, postgres.c:4500","psql"

2017-05-23 11:10:25.512 CST,,,25095,,59239d76.6207,18,,2017-05-23 10:24:54 CST,,0,LOG,00000,"checkpoint complete: wrote 232090 buffers (5.5%); 0 WAL file(s) added, 0 removed, 131 recycled; write=29.489 s, sync=0.141 s, total=29.999 s; sync files=300, longest=0.033 s, average=0.000 s; distance=2136269 kB, estimate=2141589 kB",,,,,,,,"LogCheckpointEnd, xlog.c:8451","" Use another method to generate random strings

postgres=# select length(concat_ws('',random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random()));

length

--------

512

(1 row)

vi test.sql

\set uid random(1,100)

select ins_schemaless(:uid, now(), concat_ws('',random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random(),random())); Test scripts

vi test.sh

for ((i=1;i<13;i++))

do

. /home/digoal/env_pg10.sh ${i}

nohup pgbench -M prepared -n -r -P 3 -f ./test.sql -c 10 -j 10 -t 100000000 >/tmp/bptest_${i}.log 2>&1 &

done Write performance:

1. When using the BTree index, the write rate is 240,000/s

2. Server resource overhead:

CPU:

Cpu(s): 76.7%us, 14.6%sy, 0.1%ni, 2.3%id, 2.1%wa, 0.0%hi, 4.1%si, 0.0%st

IOUITL:

SSD: 67 % A single node has 21 million records.

postgres=# select count(*) from bptest_1_20170522;

count

----------

21106948

(1 row)

Time: 1776.889 ms (00:01.777) Query requirements:

1. Range query and output ordering

In 2.5 seconds, 4.62 million records are returned.

postgres=# explain (analyze,verbose,timing,costs,buffers) select * from bptest_1_20170522 where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08' order by ts;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Index Scan using bptest_1_20170522_ts_idx on public.bptest_1_20170522 (cost=0.44..2373942.95 rows=4631011 width=524) (actual time=0.015..2326.653 rows=4622534 loops=1)

Output: ts, content

Index Cond: ((bptest_1_20170522.ts >= '2017-05-22 19:04:19.05347+08'::timestamp with time zone) AND (bptest_1_20170522.ts <= '2017-05-22 20:04:19.05347+08'::timestamp with time zone))

Buffers: shared hit=4639344

Planning time: 0.207 ms

Execution time: 2578.147 ms

(6 rows)

Time: 2578.789 ms (00:02.579) 2. Range query + full-text search query, and output ordering

In 8.5 seconds, 29,411.96 million records are returned at the same time.

postgres=# explain (analyze,verbose,timing,costs,buffers) select * from bptest_1_20170522

where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08'

and content ~ 'abc' and content ~ 'bcd'

order by ts;

QUERY PLAN

--------------------------------------------------------------------------------------------------------------------------

Gather Merge (cost=1890834.11..1933136.32 rows=2870936 width=524) (actual time=6601.842..8136.187 rows=2941196 loops=1)

Output: ts, content

Workers Planned: 4

Workers Launched: 4

Buffers: shared hit=84046, temp read=102440 written=102588

-> Sort (cost=1890834.06..1892628.39 rows=717734 width=524) (actual time=6584.684..6804.063 rows=588239 loops=5)

Output: ts, content

Sort Key: bptest_1_20170522.ts

Sort Method: external merge Disk: 313080kB

Buffers: shared hit=347169, temp read=501609 written=502338

Worker 0: actual time=6582.649..6803.139 rows=588224 loops=1

Buffers: shared hit=66037, temp read=100242 written=100388

Worker 1: actual time=6590.768..6813.019 rows=587934 loops=1

Buffers: shared hit=66168, temp read=100191 written=100337

Worker 2: actual time=6579.297..6799.509 rows=587915 loops=1

Buffers: shared hit=66014, temp read=100172 written=100318

Worker 3: actual time=6569.191..6785.155 rows=578030 loops=1

Buffers: shared hit=64904, temp read=98564 written=98707

-> Parallel Bitmap Heap Scan on public.bptest_1_20170522 (cost=72481.78..1603389.84 rows=717734 width=524) (actual time=338.604..5182.340 rows=588239 loops=5)

Output: ts, content

Recheck Cond: ((bptest_1_20170522.ts >= '2017-05-22 19:04:19.05347+08'::timestamp with time zone) AND (bptest_1_20170522.ts <= '2017-05-22 20:04:19.05347+08'::timestamp with time zone))

Rows Removed by Index Recheck: 19

Filter: ((bptest_1_20170522.content ~ 'abc'::text) AND (bptest_1_20170522.content ~ 'bcd'::text))

Rows Removed by Filter: 336268

Heap Blocks: exact=7063 lossy=60173

Buffers: shared hit=347141

Worker 0: actual time=336.885..5215.415 rows=588224 loops=1

Buffers: shared hit=66030

Worker 1: actual time=337.105..5239.414 rows=587934 loops=1

Buffers: shared hit=66161

Worker 2: actual time=337.128..5213.433 rows=587915 loops=1

Buffers: shared hit=66007

Worker 3: actual time=337.078..5129.862 rows=578030 loops=1

Buffers: shared hit=64897

-> Bitmap Index Scan on bptest_1_20170522_ts_idx (cost=0.00..71764.05 rows=4631011 width=0) (actual time=336.215..336.215 rows=4622534 loops=1)

Index Cond: ((bptest_1_20170522.ts >= '2017-05-22 19:04:19.05347+08'::timestamp with time zone) AND (bptest_1_20170522.ts <= '2017-05-22 20:04:19.05347+08'::timestamp with time zone))

Buffers: shared hit=16810

Planning time: 1.996 ms

Execution time: 8560.577 ms

(39 rows)

Time: 8563.154 ms (00:08.563) 3. Page count evaluation

If the business allows, we recommended using the evaluation value. The accuracy of the evaluation value depends on the accuracy of the statistical information. By using alter table {table name} alter column {column name} SET STATISTICS 1000, the statistical accuracy of the column can be adjusted, which defaults to 100.

The comparison between the number of evaluation records and the actual number of records is as follows, which is accurate enough:

postgres=# \timing

Timing is on.

-- Number of evaluation records

postgres=# select count_estimate(

$$

select * from bptest_1_20170522 where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08'

$$

);

count_estimate

----------------

4631011

(1 row)

Time: 0.733 ms

-- Actual number of records

postgres=# select count(*) from bptest_1_20170522 where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08';

count

---------

4622534

(1 row)

Time: 1389.424 ms (00:01.389)

-- Number of evaluation records

postgres=# select count_estimate(

$$

select * from bptest_1_20170522

where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08'

and content ~ 'abc' and content ~ 'bcd' and content ~ 'cdef'

$$

);

count_estimate

----------------

914755

(1 row)

Time: 3.713 ms

-- Actual number of records

postgres=# select count(*) from bptest_1_20170522

where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08'

and content ~ 'abc' and content ~ 'bcd' and content ~ 'cdef';

count

--------

962780

(1 row)

Time: 7756.863 ms (00:07.757) 4. Paged query returns

Streaming returns, returning 10 lines only takes 0.562 milliseconds.

postgres=# begin;

BEGIN

Time: 0.170 ms

postgres=# declare cur cursor for select * from bptest_1_20170522

postgres-# where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08'

postgres-# and content ~ 'abc' and content ~ 'bcd'

postgres-# order by ts;

DECLARE CURSOR

postgres=# fetch 10 from cur;

.........................

(10 rows)

Time: 0.562 ms

On the next page, fetch continues to obtain returns. If you want to fetch backward, use the SCROLL cursor.

postgres=# begin;

BEGIN

Time: 0.114 ms

postgres=# declare cur SCROLL cursor for select * from bptest_1_20170522

where ts between '2017-05-22 19:04:19.05347+08' and '2017-05-22 20:04:19.05347+08'

and content ~ 'abc' and content ~ 'bcd'

order by ts;

DECLARE CURSOR

Time: 2.362 ms

Fetch backward

postgres=# fetch BACKWARD 10 from cur;

Fetch forward

postgres=# fetch FORWARD 10 from cur; vi sel.sql

\set uid random(1,100)

\set epoch uses extract (epoch from min (actual data time))

\set tsinterval random(1800,86400)

\set cnt random(1000,10000)

select rand_query(:uid, :epoch, :tsinterval, substring(text(random()),3,6), :cnt);

vi sel.sh

for ((i=1;i<13;i++))

do

. /home/digoal/env_pg10.sh ${i}

nohup pgbench -M prepared -n -r -P 3 -f ./sel.sql -c 2 -j 2 -t 1000 >/tmp/bptest_sel_${i}.log 2>&1 &

doneResource usage when performing query and insert stress testing at the same time

Cpu(s): 84.3%us, 7.8%sy, 0.2%ni, 5.5%id, 0.7%wa, 0.0%hi, 1.4%si, 0.0%st

SSD UTIL: 67%

Mechanical disk UTIL: 8%Performance metrics

Write QPS: 100,000/s

Query QPS: 1.5/sES performs a full-text search on the fields of the audit log. The data is appended (not updated), so it can be associated with the audit log by using line numbers. Create an audit log + a line number (full-text index) in ES

Note that the index built by ES must be able to distinguish the corresponding table names in PG, otherwise, the line number of different tables will repeat.

If table names cannot be distinguished in ES, we recommend using the full-text index of an audit log + a globally unique ID to associate with the data.

Dragging data from PG to ES by using line numbers can omit a PK field and index, greatly improving the data writing performance of PG.

To drag data using line numbers, the query by data block needs to be supported.

– Drag 10,000 pieces at a time

create or replace function gen_tids(blkid int) returns tid[] as

$$

declare

res tid[] := '{}'::tid[];

begin

for x in blkid..(blkid+199) loop

select array_cat(res, array(

SELECT ('('||x||',' || s.i || ')')::tid

FROM generate_series(0, 50) AS s(i)

)

) into res;

end loop;

return res;

end;

$$

language plpgsql strict immutable; At the same time, watch out for and avoid the occurrence of a hole (missing index).

For example, do not query the last data block, which may result in a query hole unless the table is no longer written.

When data is written to PG in parallel, some data in a certain data block may not be submitted yet. However, if the program dragging the data reads this data block, it skips this block the next time, which results in a hole.

Drag data test scripts

Request to drag 10,000 pieces at a time

#!/bin/bash

for ((i=1;i<13;i++))

do

. /home/dege.zzz/env_pg10.sh ${i}

for ((i=1;i<=1000000;i=i+200))

do

echo "start: `date` $((50*$i-50))"

psql -c "explain (analyze,verbose,timing,costs,buffers) select * from rds_logs_1_20170607 where ctid = any (gen_tids($i));" >/dev/null

echo "end: `date` "

done

doneTo improve the performance of dragging data, we recommend that dragging and writing data be kept at a speed level whenever possible. This way, the written data is not flushed to the disk and can be hit in the cache, resulting in higher efficiency of dragging data.

1. Data volume:

For a single UID and a single node, the data volume is 21 million records a day (12 GB and 600 MB for the index). (For 100 nodes/shards, it is about 2.1 billion pieces of data per day for a single user.)

2.1. Write performance (gen_rand_str)

When using the BRIN index, the write rate is 94,700/s

When using the BTree index, the write rate is 79,000/s

2.2. Write performance (random())

When using the BTree index, the write rate is 240,000/s

3. Range query and output ordering

In 2.5 seconds, 4.62 million records are returned.

4. Range query + full-text search query, and output ordering

In 8.5 seconds, 2.94 million records are returned.

5. Page count evaluation

Precision: +- 5%

Response speed: about 1 ms

6. Precise page count

The precise page count is related to the actual amount of data and conditions. More than 1 second

7. Paged query

Range query + full-text search query, and output ordering: about 11 milliseconds for every 1,000 records obtained.

This is related to hit rate. In extreme cases, all records are processed and only the last record meets the conditions.

With jdbc or libpq, you can set up multiple instances of a single connection, by automatically selecting a readable and writable instance in sequence. (This is equivalent to the client automatically performing a failover.)

Configuration example: Assuming you have four database instances, you can configure four data sources as follows:

db1: host1:port1,host2:port2,host3:port3,host4:port4

db2: host2,port2,host3:port3,host4:port4,host1:port1

db3: host3:port3,host4:port4,host1:port1,host2,port2

db4: host4:port4,host1:port1,host2:port2,host3:port3 When any instance fails, each data source can still obtain the next available connection without blocking the write.

When the instance is restored, the preferred instance is still used.

This means availability can be maximized without needing a standby database.

In addition, after the abnormal instances are restored, they continue to be preferred without worrying about skew because no history is preserved. Time smooths out the skew.

Behavior and Audit Log Modeling: PostgreSQL Best Practice (1)

Optimizing Time Series Querying on Alibaba Cloud RDS for PostgreSQL

digoal - May 28, 2019

Alibaba Cloud Community - March 4, 2022

Alibaba Cloud MaxCompute - December 22, 2021

Alibaba Clouder - January 27, 2021

Alibaba Cloud New Products - August 20, 2020

Alibaba Clouder - July 31, 2018

ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Simple Log Service

Simple Log Service

An all-in-one service for log-type data

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by digoal