By Feifei Li

As cloud computing takes hold, the traditional database market is being reshuffled. A group of new players, cloud databases among them, has shaken the monopoly of the traditional database vendors, and the Cloud Native databases led by cloud vendors are pushing this change furthest.

What changes will databases face in the cloud era? What are the unique advantages of Cloud Native databases? At the 2019 DTCC (Database Technology Conference China 2019), Dr. Feifei Li, the vice president of Alibaba, gave a wonderful presentation on the Next Generation of Cloud Native Database Technology and Trend.

Feifei Li (nickname: Fei Dao) is the vice president of Alibaba Group, a senior researcher, the chief database scientist at Alibaba DAMO Academy, the head of Database Products Division of Alibaba Cloud Intelligent Business Group, and a distinguished expert of ACM.

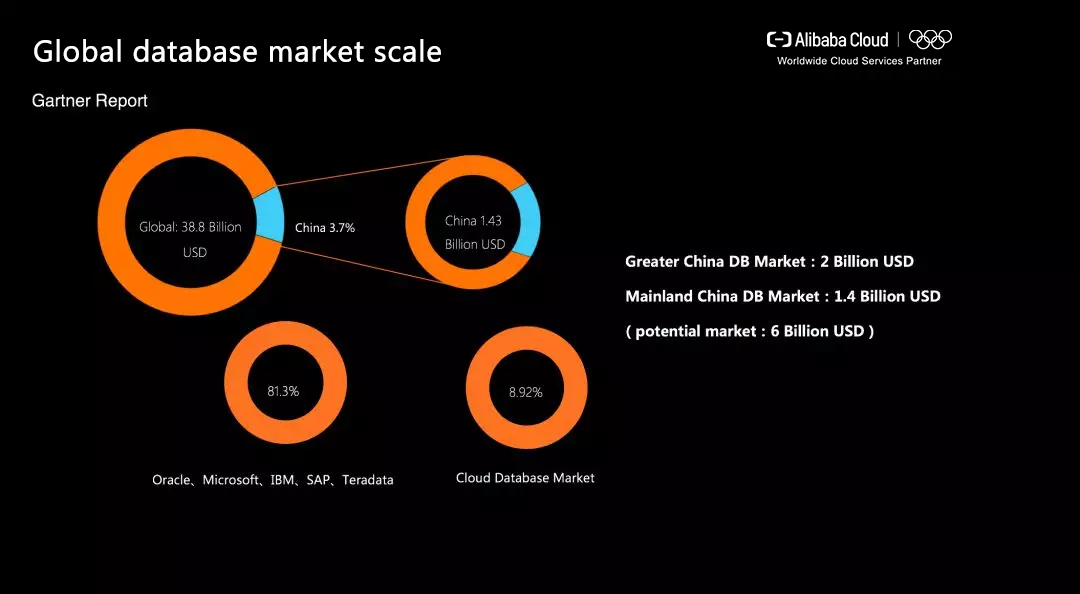

The following figure shows a Gartner report on the global database market. According to the report, the global database market is currently worth about $40 billion, of which China accounts for 3.7%, or about $1.4 billion.

In terms of market distribution, the five traditional database vendors (Oracle, Microsoft, IBM, SAP, and Teradata) account for 80% of the market, while cloud databases account for nearly 10% and are growing rapidly every year. As a result, Oracle and MongoDB are also investing heavily in the cloud database market.

According to the DB-Engines database market analysis, database systems are diversifying, from the traditional transaction processing (TP) relational database to today's multi-source, heterogeneous forms. The database systems we are most familiar with, such as commercial databases (like Oracle and SQL Server) and open-source databases (like MySQL and PostgreSQL), are still in the mainstream. However, some newer database systems, such as MongoDB and Redis, have opened up a new track. Traditional database license sales are gradually declining, while the popularity of open-source and cloud database licenses keeps increasing.

As Jeff Bezos, the founder of Amazon, said: "The real battle will be in databases". The cloud was first built from IaaS. Everything from virtual machines, storage, and networks to today's popular intelligent applications (such as voice recognition, computer vision, and robotics) is based on IaaS, and the database is the most critical link connecting IaaS with intelligent SaaS applications. From data generation to data storage to data consumption, databases are crucial at every step.

Databases fall into four major categories: OLTP, OLAP, NoSQL, and database services and management tools. These are also the four directions that cloud database vendors pursue. OLTP has been developed for 40 years, and it still does one thing: "add 10 RMB here and subtract 10 RMB there", which is what we call transaction processing. As data volumes grew and reads began to conflict with writes, OLAP emerged from the demand for online, real-time analysis of data. NoSQL appeared because of the demand for scaling, which it meets by giving up the guarantee of strong data consistency. More recently, because NoSQL also proved insufficient, the term "NewSQL" emerged: it combines the ACID guarantees of traditional OLTP with the scale-out capability of NoSQL.
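The "add 10 RMB and subtract 10 RMB" transaction can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module purely as a stand-in for an OLTP engine; the `accounts` table, the account names, and the `transfer` helper are hypothetical and not part of the talk.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst atomically; return False if it was rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
            (balance,) = conn.execute(
                "SELECT balance FROM accounts WHERE name = ?", (src,)
            ).fetchone()
            if balance < 0:
                raise ValueError("insufficient funds")
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
        return True
    except ValueError:
        return False

def balances(conn):
    """Return {account name: balance} for every account."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))

# Hypothetical demo data: an in-memory database with two accounts.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])
conn.commit()

ok_small = transfer(conn, "alice", "bob", 10)    # both updates are applied together
ok_large = transfer(conn, "alice", "bob", 1000)  # the debit is undone by the rollback
```

Either both updates take effect or neither does, which is the atomicity part of the ACID guarantee that OLTP systems provide.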

Looking back over the past 40 years of database development: the earliest period, that of relational databases, produced SQL, OLTP, and related technologies. As data volumes grew rapidly, read/write conflicts had to be avoided, so OLAP was implemented through ETL, data warehouses, data cubes, and other technologies. Today, in the face of heterogeneous, multi-source data (from graphs to time series, and from spatio-temporal data to vectors), NoSQL and NewSQL databases have appeared, along with newer technologies such as Multi-Model and HTAP.

The most widely used database system architecture is Shared Memory: processor cores, memory, and local disks are all shared. Traditional database vendors basically all adopt this standalone architecture.

However, with the large-scale development of internet enterprises, such as Google, Amazon, and Alibaba, it is found that the original standalone architecture has many limitations, and its scalability and throughput cannot meet the business development needs. Therefore, the Shared Disk/Storage architecture is derived. That is to say, the bottom layer of the database may be distributed storage. By using a fast network, such as RDMA, the upper-layer database kernel appears to be using local disks, but it is actually distributed storage. The architecture can have multiple independent computing nodes. Generally, Single-Write-Multiple-Read is used, but Multiple-Write-Multiple-Read can also be realized. This is the Shared Storage architecture, and is typically represented by the Alibaba Cloud PolarDB database.

Another architecture is Shared Nothing. Although Shared Storage has many advantages and solves many problems, RDMA also has many limitations. For example, its performance may be compromised when traffic crosses switches, or even availability zones (AZs) and regions. Once distributed shared storage reaches a certain number of nodes, performance degrades to some extent, so accessing remote data cannot be guaranteed to perform exactly the same as accessing local data. As a result, the Shared Storage architecture reaches its scaling limit at a little more than a dozen nodes. What if the application needs to scale further? Then we need a distributed architecture. A typical example is Google Spanner, which uses atomic clock technology to achieve data consistency and transaction consistency across data centers. In Alibaba Cloud, PolarDB-X, the distributed version built on PolarDB, also uses the Shared Nothing architecture.

Note that Shared Nothing and Shared Storage can be integrated. Shared Nothing can be used on the upper layer, while Shared Storage architecture is used for shards on the lower layer. The advantage of such a hybrid architecture is that it can reduce the pain points of too many shards, and reduce the probability of distributed commits for distributed transactions, because the cost of distributed commit is very high.
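A back-of-the-envelope sketch shows why fewer, larger shards mean fewer distributed commits. It assumes, as a deliberately pessimistic and hypothetical model, that every key a transaction touches lands on a uniformly random shard, independently of the others; real workloads usually have more locality. The function name is illustrative.

```python
def cross_shard_probability(num_shards: int, keys_per_txn: int) -> float:
    """Probability that a transaction touches more than one shard.

    Assumption (illustrative only): each key is placed on a uniformly
    random shard, independently of the other keys in the transaction.
    """
    if num_shards < 1 or keys_per_txn < 1:
        raise ValueError("num_shards and keys_per_txn must be positive")
    # All keys must land on the same shard as the first key.
    same_shard = (1.0 / num_shards) ** (keys_per_txn - 1)
    return 1.0 - same_shard

few_large_shards = cross_shard_probability(num_shards=4, keys_per_txn=2)
many_small_shards = cross_shard_probability(num_shards=64, keys_per_txn=2)
```

Under this model, a two-key transaction needs a distributed commit 75% of the time with 4 shards and about 98% of the time with 64 shards, so letting each shard grow through Shared Storage reduces how often the expensive path is taken.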

To sum up the three architecture designs: if the Shared Storage architecture is implemented with Multiple-Write-Multiple-Read instead of Single-Write-Multiple-Read, it effectively becomes Shared Everything. The hybrid architecture that integrates the Shared Nothing and Shared Storage architectures should be an important breakthrough point in the future development of database systems.

The above analyzes the mainstream database architecture in the cloud era from the perspective of architecture. Technically speaking, apart from architecture differences, some differences still exist in the Cloud Native era.

The first is Multi-model, which comes in two types: Northbound Multi-model and Southbound Multi-model. Southbound Multi-model means that the storage structures are diverse: the data can be structured or unstructured, and can take the form of graphs, vectors, documents, and so on. Users, however, are given only a SQL or SQL-like query interface. Typical representatives in the industry are the various data lake services. Northbound Multi-model, by contrast, means that there is only one kind of storage, generally a KV store that holds structured, semi-structured, and unstructured data, while different query interfaces, such as SPARQL, SQL, and GQL, are provided on top. In the industry, this is typically represented by Microsoft Azure CosmosDB.

The Self-Driving of the database is also a very important development direction. Many technical points are available from the perspectives of both the database kernel and the control platform. In terms of Database Self-Driving, Alibaba believes that self-perception, self-decision-making, self-recovery, and self-optimization are required. Self-optimization is relatively simple, that is, using the machine learning method in the kernel for optimization. However, self-perception, self-decision-making, and self-recovery are more aimed at the control platform, such as how to ensure the inspection of instances, and how to automatically and quickly fix or automatically switch when problems arise.

The third core of the Cloud Native database is the integrated design of software and hardware. The database itself is a system, and a system needs to be able to use limited hardware resources safely and efficiently. Therefore, the design and development of the database system must be closely related to the hardware performance and development. We cannot stick to the old database design without making changes when faced with changing hardware. For example, after NVM is released, it may have some impact on the traditional database design. The changes brought about by new hardware also need to be considered in database system design.

The emergence of new hardware and architectures, such as RDMA, NVM, and GPU/FPGA, provides new ideas for database design.

High availability is one of the most basic requirements of Cloud Native. Cloud users do not want service interruption. The simplest solution for high availability is redundancy, which can be Table-level redundancy or Partition-level redundancy. No matter which one is used, it is basically three replicas, and even more often four replicas or five replicas are required. For example, financial-level high availability may require three centers in two locations, or four centers in two locations.

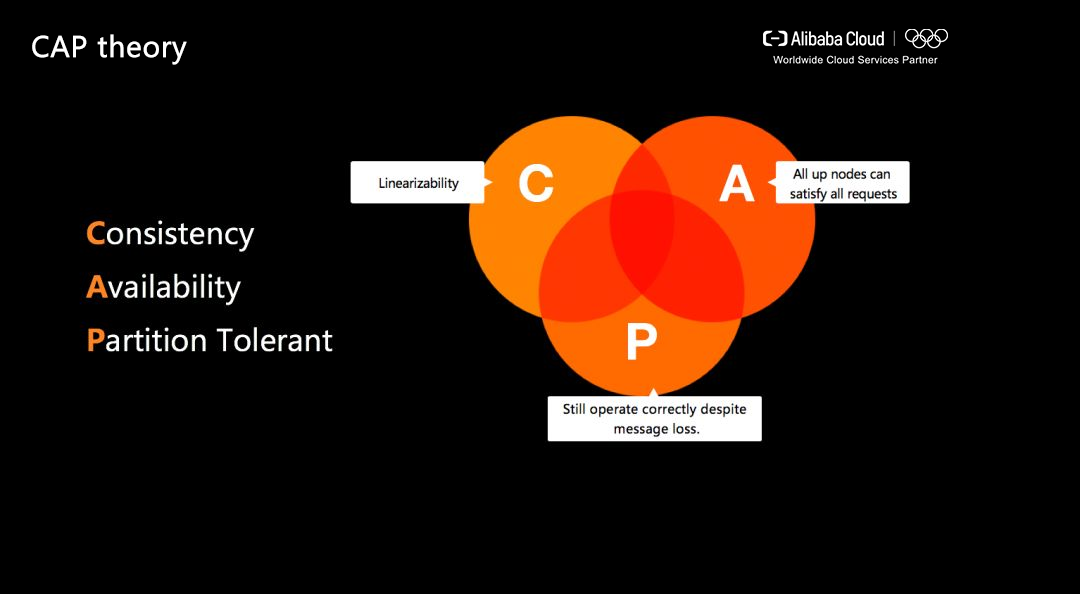

With multiple replicas for high availability, how is data consistency between replicas ensured? The classic CAP theorem applies here: of Consistency, Availability, and Partition tolerance, only two can be fully guaranteed at once. Today, we generally choose C + P. Availability can then reach 99.9999% or 99.99999% through three-replica technology and a distributed consistency protocol, so in practice all three CAP properties are very nearly achieved.
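As a rough illustration of why redundancy raises availability, the following sketch computes the probability that a majority of replicas is up. It assumes, hypothetically, that replicas fail independently and that each one is available with probability `p`. The function name and the independence assumption are illustrative and not taken from the talk, and real availability also depends on failover time and correlated failures.

```python
from math import comb

def quorum_availability(p: float, replicas: int = 3) -> float:
    """Probability that at least a majority of `replicas` is available.

    Assumption (illustrative only): each replica is up with probability p,
    independently of the others.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    majority = replicas // 2 + 1
    return sum(
        comb(replicas, k) * p**k * (1 - p) ** (replicas - k)
        for k in range(majority, replicas + 1)
    )

single_node = 0.999
three_replicas = quorum_availability(single_node, replicas=3)
five_replicas = quorum_availability(single_node, replicas=5)
```

With three replicas the result simplifies to `3p^2 - 2p^3`, so under these assumptions a 99.9% node becomes a roughly 99.9997% majority quorum, and five replicas do better still.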

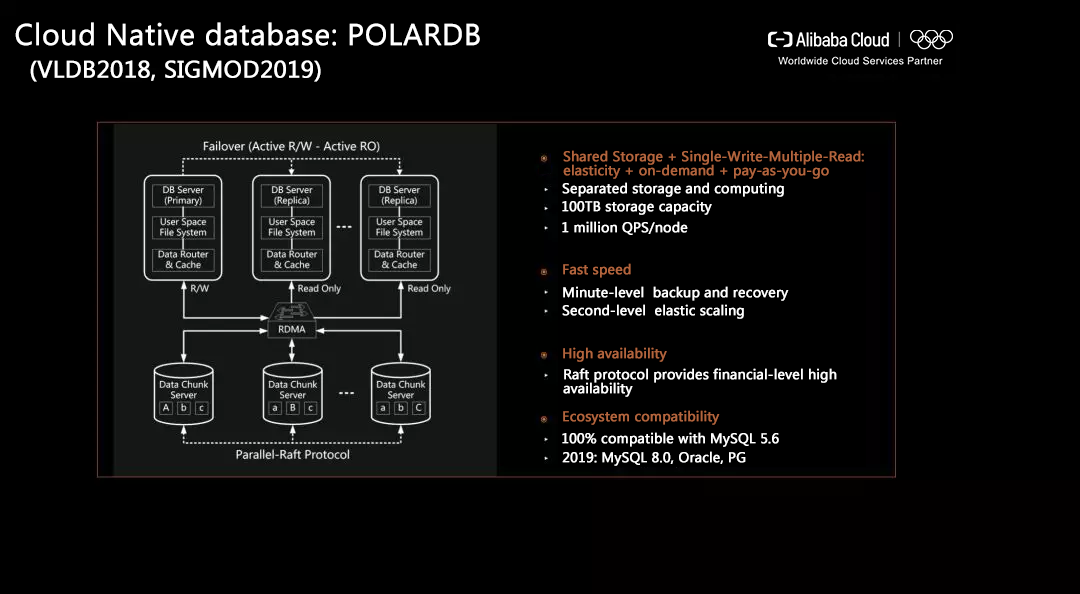

PolarDB, a Cloud Native database with ultimate elasticity and compatibility, is created for massive data and massive concurrency. The background of the database market and the basic elements of the Cloud Native database are introduced above. Next, I will share the specific implementation of the above technologies with two Database Systems: Alibaba Cloud PolarDB and AnalyticDB. PolarDB is a Cloud Native database of Alibaba Cloud, and has a deep accumulation of technical knowledge. We have published papers, which mainly introduce technological innovations in storage engines, at international academic conferences, such as VLDB 2018 and SIGMOD 2019.

PolarDB adopts the Shared Storage architecture with Single-Write-Multiple-Read. The Shared Storage architecture has several advantages. First, computing and storage are separated, and computing and storage nodes can be elastically scaled. Second, PolarDB breaks through the limitations of MySQL, PG, and other databases on single-node specifications and scalability, and can achieve 100 TB storage capacity and 1 million QPS per node. In addition, PolarDB can provide ultimate elasticity and greatly improve backup and recovery capabilities. At the storage layer, each data block uses a three-replica high availability technology, and the Raft protocol is modified into a parallel Raft protocol that ensures data consistency among the three replicas of each data block, thus providing financial-level high availability. PolarDB is 100% compatible with database ecosystems, such as MySQL and PG, and can help users achieve imperceptible application migration.
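To make the three-replica consistency idea concrete, here is a minimal sketch of the majority rule used by the standard Raft protocol: a log entry counts as committed once a majority of replicas have stored it. This is plain Raft, not PolarDB's parallel variant, and it omits term checks and leader election. The replica names and function names are hypothetical.

```python
from __future__ import annotations

def is_committed(acks: set[str], replicas: list[str]) -> bool:
    """True once a majority of the replica group has acknowledged the entry."""
    majority = len(replicas) // 2 + 1
    return len(acks & set(replicas)) >= majority

def commit_index(match_index: dict[str, int]) -> int:
    """Highest log index that is stored on a majority of replicas.

    match_index maps each replica (leader included) to the last log
    index known to be stored on it.
    """
    ordered = sorted(match_index.values(), reverse=True)
    # The element at position n // 2 is held by at least n // 2 + 1 replicas.
    return ordered[len(ordered) // 2]

group = ["replica-a", "replica-b", "replica-c"]   # hypothetical names
one_ack = is_committed({"replica-a"}, group)
two_acks = is_committed({"replica-a", "replica-c"}, group)
safe_index = commit_index({"replica-a": 7, "replica-b": 5, "replica-c": 3})
```

With three replicas, one slow or failed replica does not block a write, because two acknowledgements already form a majority.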

Because the bottom layer is shared distributed storage, PolarDB has an Active-Active architecture. The primary node is responsible for writing data, while the standby nodes are responsible for reading data, so for transactions entering the database, both the primary and standby nodes are active. The advantage is that, because all nodes share one physical storage, continuous data synchronization between the primary and standby nodes is avoided.

Specifically, PolarProxy, the gateway proxy, sits at the front. Below it are the PolarDB kernel and PolarFS, and at the bottom is PolarStore, which uses the RDMA network to manage the underlying distributed shared storage. PolarProxy inspects incoming requests, sends write requests to the primary node, and distributes read requests according to load balancing and the status of the read nodes, so as to maximize resource utilization and improve performance as much as possible.

PolarDB uses distributed and three-replica Shared Storage. The primary node is responsible for writing, while other nodes are responsible for reading. The lower layer is PolarStore. Each part is backed up with three replicas, and data consistency is ensured through distributed consistency protocol. The advantage of this design is that the storage and computing can be separated, and lockless backup can be achieved, so backup can be achieved in seconds.

In the case of Single-Write-Multiple-Read, PolarDB supports fast scaling. For example, upgrading from a 2-core vCPU to a 32-core vCPU, or scaling from 2 nodes to 4 nodes can take effect within five minutes. Another benefit of separation of storage and computing is cost reduction, because storage and computing nodes can be elastically scaled independently, which fully reflects the cost advantage.

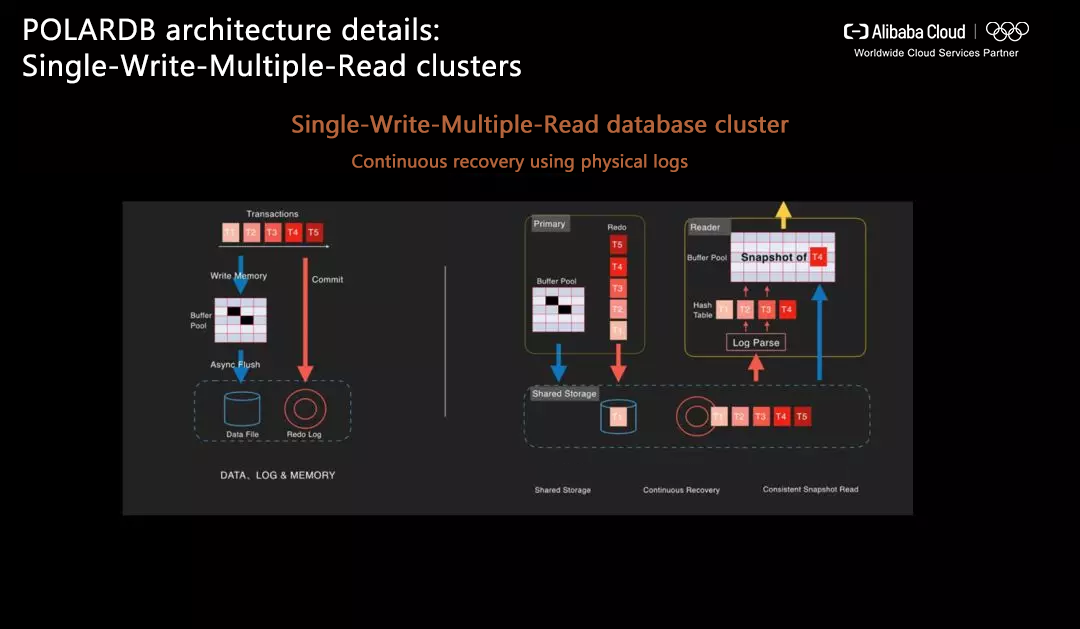

The following figure shows how PolarDB uses physical logs for continuous recovery. The left side shows the architecture of traditional databases. Because PolarDB uses Shared Storage, it can largely retain the traditional process of recovery from physical logs, and it can recover continuously through Shared Storage by performing snapshot recovery of transactions.

Let's make a comparison. If MySQL uses the primary-standby architecture, the primary database needs both a logical log and a physical log. The standby database must first replay the logical log of the primary database, and then write its own logical log and physical log in the same way as the primary. PolarDB, however, uses Shared Storage, so data can be recovered directly from a single log. The standby database can recover the data it needs without replaying the logical logs of the primary database.

Another major advantage of the PolarDB Single-Write-Multiple-Read cluster is its support for dynamic DDL. In the MySQL architecture, a schema change has to be replayed to the standby database through the Binlog. The standby database therefore goes through a blocking stage, and replaying the DDL takes some time. In the PolarDB Shared Storage architecture, by contrast, all schema information and metadata are stored directly in the storage engine in the form of tables. As soon as the primary database is changed, the metadata of the standby database is updated in real time, so no blocking occurs.

PolarDB Proxy is mainly used for read/write splitting, load balancing, high-availability switching, and security protection. PolarDB uses the Single-Write-Multiple-Read architecture. When a request comes in, read/write judgment is required to distribute the write request to the write node, and distribute the read request to the Read node. In addition, load balancing is performed for read requests. In this way, session consistency can be ensured, and the problem of not being able to read the latest data can be completely solved.
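The routing logic described above can be sketched as follows. This is a simplified illustration under stated assumptions, not PolarProxy's implementation: statements are classified by a naive keyword prefix, load is approximated by a connection count, and session consistency is modeled by remembering the log sequence number (LSN) of each session's last write and reading only from nodes that have caught up to it. All class, field, and node names are hypothetical.

```python
from dataclasses import dataclass, field

# Naive classification: anything starting with one of these goes to the primary.
WRITE_PREFIXES = ("insert", "update", "delete", "replace", "create", "alter", "drop")

@dataclass
class Node:
    name: str
    active_connections: int = 0
    applied_lsn: int = 0  # how far this node has applied the log

@dataclass
class Proxy:
    primary: Node
    replicas: list
    session_lsn: dict = field(default_factory=dict)  # session id -> LSN of last write

    def route(self, session: str, sql: str) -> Node:
        if sql.lstrip().lower().startswith(WRITE_PREFIXES):
            # Stand-in for the primary assigning a new LSN to this write.
            self.primary.applied_lsn += 1
            self.session_lsn[session] = self.primary.applied_lsn
            return self.primary
        # Reads: only replicas that already reflect this session's last write.
        needed = self.session_lsn.get(session, 0)
        fresh = [r for r in self.replicas if r.applied_lsn >= needed]
        if not fresh:
            return self.primary  # fall back rather than return stale data
        return min(fresh, key=lambda r: r.active_connections)

ro1 = Node("ro1", active_connections=5)
ro2 = Node("ro2", active_connections=2)
proxy = Proxy(primary=Node("rw"), replicas=[ro1, ro2])

first_read = proxy.route("s1", "SELECT 1").name              # least-loaded replica
write = proxy.route("s1", "UPDATE t SET x = 1").name         # always the primary
read_after_write = proxy.route("s1", "SELECT x FROM t").name # replicas are behind
ro1.applied_lsn = 1                                          # ro1 catches up
read_when_fresh = proxy.route("s1", "SELECT x FROM t").name
other_session = proxy.route("s2", "SELECT x FROM t").name    # no freshness constraint
```

The fallback to the primary is one possible design choice; a proxy could also wait briefly for a replica to catch up before serving the read.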

Lossless elasticity is another capability that PolarDB provides through monitoring. With distributed storage, the system must know how many chunks to allocate, so PolarDB monitors the number of unused chunks. When less than 30% is available, for example, the back end scales up automatically; the application sees no obvious effect and can keep writing data continuously.
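The threshold check can be sketched in a few lines. The 30% trigger comes from the example above; the 50% target to scale back up to, along with the function and parameter names, is a hypothetical choice made for illustration.

```python
import math

def chunks_to_add(total: int, used: int,
                  min_free_ratio: float = 0.30,
                  target_free_ratio: float = 0.50) -> int:
    """Number of chunks to provision so the free ratio returns to the target.

    Returns 0 while the free ratio is still at or above min_free_ratio.
    The target ratio is an assumed policy, not a documented PolarDB value.
    """
    if total <= 0 or not 0 <= used <= total:
        raise ValueError("expected 0 <= used <= total and total > 0")
    free = total - used
    if free / total >= min_free_ratio:
        return 0
    # Smallest x with (free + x) / (total + x) >= target_free_ratio.
    needed = (target_free_ratio * total - free) / (1 - target_free_ratio)
    return max(0, math.ceil(needed))

healthy = chunks_to_add(total=100, used=60)   # 40% free: nothing to do
low = chunks_to_add(total=100, used=80)       # 20% free: scale up
```

In the second case, adding 60 chunks brings the pool to 80 free chunks out of 160, which is the assumed 50% target.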

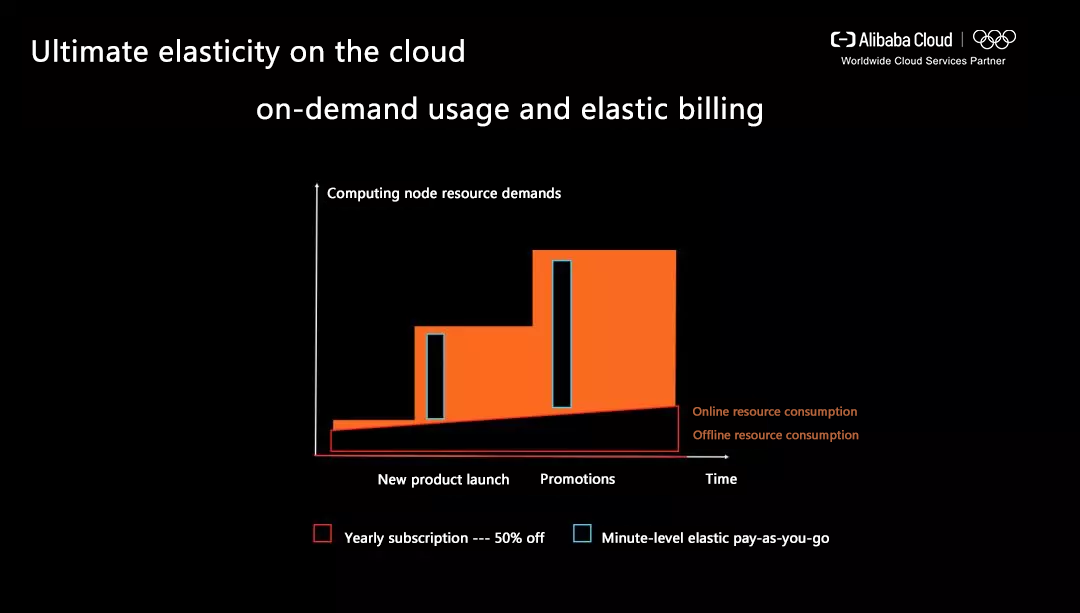

For the cloud database PolarDB, the biggest advantage of the above technologies is the ultimate elasticity. Here, let's take a specific customer case to illustrate. As shown in the following figure, the red line refers to the consumption of offline resources. These costs must be paid by the customer in any case, while the part above is the demand for computing resources.

For example, customers may have new products to be launched in March and April, and promotions in May. In these two periods, the computing demand will be very high. For the traditional architecture, it may be necessary to scale the capacity to a larger scale before the new product is launched, and maintain such a level. In the subsequent promotion phase, the capacity needs to be scaled to a further higher specification, which is costly. However, if ultimate elasticity can be achieved (for example, the storage and computing in PolarDB are separated to realize rapid elastic scaling), you only need to scale up the capacity before the Blue Square appears, and then scale it down, thus greatly reducing the cost.
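The cost difference can be made concrete with a small worked example. All numbers are hypothetical: monthly demand is expressed in abstract compute units, the price is one cost unit per compute unit per month, and the traditional model is assumed never to scale back down once capacity has been raised.

```python
def provisioned_cost(demand, price_per_unit=1):
    """Traditional model (assumed): capacity only ratchets up and is then kept."""
    capacity = 0
    cost = 0
    for needed in demand:
        capacity = max(capacity, needed)
        cost += capacity * price_per_unit
    return cost

def elastic_cost(demand, price_per_unit=1):
    """Elastic model: pay each month only for what that month needs."""
    return sum(needed * price_per_unit for needed in demand)

# Hypothetical year: baseline of 4 units, product launch in March and April
# (10 units), promotion in May (16 units), then back to the baseline.
monthly_demand = [4, 4, 10, 10, 16, 4, 4, 4, 4, 4, 4, 4]

fixed = provisioned_cost(monthly_demand)
elastic = elastic_cost(monthly_demand)
```

In this made-up scenario the ratcheting model costs 156 units for the year against 72 for the elastic one; the exact ratio depends entirely on how spiky the demand is.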

In addition to the Cloud Native database PolarDB, the Alibaba Cloud ApsaraDB team has also explored other directions extensively.

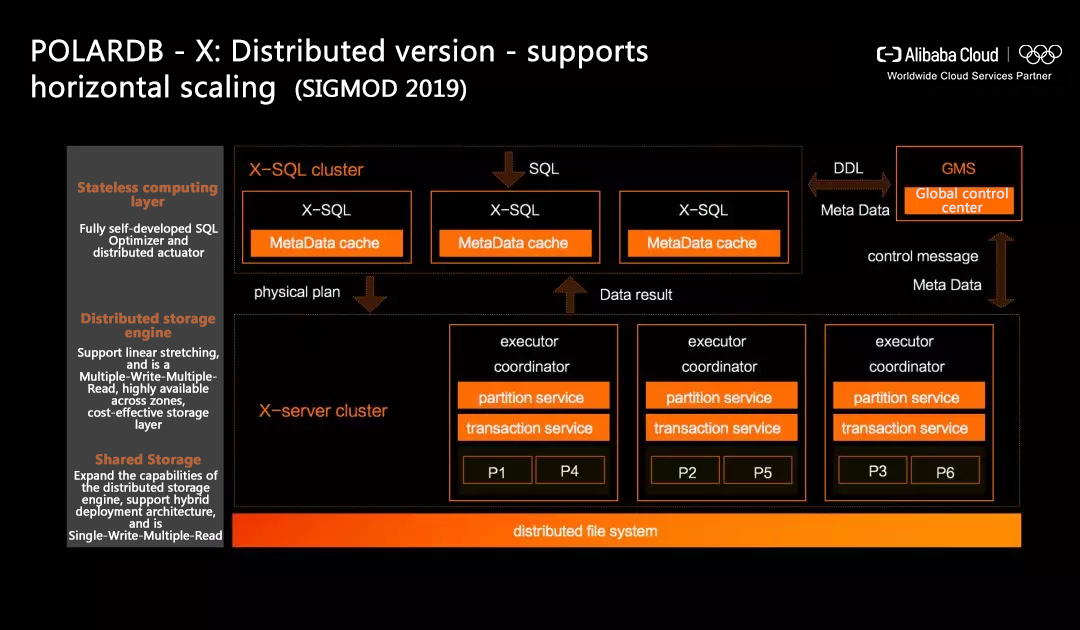

PolarDB-X, the distributed version, is highly concurrent, highly available across zones, and supports horizontal scaling. Some enterprises need extreme scaling capabilities: Alibaba itself, for example, or banks and power companies in traditional industries that require extremely high concurrency and massive data support. For them, the Shared Storage architecture, which scales only to about a dozen nodes, is definitely not enough. Therefore, the Alibaba Cloud ApsaraDB team also uses Shared Nothing for horizontal scaling, and combines Shared Nothing with Shared Storage to form PolarDB-X. PolarDB-X supports financial-level strong data consistency across availability zones and performs very well in highly concurrent transaction processing over massive data. PolarDB-X is already in use within Alibaba. Using technologies such as storage and computing separation, hardware acceleration, distributed transaction processing, and distributed query optimization, it has withstood the peak traffic of the Double 11 Shopping Spree across all of Alibaba's core business database links. We will launch the commercial version in the future. Stay tuned.

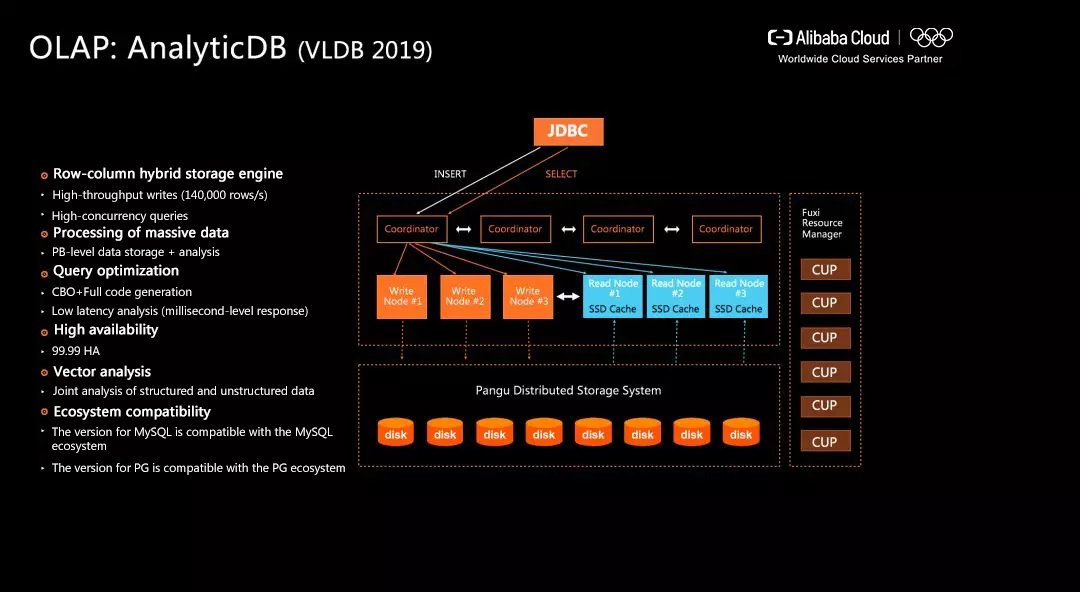

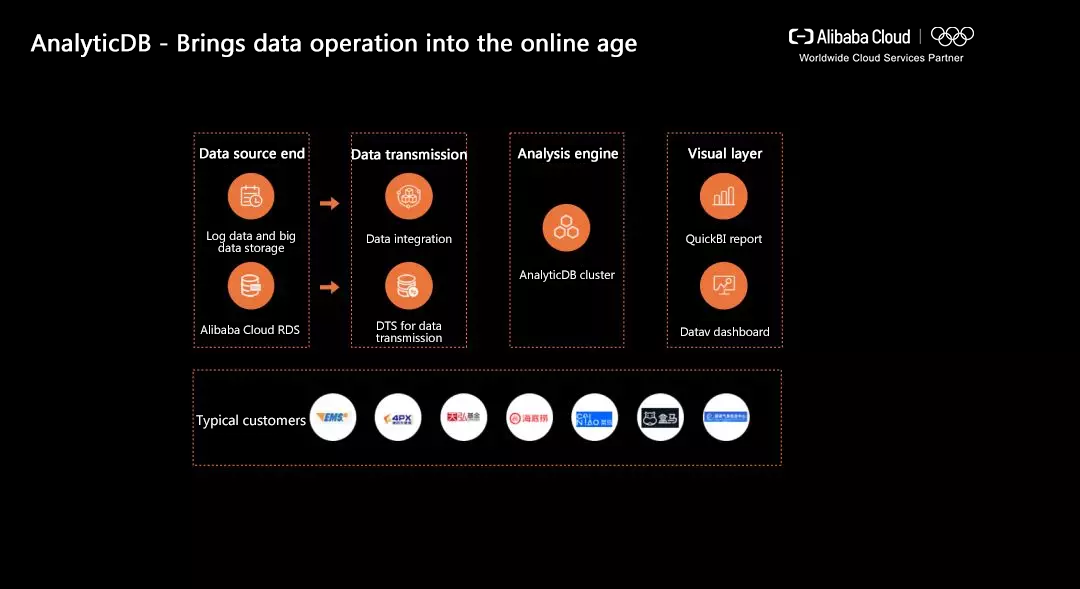

In addition, in the direction of OLAP analytical database, the Alibaba Cloud ApsaraDB team independently developed the database product - AnalyticDB, which is available on both the public and private clouds of Alibaba Cloud. AnalyticDB has several core architecture features:

Recently, AnalyticDB ranked first in the world in cost performance on the TPC-DS benchmark and passed the strict official TPC certification. Moreover, the paper on the AnalyticDB system will be presented at the VLDB 2019 conference. A common application scenario for AnalyticDB is to synchronize data from OLTP applications to AnalyticDB through the DTS tool for real-time data analysis.

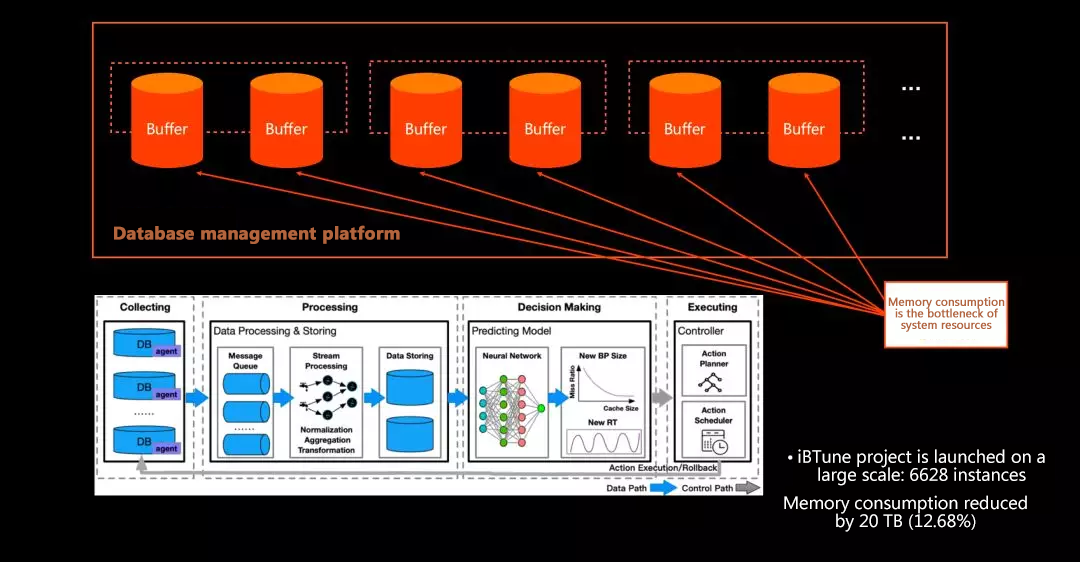

Self-driving database platform: iBTune (individualized Buffer Tuning)

One of the features of Cloud Native databases is Self-Driving. Alibaba Cloud has an internal platform called SDDP (Self-Driving Database Platform), which collects performance data of each database instance in real time, and uses the machine learning method to model for real-time allocation.

The basic idea of iBTune is that each database instance contains a Buffer Size, which is allocated in advance in traditional databases and cannot be changed. In large enterprises, Buffer is a resource pool that consumes memory. Therefore, it is hoped to flexibly and automatically allocate the Buffer Size for each instance. For example, if the database instance of a Taobao commodity library does not need a large Buffer at night, the Buffer Size can be automatically scaled down, then automatically scaled up in the morning, without affecting the RT. To meet the above requirements and implement automatic Buffer optimization, the Alibaba Cloud ApsaraDB team has built an iBTune system. Currently, it monitors nearly 7000 database instances, and can save an average of 20 TB of memory in the long run. The core technical paper introducing the iBTune project will also be presented at the VLDB 2019 conference.
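The general idea of resizing a buffer from observed behavior can be illustrated with a deliberately simple feedback rule. This is not the iBTune algorithm, whose details are in the paper mentioned above; it is a hypothetical heuristic that grows the buffer when the cache miss ratio exceeds a tolerable level and shrinks it when the miss ratio is comfortably below that level. All names, thresholds, and step sizes are assumptions.

```python
def next_buffer_size(current_gb: float, miss_ratio: float,
                     tolerable_miss_ratio: float, step: float = 0.1,
                     min_gb: float = 1.0, max_gb: float = 512.0) -> float:
    """Propose the next buffer size for one instance (hypothetical heuristic).

    - miss ratio above the tolerable level: grow by `step` (10% by default)
    - miss ratio below half the tolerable level: shrink by `step`
    - otherwise: leave the size unchanged
    """
    if miss_ratio > tolerable_miss_ratio:
        proposed = current_gb * (1 + step)
    elif miss_ratio < tolerable_miss_ratio / 2:
        proposed = current_gb * (1 - step)
    else:
        proposed = current_gb
    # Keep the result inside the allowed range.
    return min(max_gb, max(min_gb, proposed))

night = next_buffer_size(100.0, miss_ratio=0.001, tolerable_miss_ratio=0.01)
morning = next_buffer_size(100.0, miss_ratio=0.02, tolerable_miss_ratio=0.01)
steady = next_buffer_size(100.0, miss_ratio=0.008, tolerable_miss_ratio=0.01)
```

The dead band between half the tolerable level and the tolerable level keeps the size from oscillating when the workload is stable.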

Data security on the cloud is very important. The Alibaba Cloud ApsaraDB team has also done a lot of work in data security. First, the data is encrypted when it is stored in the disk. In addition, the Alibaba Cloud ApsaraDB supports BYOK. Users can bring their own keys to the cloud to implement disk encryption and transmission-level encryption. In the future, the Alibaba Cloud ApsaraDB will implement full-process encryption during memory processing, and trusted verification of logs.

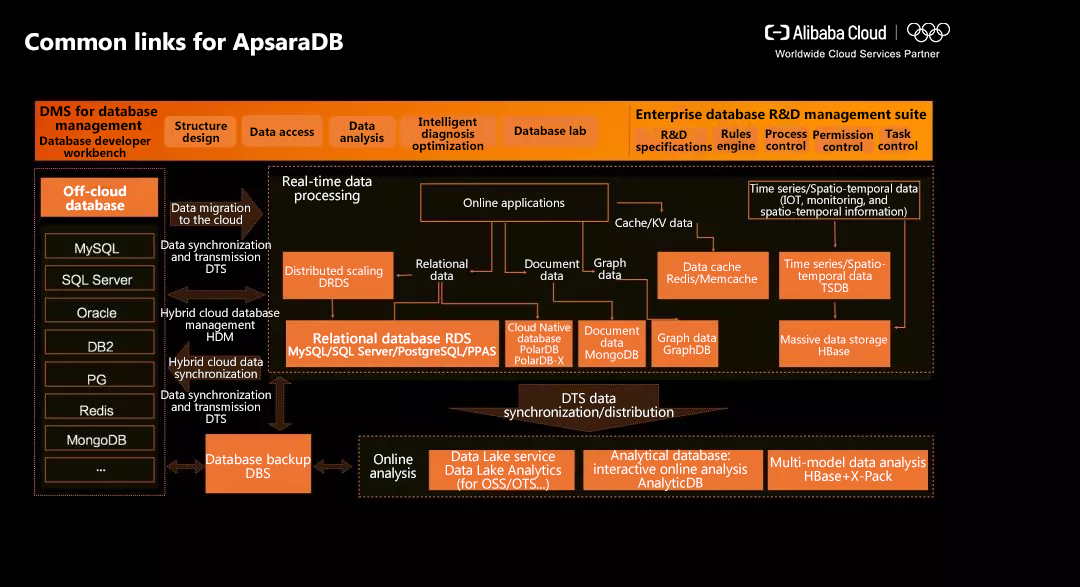

Alibaba Cloud ApsaraDB provides services according to tool products, engine products, and full-process database products under operation control. The following figure shows the common links of Alibaba Cloud ApsaraDB. Offline databases are migrated online through DTS, and distributed to relational databases, graph databases, and AnalyticDB based on data requirements and classifications.

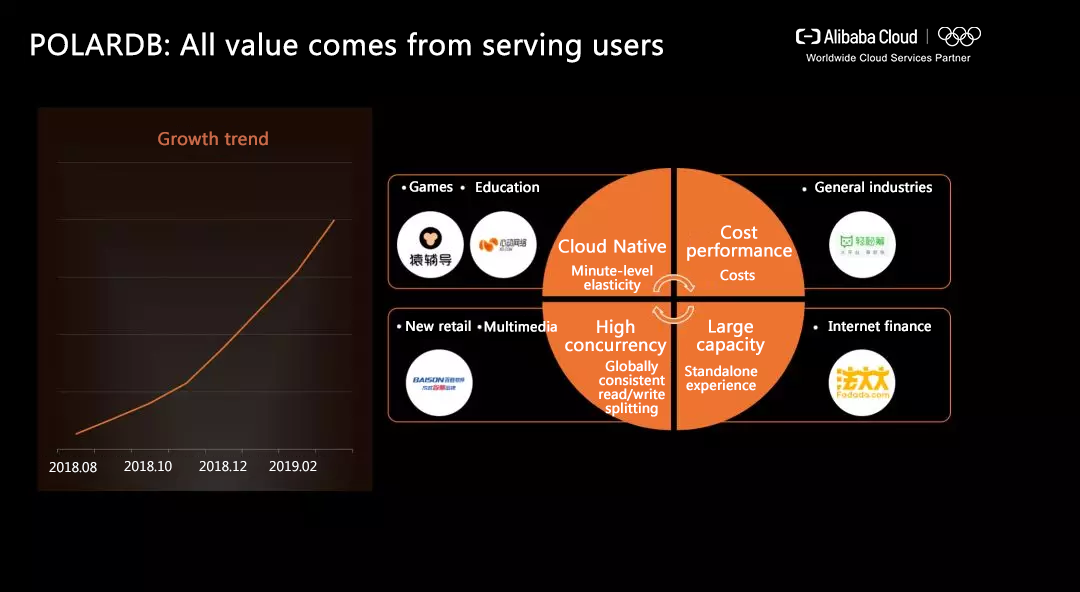

Currently, PolarDB is growing rapidly and has served leading enterprises in many fields, such as general industries, Internet finance, games, education, new retail, and multimedia.

In addition, AnalyticDB also performs outstandingly in the analytical database market, supporting real-time analysis and visualization applications.

Based on the Alibaba Cloud ApsaraDB technology, Alibaba supports a series of key projects, such as City Brain, and a large number of customers on and off the cloud. So far, Alibaba Cloud ApsaraDB has helped migrate a total of nearly 400,000 database instances to the cloud.

Cloud Native is a new battlefield for databases. It has brought many exciting new challenges and opportunities to the database industry that has been developing for more than 40 years. Alibaba hopes to push the database technology to a higher level with all technical colleagues in the database industry at home and abroad.
