HDFS (Hadoop Distributed File System) is a distributed file system designed to run on commodity hardware. It has much in common with existing distributed file systems, but the differences are also significant. HDFS is highly fault-tolerant and is designed to be deployed on low-cost machines. It provides high-throughput data access, which makes it well suited to applications with large data sets. HDFS relaxes some POSIX requirements to enable streaming access to file system data. HDFS was originally built as infrastructure for the Apache Nutch search engine project and is now part of the Apache Hadoop Core project.

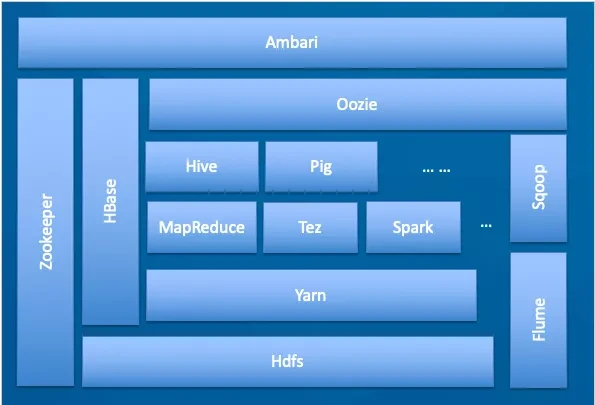

Let's first look at the overall Hadoop architecture, in which HDFS provides storage for the other Hadoop components.

For a direct comparison, here is the output of the ls command on a Linux file system and on HDFS.

Linux:

#ls -l

-rw-r--r-- 1 root root 20 Jul 29 14:31 ms-server-download-zzzc_88888.idx

-rw-r--r-- 1 root root 8 Jul 29 14:31 ms-server-download-zzzc_88888.dat

HDFS:

#hadoop fs -ls /tmp/

-rw-r--r-- 2 xitong supergroup 181335139 2019-07-17 23:00 /tmp/java.tar.gz

-rw-r--r-- 2 xitong supergroup 181335139 2019-07-17 23:00 /tmp/jdk.tar.gz

The two listings look very similar: both show the permissions, the owning user, the user's group, and the file name. There are differences, however. In the HDFS listing the second column is the file's replication factor (the number of copies), whereas in the Linux listing it is the hard-link count.

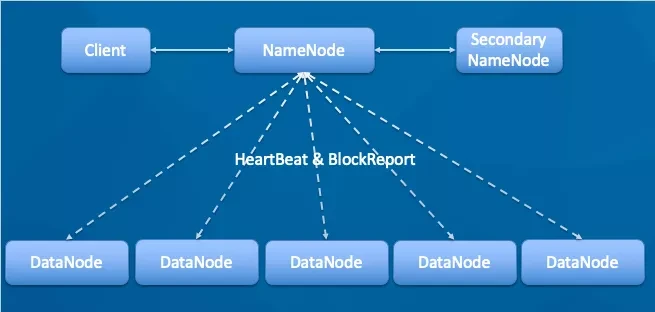

HDFS uses a master/slave architecture and consists of four parts:

HDFS Client, NameNode, DataNode, and Secondary NameNode.

Client:

File splitting: when a file is uploaded to HDFS, the Client splits it into blocks and then stores them.

Interacts with the NameNode to obtain the locations of a file's blocks.

Interacts with DataNodes to read and write data.

Provides commands to manage and access HDFS, such as starting and stopping HDFS.
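To make the splitting step concrete, here is a minimal Python sketch that computes block boundaries for a file. It assumes the 128 MB default block size used since Hadoop 2.x (configurable through `dfs.blocksize`); the function name `split_into_blocks` is hypothetical, and the real client streams each block to DataNodes rather than computing a list up front.

```python
from __future__ import annotations

# Assumed default block size: 128 MB, as in Hadoop 2.x+ (dfs.blocksize).
DEFAULT_BLOCK_SIZE = 128 * 1024 * 1024


def split_into_blocks(file_size: int, block_size: int = DEFAULT_BLOCK_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) for each block of a file of file_size bytes."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        # Every block is full-size except possibly the last one.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    # Size of /tmp/java.tar.gz from the HDFS listing.
    for i, (offset, length) in enumerate(split_into_blocks(181335139)):
        print(f"block {i}: offset={offset}, length={length}")
```

For the 181,335,139-byte `/tmp/java.tar.gz` in the listing, this yields two blocks: a full 134,217,728-byte block and a final 47,117,411-byte block. The final block occupies only its actual size on disk, not a full 128 MB.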

NameNode: the master, which manages the file system.

Manages the HDFS namespace.

Manages the mapping information of data blocks.

Configures the replication policy.

Handles client read and write requests.
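The namespace and block-mapping metadata can be pictured as two maps: the namespace (file path to block IDs) and the block map (block ID to the DataNodes holding replicas). The Python sketch below is a toy model under those assumptions; `ToyNameNode` and its methods are hypothetical names, not the NameNode's real classes or RPC API. As in real HDFS, block locations are filled in from DataNode block reports rather than recorded when the file is created.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyNameNode:
    """Hypothetical in-memory model of NameNode metadata (not the real API)."""

    # Namespace: file path -> ordered list of block IDs.
    namespace: dict[str, list[int]] = field(default_factory=dict)
    # Block map: block ID -> IDs of DataNodes that hold a replica.
    block_map: dict[int, set[str]] = field(default_factory=dict)
    next_block_id: int = 0

    def add_file(self, path: str, num_blocks: int) -> list[int]:
        # Allocate new block IDs; locations stay unknown until DataNodes report.
        ids = list(range(self.next_block_id, self.next_block_id + num_blocks))
        self.next_block_id += num_blocks
        self.namespace[path] = ids
        for block_id in ids:
            self.block_map.setdefault(block_id, set())
        return ids

    def block_report(self, datanode: str, block_ids: list[int]) -> None:
        # A DataNode tells the NameNode which block replicas it stores.
        for block_id in block_ids:
            self.block_map.setdefault(block_id, set()).add(datanode)

    def get_block_locations(self, path: str) -> list[tuple[int, list[str]]]:
        # What a client needs before reading: each block and where it lives.
        return [(b, sorted(self.block_map.get(b, set()))) for b in self.namespace[path]]


if __name__ == "__main__":
    nn = ToyNameNode()
    nn.add_file("/tmp/java.tar.gz", num_blocks=2)  # block IDs 0 and 1
    nn.block_report("dn1", [0, 1])
    nn.block_report("dn2", [0])
    nn.block_report("dn3", [1])
    print(nn.get_block_locations("/tmp/java.tar.gz"))
    # [(0, ['dn1', 'dn2']), (1, ['dn1', 'dn3'])]
```

Each block here ends up with two replicas on different DataNodes, matching the replication factor of 2 shown in the HDFS listing.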

DataNode: the slave. The NameNode issues commands, and the DataNodes carry out the actual operations.

Stores the actual data blocks.

Performs read and write operations on data blocks.

Secondary NameNode: not a hot standby for the NameNode. When the NameNode goes down, the Secondary NameNode cannot immediately take its place and serve requests.

Assists the NameNode and shares part of its workload.

Periodically merges the fsimage and edits files and pushes the result back to the NameNode.

In an emergency, can help recover the NameNode.
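Merging fsimage with the edit log amounts to replaying the logged operations on top of the last saved image. The Python sketch below models fsimage as a dictionary and the edit log as a list of operation tuples; both representations and the operation names are hypothetical (the real files are binary), and deletion is non-recursive here for brevity.

```python
from __future__ import annotations

import copy


def checkpoint(fsimage: dict[str, dict], edits: list[tuple]) -> dict[str, dict]:
    """Replay an edit log onto an fsimage snapshot and return the merged image.

    Hypothetical op format:
      ("mkdir", path)
      ("create", path, replication)
      ("delete", path)                 # non-recursive in this sketch
      ("set_replication", path, replication)
    """
    image = copy.deepcopy(fsimage)  # leave the previous checkpoint untouched
    for op, path, *args in edits:
        if op == "mkdir":
            image[path] = {"type": "dir"}
        elif op == "create":
            image[path] = {"type": "file", "replication": args[0]}
        elif op == "delete":
            image.pop(path, None)
        elif op == "set_replication":
            image[path]["replication"] = args[0]
        else:
            raise ValueError(f"unknown edit op: {op!r}")
    return image


if __name__ == "__main__":
    old_image = {"/": {"type": "dir"}}
    edits = [
        ("mkdir", "/tmp"),
        ("create", "/tmp/java.tar.gz", 2),
        ("create", "/tmp/old.log", 2),
        ("delete", "/tmp/old.log"),
        ("set_replication", "/tmp/java.tar.gz", 3),
    ]
    new_image = checkpoint(old_image, edits)
    print(sorted(new_image))  # ['/', '/tmp', '/tmp/java.tar.gz']
```

After the merge, the new image can replace the old one and the edit log can start empty, which spares the NameNode from replaying a long edit log when it restarts.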

Suitable application scenarios

Storing very large files: files hundreds of megabytes, gigabytes, or even terabytes in size, where high throughput matters and latency is not a concern.

Streaming data access: write once, read many times. A data set is typically generated or copied from a data source once, and then analyzed extensively.

Running on inexpensive hardware: HDFS does not require high-performance machines; it runs on ordinary commodity machines, which saves cost.

High fault tolerance: HDFS stores multiple replicas of each block, so losing or corrupting some replicas does not affect the integrity of a file, as long as at least one intact replica of every block remains.

Horizontal scalability: HDFS works well as a data storage system that can be scaled out by adding machines.
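The multi-replica guarantee can be stated as a simple rule: a file stays readable as long as every one of its blocks still has a replica on a live DataNode. The Python sketch below expresses that rule and also lists blocks that have dropped below the target replication factor, which the NameNode would schedule for re-replication; the function names and the dictionary layout are hypothetical.

```python
from __future__ import annotations


def file_is_readable(block_locations: dict[int, set[str]], failed: set[str]) -> bool:
    """True if every block still has at least one replica on a live DataNode."""
    return all(nodes - failed for nodes in block_locations.values())


def under_replicated(block_locations: dict[int, set[str]], failed: set[str], replication: int) -> list[int]:
    """Block IDs whose live replica count is below the target replication."""
    return sorted(
        block for block, nodes in block_locations.items()
        if len(nodes - failed) < replication
    )


if __name__ == "__main__":
    # Two blocks, replication factor 2, replicas on distinct DataNodes.
    locations = {0: {"dn1", "dn2"}, 1: {"dn1", "dn3"}}
    print(file_is_readable(locations, failed={"dn1"}))          # True
    print(under_replicated(locations, {"dn1"}, replication=2))  # [0, 1]
    print(file_is_readable(locations, failed={"dn1", "dn2"}))   # False: block 0 lost
```

With a replication factor of 2, losing any single DataNode leaves the file readable; losing both holders of the same block does not.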

Unsuitable application scenarios

Low-latency data access: HDFS is not suitable for applications that require millisecond-level latency. It is designed for high-throughput data transfer, at the cost of higher latency.

Large numbers of small files: HDFS keeps file metadata in the NameNode's memory, so the number of files is limited by the NameNode's memory size.

As a rule of thumb, each file, directory, or block takes about 150 bytes of NameNode memory. One million files that each occupy one block amount to two million objects and therefore need about 300 MB of memory. Billions of files are thus difficult to support on today's commodity servers.
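The arithmetic above can be turned into a quick estimator. This Python sketch uses the 150-byte rule of thumb from the text and counts one object per file plus one per block, ignoring directories; it is a rough estimate rather than a sizing formula from the Hadoop documentation, and `namenode_heap_estimate` is a hypothetical name.

```python
BYTES_PER_OBJECT = 150  # rule-of-thumb figure from the text, not an exact size


def namenode_heap_estimate(num_files: int, blocks_per_file: int = 1) -> int:
    """Rough NameNode memory (bytes) for file and block objects; directories ignored."""
    objects = num_files * (1 + blocks_per_file)  # one file object plus its blocks
    return objects * BYTES_PER_OBJECT


if __name__ == "__main__":
    for n in (1_000_000, 1_000_000_000):
        print(f"{n:,} files -> about {namenode_heap_estimate(n) / 1e6:,.0f} MB")
    # 1,000,000 files -> about 300 MB
    # 1,000,000,000 files -> about 300,000 MB
```

Scaling the same assumption to a billion single-block files gives about 300 GB of metadata, which is why billions of files are impractical for a single NameNode.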

Multiple writers or arbitrary file modification: HDFS writes data in append-only fashion. It does not support modifying a file at an arbitrary offset, nor does it support multiple writers to the same file.
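These write restrictions can be illustrated with a toy file object that allows only appends and only one writer at a time. Real HDFS enforces the single-writer rule with leases granted by the NameNode; the `ToyHdfsFile` class, its methods, and `LeaseHeldError` below are hypothetical and only model that behavior.

```python
from __future__ import annotations


class LeaseHeldError(Exception):
    """Raised when a client writes without holding the file's write lease."""


class ToyHdfsFile:
    """Hypothetical model of HDFS write semantics: single writer, append-only.

    There is deliberately no method for writing at an arbitrary offset.
    """

    def __init__(self) -> None:
        self.data = bytearray()
        self.lease_holder: str | None = None

    def open_for_write(self, client: str) -> None:
        # Only one client may hold the write lease at a time.
        if self.lease_holder not in (None, client):
            raise LeaseHeldError(f"lease held by {self.lease_holder}")
        self.lease_holder = client

    def append(self, client: str, chunk: bytes) -> None:
        if self.lease_holder != client:
            raise LeaseHeldError(f"{client} does not hold the write lease")
        self.data.extend(chunk)  # new data always goes to the end of the file

    def close(self, client: str) -> None:
        # Closing releases the lease so another writer can open the file.
        if self.lease_holder == client:
            self.lease_holder = None


if __name__ == "__main__":
    f = ToyHdfsFile()
    f.open_for_write("client-a")
    f.append("client-a", b"hello ")
    try:
        f.open_for_write("client-b")
    except LeaseHeldError as err:
        print("rejected:", err)  # rejected: lease held by client-a
    f.append("client-a", b"world")
    f.close("client-a")
    print(bytes(f.data))  # b'hello world'
```

A second writer can proceed only after the first one closes the file, and even then it can only append.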




More Posts by Alibaba Cloud Community