By He Linbo (Xinsheng)

As cloud native technologies evolve, the Alibaba Cloud Container Service team keeps exploring where these technologies can be applied in practice. Meanwhile, with the rapid development of IoT and 5G, the traditional edge computing architecture can no longer meet the needs of business development.

How to build a new-generation edge computing platform based on cloud native technologies has become a new focus of the industry. How do we solve industry challenges such as cloud-edge collaboration and edge autonomy, so that developers can easily deliver, maintain, and control large-scale applications on massive numbers of edge and device-end resources?

OpenYurt, the industry's first non-intrusive edge computing project based on Kubernetes, was built and open sourced to address these questions. OpenYurt seamlessly integrates cloud native and edge computing and has been put into practice in scenarios with tens of thousands of edge nodes. This article describes how to integrate cloud native technologies and edge computing for the delivery, O&M, and control of large-scale applications.

First, an intuitive description of edge computing. Driven by industries and businesses such as 5G, IoT, audio and video, live broadcast, and CDN, there is an inevitable trend: more and more computing power and business logic are moving closer to data sources and end users to achieve shorter response times and lower computing costs. Unlike the traditional centralized cloud computing model, edge computing is increasingly used across industries, including automobiles, agriculture, energy, and transportation. In short, edge computing "makes computing closer to data and devices".

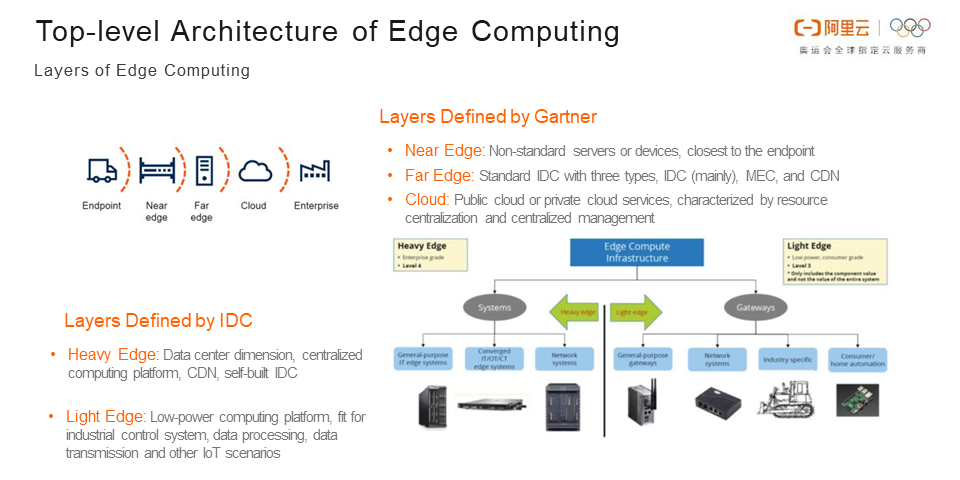

From the perspective of IT architecture, edge computing has a clear hierarchical structure determined by business latency and computing forms. Here we quote the descriptions of the edge computing architecture from Gartner and International Data Corporation (IDC). Gartner divides edge computing into three parts, "Near Edge", "Far Edge", and "Cloud", which correspond to common device ends, off-cloud data center/CDN nodes, and public/private clouds, respectively. IDC defines edge computing more intuitively as "Heavy Edge" and "Light Edge", which represent the data center dimension and low-power computing platforms, respectively. As shown in the figure, the layers of the architecture depend on and cooperate with each other. This definition is also the industry's consensus on the relationship between edge computing and cloud computing. Next, consider the trends in edge computing.

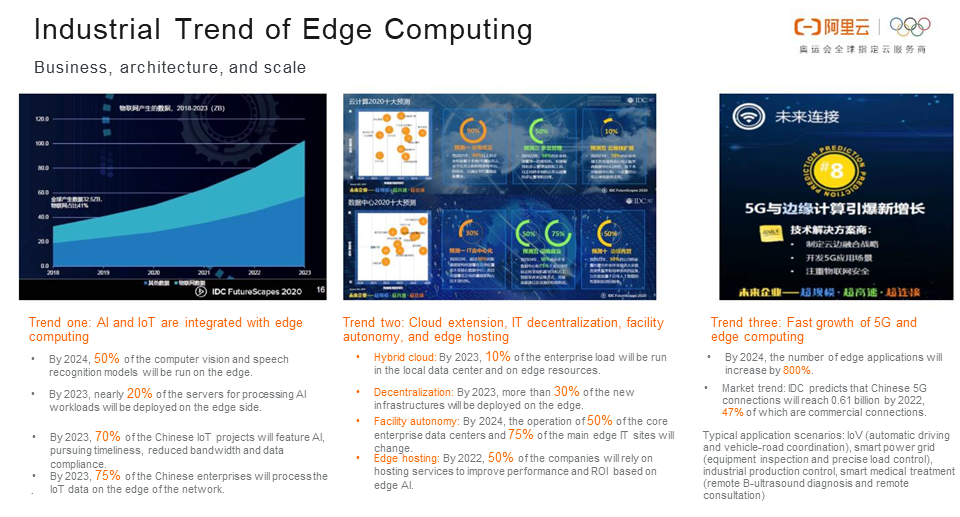

The analysis of a trend in the IT industry usually includes three dimensions: business, architecture, and scale. The three general trends of edge computing are:

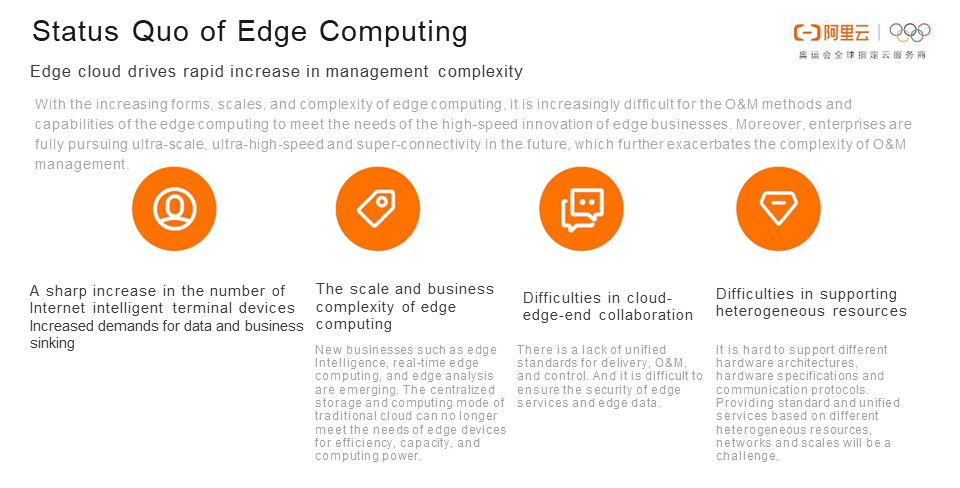

Although the industry has reached a consensus on the architecture, the scale and complexity of edge computing keep growing during implementation, and the shortage of O&M methods and capabilities eventually becomes overwhelming. How can this problem be solved? "Cloud-edge-end unified" O&M collaboration is a common solution.

As practitioners in the field of cloud native, we try to consider and solve these problems from the perspective of cloud native:

First, a review of the definition and technical system of cloud native. Cloud native has become popular and widely used as a set of tools, architectures, and methodologies. So how is cloud native defined? Early definitions included containers, microservices, DevOps, and CI/CD. After 2018, the Cloud Native Computing Foundation (CNCF) added service mesh and declarative APIs. The general history of cloud native is as follows. In the early days, with the emergence of Docker, containers were adopted by a large number of businesses, and containerization drove the rapid development of DevOps by unifying deliverables and isolating applications. The advent of Kubernetes decoupled resource orchestration and scheduling from the underlying infrastructure and made application and resource control convenient. Later, service mesh technology, represented by Istio, decoupled service implementation from service governance. More and more enterprises and industries are embracing cloud native.

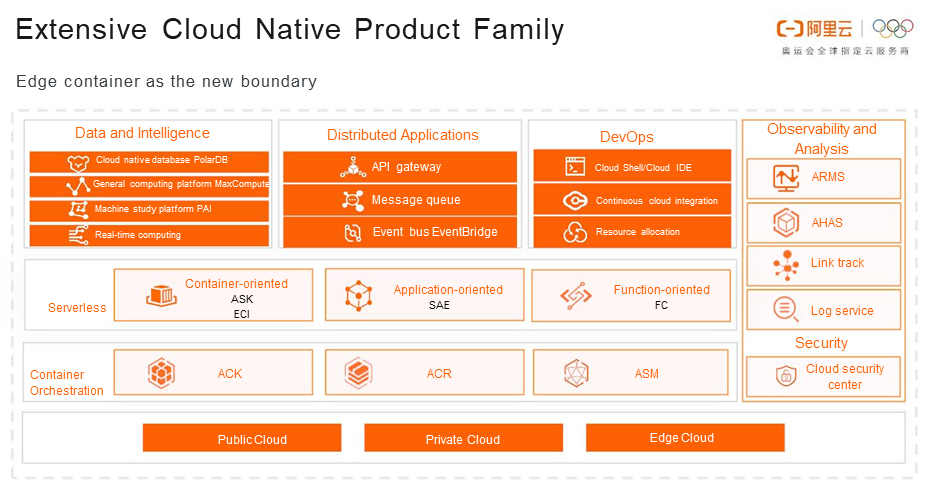

Now, an introduction to Alibaba Cloud's cloud native products. Alibaba Cloud has migrated all of its internal instances to the cloud and provides extensive cloud native services for customers. Both facts show that Alibaba Cloud is a practitioner of cloud native. As a cloud native service provider, Alibaba Cloud believes that cloud native is the future, and that ubiquitous cloud native technologies will continue to develop rapidly and be applied to "new application loads", "new computing forms", and "new physical boundaries". As shown in the figure of the Alibaba Cloud cloud native product family, containers are being used by more and more applications and cloud services and are running on more and more computing forms, such as Serverless and function computing. These forms are also moving from the traditional center cloud to edge computing and on toward device ends.

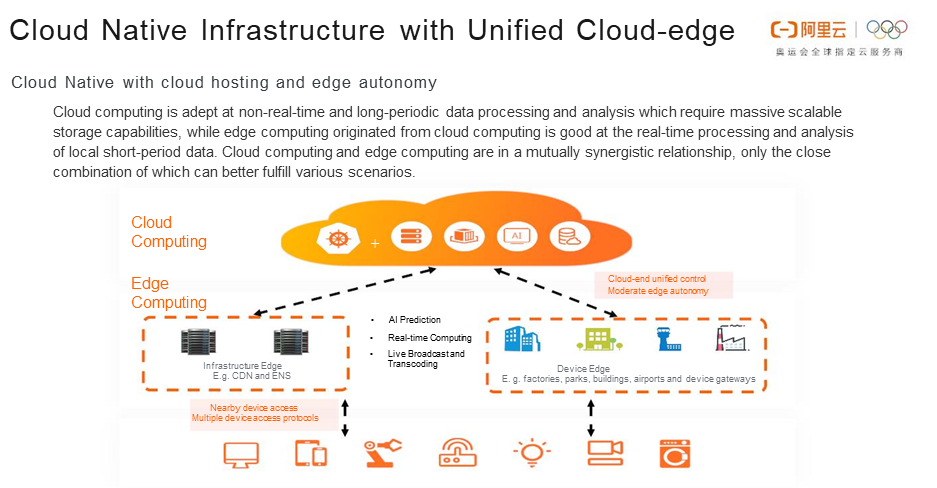

A cloud-edge-end collaborative cloud native architecture can be abstracted from the standard hosting architecture of the center cloud. The center cloud retains the original cloud native control and productization capabilities. These capabilities are extended to the edge through the cloud-edge control channel, which turns massive numbers of edge nodes and businesses into cloud native workloads. These edge nodes and businesses then interact with device ends through service traffic and service governance, which unifies the business, O&M, and ecosystem.

What are the benefits of the cloud-edge unified architecture? First, because cloud-edge unification is achieved through cloud native systems, users get the same features and experience on any infrastructure as they do on the cloud, which enables cloud-edge-end unified application distribution. Second, container isolation, traffic control, network policies, and other capabilities make workloads run more securely. With the support of cloud native, "cloud-edge-end unification" will accelerate multi-cloud and cloud-edge integration.

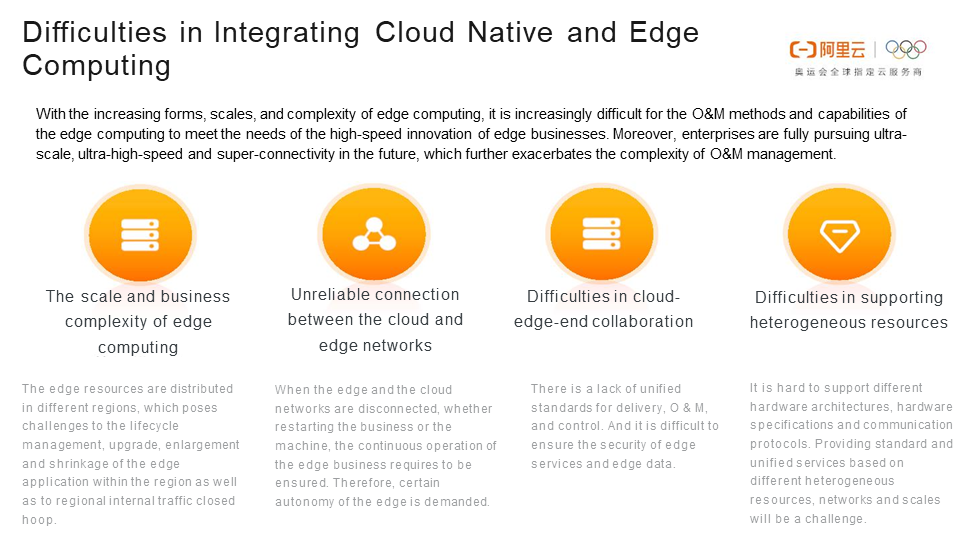

With the cloud-edge unified cloud native infrastructure in place, we turn to the difficulties of integrating cloud native and edge computing. In actual implementation, the following problems are identified:

Next, we introduce OpenYurt, the industry's first non-intrusive, Kubernetes-based, cloud native open source platform for edge computing.

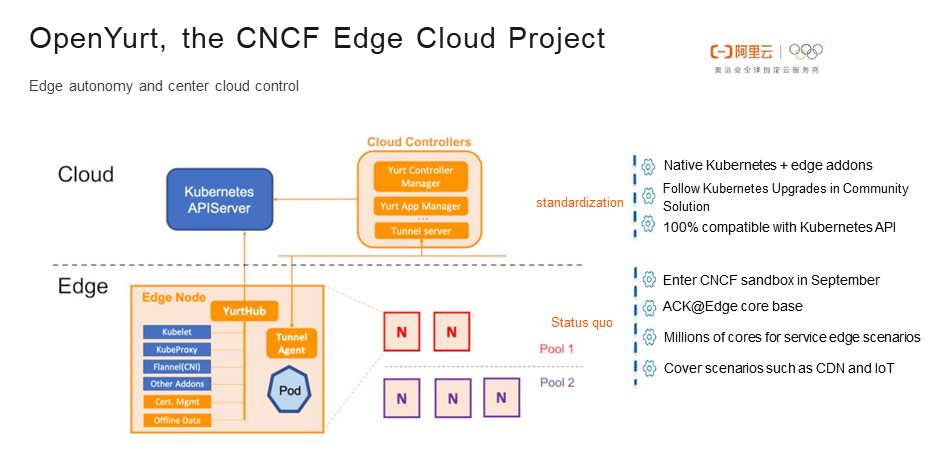

OpenYurt has a typical, concise "center-edge" architecture. As shown in the figure above, the edge and the cloud are connected over public networks. The blue boxes indicate native Kubernetes components, and the orange boxes indicate OpenYurt components. By relying on the plug-in and Operator mechanisms of Kubernetes, OpenYurt makes zero modifications to upstream Kubernetes. As a result, OpenYurt can evolve in step with the community and remain compatible with mainstream technologies in the cloud native community. The OpenYurt project was donated to CNCF in September 2020, which also ensures the neutrality of the project. At present, OpenYurt is widely used in Alibaba, managing millions of cores across CDN, IoT, audio and video, edge AI, and other scenarios. This track record shows that OpenYurt is robust in terms of quality and stability.

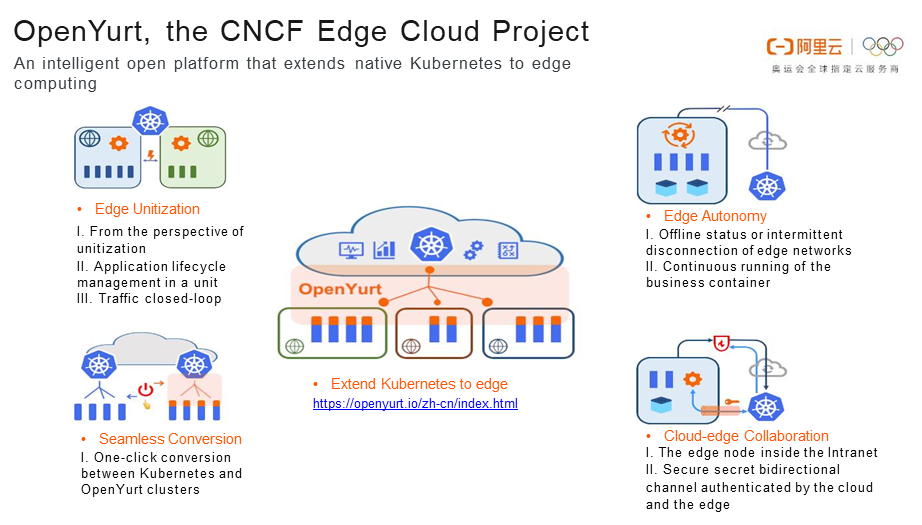

Currently, three versions of OpenYurt have been released. They cover edge unitization, edge autonomy, cloud-edge collaboration, seamless conversion, support for heterogeneous resources (AMD64, ARM, and ARM64), and elasticity and intercommunication of on-cloud and off-cloud businesses.

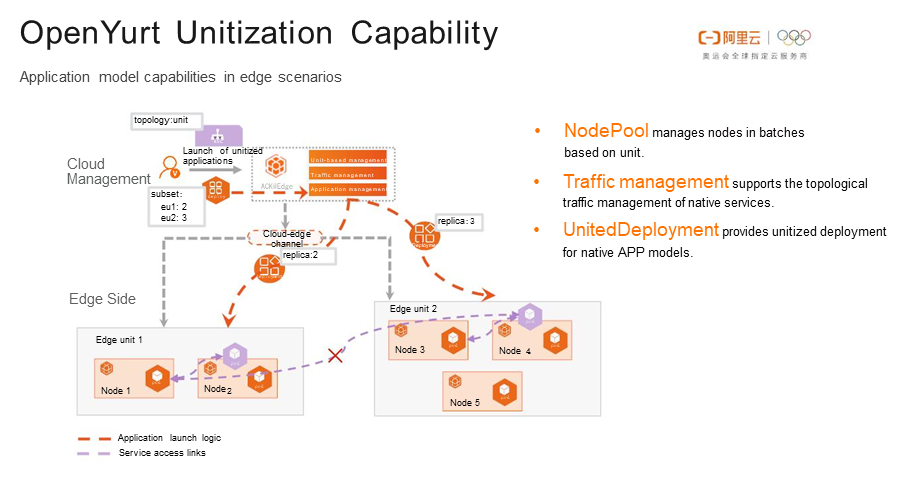

Edge unitization targets the first integration difficulty mentioned above. It groups nodes into units and manages them in batches, and it provides finer control over application orchestration, deployment, and business traffic within each group. For example, business traffic can be confined to a single unit for security and efficiency, or nodes can be tagged and given scheduling policies so that all the nodes in an area are managed as a batch. The related capabilities are provided by the yurt-app-manager component.
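The grouping idea can be sketched in a few lines of Python. This is only an illustration of unitization, not the yurt-app-manager API: the label key `example.com/unit`, the node records, and the helper names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical label key marking which unit (group) a node belongs to.
UNIT_LABEL = "example.com/unit"


def group_nodes_by_unit(nodes):
    """Group node names by the value of their unit label."""
    units = defaultdict(list)
    for node in nodes:
        unit = node["labels"].get(UNIT_LABEL)
        if unit is not None:  # unlabeled nodes belong to no unit
            units[unit].append(node["name"])
    return dict(units)


def plan_per_unit(app, units, replicas):
    """Create one workload entry per unit, pinned to that unit by a node selector."""
    return [
        {
            "name": f"{app}-{unit}",
            "nodeSelector": {UNIT_LABEL: unit},
            "replicas": replicas.get(unit, 1),  # default to one replica per unit
        }
        for unit in sorted(units)
    ]


def endpoints_in_unit(endpoints, unit):
    """Keep only endpoints located in the caller's unit, so traffic stays local."""
    return [ep["ip"] for ep in endpoints if ep["unit"] == unit]


nodes = [
    {"name": "edge-a1", "labels": {UNIT_LABEL: "hangzhou"}},
    {"name": "edge-a2", "labels": {UNIT_LABEL: "hangzhou"}},
    {"name": "edge-b1", "labels": {UNIT_LABEL: "beijing"}},
    {"name": "cloud-1", "labels": {}},
]
units = group_nodes_by_unit(nodes)
plan = plan_per_unit("web", units, {"hangzhou": 2})
```

Here each unit gets its own workload entry with its own replica count, and `endpoints_in_unit` models confining traffic to a single unit.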

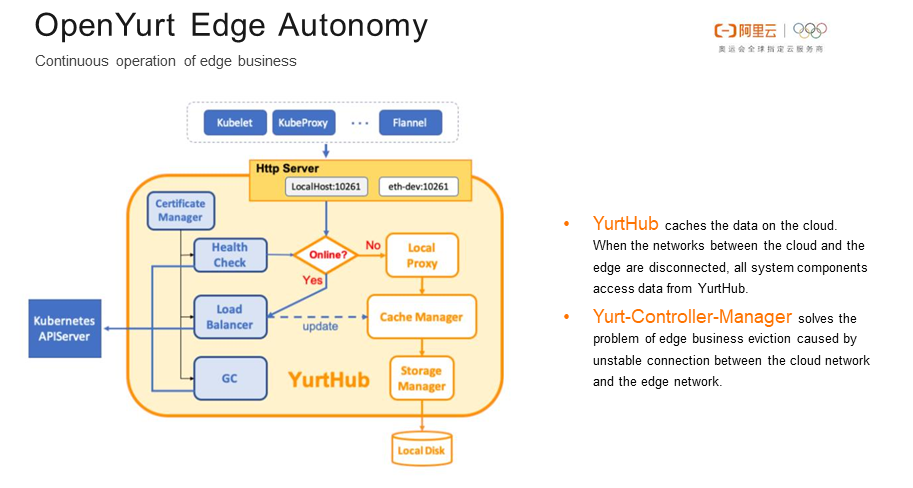

Edge autonomy targets the second integration difficulty mentioned above. It keeps edge businesses running when the cloud-edge network is disconnected or unstable. The related capabilities are provided by the yurt-controller-manager and YurtHub components. Edge autonomy will be enhanced further in the next version.
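One general way to keep edge components working through a disconnection is a local proxy that caches the last successful response and falls back to it when the cloud is unreachable. The sketch below shows that cache-and-fallback pattern only; it is not YurtHub's implementation, and `CachingProxy`, `FakeCloud`, and the request path are hypothetical names chosen for illustration.

```python
class CachingProxy:
    """Forward reads to the cloud when reachable; otherwise answer from the cache."""

    def __init__(self, fetch_from_cloud):
        # fetch_from_cloud(path) returns a response or raises ConnectionError.
        self._fetch = fetch_from_cloud
        self._cache = {}

    def get(self, path):
        try:
            response = self._fetch(path)
        except ConnectionError:
            if path in self._cache:
                return self._cache[path]  # cloud unreachable: serve the last known data
            raise  # nothing cached for this path, so the error is passed on
        self._cache[path] = response  # remember the latest successful response
        return response


class FakeCloud:
    """Stand-in for the cloud control plane, with a switch to simulate an outage."""

    def __init__(self):
        self.online = True
        self.objects = {"/api/v1/pods": ["pod-a"]}

    def fetch(self, path):
        if not self.online:
            raise ConnectionError("cloud unreachable")
        return list(self.objects[path])


cloud = FakeCloud()
proxy = CachingProxy(cloud.fetch)
online_view = proxy.get("/api/v1/pods")   # fetched from the cloud and cached
cloud.online = False                      # simulate a cloud-edge disconnection
offline_view = proxy.get("/api/v1/pods")  # answered from the local cache
```

This sketch keeps the cache in memory. Surviving a node restart would additionally require persisting the cache, for example on local disk.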

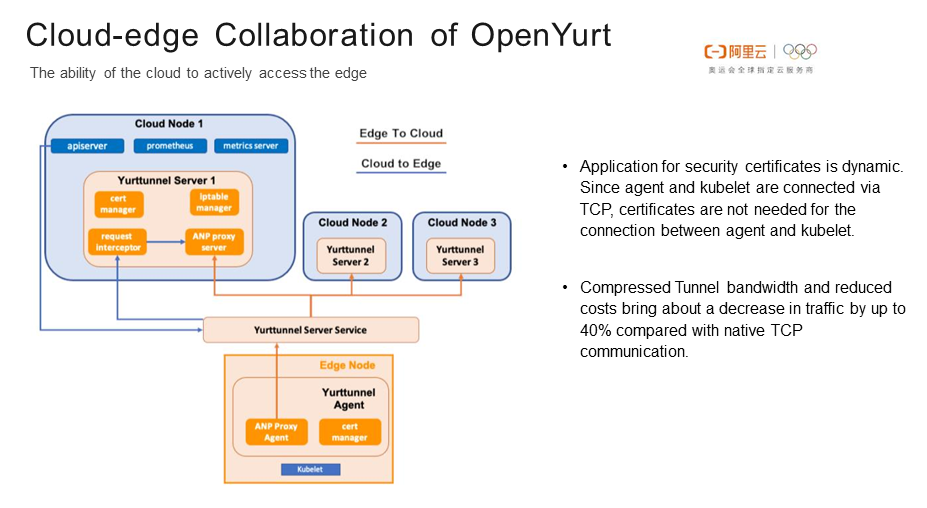

Cloud-edge collaboration targets the third integration difficulty. Because edge nodes sit in the user's internal network, they cannot be reached from the cloud, so native Kubernetes O&M capabilities such as kubectl logs/exec/port-forward and Prometheus do not work out of the box. Cloud-edge collaboration solves this one-way connectivity problem with cloud-edge tunnels, which restore the native Kubernetes O&M capabilities. The related capabilities are provided by the tunnel-server and tunnel-agent components.
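The core idea of such a tunnel is that the edge side dials out to the cloud, and the cloud then reuses that connection to send requests back. The sketch below models this with plain TCP sockets on the loopback interface. It is a simplified illustration under assumed names (`TunnelServer`, `run_agent`, a one-line-per-message protocol), not the protocol used by tunnel-server and tunnel-agent.

```python
import socket
import threading


class TunnelServer:
    """Cloud side: accepts connections dialed by edge agents and reuses them."""

    def __init__(self):
        # Port 0 lets the operating system pick a free port.
        self._listener = socket.create_server(("127.0.0.1", 0))
        self.address = self._listener.getsockname()
        self._tunnels = {}  # node name -> (socket, line reader)
        self._changed = threading.Condition()
        threading.Thread(target=self._accept_loop, daemon=True).start()

    def _accept_loop(self):
        while True:
            conn, _ = self._listener.accept()
            reader = conn.makefile("r", encoding="utf-8")
            node = reader.readline().strip()  # first line identifies the edge node
            with self._changed:
                self._tunnels[node] = (conn, reader)
                self._changed.notify_all()

    def request(self, node, command, timeout=5.0):
        """Send a command to an edge node over the connection that node opened.

        Not safe for concurrent requests to the same node; kept simple on purpose.
        """
        with self._changed:
            if not self._changed.wait_for(lambda: node in self._tunnels, timeout):
                raise TimeoutError(f"no tunnel registered for {node}")
            conn, reader = self._tunnels[node]
        conn.sendall((command + "\n").encode("utf-8"))
        return reader.readline().strip()


def run_agent(server_address, node, handle):
    """Edge side: dial out to the cloud, then serve requests on that connection."""
    conn = socket.create_connection(server_address)
    conn.sendall((node + "\n").encode("utf-8"))
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        for line in reader:
            reply = handle(line.strip())
            conn.sendall((reply + "\n").encode("utf-8"))


server = TunnelServer()
threading.Thread(
    target=run_agent,
    args=(server.address, "edge-node-1", lambda cmd: f"edge-node-1 ran: {cmd}"),
    daemon=True,
).start()
reply = server.request("edge-node-1", "logs my-pod")
```

The cloud never opens a connection toward the edge here. It only answers the listener and writes to sockets that edge agents created, which is why this pattern works when the edge network accepts no inbound connections.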

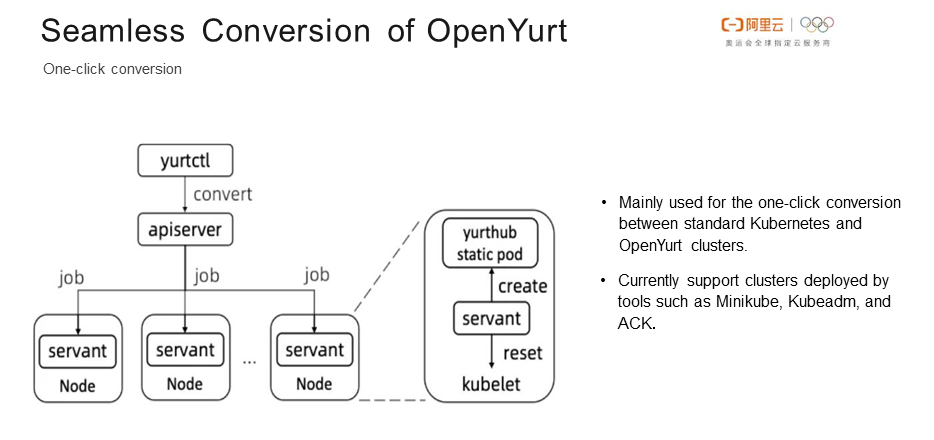

Seamless conversion partially solves the fourth integration difficulty. It provides one-click conversion between standard Kubernetes clusters and OpenYurt clusters, and it has been fully verified on clusters deployed with tools such as Minikube, Kubeadm, and ACK. Contributions that add support for clusters deployed with other tools are welcome. The related capabilities are provided by the yurtctl component.

Next, some practical cases of OpenYurt will be introduced.

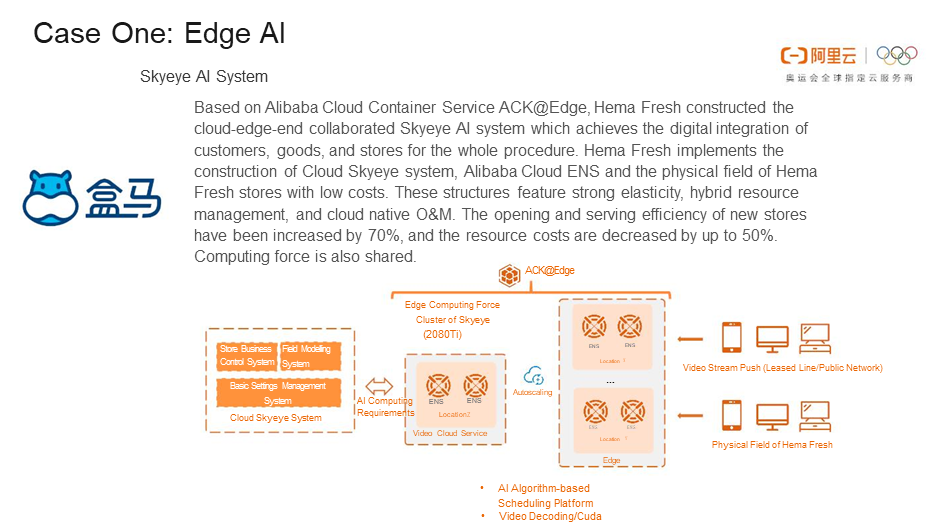

The first case is the "customer-goods-store" digital integration transformation of Freshippo (Hema Fresh), based on edge cloud native. In this case, various heterogeneous edge computing resources are connected and scheduled uniformly through the cloud native system, including Edge Node Service (ENS, the edge node service of the Alibaba Cloud public cloud), offline self-built edge gateway nodes, and GPU nodes. As a result, Freshippo gains strong resource elasticity, as well as the flexibility that comes from colocating businesses.

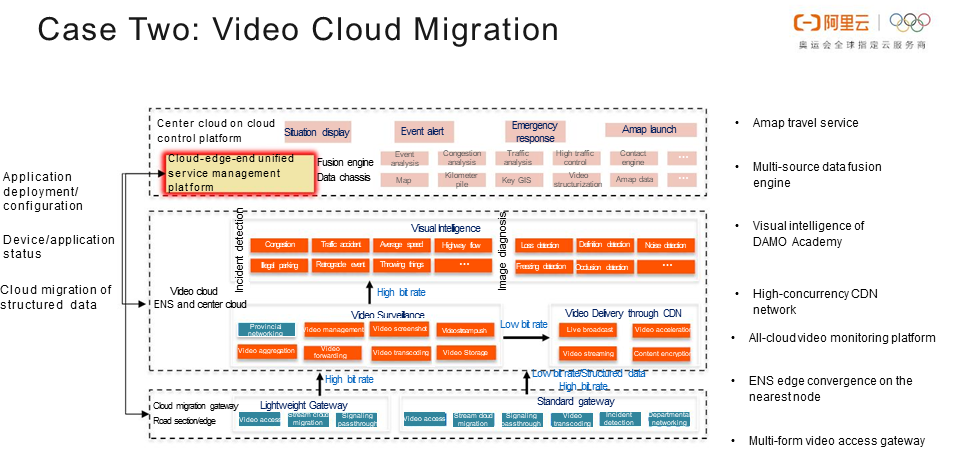

The second case is a video cloud migration in the transportation industry. Through cloud-edge-end unified collaboration, the intelligent transportation capabilities of the center cloud are integrated with computing resources such as edge CDN and ENS. Businesses can then access the nearest resources, and video collection devices are managed centrally. The scheduling, orchestration, and service management capabilities of edge cloud native are used throughout the cloud migration.

Finally, OpenYurt is an official CNCF edge computing cloud native project that is still in its early stages and needs support and help from the public. You are welcome to join in building the OpenYurt community.

Q: Does any configuration need to be modified for the YurtHub proxy implementation mechanism?

A: The cloud access address used by the edge node components needs to be changed to the local YurtHub listening address (http://127.0.0.1:10261). No other configuration needs to be modified.
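As a rough illustration of that change, the sketch below rewrites the `server` field of a parsed kubeconfig-style document so that it points at the local address quoted above. It assumes the component reads its cloud address from a kubeconfig-style file; the sample configuration, the original server URL, and the helper name `point_clusters_at` are hypothetical.

```python
import copy

# Local YurtHub listening address quoted in the answer above.
YURTHUB_ADDRESS = "http://127.0.0.1:10261"


def point_clusters_at(kubeconfig, server):
    """Return a copy of a parsed kubeconfig whose clusters all use `server`."""
    updated = copy.deepcopy(kubeconfig)  # leave the caller's data untouched
    for entry in updated.get("clusters", []):
        entry["cluster"]["server"] = server
    return updated


# Hypothetical original configuration pointing directly at the cloud.
original = {
    "apiVersion": "v1",
    "kind": "Config",
    "clusters": [
        {"name": "default", "cluster": {"server": "https://apiserver.example.com:6443"}}
    ],
}
local = point_clusters_at(original, YURTHUB_ADDRESS)
```

Which files hold this address for each edge component depends on how the node was deployed, so the file handling is left out here.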

Q: How does OpenYurt compare with KubeEdge?

A: First of all, a third party could probably evaluate this more objectively. Speaking as a programmer and a cloud native developer, I can offer some personal comments. OpenYurt makes no modification to Kubernetes and enhances it through the plug-in and Operator mechanisms of Kubernetes, so it is friendlier to native Kubernetes users. KubeEdge makes major changes to Kubernetes, such as rewriting kubelet, kube-proxy, the list-watch mechanism, and others, which is slightly less friendly to native Kubernetes users. There are many other differences, but space is limited. Interested readers can study them on their own.

Q: Which operating systems run on the tens of thousands of machines in Alibaba? Is there any requirement for the kernel?

A: Currently, AliOS, CentOS, and Ubuntu are mainly used, and their kernel versions are generally above 3.10.

Q: How many nodes are there in case 1 and case 2, respectively?

A: Each cluster has more than 100 nodes.

Q: What are the main functions of the GPU node in case 1?

A: In case 1, AI training is completed on the cloud, and GPU nodes are mainly used for inference tasks.

Q: If the network between the cloud and the edge is completely disconnected, is the edge side able to continue to operate for a long time?

A: The business can continue to run, even when a node or the business itself is restarted. However, if a node goes down, the cloud cannot accurately identify the failed node and rebuild its workloads on other healthy nodes. This problem will be solved in the next version.

Q: Does Alibaba have a dedicated technical and product team for support?

A: Currently, the Alibaba Cloud Container Service ACK@Edge is implemented based on the open source OpenYurt project, so there is a dedicated team to provide unified support.

Q: Are there restrictions on the CPU at the edge? What about on the end side, for example, ARM?

A: Currently, AMD64, ARM, and ARM64 CPU architectures are supported at the edge. The end side mainly refers to device ends, which are handled by the businesses running on OpenYurt edge nodes. Therefore, device ends are not currently covered by OpenYurt.

Q: What are the mainstream application scenarios of edge in the next 3 years?

A: This is a good question. It is certain that cloud native solutions for edge computing are spreading on a large scale across CDN, edge AI, audio and video, 5G MEC, IoT, and similar scenarios. Coverage of IoV, cloud gaming, and traditional industries such as agriculture and energy is gradually expanding. In short, the edge has a relatively strong business-to-business (ToB) character.

Q: What percentage of all edge scenarios is estimated to be covered by Kubernetes today?

A: It is hard to say. Personally, I think the current proportion is very low. The digital transformation of edge computing scenarios as a whole is just beginning. I believe it will be an industry that can last for 20 years.

He Linbo (Xinsheng), from the Alibaba Cloud Container Service team, is the author of OpenYurt and one of its founding members. Since 2015, he has been engaged in the design, R&D, and open source work of products related to Kubernetes. He has successively been responsible for or participated in IoT edge computing, edge container service, OpenYurt, and other related projects.

Content source: Dockone
