SaaS service providers must always consider the large number of users when designing an appropriate business architecture. The large user base and massive user data require both efficiency and stability in the infrastructure construction. However, traditional infrastructure construction programs are costly, laborious, and involve complex implementation.

As a result, a more economical cloud service platform that is more convenient for extension has become the first choice for SaaS providers. However, to build a high-performance SaaS application, cloud service infrastructure alone is not enough. The key to determining product performance is in designing and implementing a system architecture that complies with specific business needs using the cloud service platform.

Hangzhou HUPUN Network Technology Co. Ltd, a start-up SaaS provider, faced these problems in the early stages of development. This post explains how Hangzhou HUPUN Network Technology used Alibaba Cloud services and relatively cost-effective solutions to solve the database problems that come with a massive user base.

Note: Some of the Alibaba Cloud products mentioned in this article are not yet available for the international market.

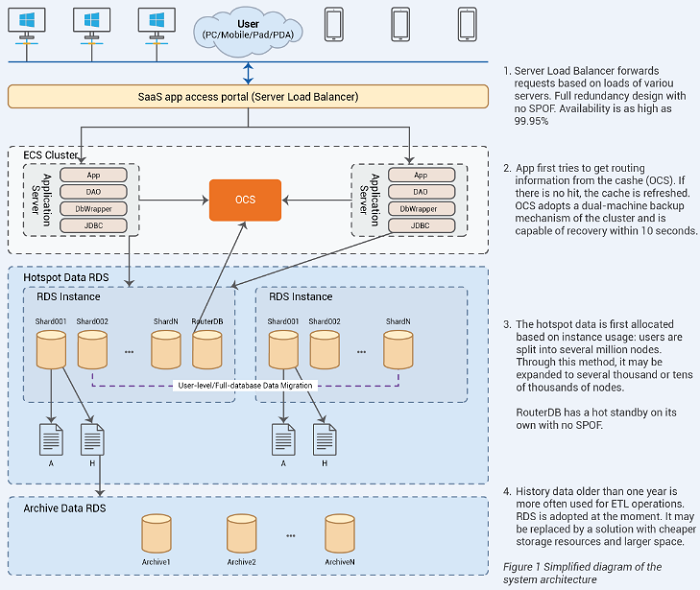

The overall architecture of our SaaS e-commerce ERP is based on the Alibaba Cloud service platform, as shown in Figure 1.

The scheme not only utilizes the advantages of Alibaba Cloud (no maintenance or wear of physical machines, flexible configuration upgrades, mature backup and snapshot solutions) but also uses a cluster architecture to avoid the single points of failure that the system would otherwise be prone to. Ideally, this improves availability and makes elastic resizing of the system more flexible.

For us, as a SaaS-oriented enterprise, data is concentrated and data volume is large. Therefore, databases are a key node in our overall structure, and ensuring the stability and performance of databases is our top priority.

When the user base enters a period of rapid growth, a single database can no longer handle the existing data volume and growth rate of the core business tables. Almost all single-database configurations are subject to physical performance bottlenecks. Even if you upgrade the configuration, cost and resource constraints remain. Therefore, we treat database sharding as the preferred feasible solution. The main analysis ideas are as follows.

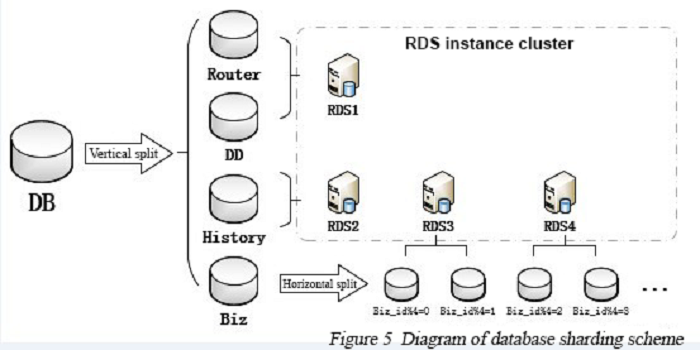

Considering our business characteristics, we finally adopted the combination of horizontal and vertical splitting that is common in the industry, and developed our own data access encapsulation layer between DAO and JDBC.

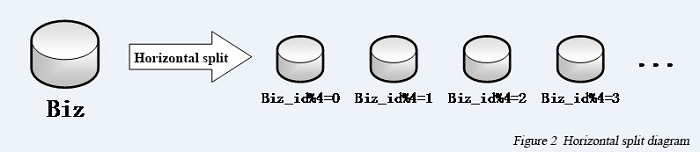

The idea of a horizontal split is to split data originally stored in a single RDS database into multiple databases by business ID (see Figure 2). After the split, the number and structure of tables are the same in every database. To carry out a horizontal split, you should first establish a unique business master table, i.e., a table whose ID (the business ID) every other table relates to directly or indirectly, so that all data can be split by the master table ID. We chose the user table as the master business table; every other business table, or its parent table, contains a user ID. Therefore, the goal of our data split is to store the data of different users in different databases.

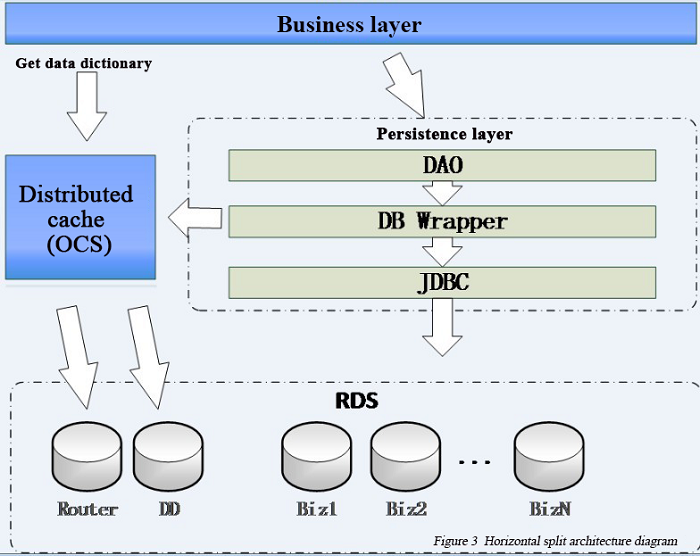

After determining the split rules, the next step is to build the Spring data access encapsulation layer (DB Wrapper). DB Wrapper sits between Data Access Object (DAO) and Java Database Connectivity (JDBC), and every basic database operation issued by a business DAO goes through it. Its main purpose is to make changes to the database architecture transparent to the business layer: the business layer calls the database operation interface provided by DB Wrapper as if it were operating on a single database, and DB Wrapper alone handles the logic that determines which database to operate on (see Figure 3).

DB Wrapper mainly provides two interfaces: initialization for new users and database operations. When initializing a new user, DB Wrapper first needs to determine the load distribution across the databases in the system dynamically. A rough calculation can be based on the number of users in each database. For example, if there are four databases in total, you can decide on the target database by user_id % 4. You can then refine this by examining the distribution of core business data. The specific allocation algorithm should be set based on the business (for example, by considering the average number of orders of different users). After calculating the overall pressure on each database, DB Wrapper identifies the target database with the smallest pressure, stores the new user's data there, and updates the routing information at the same time.
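The allocation step can be sketched as follows. This is a minimal illustration rather than our production algorithm: `ShardLoad`, `pressure`, `register_user`, and the `order_weight` factor are all hypothetical names and values, and the pressure formula is only one possible way to combine user count with average order volume.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ShardLoad:
    """Hypothetical load statistics for one database."""
    name: str
    user_count: int
    avg_daily_orders: float  # average orders per user per day


def pressure(shard: ShardLoad, order_weight: float = 10.0) -> float:
    """Illustrative score: user count plus weighted total order volume."""
    return shard.user_count + order_weight * shard.user_count * shard.avg_daily_orders


def pick_target_shard(shards: List[ShardLoad]) -> ShardLoad:
    """Return the database with the smallest estimated pressure."""
    return min(shards, key=pressure)


def register_user(user_id: int, shards: List[ShardLoad], router: Dict[int, str]) -> str:
    """Assign a new user to the least-loaded database and record the route."""
    target = pick_target_shard(shards)
    router[user_id] = target.name  # routing information is updated at the same time
    target.user_count += 1
    return target.name


shards = [
    ShardLoad("db0", user_count=100, avg_daily_orders=2.0),
    ShardLoad("db1", user_count=120, avg_daily_orders=0.5),
    ShardLoad("db2", user_count=90, avg_daily_orders=3.0),
]
router: Dict[int, str] = {}
assigned = register_user(42, shards, router)
```

Here `db1` holds the most users but carries the lowest order volume, so it receives the new user. A pure user-count rule would have chosen `db2` instead.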

When the user completes initialization and starts business operations, the business layer calls the operation interface of DB Wrapper. After DB Wrapper receives the request, it looks up the router with the user_id passed in from the business layer to determine the RDS instance and database to operate on. It then establishes the connection and executes the request. The specific code depends on the persistence layer framework in use; a developer who has studied that framework should be able to complete the implementation.
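The routing path described above might look roughly like the sketch below. Our real DB Wrapper is Java code built around Spring and JDBC; this Python version only illustrates the control flow. The `DBWrapper` class, its method names, and the use of in-memory SQLite connections in place of RDS databases are all assumptions made for the example.

```python
import sqlite3
from typing import Dict, List, Tuple


class DBWrapper:
    """Hypothetical wrapper: callers pass a user_id and never pick a database."""

    def __init__(self, router: Dict[int, str], connections: Dict[str, sqlite3.Connection]):
        self._router = router            # user_id -> database name
        self._connections = connections  # database name -> open connection

    def _connection_for(self, user_id: int) -> sqlite3.Connection:
        if user_id not in self._router:
            raise LookupError(f"no route for user {user_id}")
        return self._connections[self._router[user_id]]

    def query(self, user_id: int, sql: str, params: Tuple = ()) -> List[tuple]:
        return self._connection_for(user_id).execute(sql, params).fetchall()

    def execute(self, user_id: int, sql: str, params: Tuple = ()) -> int:
        conn = self._connection_for(user_id)
        with conn:  # commit on success, roll back on error
            return conn.execute(sql, params).rowcount


def make_shard() -> sqlite3.Connection:
    """Every shard has the same table structure, as in a horizontal split."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, amount REAL)")
    return conn


shard_conns = {"db0": make_shard(), "db1": make_shard()}
wrapper = DBWrapper({1: "db0", 2: "db1"}, shard_conns)
wrapper.execute(1, "INSERT INTO orders (user_id, amount) VALUES (?, ?)", (1, 9.5))
wrapper.execute(2, "INSERT INTO orders (user_id, amount) VALUES (?, ?)", (2, 20.0))
user1_orders = wrapper.query(1, "SELECT amount FROM orders WHERE user_id = ?", (1,))
```

The business code passes the same SQL regardless of which database holds the user, which is the transparency the wrapper is meant to provide.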

In this way, the overall data pressure of system users is distributed relatively evenly across multiple RDS instances and databases. This is a very effective scheme, especially for tables with a large and fast-growing data size. However, in the follow-up implementation, we found that the business pressure from a single user can occasionally stand out. To solve this, we can intervene manually, for example by migrating that user's data to a separate database, to fine-tune the database pressure. Of course, the long-term solution is to keep tuning our routing algorithms.

After data splitting, you inevitably have to consider how to handle the data dictionaries (DD) and data routers (Router). For now, we put all the data dictionaries and routers into a separate database. Note that a database is only one way to implement these two services. Generally, we can also serve them by combining the database with a distributed cache (we chose OCS). For any resulting single point of failure, the reserved expansion scheme is a horizontal split or the creation of a read-only node (read-only nodes can use the read-only instances newly provided by RDS, which are still in the beta phase).
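One common way to combine a router database with a cache is the cache-aside pattern, sketched below. It is not necessarily how our router is implemented: `CachedRouter` is a hypothetical class, a plain dictionary stands in for the distributed cache, and another dictionary stands in for the router table.

```python
from typing import Callable, Dict, Optional


class CachedRouter:
    """Cache-aside lookup: try the cache first, fall back to the router database."""

    def __init__(self, load_route: Callable[[int], Optional[str]]):
        self._load_route = load_route     # reads one route from the router database
        self._cache: Dict[int, str] = {}  # stand-in for a distributed cache client
        self.db_reads = 0                 # counts fallbacks, for illustration only

    def lookup(self, user_id: int) -> str:
        route = self._cache.get(user_id)
        if route is None:
            self.db_reads += 1
            route = self._load_route(user_id)
            if route is None:
                raise LookupError(f"no route for user {user_id}")
            self._cache[user_id] = route
        return route

    def move(self, user_id: int, new_db: str, save_route: Callable[[int, str], None]) -> None:
        """Re-route a user (for example after a manual migration) and drop the stale entry."""
        save_route(user_id, new_db)
        self._cache.pop(user_id, None)


router_table = {7: "db2"}  # stand-in for the router database table
cached = CachedRouter(router_table.get)
first = cached.lookup(7)
second = cached.lookup(7)  # served from the cache
reads_before_move = cached.db_reads
cached.move(7, "db9", router_table.__setitem__)
after_move = cached.lookup(7)
```

Invalidating the cached entry on `move` matters when a heavy user is migrated to a separate database, because a stale route would send requests to the old location.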

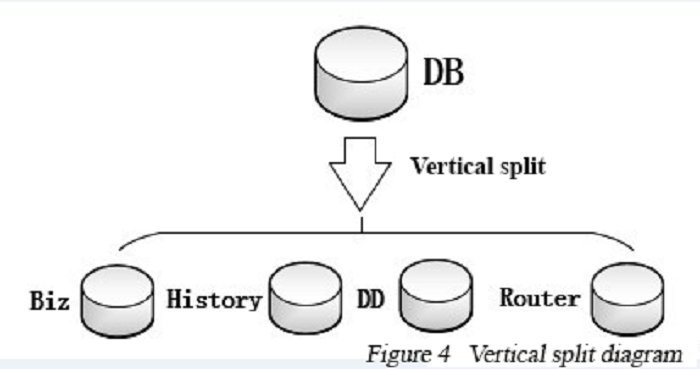

Vertical split is simpler than horizontal split. In vertical split, tables from a single database are grouped by degree of business coupling and then split into multiple databases (see Figure 4). After the split, the table structures and business meanings differ greatly between databases. Although the rules are simple and easy to implement, vertical split always breaks some associations. In practice, basic resources often appear in several business scenarios, so they end up in a different database from some of the tables that reference them. Therefore, you need to issue multiple queries in the business layer and process the data in memory to achieve the effect of a database join.
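A join performed in the business layer can be sketched as a batch query followed by in-memory assembly. The table names, sample rows, and the `orders_with_product_names` helper below are made up for the example, and two in-memory SQLite databases stand in for two vertically split RDS databases.

```python
import sqlite3

# Two separate databases after a vertical split: orders in one, products in another.
orders_db = sqlite3.connect(":memory:")
orders_db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, product_id INTEGER, qty INTEGER)")
orders_db.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, 10, 2), (2, 11, 1), (3, 10, 5)])

products_db = sqlite3.connect(":memory:")
products_db.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
products_db.executemany("INSERT INTO products VALUES (?, ?)", [(10, "kettle"), (11, "lamp")])


def orders_with_product_names(orders_conn, products_conn):
    """Emulate `orders JOIN products` with two queries and a dictionary lookup."""
    orders = orders_conn.execute(
        "SELECT id, product_id, qty FROM orders ORDER BY id"
    ).fetchall()
    product_ids = sorted({product_id for _, product_id, _ in orders})
    if not product_ids:
        return []
    # One batch query for all referenced products instead of one query per order.
    placeholders = ",".join("?" * len(product_ids))
    names = dict(
        products_conn.execute(
            f"SELECT id, name FROM products WHERE id IN ({placeholders})", product_ids
        ).fetchall()
    )
    return [(order_id, names.get(product_id), qty) for order_id, product_id, qty in orders]


joined = orders_with_product_names(orders_db, products_db)
```

Collecting the distinct IDs first keeps the second database to a single round trip, which is the main cost to watch when a join moves into application code.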

Vertical split also requires DB Wrapper, but the encapsulation rules differ slightly from those for horizontal split. You need to establish a separate DB Wrapper for each business. The DB Wrapper is no longer transparent to the business layer: the business layer must use the DB Wrapper that targets its business scenario. The implementation of each individual DB Wrapper is consistent with that in horizontal split.

The advantage of vertical split is that the overall business data is divided into several relatively independent segments, isolating the performance impacts between different businesses. Furthermore, vertical split makes business databases more concentrated, making it easier to find the master business table and perform horizontal split.

We currently apply vertical split mainly to data routing (including users' basic information), the data dictionary module, and common cold data problems. Cold data processing has always been a common problem in the industry (in fact, dividing off cold data is also a kind of horizontal split). We currently adopt a centralized storage solution, i.e., a self-developed migration program incrementally migrates the data identified as cold, according to our cold data partitioning approach, into a dedicated database. This scheme not only limits the impact that operations on cold data have on hot data but also provides more convenient conditions for big data mining. A relatively independent cold data store also makes it easier to adopt more efficient, less costly storage media. Of course, the scheme has potential problems: what if the cold database fills up? At present, our backup plan is to consider other forms of storage for the horizontal split of history databases.
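An incremental cold data migration could be structured like the sketch below. Our migration program is not shown in this article, so everything here is an assumption: the `orders` schema, the date-based cutoff rule, the `migrate_cold_rows` function, and the use of SQLite in place of the hot and cold databases.

```python
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, created_at TEXT)"


def migrate_cold_rows(hot, cold, cutoff: str, batch_size: int = 2) -> int:
    """Move rows created before `cutoff` from the hot to the cold database in small batches."""
    moved = 0
    while True:
        batch = hot.execute(
            "SELECT id, user_id, created_at FROM orders "
            "WHERE created_at < ? ORDER BY id LIMIT ?",
            (cutoff, batch_size),
        ).fetchall()
        if not batch:
            return moved
        # Copy first and delete second: a crash in between leaves a duplicate,
        # not a loss, and INSERT OR IGNORE makes a retry harmless.
        with cold:
            cold.executemany("INSERT OR IGNORE INTO orders VALUES (?, ?, ?)", batch)
        with hot:
            hot.executemany("DELETE FROM orders WHERE id = ?", [(row[0],) for row in batch])
        moved += len(batch)


hot_db = sqlite3.connect(":memory:")
cold_db = sqlite3.connect(":memory:")
hot_db.execute(SCHEMA)
cold_db.execute(SCHEMA)
hot_db.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 7, "2013-01-05"), (2, 7, "2013-06-20"), (3, 8, "2013-11-02"),
     (4, 7, "2014-03-15"), (5, 8, "2014-08-01")],
)
hot_db.commit()
moved_count = migrate_cold_rows(hot_db, cold_db, cutoff="2014-01-01")
```

Small batches keep each transaction short, so the migration competes less with online traffic on the hot database.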

Splitting has always been a keyword for database optimization (whether in database table structure or SQL script), and it is a path that every high-concurrency product must take. The core of the split solution lies in the ability to expand the load capacity of the system flexibly by adding more RDS instances and databases (we can always deploy multiple databases on the same RDS instance to save costs). In the database architecture, we use horizontal split and vertical split in combination, and the order of the two depends on the specific circumstances. In general, vertical split is easier and can pave the way for horizontal split by facilitating the extraction of the master table. Furthermore, after the vertical split, you can perform horizontal split on tables with high pressure while keeping the single database structure for tables with slow business growth, thereby improving the efficiency of the split and reducing the implementation cost (see Figure 5).

In horizontal split, we configure the routers by business ID (user) only, so that the structures in various databases are consistent. This keeps the original business logic and implementations while avoiding cross-database associations, greatly reducing the implementation costs.

Splitting has many benefits, but it also has costs: the implementation is complex, and distributed transactions and cross-database joins are poorly supported. As a result, distributed transaction control generally has to rely on optimistic locking in the business layer. Furthermore, the cross-database associations between tables must be broken; otherwise, both performance and implementation complexity will exceed the acceptable range. Cross-database joining and grouping also have to be processed at the business layer. At present, we use batch queries and result assembly in the business layer.
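Optimistic locking in the business layer is usually built on a version column, as in the minimal sketch below. The `stock` table, the `reserve` function, and the single SQLite database are illustrative assumptions; the point is only the compare-and-update pattern, not a full distributed transaction.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER, version INTEGER)")
conn.execute("INSERT INTO stock VALUES ('A1', 10, 0)")
conn.commit()


def reserve(conn, sku: str, amount: int, seen_version: int) -> bool:
    """Apply the update only if the row still has the version the caller read."""
    with conn:
        cur = conn.execute(
            "UPDATE stock SET qty = qty - ?, version = version + 1 "
            "WHERE sku = ? AND version = ? AND qty >= ?",
            (amount, sku, seen_version, amount),
        )
    # rowcount == 0 means another writer got there first (or stock ran out);
    # the caller should re-read the row and decide whether to retry.
    return cur.rowcount == 1


first_attempt = reserve(conn, "A1", 3, seen_version=0)  # succeeds, version becomes 1
stale_attempt = reserve(conn, "A1", 3, seen_version=0)  # fails, version 0 is stale
current = conn.execute("SELECT qty, version FROM stock WHERE sku = 'A1'").fetchone()
```

No lock is held between reading and writing, so the database never blocks on a cross-database coordinator; conflicts surface as a failed update that the business layer handles.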

Prior to the launch of Alibaba Cloud DRDS (distributed database) products, our team had to develop the underlying structure of the databases without any external assistance. Fortunately, with the successful launch of DRDS, our team no longer needs to reinvent the wheel, which lets us focus more on the core business.

Despite the optimizations in the architecture, some unsatisfactory performance issues often persist during product development. In the Alibaba Cloud support center and forum, we can also see other business-oriented teams reporting similar issues when using RDS. There is debate over whether these issues result from poor isolation of the underlying RDS resources, which would cause users who share resources to compete with each other and thus degrade RDS performance. However, with the guidance and assistance of Alibaba Cloud DBAs, we found that the actual cause lies in uncontrolled use of the database in the product design. As concurrency pressure and data volume increase, minor performance problems are exposed and amplified.

When optimizing databases, R&D teams tend to neglect how the business layer uses the database. Some optimization schemes can serve as standard criteria for development. Here we only list a few common optimization options.

The optimization of the execution plan often relates to the operation mechanism and underlying design of the database, so it is difficult to explain clearly in a single blog article. We will just list a few optimization schemes from which we have benefited greatly. When optimizing the execution plan, we recommend paying close attention to the performance reports and suggestions in the iDB Cloud console and analyzing them. Also consult Alibaba Cloud DBAs more often by submitting tickets. If you are interested and eligible, your DBA can also make an appointment for site study with Alibaba Cloud. Additionally, the optimization of the execution plan requires a lot of debugging work. By creating a temporary instance of the production database in the Alibaba Cloud console, you can accurately simulate the data structure, distribution, and pressure of the current system.

Choosing reasonable field types can often greatly reduce the size of row data in the database and substantially improve index matching efficiency, thus greatly enhancing database performance. Using smaller data types, such as date instead of datetime for dates, tinyint instead of smallint or int for types or labels, and fixed-length fields instead of variable-length fields (such as char instead of varchar for values of uniform length), can all reduce the row size to some degree and improve the hit rate in the database buffer pool. The type of the primary key, a special member of the table fields, has a huge impact on the stability and efficiency of the table's indexes. We generally recommend a unique value that is either auto-incremented by the database or maintained independently.
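A quick calculation shows how such choices add up. The byte sizes below follow the MySQL data type storage requirements (DATETIME takes 5 bytes without fractional seconds in MySQL 5.6.4 and later); the three-column table and the row count are hypothetical, and the estimate ignores per-row overhead and the extra copies of these columns held in indexes.

```python
# Storage size in bytes per value for a few MySQL column types.
BYTES = {"TINYINT": 1, "SMALLINT": 2, "INT": 4, "DATE": 3, "DATETIME": 5}


def row_bytes(columns: dict) -> int:
    """Sum the fixed storage of the listed columns (column name -> type)."""
    return sum(BYTES[col_type] for col_type in columns.values())


before = {"ship_date": "DATETIME", "status": "INT", "label": "SMALLINT"}
after = {"ship_date": "DATE", "status": "TINYINT", "label": "TINYINT"}

saved_per_row = row_bytes(before) - row_bytes(after)  # 11 - 5 bytes
row_count = 100_000_000                               # hypothetical table size
saved_total_bytes = saved_per_row * row_count
```

Six bytes per row looks small, but over 100 million rows it is 600 million bytes of table data alone, and smaller rows mean more rows fit in each buffer pool page.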

Highly selective fields (fields whose values are mostly distinct) usually correspond to exact or partially exact query conditions. This scenario is relatively easy to optimize: simply index the highly selective fields on their own. The details still need to be explored in actual use. As business scenarios become more complex, the principle of evaluating exact conditions first becomes more effective as an optimization approach. Although indexing highly selective fields independently boosts query efficiency significantly, too many indexes may hurt write efficiency on the table. For effective indexing, refer to the suggestions on large table indexing in iDB Cloud.
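Selectivity can be estimated as the ratio of distinct values to total rows. The sketch below measures it on made-up data; the `selectivity` helper and the sample table are assumptions for illustration, and SQLite stands in for the production database.

```python
import sqlite3


def selectivity(conn, table: str, column: str) -> float:
    """Distinct values divided by total rows; values near 1.0 are highly selective.

    Table and column names are interpolated into the SQL, so pass trusted names only.
    """
    distinct, total = conn.execute(
        f'SELECT COUNT(DISTINCT "{column}"), COUNT(*) FROM "{table}"'
    ).fetchone()
    return distinct / total if total else 0.0


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, order_no TEXT, status INTEGER)")
conn.executemany(
    "INSERT INTO orders (order_no, status) VALUES (?, ?)",
    [(f"NO{i}", i % 4) for i in range(1000)],
)

order_no_selectivity = selectivity(conn, "orders", "order_no")  # every value distinct
status_selectivity = selectivity(conn, "orders", "status")      # only 4 distinct values
```

A column like `order_no` is a good candidate for its own index, while an index on `status` alone would still leave a quarter of the table to scan for each value.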

Plainly put, a covering index means that the execution plan can complete the query and obtain the result set from the index alone, without going back to the table (to the buffer pool or disk) to look up the row data. Due to restrictions of the MySQL index mechanism, a query generally uses only one index per table, or more than one through index_merge. This means that in complex business scenarios, it may not help much to index every field on its own. Therefore, for some specific query scenarios, we should establish appropriate composite indexes. Covering indexes avoid a large number of random I/O requests and are therefore a recommended optimization scheme (if the Extra column of Explain shows Using index, the query is served by a covering index).
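The difference between a covered and a non-covered query can be observed in a query plan. The sketch below uses SQLite only because it runs anywhere; SQLite reports `USING COVERING INDEX` in `EXPLAIN QUERY PLAN`, whereas MySQL reports `Using index` in the Extra column of `EXPLAIN`. The table, index name, and `plan` helper are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, "
    "status INTEGER, amount REAL, note TEXT)"
)
# Composite index chosen to match the query below, not one index per column.
conn.execute("CREATE INDEX idx_user_status ON orders (user_id, status)")


def plan(sql: str, params=()) -> str:
    """Return the plan detail text (fourth column of each EXPLAIN QUERY PLAN row)."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))


# Every selected column is in the index, so the table itself is never read.
covered_plan = plan("SELECT user_id, status FROM orders WHERE user_id = ?", (1,))

# `amount` is not in the index, so each match needs an extra lookup in the table.
uncovered_plan = plan("SELECT amount FROM orders WHERE user_id = ?", (1,))
```

Printing the two plans side by side makes it easy to check whether a change to the select list or to the index definition keeps a hot query covered.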

However, real business scenarios are always more complicated than the index itself. There is always a lot of field information that the system needs to search for or obtain, yet composite indexes cannot cover all the fields (otherwise, the index would be even larger than the data itself). To still benefit from a covering index, you can use a deferred join on the primary key. Specifically, first run a query that uses only the field conditions contained in the composite index and returns a small result set containing only the primary keys (the small-result-set-first principle). Then join the data table on the obtained primary keys to fetch the remaining fields.
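A deferred join can be written as a subquery that selects only primary keys, joined back to the table. In the sketch below, the table, index, sample rows, and `fetch_page` helper are hypothetical, and SQLite stands in for MySQL. The inner query touches only indexed columns and the primary key; in InnoDB, secondary indexes store the primary key, so that step can be served from the index alone.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, "
    "status INTEGER, amount REAL, note TEXT)"
)
conn.execute("CREATE INDEX idx_user_status ON orders (user_id, status)")
# Orders 1-20 belong to user 1, orders 21-30 to user 2.
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?, ?)",
    [(i, 1 if i <= 20 else 2, 1, i * 1.5, f"note {i}") for i in range(1, 31)],
)

DEFERRED_JOIN = """
SELECT o.id, o.amount, o.note
FROM orders AS o
JOIN (
    -- Step 1: find only the primary keys, using indexed columns alone.
    SELECT id FROM orders
    WHERE user_id = ? AND status = ?
    ORDER BY id LIMIT ? OFFSET ?
) AS page_keys ON o.id = page_keys.id  -- Step 2: fetch full rows by primary key.
ORDER BY o.id
"""


def fetch_page(conn, user_id: int, status: int, limit: int, offset: int):
    return conn.execute(DEFERRED_JOIN, (user_id, status, limit, offset)).fetchall()


page = fetch_page(conn, user_id=1, status=1, limit=5, offset=10)
```

Only the five rows on the requested page are read from the table; the ten skipped rows are discarded while scanning the index, which is where deferred joins save the most I/O on deep pagination.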

Business-focused R&D teams generally find it hard to spend additional effort on the database, and they have no professional DBAs to constantly tune the database configuration and optimize the performance of the database servers. As such, the team does not have many options at an early stage. It is hard for them to explore technical solutions in depth, so the only option is to trade money for time. The simplest solution is to upgrade the server configuration to cope with insufficient performance.

However, upgrading the configuration of a self-deployed database not only requires adjusting the database configuration parameters but is also subject to the physical limitations of the machines. It is therefore necessary to devise more complex backup and synchronization policies for the database. For a business team, this may not be achievable in the short term, and configuration upgrades can become a complex problem. We have instead used the elastic upgrading policy of RDS, and we feel that it is the best solution to this problem.

In our extensive experience of optimizing databases and the product as a whole, we noticed that a perfect solution is hard to come by. Even if the solution you select solves only 80% of the problems, it will greatly enhance the overall efficiency of the team. The evolution and optimization of products and architecture is a gradual process; we have to proceed one step at a time. Based on the Pareto principle, addressing the top 20% of root causes first can solve roughly 80% of the problems.

As a technical developer in a start-up company, I have summarized the advantages of using cloud-computing products through my experience with Alibaba Cloud products as follows:

In addition to our experience in using cloud products, we have also discussed various database-tuning practices. Regarding database architecture design and performance optimization, our team adheres to the principle of divide and conquer, solving the primary problems first and then working into the details, round after round. Consequently, the system architecture also evolves in the process. I believe that over time, several other solutions will become popular. Especially with the continuous development of cloud services, the effort and cost invested will fall significantly. These factors will let business research and development teams focus more, create more innovative Internet products, and reshape the way that businesses are run.

