By Zhaofeng Zhou (Muluo)

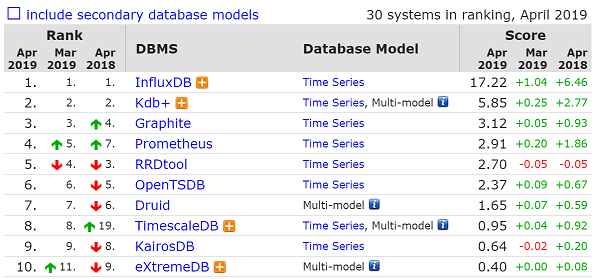

The figure shows the time series database rankings on DB-Engines as of April 2019. I chose open-source, distributed time series databases for a detailed analysis. Among the top ten, RRD is a long-established stand-alone storage engine. Graphite is built on Whisper, which can be regarded as an optimized and more powerful RRD-style database. Kdb+ and eXtremeDB are not open-source, so they are not included in the analysis. The open-source versions of InfluxDB and Prometheus are both built on stand-alone storage engines developed based on LevelDB. The commercial version of InfluxDB supports a distributed storage engine, and distributed storage is also on the Prometheus roadmap.

In the end, I chose OpenTSDB, KairosDB, and InfluxDB for a detailed analysis. I am familiar with OpenTSDB and have studied its source code, so I describe it in extra detail. I know the other time series databases less well, so corrections to any mistakes in the analysis are welcome.

OpenTSDB is a distributed, scalable time series database. It supports write rates of up to millions of entries per second, stores data with millisecond-level precision, and retains data permanently without sacrificing precision. Its write performance and storage capacity both come from the underlying HBase: the LSM-tree storage engine and distributed architecture of HBase provide the write throughput, while the storage capacity comes from the horizontally scalable HDFS that HBase itself runs on. OpenTSDB depends heavily on HBase, and many subtle optimizations have been made to fit the underlying storage structure of HBase. The latest version also adds support for BigTable and Cassandra.

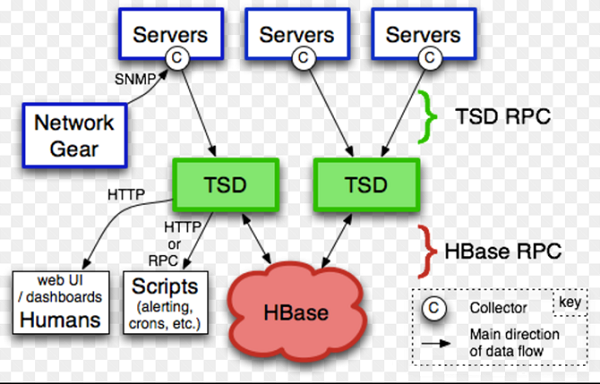

The figure shows the architecture of OpenTSDB. The core components are TSD and HBase. The TSDs are a group of stateless nodes that can be scaled out arbitrarily and have no dependencies other than HBase. TSD exposes HTTP and Telnet interfaces for writing and querying data. Because TSD is stateless, it is very simple to deploy and operate, whereas operating HBase is not. This is one of the reasons support was extended to BigTable and Cassandra.

OpenTSDB models data by metric. A data point consists of a metric name, a timestamp, a set of tags (tagkey and tagvalue pairs), and a value.

The following is a brief summary of the key optimization ideas:

UIDTable

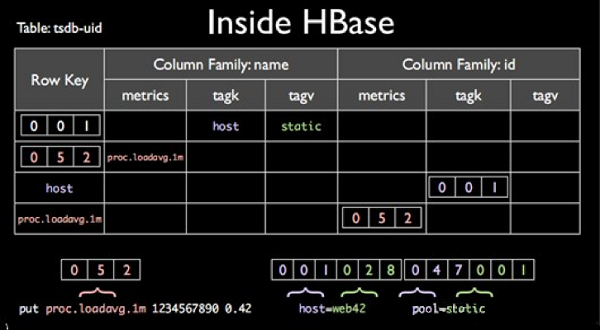

The following is the structure design of several key tables of OpenTSDB on HBase. The first key table is a tsdb-uid table with the following structure:

The metric, tagkey, and tagvalue are each assigned a unique ID (UID) of the same fixed length, which defaults to three bytes. The tsdb-uid table uses two column families to store the forward and reverse mappings between each metric, tagkey, and tagvalue and its UID, which makes six maps in total.

The example in the figure can be interpreted as follows:

Assigning a UID to each metric, tagkey, and tagvalue has two advantages. First, it greatly reduces storage space and the amount of data transferred: each value is expressed in only 3 bytes, which is a considerable compression ratio. Second, because the UIDs have a fixed length, the required values can easily be parsed out of the rowkey, and the memory footprint on the Java heap is greatly reduced (byte arrays take far less memory than strings), which eases GC pressure.

However, fixed-length UID encoding puts an upper limit on the number of UIDs. Three bytes allow at most 16,777,216 (2^24) different values, which is enough for most scenarios. The length can be adjusted, but it cannot be changed dynamically.
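The UID scheme described above can be sketched in a few lines of Python. This is only an in-memory illustration, not OpenTSDB's implementation: the class name `UidTable`, its methods, and the choice to number UIDs from zero are all assumptions made for the example. Each instance holds the forward and reverse map for one kind of name, so three instances give the six maps mentioned earlier.

```python
from typing import Dict

UID_WIDTH = 3                     # bytes per UID (the default described in the text)
MAX_UIDS = 2 ** (8 * UID_WIDTH)   # 16,777,216 distinct values for 3 bytes


class UidTable:
    """Hypothetical in-memory stand-in for one forward map and one reverse map."""

    def __init__(self, width: int = UID_WIDTH) -> None:
        self.width = width
        self.name_to_uid: Dict[str, bytes] = {}
        self.uid_to_name: Dict[bytes, str] = {}

    def get_or_create(self, name: str) -> bytes:
        """Return the UID for a name, assigning the next free one if needed."""
        uid = self.name_to_uid.get(name)
        if uid is not None:
            return uid
        next_id = len(self.name_to_uid)   # numbering from 0 is a choice of this sketch
        if next_id >= 2 ** (8 * self.width):
            raise OverflowError("UID space exhausted for this width")
        uid = next_id.to_bytes(self.width, "big")
        self.name_to_uid[name] = uid
        self.uid_to_name[uid] = name
        return uid

    def name_of(self, uid: bytes) -> str:
        """Reverse lookup from UID back to the original string."""
        return self.uid_to_name[uid]


# One table per kind of name: 3 forward maps + 3 reverse maps = 6 maps.
tables = {kind: UidTable() for kind in ("metric", "tagk", "tagv")}
metric_uid = tables["metric"].get_or_create("sys.cpu.user")
```

The width is fixed when a table is created, which mirrors the point that the UID length can be configured but not changed once data has been written with it.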

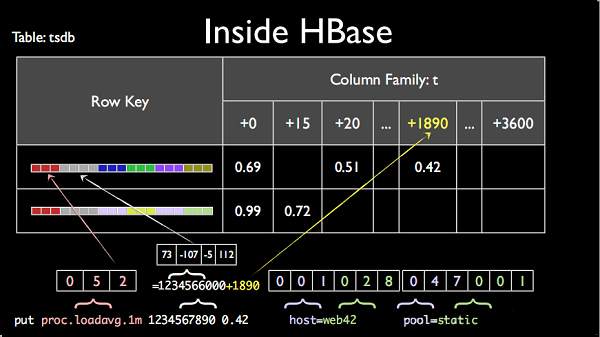

The second key table is a data table with the following structure:

In this table, data in the same hour is stored in the same row, and each column in the row represents a data point. If a row is of second-level precision, the row can have a maximum of 3600 points; if it is of millisecond-level precision, the row can have a maximum of 3600000 points.

The subtlety of this table design lies in the design of the rowkey and qualifier (column name), and the compaction policy for the entire row of data. The format of the rowkey:

<metric><timestamp><tagk1><tagv1><tagk2><tagv2>...<tagkn><tagvn>

The metric, tagkey, and tagvalue are all represented by UIDs. Because a UID has a fixed byte length, the corresponding values can easily be extracted from a rowkey by byte offset. The qualifier holds the offset of the data point's timestamp within the hour. For example, for data with second-level precision, the data point at the 30th second has an offset of 30, so its column name is 30. Using the time offset as the column name saves a great deal of storage space: second-level data needs only 2 bytes and millisecond-level data needs 4 bytes, whereas storing a complete timestamp would need 6 bytes. After an entire row of data has been written, OpenTSDB also applies a compaction policy that merges all the columns in the row into one column, mainly to reduce the number of key values.
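As a rough illustration of this layout, the following Python sketch builds a rowkey, computes the in-hour offset used for the column name, and parses the rowkey back by byte offset. The function names are hypothetical, and two details are assumptions of the sketch rather than statements from the text: the hour-aligned base time is stored as a 4-byte big-endian number of seconds, and tag pairs are sorted before being appended. The sketch keeps only the plain offset and ignores any further encoding detail of the real qualifier.

```python
import struct
from typing import List, Tuple

UID_WIDTH = 3     # fixed UID length in bytes
TS_WIDTH = 4      # assumption: base time stored as 4-byte seconds
ROW_SPAN = 3600   # one row holds one hour of data

TagPair = Tuple[bytes, bytes]


def build_rowkey(metric_uid: bytes, timestamp: int, tags: List[TagPair]) -> bytes:
    """Concatenate metric UID, hour-aligned base time, and tagk/tagv UIDs."""
    base_time = timestamp - (timestamp % ROW_SPAN)
    key = metric_uid + struct.pack(">I", base_time)
    for tagk_uid, tagv_uid in sorted(tags):
        key += tagk_uid + tagv_uid
    return key


def qualifier_offset(timestamp: int) -> int:
    """Offset of the data point within its hour, used as the column name."""
    return timestamp % ROW_SPAN


def parse_rowkey(key: bytes) -> Tuple[bytes, int, List[TagPair]]:
    """Recover the parts of a rowkey purely by fixed byte offsets."""
    metric_uid = key[:UID_WIDTH]
    (base_time,) = struct.unpack(">I", key[UID_WIDTH:UID_WIDTH + TS_WIDTH])
    tags = []
    pos = UID_WIDTH + TS_WIDTH
    while pos < len(key):
        tagk_uid = key[pos:pos + UID_WIDTH]
        tagv_uid = key[pos + UID_WIDTH:pos + 2 * UID_WIDTH]
        tags.append((tagk_uid, tagv_uid))
        pos += 2 * UID_WIDTH
    return metric_uid, base_time, tags


example_tags = [(b"\x00\x00\x02", b"\x00\x00\x09"), (b"\x00\x00\x01", b"\x00\x00\x05")]
example_key = build_rowkey(b"\x00\x00\x01", 1556150430, example_tags)
```

Because every field has a known width, `parse_rowkey` needs no delimiters or string parsing, which is the property the text attributes to fixed-length UIDs.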

HBase provides only simple query operations: single-row queries and range queries. A single-row query requires a complete rowkey. A range query requires a rowkey range, and all data within that range is obtained by scanning. In general, a single-row query is very fast, while the speed of a range query depends on the size of the range scanned. Scanning tens of thousands of rows is usually fine, but scanning hundreds of millions of rows makes read latency much higher.

OpenTSDB provides rich query functions. It supports filtering on any tagkey, as well as groupby and downsampling. Tagkey filtering is part of the query itself, while groupby and downsampling are computations applied to the query results. The main query parameters are the metric name, the tagkey filter criteria, and the time range. As pointed out in the previous section, the format of the rowkey in the data table is <metric><timestamp><tagk1><tagv1><tagk2><tagv2>...<tagkn><tagvn>. It follows that once the metric name and time range are known, at least one rowkey scan range can be determined. However, this range covers every tag combination that shares the metric name and time range. If there are many tag combinations, the scan range is uncontrollable and may be very large, and query efficiency becomes basically unacceptable.
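The way a metric name and a time range pin down a scan range can be sketched as follows. The function name `scan_range` is hypothetical, and the sketch assumes a 3-byte metric UID followed by a 4-byte hour-aligned base time at the front of the rowkey. The stop key is exclusive, as is usual for range scans.

```python
import struct
from typing import Tuple

ROW_SPAN = 3600   # one row per hour


def scan_range(metric_uid: bytes, start_ts: int, end_ts: int) -> Tuple[bytes, bytes]:
    """Return (start_key, stop_key) covering every row of the metric in the time range."""
    start_base = start_ts - (start_ts % ROW_SPAN)
    # Move one hour past the last row so the row containing end_ts is included.
    stop_base = end_ts - (end_ts % ROW_SPAN) + ROW_SPAN
    start_key = metric_uid + struct.pack(">I", start_base)
    stop_key = metric_uid + struct.pack(">I", stop_base)
    return start_key, stop_key


start_key, stop_key = scan_range(b"\x00\x00\x01", 1556150430, 1556157000)
```

Since the tags come after the timestamp in the rowkey, every tag combination of the metric sorts inside this range, which is why the amount of data scanned grows with the number of tag combinations.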

The following are specific optimization measures of OpenTSDB for queries:

HBase provides rich and extensible filters. A filter works by scanning data on the server side, filtering it there, and returning only the results to the client. Server-side filtering cannot reduce the amount of data scanned, but it can greatly reduce the amount of data transmitted. OpenTSDB converts tagkey filters that meet certain criteria into server-side filters of the underlying HBase. However, the effect of this optimization is limited, because the most critical factor affecting a query is the efficiency of the underlying range scan rather than the transmission efficiency.

To improve query efficiency, the amount of data scanned within the range still has to be reduced fundamentally. Note that this does not mean narrowing the scope of the query, but reducing the amount of data scanned within that scope. A critical HBase filter, FuzzyRowFilter, is used here. FuzzyRowFilter can dynamically skip a certain amount of data during a range scan according to specified criteria. However, this optimization cannot be applied to every query that OpenTSDB supports; certain criteria must be met, which are not explained in detail here. Interested readers can study how FuzzyRowFilter works.
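The matching idea behind a fuzzy row filter can be illustrated with a small Python sketch. This is not the HBase API and does not reproduce how the real filter skips ahead during a scan; it only shows the rule of comparing fixed byte positions and ignoring the rest. The function name `fuzzy_match` is hypothetical, and the mask convention (0 for a position that must match, 1 for a position that may hold any byte) is the convention adopted for this sketch. The rowkey layout assumed is a 3-byte metric UID, a 4-byte base time, and one 3-byte tagkey and tagvalue pair.

```python
def fuzzy_match(rowkey: bytes, pattern: bytes, mask: bytes) -> bool:
    """Return True if rowkey equals pattern at every position where mask is 0."""
    if len(pattern) != len(mask):
        raise ValueError("pattern and mask must have the same length")
    if len(rowkey) < len(pattern):
        return False
    return all(
        m == 1 or rowkey[i] == pattern[i]
        for i, m in enumerate(mask)
    )


# Fix the metric UID and the tag pair, leave the 4 timestamp bytes open.
pattern = b"\x00\x00\x01" + b"\x00\x00\x00\x00" + b"\x00\x00\x01" + b"\x00\x00\x02"
mask = bytes([0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0])

rows = [
    b"\x00\x00\x01" + b"\x5c\xc0\xf9\x00" + b"\x00\x00\x01" + b"\x00\x00\x02",
    b"\x00\x00\x01" + b"\x5c\xc0\xf9\x00" + b"\x00\x00\x01" + b"\x00\x00\x03",
    b"\x00\x00\x01" + b"\x5c\xc1\x07\x10" + b"\x00\x00\x01" + b"\x00\x00\x02",
]
matching = [row for row in rows if fuzzy_match(row, pattern, mask)]
```

Because UIDs have a fixed length, the positions of the tagkey and tagvalue bytes are known in advance, which is what makes a positional mask like this possible.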

A more extreme optimization than the previous one is to replace the range scan with single-row queries. The idea is easy to understand: if the row keys of all the data to be queried are known, there is no need to scan a range, and each row can be fetched directly. As before, this optimization cannot be applied to every query that OpenTSDB supports; certain criteria must be met. A single-row query requires an exact row key, and a row key in the data table consists of the metric name, the timestamp, and the tags. The metric name and timestamp can always be determined from the query. If the tags can also be determined, a complete row key can be pieced together. Therefore, to apply this optimization, the query must provide the tagvalue for every tagkey.
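When every tagvalue is known, the exact row keys can be enumerated, one per hour in the time range. The following sketch shows that enumeration under the same assumptions as the rowkey layout above (3-byte UIDs, a 4-byte hour-aligned base time, sorted tag pairs); `rowkeys_for_query` is a hypothetical helper name, not an OpenTSDB function.

```python
import struct
from typing import Dict, List

ROW_SPAN = 3600   # one row per hour


def rowkeys_for_query(
    metric_uid: bytes,
    tags: Dict[bytes, bytes],
    start_ts: int,
    end_ts: int,
) -> List[bytes]:
    """List the exact rowkey of every hourly row touched by [start_ts, end_ts]."""
    tag_bytes = b"".join(k + v for k, v in sorted(tags.items()))
    first_base = start_ts - (start_ts % ROW_SPAN)
    last_base = end_ts - (end_ts % ROW_SPAN)
    return [
        metric_uid + struct.pack(">I", base) + tag_bytes
        for base in range(first_base, last_base + 1, ROW_SPAN)
    ]


keys = rowkeys_for_query(
    b"\x00\x00\x01",
    {b"\x00\x00\x01": b"\x00\x00\x02"},
    1556150430,
    1556157000,
)
```

Each key in the list can then be fetched with a single-row query, so the cost depends on the number of hours in the range rather than on the number of tag combinations under the metric.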

The above are some of the optimizations OpenTSDB applies to HBase queries. Beyond the query itself, groupby and downsampling must also be applied to the queried data. Their computational overhead is considerable and depends on the size of the query result. To optimize groupby and downsampling, almost all time series databases take the same approach, namely pre-aggregation and auto-rollup. The idea is to compute in advance rather than after the query. However, OpenTSDB does not support pre-aggregation or rollup as of the latest version. Version 2.4, which is under development, provides a slightly hacky solution: it only offers a new interface for writing the results of pre-aggregation and rollup. The pre-aggregation and rollup computation itself still has to be implemented by users in an outer layer.
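To make the "compute in advance" idea concrete, here is a minimal sketch of a rollup that a user might run in the outer layer before writing the results back. It is an assumption-laden illustration, not an OpenTSDB API: the function name `rollup` and the choice to keep a sum and a count per bucket are made up for the example.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def rollup(
    points: Iterable[Tuple[int, float]],
    interval: int,
) -> List[Tuple[int, float, int]]:
    """Group (timestamp, value) points into fixed intervals.

    Returns one (bucket_start, sum, count) tuple per interval, ordered by time.
    """
    buckets = defaultdict(lambda: [0.0, 0])
    for timestamp, value in points:
        bucket_start = timestamp - (timestamp % interval)
        buckets[bucket_start][0] += value
        buckets[bucket_start][1] += 1
    return [(start, total, count) for start, (total, count) in sorted(buckets.items())]


raw_points = [(0, 1.0), (10, 3.0), (60, 5.0)]
rolled = rollup(raw_points, 60)
```

Keeping both the sum and the count lets a later query derive the average for any coarser interval without touching the raw points, which is the saving that pre-computed rollups are meant to provide.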

The strength of OpenTSDB is its ability to write and store data, which it owes largely to the underlying HBase. Its weakness is data query and analysis. Although many query optimizations have been made, they do not apply to all query scenarios. Arguably, among the time series databases compared here, OpenTSDB is the weakest at optimizing tagvalue filter queries. For groupby and downsampling queries, it offers no pre-aggregation or auto-rollup support. However, the OpenTSDB API is the richest in functionality, which makes it a benchmark.

